VITA-Group / Enlightengan

EnlightenGAN

IEEE Transactions on Image Processing, 2020, EnlightenGAN: Deep Light Enhancement without Paired Supervision

Representative Results

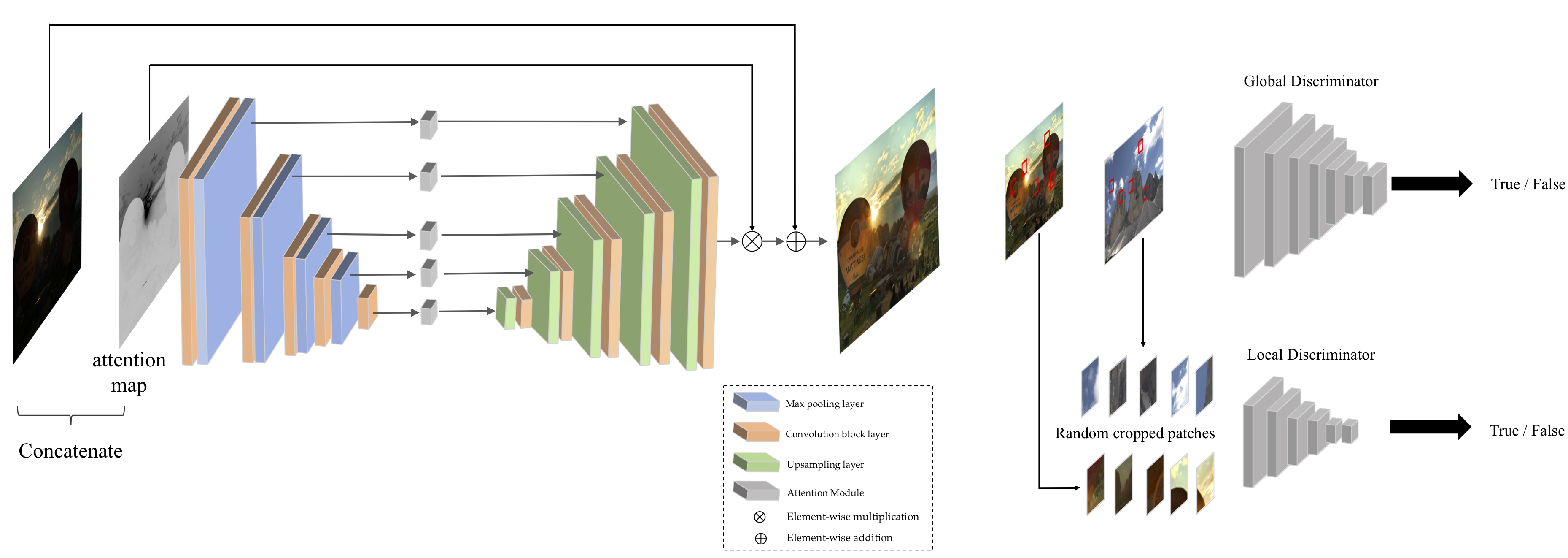

Overall Architecture

Environment Preparation

python3.5

You need at least three 1080 Ti GPUs; if you have fewer, reduce the batch size.

pip install -r requirement.txt

mkdir model

Download the VGG pretrained model from [Google Drive 1], and then put it in the model directory.
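The GPU requirement above can be checked before training with a small script. This is only a sketch: it assumes the NVIDIA driver's `nvidia-smi` tool is on the PATH and parses the output of `nvidia-smi -L`, which prints one line per GPU. The helper names `parse_gpu_list` and `count_gpus` are hypothetical and not part of this repository.

```python
import shutil
import subprocess


def parse_gpu_list(listing):
    """Return the per-GPU lines from `nvidia-smi -L` style output."""
    return [line.strip() for line in listing.splitlines()
            if line.strip().startswith("GPU ")]


def count_gpus():
    """Count visible NVIDIA GPUs; returns 0 if nvidia-smi is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return 0
    result = subprocess.run(
        ["nvidia-smi", "-L"],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        universal_newlines=True,
    )
    if result.returncode != 0:
        return 0
    return len(parse_gpu_list(result.stdout))


if __name__ == "__main__":
    found = count_gpus()
    if found < 3:
        # The README asks for three GPUs or a smaller batch size.
        print("Found {} GPU(s); consider reducing the batch size.".format(found))
    else:
        print("Found {} GPUs.".format(found))
```
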

Training process

Before training, launch visdom.server for visualization.

nohup python -m visdom.server -port=8097
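Because the server is started in the background, it can be useful to confirm that it is actually listening before training begins. A minimal sketch using only the standard library, assuming the server runs on the local machine on port 8097 as in the command above (the function name `is_port_open` is hypothetical):

```python
import socket


def is_port_open(host="localhost", port=8097, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    if is_port_open():
        print("visdom server is reachable on port 8097.")
    else:
        print("Nothing is listening on port 8097; start visdom.server first.")
```

This only checks that something accepts TCP connections on the port; it does not verify that the listener is visdom.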

Then run the following command:

python scripts/script.py --train

Testing process

Download the pretrained model and put it in ./checkpoints/enlightening

Create the directories ../test_dataset/testA and ../test_dataset/testB. Put your test images in ../test_dataset/testA, and keep at least one image (any image will do) in ../test_dataset/testB so that the program can start.
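The directory setup above can be scripted. The sketch below creates both folders and, if testB contains no image, copies the first image from testA into it as the placeholder. The helper name `prepare_test_dirs` and the list of image extensions are assumptions made for illustration, not something the repository defines.

```python
import shutil
from pathlib import Path

# Assumption: file extensions treated as images for this helper.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".bmp"}


def list_images(folder):
    """Return the image files in folder, sorted by name."""
    return sorted(p for p in folder.iterdir()
                  if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES)


def prepare_test_dirs(root):
    """Create root/testA and root/testB; make sure testB holds one image."""
    root = Path(root)
    test_a = root / "testA"
    test_b = root / "testB"
    test_a.mkdir(parents=True, exist_ok=True)
    test_b.mkdir(parents=True, exist_ok=True)

    if not list_images(test_b):
        images = list_images(test_a)
        if images:
            # Any image works as the placeholder; reuse the first test image.
            shutil.copy(str(images[0]), str(test_b / images[0].name))
    return test_a, test_b


# Example usage, run from the repository root:
# prepare_test_dirs("../test_dataset")
```

If testA is still empty when the helper runs, testB stays empty too, so run it again after adding your test images.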

Run

python scripts/script.py --predict

Dataset preparation

Training data [Google Drive] (unpaired images collected from multiple datasets)

Testing data [Google Drive] (including LIME, MEF, NPE, VV, DICM)

A [BaiduYun] mirror is also available, thanks to @YHLelaine!

If you find this work useful, please cite:

@article{jiang2021enlightengan,
  title={Enlightengan: Deep light enhancement without paired supervision},
  author={Jiang, Yifan and Gong, Xinyu and Liu, Ding and Cheng, Yu and Fang, Chen and Shen, Xiaohui and Yang, Jianchao and Zhou, Pan and Wang, Zhangyang},
  journal={IEEE Transactions on Image Processing},
  volume={30},
  pages={2340--2349},
  year={2021},
  publisher={IEEE}
}