johansatge / Psi Report

License: MIT

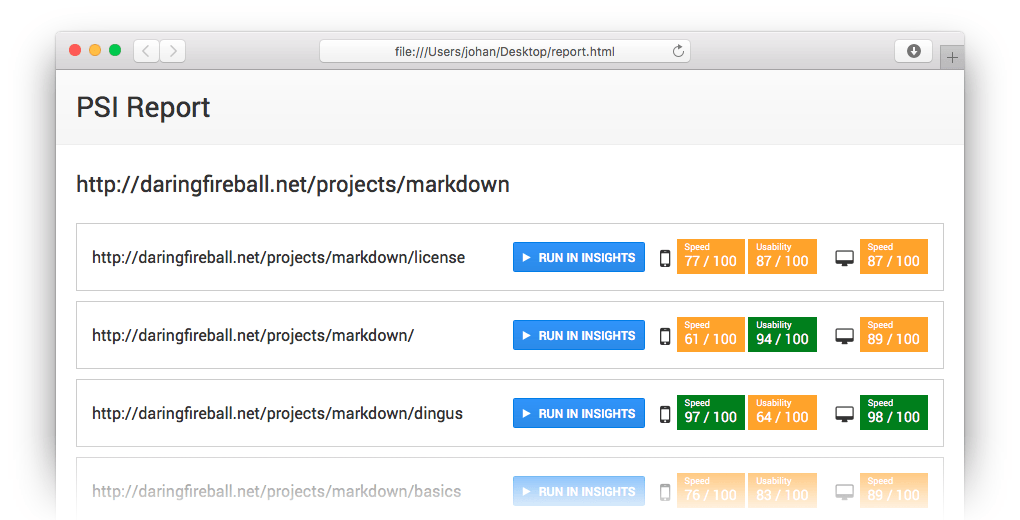

Crawls a website, gets PageSpeed Insights data for each page, and exports an HTML report.

Programming Languages

JavaScript

184084 projects - #8 most used programming language

Labels

Projects that are alternatives of or similar to Psi Report

Sitemap Generator Cli

Creates an XML-Sitemap by crawling a given site.

Stars: ✭ 214 (+3466.67%)

Mutual labels: cli, crawler

Lumberjack

An automated website accessibility scanner and cli

Stars: ✭ 109 (+1716.67%)

Mutual labels: cli, crawler

Boopsuite

A Suite of Tools written in Python for wireless auditing and security testing.

Stars: ✭ 807 (+13350%)

Mutual labels: cli

Lulu

[Unmaintained] A simple and clean video/music/image downloader 👾

Stars: ✭ 789 (+13050%)

Mutual labels: crawler

Bash My Aws

Bash-my-AWS provides simple but powerful CLI commands for managing AWS resources

Stars: ✭ 782 (+12933.33%)

Mutual labels: cli

Git Repo

Git-Repo: CLI utility to manage git services from your workspace

Stars: ✭ 818 (+13533.33%)

Mutual labels: cli

Ntl

Node Task List: Interactive cli to list and run package.json scripts

Stars: ✭ 800 (+13233.33%)

Mutual labels: cli

Prompts

❯ Lightweight, beautiful and user-friendly interactive prompts

Stars: ✭ 6,970 (+116066.67%)

Mutual labels: cli

Instagram Profilecrawl

📝 quickly crawl the information (e.g. followers, tags etc...) of an instagram profile.

Stars: ✭ 816 (+13500%)

Mutual labels: crawler

psi-report

Crawls a website (or reads a list of URLs from a sitemap.xml or a file), gets PageSpeed Insights data for each page, and exports an HTML report.

Installation

Install with npm:

$ npm install psi-report --global

# --global isn't required if you plan to use the node module

CLI usage

$ psi-report [options] <url> <dest_path>

Options:

    -V, --version               output the version number
    --urls-from-sitemap [name]  Get the list of URLs from sitemap.xml (don't crawl)
    --urls-from-file [name]     Get the list of URLs from a file, one url per line (don't crawl)
    -h, --help                  output usage information

Example:

$ psi-report daringfireball.net/projects/markdown /Users/johan/Desktop/report.html

Programmatic usage

// Basic usage
var PSIReport = require('psi-report');
var psi_report = new PSIReport({baseurl: 'http://domain.org'}, onComplete);
psi_report.start();

function onComplete(baseurl, data, html)
{
    console.log('Report for: ' + baseurl);
    console.log(data); // An array of pages with their PSI results
    console.log(html); // The HTML report (as a string)
}

// The "fetch_url" and "fetch_psi" events let you monitor the crawling process
psi_report.on('fetch_url', onFetchURL);

function onFetchURL(error, url)
{
    console.log((error ? 'Error with URL: ' : 'Fetched URL: ') + url);
}

psi_report.on('fetch_psi', onFetchPSI);

function onFetchPSI(error, url, strategy)
{
    console.log((error ? 'Error with PSI for ' : 'PSI data (' + strategy + ') fetched for ') + url);
}
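The completion callback receives the report as a plain string, so writing it to disk is left to the caller. The following is a minimal sketch, assuming Node's built-in `fs` and `path` modules. `saveReport` and `runReport` are hypothetical helper names that are not part of psi-report; only the constructor, `start()`, and the callback arguments come from the usage shown above.

```javascript
var fs = require('fs');
var path = require('path');

// Hypothetical helper: writes the HTML report string to dest_path,
// creating the parent directory first if it does not exist.
function saveReport(html, dest_path)
{
    fs.mkdirSync(path.dirname(dest_path), {recursive: true});
    fs.writeFileSync(dest_path, html, 'utf8');
    return dest_path;
}

// Hypothetical wrapper around the module API shown in this README.
// psi-report is required lazily so that saveReport() can be used on its own.
function runReport(baseurl, dest_path)
{
    var PSIReport = require('psi-report');
    var psi_report = new PSIReport({baseurl: baseurl}, function(crawled_baseurl, data, html)
    {
        console.log('Saved ' + data.length + ' page(s) to ' + saveReport(html, dest_path));
    });
    psi_report.start();
}
```

Calling `runReport('http://domain.org', './reports/domain.html')` would then crawl the site and store the report, provided psi-report is installed locally.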

Crawler behavior

The base URL is used as a root when crawling the pages.

For instance, using the URL https://daringfireball.net/ will crawl the entire website.

However, https://daringfireball.net/projects/markdown/ will crawl only:

- https://daringfireball.net/projects/markdown/
- https://daringfireball.net/projects/markdown/basics
- https://daringfireball.net/projects/markdown/syntax
- https://daringfireball.net/projects/markdown/license
- And so on

This is useful for crawling only one part of a website: everything starting with /en, for instance.
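The rule described here amounts to a prefix check on each discovered link. The sketch below illustrates that idea with Node's global `URL` class. `isUnderBase` is a hypothetical name, and whether psi-report compares URLs exactly this way is an assumption, not something this README states.

```javascript
// Hypothetical helper: returns true when candidate_url lives under base_url,
// i.e. it has the same origin and its path starts with the base path.
function isUnderBase(base_url, candidate_url)
{
    var base = new URL(base_url);
    var candidate = new URL(candidate_url, base); // also resolves relative links
    return candidate.origin === base.origin && candidate.pathname.indexOf(base.pathname) === 0;
}

var links = [
    'https://daringfireball.net/projects/markdown/basics',
    'https://daringfireball.net/projects/markdown/syntax',
    'https://daringfireball.net/linked/',
    'https://example.com/projects/markdown/'
];

// Keep only the links that sit under the chosen base URL
var kept = links.filter(function(link)
{
    return isUnderBase('https://daringfireball.net/projects/markdown/', link);
});

console.log(kept);
```

Only the two links under /projects/markdown/ pass the filter; the other page on the same site and the link on a different domain are dropped.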

URLs from a sitemap.xml or a file

Instead of crawling the website, you can set the URL list with a sitemap.xml or a file.

- --urls-from-sitemap https://example.com/sitemap.xml
- --urls-from-file /path/to/urls.txt

Only the URLs listed in the sitemap or the file will be processed.
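To make the two input formats concrete, here is a rough sketch of how such lists could be turned into arrays of URLs. `parseUrlList` and `parseSitemap` are hypothetical helpers, not functions exported by psi-report. The prepending rule (add the base URL to any line that is not already an absolute http(s) URL) is one reading of the 2.2.0 changelog entry and may differ from the actual behavior.

```javascript
// Hypothetical helper: turns the content of a URL file (one URL per line)
// into an array of absolute URLs, prepending baseurl to lines that are not
// already absolute http(s) URLs. Blank lines are ignored.
function parseUrlList(text, baseurl)
{
    var root = baseurl.replace(/\/+$/, '');
    return text.split(/\r?\n/).map(function(line)
    {
        return line.trim();
    }).filter(function(line)
    {
        return line.length > 0;
    }).map(function(line)
    {
        if (/^https?:\/\//i.test(line))
        {
            return line;
        }
        return root + '/' + line.replace(/^\/+/, '');
    });
}

// Hypothetical helper: extracts the <loc> values from a sitemap.xml string.
// A regular expression keeps the sketch short; it does not decode XML
// entities, so a real XML parser would be more robust.
function parseSitemap(xml)
{
    var urls = [];
    var pattern = /<loc>\s*([^<\s]+)\s*<\/loc>/g;
    var match;
    while ((match = pattern.exec(xml)) !== null)
    {
        urls.push(match[1]);
    }
    return urls;
}
```

Either array could then be checked by hand before passing the same sitemap or file to the CLI options above.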

Changelog

This project uses semver.

| Version | Date | Notes |
|---|---|---|
| 2.2.1 | 2018-01-19 | Fix missing source files on NPM (@blaryjp) |
| 2.2.0 | 2017-11-27 | Prepend baseurl if not present, for each urls in file (@blaryjp) |
| 2.1.0 | 2017-11-19 | Add --urls-from-sitemap and --urls-from-file (@blaryjp) |
| 2.0.0 | 2016-04-02 | Deep module rewrite (New module API, updated CLI usage) |
| 1.0.1 | 2016-01-15 | Fix call on obsolete package |
| 1.0.0 | 2015-12-01 | Initial version |

License

This project is released under the MIT License.

Credits
