alokwhitewolf / Pytorch Attention Guided Cyclegan

Pytorch implementation of Unsupervised Attention-guided Image-to-Image Translation.

Stars: ✭ 67

Programming Languages

python

Projects that are alternatives of or similar to Pytorch Attention Guided Cyclegan

Sockeye

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Stars: ✭ 990 (+1377.61%)

Mutual labels: deep-neural-networks, attention-mechanism, attention-model

Generative inpainting

DeepFill v1/v2 with Contextual Attention and Gated Convolution, CVPR 2018, and ICCV 2019 Oral

Stars: ✭ 2,659 (+3868.66%)

Mutual labels: deep-neural-networks, attention-model

Sparse Structured Attention

Sparse and structured neural attention mechanisms

Stars: ✭ 198 (+195.52%)

Mutual labels: deep-neural-networks, attention-mechanism

Deepattention

Deep Visual Attention Prediction (TIP18)

Stars: ✭ 65 (-2.99%)

Mutual labels: attention-mechanism, attention-model

Fake news detection deep learning

Fake News Detection using Deep Learning models in Tensorflow

Stars: ✭ 74 (+10.45%)

Mutual labels: deep-neural-networks, attention-mechanism

Adnet

Attention-guided CNN for image denoising (Neural Networks, 2020)

Stars: ✭ 135 (+101.49%)

Mutual labels: deep-neural-networks, attention-mechanism

Compact-Global-Descriptor

Pytorch implementation of "Compact Global Descriptor for Neural Networks" (CGD).

Stars: ✭ 22 (-67.16%)

Mutual labels: attention-mechanism, attention-model

Image Caption Generator

A neural network to generate captions for an image using CNN and RNN with BEAM Search.

Stars: ✭ 126 (+88.06%)

Mutual labels: attention-mechanism, attention-model

Attention is all you need

Transformer of "Attention Is All You Need" (Vaswani et al. 2017) by Chainer.

Stars: ✭ 303 (+352.24%)

Mutual labels: deep-neural-networks, attention-mechanism

Action Recognition Visual Attention

Action recognition using soft attention based deep recurrent neural networks

Stars: ✭ 350 (+422.39%)

Mutual labels: deep-neural-networks, attention-mechanism

Structured Self Attention

A Structured Self-attentive Sentence Embedding

Stars: ✭ 459 (+585.07%)

Mutual labels: attention-mechanism, attention-model

Sarcasm Detection

Detecting Sarcasm on Twitter using both traditional machine learning and deep learning techniques.

Stars: ✭ 73 (+8.96%)

Mutual labels: deep-neural-networks, attention-mechanism

Attentionalpoolingaction

Code/Model release for NIPS 2017 paper "Attentional Pooling for Action Recognition"

Stars: ✭ 248 (+270.15%)

Mutual labels: attention-mechanism, attention-model

Hnatt

Train and visualize Hierarchical Attention Networks

Stars: ✭ 192 (+186.57%)

Mutual labels: deep-neural-networks, attention-mechanism

Keras Attention Mechanism

Attention mechanism Implementation for Keras.

Stars: ✭ 2,504 (+3637.31%)

Mutual labels: attention-mechanism, attention-model

Generative Inpainting Pytorch

A PyTorch reimplementation for paper Generative Image Inpainting with Contextual Attention (https://arxiv.org/abs/1801.07892)

Stars: ✭ 242 (+261.19%)

Mutual labels: deep-neural-networks, attention-model

Linear Attention Recurrent Neural Network

A recurrent attention module consisting of an LSTM cell which can query its own past cell states by the means of windowed multi-head attention. The formulas are derived from the BN-LSTM and the Transformer Network. The LARNN cell with attention can be easily used inside a loop on the cell state, just like any other RNN. (LARNN)

Stars: ✭ 119 (+77.61%)

Mutual labels: attention-mechanism, attention-model

attention-mechanism-keras

attention mechanism in keras, like Dense and RNN...

Stars: ✭ 19 (-71.64%)

Mutual labels: attention-mechanism, attention-model

Nmt Keras

Neural Machine Translation with Keras

Stars: ✭ 501 (+647.76%)

Mutual labels: attention-mechanism, attention-model

Keras Attention

Visualizing RNNs using the attention mechanism

Stars: ✭ 697 (+940.3%)

Mutual labels: deep-neural-networks, attention-mechanism

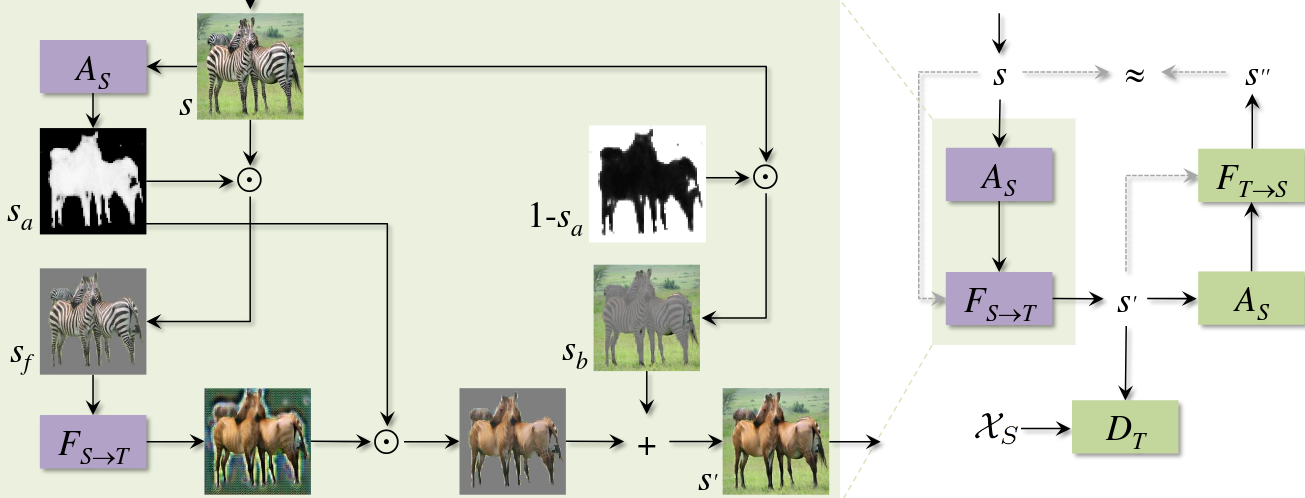

Pytorch-Attention-Guided-CycleGAN

An attempt at a PyTorch implementation of Unsupervised Attention-guided Image-to-Image Translation.

This architecture uses an attention module to identify the foreground or salient parts of the images onto which image translation is to be done.
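The role of the attention module can be sketched as a per-pixel blend: attended (foreground) pixels are taken from the translated image, and everything else is copied from the input. The snippet below is a minimal illustration of that compositing step as described in the paper, written with NumPy for brevity. The function name, array shapes, and toy values are assumptions for illustration and are not taken from this repository's code.

```python
import numpy as np


def attention_composite(source, translated, attention):
    """Blend a translated image with its source using a soft attention mask.

    source, translated: float arrays of shape (H, W, C)
    attention: float array of shape (H, W, 1) with values in [0, 1]
    (Hypothetical helper, not part of this repository.)
    """
    # Attended (foreground) pixels come from the translated image;
    # the remaining (background) pixels are copied from the source.
    return attention * translated + (1.0 - attention) * source


# Toy 2x2 RGB example with made-up values.
source = np.zeros((2, 2, 3))
translated = np.ones((2, 2, 3))
attention = np.array([[[1.0], [0.0]], [[0.5], [0.25]]])  # shape (2, 2, 1)

output = attention_composite(source, translated, attention)
```

Because the expression only uses elementwise arithmetic with broadcasting, the same function should also work on PyTorch tensors of matching shapes.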

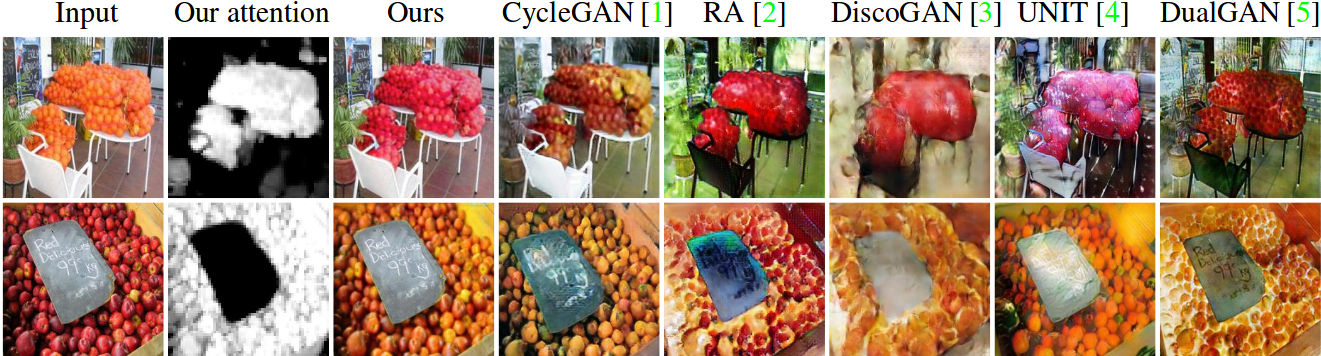

Some of the results shown in the paper -

How to run

Download dataset

bash datasets/download_datasets.sh <cyclegan dataset argument>

Train

python train.py

optional arguments

--resume <checkpoint path to resume from>

--dataroot <root of the dataset images>

--LRgen <lr of generator>

--LRdis <lr of discriminator>

--LRattn <lr of attention module>
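For readers who want to see how flags like these are typically wired up, here is a minimal argparse sketch. Only the flag names come from the list above. The types, the default values, and the example dataset path are assumptions for illustration, and the actual train.py may handle them differently.

```python
import argparse


def build_parser():
    # Hypothetical parser: flag names follow the list above, but the
    # types and defaults are assumptions, not values from train.py.
    parser = argparse.ArgumentParser(
        description="Training options (illustrative sketch)")
    parser.add_argument("--resume", type=str, default=None,
                        help="checkpoint path to resume from")
    parser.add_argument("--dataroot", type=str, default=None,
                        help="root of the dataset images")
    parser.add_argument("--LRgen", type=float, default=2e-4,
                        help="learning rate of the generator (assumed default)")
    parser.add_argument("--LRdis", type=float, default=2e-4,
                        help="learning rate of the discriminator (assumed default)")
    parser.add_argument("--LRattn", type=float, default=2e-4,
                        help="learning rate of the attention module (assumed default)")
    return parser


# Parse an explicit argument list; the dataset path is only an example.
args = build_parser().parse_args(
    ["--dataroot", "datasets/horse2zebra", "--LRgen", "0.0002"])
```

Keeping a separate learning rate per component makes it possible to tune the generator, discriminator, and attention module independently from the command line.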
