StudioGAN is a PyTorch library providing implementations of representative Generative Adversarial Networks (GANs) for conditional and unconditional image generation. StudioGAN aims to offer a common playground for modern GANs so that machine learning researchers can readily compare and analyze new ideas.

News

- Our new paper "Rebooting ACGAN: Auxiliary Classifier GANs with Stable Training (ReACGAN)" has been made public on NeurIPS 2021 OpenReview.

Release Notes (v.0.3.0)

- Add SOTA GANs: LGAN, TACGAN, StyleGAN2, MDGAN, MHGAN, ADCGAN, ReACGAN.

- Add five types of differentiable augmentation: CR, DiffAugment, ADA, SimCLR, BYOL.

- Implement useful regularizations: Top-K training, Feature Matching, R1-Regularization, and MaxGP.

- Add Improved Precision & Recall, Density & Coverage, iFID, and CAS for reliable evaluation.

- Support Inception_V3 and SwAV backbones for GAN evaluation.

- Verify the reproducibility of StyleGAN2 and BigGAN.

- Fix bugs in FreezeD, DDP training, Mixed Precision training, and ADA.

- Support Discriminator Driven Latent Sampling and Semantic Factorization for BigGAN evaluation.

- Support Wandb logging instead of Tensorboard.

Features

- Extensive GAN implementations using PyTorch.

- The only repository to train/evaluate BigGAN and StyleGAN2 baselines in a unified training pipeline.

- Comprehensive benchmark of GANs using CIFAR10, Tiny ImageNet, CUB200, and ImageNet datasets.

- Provide pre-trained models that are fully compatible with up-to-date PyTorch environments.

- Easy to handle other personal datasets (e.g., AFHQ, anime, and much more!).

- Better performance and lower memory consumption than original implementations.

- Support seven evaluation metrics including iFID, improved precision & recall, density & coverage, and CAS.

- Support Multi-GPU (DP, DDP, and Multinode DistributedDataParallel), Mixed Precision, Synchronized Batch Normalization, Wandb Visualization, and other analysis methods.

Implemented GANs

| Method | Venue | Architecture | GC | DC | Loss | EMA |
|---|---|---|---|---|---|---|
| DCGAN | arXiv'15 | CNN/ResNet[1] | N/A | N/A | Vanilla | False |
| LSGAN | ICCV'17 | CNN/ResNet[1] | N/A | N/A | Least Square | False |
| GGAN | arXiv'17 | CNN/ResNet[1] | N/A | N/A | Hinge | False |
| WGAN-WC | ICML'17 | ResNet | N/A | N/A | Wasserstein | False |
| WGAN-GP | NIPS'17 | ResNet | N/A | N/A | Wasserstein | False |
| WGAN-DRA | arXiv'17 | ResNet | N/A | N/A | Wasserstein | False |
| ACGAN-Mod[2] | - | ResNet | cBN | AC | Hinge | False |
| ProjGAN | ICLR'18 | ResNet | cBN | PD | Hinge | False |
| SNGAN | ICLR'18 | ResNet | cBN | PD | Hinge | False |
| SAGAN | ICML'19 | ResNet | cBN | PD | Hinge | False |
| TACGAN | NeurIPS'19 | Big ResNet | cBN | TAC | Hinge | True |
| LGAN | ICML'19 | ResNet | N/A | N/A | Vanilla | False |
| BigGAN | ICLR'19 | Big ResNet | cBN | PD | Hinge | True |
| BigGAN-Deep | ICLR'19 | Big ResNet Deep | cBN | PD | Hinge | True |
| BigGAN-Mod[3] | - | Big ResNet | cBN | PD | Hinge | True |
| LOGAN | arXiv'19 | Big ResNet | cBN | PD | Hinge | True |
| StyleGAN2 | CVPR'20 | StyleGAN2 | cAdaIN | SPD | Logistic | True |
| CRGAN | ICLR'20 | Big ResNet | cBN | PD | Hinge | True |
| BigGAN + DiffAugment | NeurIPS'20 | Big ResNet | cBN | PD | Hinge | True |
| StyleGAN2 + ADA | NeurIPS'20 | StyleGAN2 | cAdaIN | SPD | Logistic | True |
| ContraGAN | NeurIPS'20 | Big ResNet | cBN | 2C | Hinge | True |
| MHGAN | WACV'21 | Big ResNet | cBN | MH | MH | True |
| ICRGAN | AAAI'21 | Big ResNet | cBN | PD | Hinge | True |
| ADCGAN | arXiv'21 | Big ResNet | cBN | ADC | Hinge | True |
| ReACGAN | NeurIPS'21 | Big ResNet | cBN | D2D-CE | Hinge | True |

GC/DC indicates how label information is injected into the Generator/Discriminator.

EMA: Exponential Moving Average update to the generator. cBN: conditional Batch Normalization. cAdaIN: conditional version of Adaptive Instance Normalization. AC: Auxiliary Classifier. PD: Projection Discriminator. TAC: Twin Auxiliary Classifier. SPD: Modified PD for StyleGAN. 2C: Conditional Contrastive loss. MH: Multi-Hinge loss. ADC: Auxiliary Discriminative Classifier. D2D-CE: Data-to-Data Cross-Entropy.
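To make the EMA column concrete, here is a minimal sketch of the update, assuming the generator weights are held in a plain dict of floats (a stand-in for a model's parameter tensors). The function name `ema_update` and the default decay of 0.999 are hypothetical choices for illustration, not StudioGAN's own code.

```python
def ema_update(ema_params, params, decay=0.999):
    """Blend the current generator weights into the EMA copy, in place.

    For every parameter: ema = decay * ema + (1 - decay) * current.
    """
    for name, value in params.items():
        ema_params[name] = decay * ema_params[name] + (1.0 - decay) * value
    return ema_params


# Hypothetical one-weight "generator": the live weight jumps to 1.0,
# while the EMA copy moves only a fraction (1 - decay) of the way per step.
ema = {"w": 0.0}
for step in range(3):
    ema_update(ema, {"w": 1.0}, decay=0.9)
print(ema["w"])  # approx 1 - 0.9**3 = 0.271
```

In a real PyTorch model the same blend would be applied to every parameter tensor without tracking gradients; how buffers such as batch-norm statistics are treated is an implementation detail this sketch does not cover.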

Differentiable Augmentations

| Method | Venue | Target Loss |
|---|---|---|
| CR | ICLR'2020 | - |
| SimCLR | ICML'2020 | - |
| DiffAugment | NeurIPS'2020 | - |
| BYOL | NeurIPS'2020 | - |
| ADA | NeurIPS'2020 | Logistic |

Training Techniques and Misc

| Method | Venue | Target Architecture |
|---|---|---|
| FreezeD | CVPRW'20 | Except for StyleGAN2 |
| Top-K Training | NeurIPS'2020 | - |
| SeFa | CVPR'2021 | BigGAN |

Evaluation Metrics

| Method | Venue | Architecture |
|---|---|---|
| Inception Score (IS) | NeurIPS'16 | Inception_V3 |
| Frechet Inception Distance (FID) | NeurIPS'17 | Inception_V3 |
| Intra-class FID | - | Inception_V3 |
| Improved Precision & Recall | NeurIPS'19 | Inception_V3 |
| Classifier Accuracy Score (CAS) | NeurIPS'19 | Inception_V3 |
| Density & Coverage | ICML'20 | Inception_V3 |
| SwAV FID | ICLR'21 | SwAV |

Requirements

First, install a PyTorch build that matches your environment (at least 1.7; 1.10 recommended):

pip3 install torch==1.10.0+cu111 torchvision==0.11.1+cu111 torchaudio==0.10.0+cu111 -f https://download.pytorch.org/whl/cu111/torch_stable.html

Then, use the following command to install the rest of the libraries:

pip3 install tqdm ninja h5py kornia matplotlib pandas scikit-learn scipy seaborn wandb PyYaml click requests pyspng imageio-ffmpeg prdc

With Docker, you can use:

docker pull mgkang/studio_gan:latest

The following command creates a container named "StudioGAN":

docker run -it --gpus all --shm-size 128g --name StudioGAN -v /home/USER:/root/code --workdir /root/code mgkang/studio_gan:latest /bin/bash

Quick Start

Before starting, log in to Wandb with your personal API key:

wandb login PERSONAL_API_KEY

From release 0.3.0, you can define which evaluation metrics to use through the -metrics option. If the option is not specified, only FID is calculated.

For example, -metrics is fid calculates only IS and FID, and -metrics none skips evaluation.

- Train (-t) and evaluate IS, FID, Prc, Rec, Dns, and Cvg (-metrics is fid prdc) of the model defined in CONFIG_PATH using GPU 0.

CUDA_VISIBLE_DEVICES=0 python3 src/main.py -t -metrics is fid prdc -cfg CONFIG_PATH -data DATA_PATH -save SAVE_PATH

- Train (-t) and evaluate FID of the model defined in CONFIG_PATH through DataParallel using GPUs (0, 1, 2, 3). FID is evaluated by default, so the -metrics argument is not needed.

CUDA_VISIBLE_DEVICES=0,1,2,3 python3 src/main.py -t -cfg CONFIG_PATH -data DATA_PATH -save SAVE_PATH

- Train (-t) and skip evaluation (-metrics none) of the model defined in CONFIG_PATH through DistributedDataParallel using GPUs (0, 1, 2, 3), synchronized batch norm, and mixed precision.

export MASTER_ADDR="localhost"
export MASTER_PORT=2222
CUDA_VISIBLE_DEVICES=0,1,2,3 python3 src/main.py -t -metrics none -cfg CONFIG_PATH -data DATA_PATH -save SAVE_PATH -DDP -sync_bn -mpc

Try python3 src/main.py to see available options.

Dataset

- CIFAR10/CIFAR100: StudioGAN automatically downloads the dataset when you execute main.py.

- Tiny ImageNet, ImageNet, or a custom dataset:

  - Download Tiny ImageNet and ImageNet, or prepare your own dataset.

  - Arrange the dataset folders as follows:

data
└── ImageNet or Tiny_ImageNet or CUSTOM
    ├── train
    │   ├── cls0
    │   │   ├── train0.png
    │   │   ├── train1.png
    │   │   └── ...
    │   ├── cls1
    │   └── ...
    └── valid
        ├── cls0
        │   ├── valid0.png
        │   ├── valid1.png
        │   └── ...
        ├── cls1
        └── ...
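In this layout, each subfolder of train/ or valid/ is one class. The sketch below shows how such a tree maps to (image path, class index) pairs. It assumes that classes are indexed in sorted folder-name order, which is the convention of torchvision's ImageFolder. The helper name `list_labeled_images` and the accepted file suffixes are hypothetical and are not part of StudioGAN.

```python
from pathlib import Path

# Hypothetical set of accepted image extensions.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def list_labeled_images(split_dir):
    """Return (class_to_idx, samples) for a folder such as data/CUSTOM/train.

    Each immediate subdirectory is treated as one class, and classes are
    indexed in sorted name order. Non-image files are skipped.
    """
    split_dir = Path(split_dir)
    classes = sorted(p.name for p in split_dir.iterdir() if p.is_dir())
    class_to_idx = {name: idx for idx, name in enumerate(classes)}
    samples = []
    for name in classes:
        for img in sorted((split_dir / name).iterdir()):
            if img.is_file() and img.suffix.lower() in IMAGE_SUFFIXES:
                samples.append((str(img), class_to_idx[name]))
    return class_to_idx, samples
```

Because ordering is lexicographic, a folder named cls10 sorts before cls2. Zero-padding the folder names (cls002, cls010) keeps the class indices in numeric order.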

Supported Training/Testing Techniques

- Load All Data in Main Memory (-hdf5 -l)

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -t -hdf5 -l -cfg CONFIG_PATH -data DATA_PATH -save SAVE_PATH

- DistributedDataParallel (-DDP)

### NODE_0, 4_GPUs, All ports are open to NODE_1
~/code>>> export MASTER_ADDR=PUBLIC_IP_OF_NODE_0
~/code>>> export MASTER_PORT=AVAILABLE_PORT_OF_NODE_0
~/code/PyTorch-StudioGAN>>> CUDA_VISIBLE_DEVICES=0,1,2,3 python3 src/main.py -t -DDP -tn 2 -cn 0 -cfg CONFIG_PATH -data DATA_PATH -save SAVE_PATH

### NODE_1, 4_GPUs, All ports are open to NODE_0
~/code>>> export MASTER_ADDR=PUBLIC_IP_OF_NODE_0
~/code>>> export MASTER_PORT=AVAILABLE_PORT_OF_NODE_0
~/code/PyTorch-StudioGAN>>> CUDA_VISIBLE_DEVICES=0,1,2,3 python3 src/main.py -t -DDP -tn 2 -cn 1 -cfg CONFIG_PATH -data DATA_PATH -save SAVE_PATH

- Mixed Precision Training (-mpc)

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -t -mpc -cfg CONFIG_PATH -data DATA_PATH -save SAVE_PATH

- Change Batch Normalization Statistics

# Synchronized batchNorm (-sync_bn)
CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -t -sync_bn -cfg CONFIG_PATH -data DATA_PATH -save SAVE_PATH

# Standing statistics (-std_stat, -std_max, -std_step)
CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -std_stat -std_max STD_MAX -std_step STD_STEP -cfg CONFIG_PATH -ckpt CKPT -data DATA_PATH -save SAVE_PATH

# Batch statistics (-batch_stat)
CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -batch_stat -cfg CONFIG_PATH -ckpt CKPT -data DATA_PATH -save SAVE_PATH

- Truncation Trick (--truncation_factor)

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py --truncation_factor TRUNCATION_FACTOR -cfg CONFIG_PATH -ckpt CKPT -data DATA_PATH -save SAVE_PATH

- Discriminator Driven Latent Sampling, DDLS (-lgv -lgv_rate -lgv_std -lgv_decay -lgv_decay_steps -lgv_steps)

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -lgv -lgv_rate LGV_RATE -lgv_std LGV_STD -lgv_decay LGV_DECAY -lgv_decay_steps LGV_DECAY_STEPS -lgv_steps LGV_STEPS -cfg CONFIG_PATH -ckpt CKPT -data DATA_PATH -save SAVE_PATH

- Freeze Discriminator (-freezeD)

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -t --freezeD FREEZED -ckpt SOURCE_CKPT -cfg TARGET_CONFIG_PATH -data DATA_PATH -save SAVE_PATH
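The --truncation_factor option listed above controls the truncation trick. The trick trades sample diversity for fidelity by drawing latents closer to the mode of the prior. The sketch below shows one common variant, BigGAN-style truncated sampling, which resamples any latent entry whose magnitude exceeds a threshold. Two caveats apply. Treating TRUNCATION_FACTOR as that threshold is an assumption. StyleGAN2 instead truncates in its intermediate w space. The function name is hypothetical.

```python
import random


def truncated_normal(dim, threshold, rng=random):
    """Sample a dim-dimensional latent from N(0, I), resampling any entry
    with |z_i| > threshold (per-coordinate rejection sampling)."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    z = []
    for _ in range(dim):
        value = rng.gauss(0.0, 1.0)
        while abs(value) > threshold:  # reject and redraw out-of-range entries
            value = rng.gauss(0.0, 1.0)
        z.append(value)
    return z


# Smaller thresholds give less diverse but typically higher-fidelity samples.
rng = random.Random(0)
z = truncated_normal(128, threshold=0.5, rng=rng)
```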

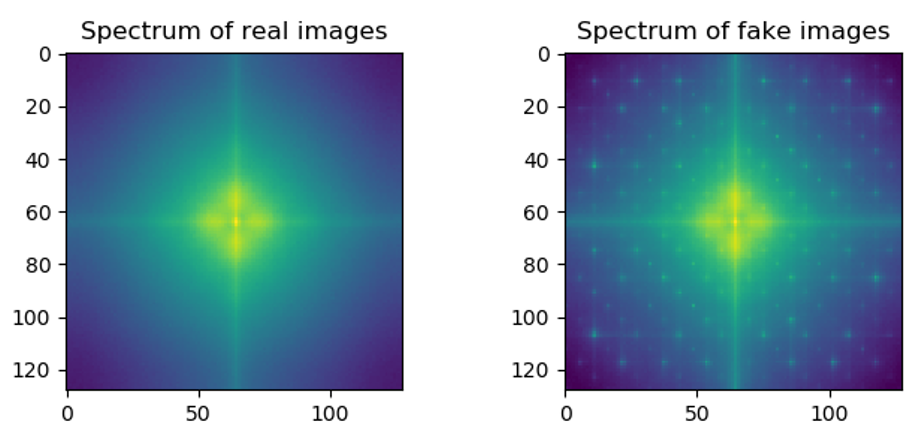
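The DDLS item listed above (the -lgv options) samples latents with Langevin dynamics on an energy that combines the latent prior with the discriminator. Following Che et al., that energy is E(z) = -log p(z) - d(G(z)), where d is the discriminator logit. The sketch below shows the update rule on a toy energy with an analytic gradient, since no real generator or discriminator is available here. Its parameter names loosely mirror -lgv_rate, -lgv_std, -lgv_decay, -lgv_decay_steps, and -lgv_steps, but that mapping is an assumption. The function and toy critic are hypothetical.

```python
import math
import random


def langevin_latent_sampling(z, grad_energy, step_size, noise_std, decay,
                             decay_steps, n_steps, rng=random):
    """Run Langevin dynamics on a latent vector z (list of floats):

        z <- z - (step / 2) * dE/dz + noise_std * sqrt(step) * N(0, I)

    The step size is multiplied by `decay` every `decay_steps` iterations.
    """
    if decay_steps <= 0:
        raise ValueError("decay_steps must be positive")
    z = list(z)
    step = step_size
    for t in range(1, n_steps + 1):
        grad = grad_energy(z)
        noise_scale = noise_std * math.sqrt(step)
        z = [zi - 0.5 * step * gi + noise_scale * rng.gauss(0.0, 1.0)
             for zi, gi in zip(z, grad)]
        if t % decay_steps == 0:
            step *= decay
    return z


# Toy energy standing in for E(z) = -log p(z) - d(G(z)):
# prior term ||z||^2 / 2 plus a hypothetical critic logit d(z) = -||z - c||^2 / 2,
# so dE/dz = 2z - c and the minimum lies halfway between the prior mode and c.
c = [2.0, -4.0]


def toy_grad_energy(z):
    return [2.0 * zi - ci for zi, ci in zip(z, c)]


# With noise_std = 0 the update reduces to gradient descent on E, converging to c / 2.
z_mode = langevin_latent_sampling([0.0, 0.0], toy_grad_energy, step_size=0.1,
                                  noise_std=0.0, decay=0.9, decay_steps=100,
                                  n_steps=500)
```

With a nonzero noise_std the chain explores around the low-energy region instead of collapsing onto it. This is what lets DDLS draw diverse samples biased toward latents the discriminator rates highly.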

Analyzing Generated Images

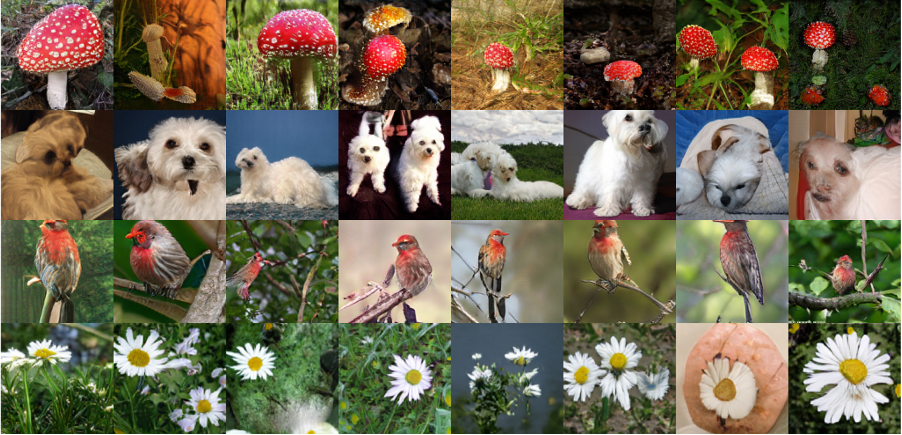

StudioGAN supports Image visualization, K-nearest neighbor analysis, Linear interpolation, Frequency analysis, TSNE analysis, and Semantic factorization. All results will be saved in SAVE_DIR/figures/RUN_NAME/*.png.

- Image Visualization

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -v -cfg CONFIG_PATH -ckpt CKPT -save SAVE_DIR

- K-Nearest Neighbor Analysis (K is fixed to 7; the images in the first column are generated images.)

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -knn -cfg CONFIG_PATH -ckpt CKPT -data DATA_PATH -save SAVE_PATH

- Linear Interpolation (applicable only to conditional Big ResNet models)

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -itp -cfg CONFIG_PATH -ckpt CKPT -save SAVE_DIR

- Frequency Analysis

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -fa -cfg CONFIG_PATH -ckpt CKPT -data DATA_PATH -save SAVE_PATH

- TSNE Analysis

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -tsne -cfg CONFIG_PATH -ckpt CKPT -data DATA_PATH -save SAVE_PATH

- Semantic Factorization for BigGAN

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -sefa -sefa_axis SEFA_AXIS -sefa_max SEFA_MAX -cfg CONFIG_PATH -ckpt CKPT -save SAVE_PATH

Metrics

StudioGAN supports Inception Score, Frechet Inception Distance, Improved Precision and Recall, Density and Coverage, Intra-Class FID, Classifier Accuracy Score, and SwAV backbone FID. Users can compute Intra-Class FID, Classifier Accuracy Score, and SwAV backbone FID using the -iFID, -GAN_train/-GAN_test, and --eval_backbone "SwAV" options, respectively.

1. Inception Score (IS)

Inception Score (IS) measures how well a GAN generates high-fidelity and diverse images. Calculating IS requires the pre-trained Inception-V3 network, and recent approaches use OpenAI's TensorFlow implementation.

To compute the official IS, first create a "samples.npz" file using the command below:

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -s -c CONFIG_PATH --checkpoint_folder CHECKPOINT_FOLDER --log_output_path LOG_OUTPUT_PATH

This automatically creates the samples.npz file at ./samples/RUN_NAME/fake/npz/samples.npz.

Then, run the official TensorFlow IS implementation. Note that we do not split the dataset into ten folds to calculate IS ten times. Instead, we compute IS once on the entire dataset, which is the evaluation strategy used in the CompareGAN repository.

CUDA_VISIBLE_DEVICES=0,...,N python3 src/inception_tf13.py --run_name RUN_NAME --type "fake"

Keep in mind that you need TensorFlow 1.3 or an earlier version installed!

Note that StudioGAN logs the PyTorch-based IS during training.
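IS is the exponential of the average KL divergence between each image's class distribution p(y|x) and the marginal p(y). The following NumPy sketch implements the single-split computation described above. It assumes the Inception-V3 softmax outputs have already been extracted. It does not reproduce the TensorFlow network or its preprocessing, and the function name is hypothetical.

```python
import numpy as np


def inception_score(probs, eps=1e-12):
    """IS = exp(E_x[KL(p(y|x) || p(y))]) computed over a single split.

    probs: (N, C) array of softmax outputs, one row per generated image.
    """
    probs = np.asarray(probs, dtype=np.float64)
    marginal = probs.mean(axis=0, keepdims=True)  # p(y), averaged over images
    kl = (probs * (np.log(probs + eps) - np.log(marginal + eps))).sum(axis=1)
    return float(np.exp(kl.mean()))


# Usage with random stand-in "softmax outputs" for 1,000 images and 10 classes.
rng = np.random.default_rng(0)
logits = rng.normal(size=(1000, 10))
probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
print(inception_score(probs))
```

The score ranges from 1 (every image gets the same prediction) up to the number of classes (confident predictions spread evenly over all classes). That is why it rewards both fidelity and diversity.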

2. Frechet Inception Distance (FID)

FID is a widely used metric for evaluating GAN models. Calculating FID requires the pre-trained Inception-V3 network, and modern approaches use TensorFlow-based FID. StudioGAN uses a PyTorch-based FID to test GAN models in the same PyTorch environment. We show that the PyTorch-based FID implementation produces almost the same results as the TensorFlow implementation (see Appendix F of our paper).
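FID fits a Gaussian to the Inception-V3 features of real and generated images and measures the Fréchet distance between the two Gaussians: ||mu_r - mu_f||^2 + Tr(S_r + S_f - 2 (S_r S_f)^{1/2}). The following NumPy sketch assumes the (N, D) pool features are already extracted. The reference pytorch-fid code takes the matrix square root with scipy.linalg.sqrtm. This sketch instead uses an eigendecomposition, so tiny numerical differences are possible. The function names are hypothetical.

```python
import numpy as np


def _psd_sqrt(mat):
    """Square root of a symmetric PSD matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(mat)
    vals = np.clip(vals, 0.0, None)  # drop tiny negative round-off
    return (vecs * np.sqrt(vals)) @ vecs.T


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """||mu1 - mu2||^2 + Tr(sigma1 + sigma2 - 2 (sigma1 sigma2)^{1/2}).

    Tr((sigma1 sigma2)^{1/2}) equals Tr((s1 sigma2 s1)^{1/2}) with
    s1 = sigma1^{1/2}, because both products share the same eigenvalues.
    """
    mu1, mu2 = np.asarray(mu1, float), np.asarray(mu2, float)
    sigma1, sigma2 = np.asarray(sigma1, float), np.asarray(sigma2, float)
    s1 = _psd_sqrt(sigma1)
    middle = s1 @ sigma2 @ s1
    middle = (middle + middle.T) / 2.0  # symmetrize against round-off
    eigs = np.clip(np.linalg.eigvalsh(middle), 0.0, None)
    tr_covmean = np.sqrt(eigs).sum()
    diff = mu1 - mu2
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)


def fid_from_features(feats_real, feats_fake):
    """feats_*: (N, D) arrays of Inception pool features."""
    mu_r, mu_f = feats_real.mean(axis=0), feats_fake.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_f = np.cov(feats_fake, rowvar=False)
    return frechet_distance(mu_r, cov_r, mu_f, cov_f)
```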

3. Improved Precision and Recall (Prc, Rec)

Improved precision and recall were developed to address the shortcomings of the original precision and recall metrics. Like IS and FID, calculating improved precision and recall requires the pre-trained Inception-V3 model. StudioGAN uses the PyTorch implementation provided by the developers of the density and coverage scores.
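Improved precision and recall approximate each feature manifold as a union of k-nearest-neighbor balls. Precision is the fraction of generated samples that fall inside at least one real ball. Recall is the fraction of real samples that fall inside at least one generated ball. The following NumPy sketch assumes the features are already extracted and uses a default of k=3. It is not the prdc package's code, and the function names are hypothetical.

```python
import numpy as np


def _pairwise_dist(a, b):
    """Euclidean distance matrix of shape (len(a), len(b))."""
    sq = (a * a).sum(1)[:, None] + (b * b).sum(1)[None, :] - 2.0 * a @ b.T
    return np.sqrt(np.clip(sq, 0.0, None))


def _knn_radii(x, k):
    """Distance from each row of x to its k-th nearest other row."""
    d = _pairwise_dist(x, x)
    # After sorting, column 0 is the point itself (distance ~0).
    return np.sort(d, axis=1)[:, k]


def improved_precision_recall(real, fake, k=3):
    real, fake = np.asarray(real, float), np.asarray(fake, float)
    real_radii = _knn_radii(real, k)
    fake_radii = _knn_radii(fake, k)
    d_rf = _pairwise_dist(real, fake)  # (N_real, N_fake)
    # Precision: fake samples lying inside at least one real k-NN ball.
    precision = (d_rf <= real_radii[:, None]).any(axis=0).mean()
    # Recall: real samples lying inside at least one fake k-NN ball.
    recall = (d_rf <= fake_radii[None, :]).any(axis=1).mean()
    return float(precision), float(recall)
```

The full distance matrices keep the sketch short but need O(N^2) memory. Reference implementations compute the distances in batches for 10K-50K samples.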

4. Density and Coverage (Dns, Cvg)

Density and coverage metrics estimate the fidelity and diversity of generated images using the pre-trained Inception-V3 model. The metrics are known to be robust to outliers, and they can detect identical real and fake distributions. StudioGAN uses the authors' official PyTorch implementation and follows the authors' suggestions for hyperparameter selection.
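Density and coverage build k-NN balls around real samples only. Density counts how many real balls contain each generated sample, averaged and divided by k. Coverage is the fraction of real balls that contain at least one generated sample. The following NumPy sketch assumes the features are already extracted and uses a default of k=5. It is a sketch, not the authors' official code, and the function name is hypothetical.

```python
import numpy as np


def density_coverage(real, fake, k=5):
    real, fake = np.asarray(real, float), np.asarray(fake, float)

    def dist(a, b):
        sq = (a * a).sum(1)[:, None] + (b * b).sum(1)[None, :] - 2.0 * a @ b.T
        return np.sqrt(np.clip(sq, 0.0, None))

    # Radius of each real ball: distance to its k-th nearest real neighbour
    # (column 0 of the sorted row is the point itself).
    real_radii = np.sort(dist(real, real), axis=1)[:, k]
    inside = dist(real, fake) <= real_radii[:, None]  # (N_real, N_fake)
    # Density: real balls containing each fake sample, averaged, divided by k.
    density = inside.sum() / (k * fake.shape[0])
    # Coverage: fraction of real balls containing at least one fake sample.
    coverage = inside.any(axis=1).mean()
    return float(density), float(coverage)
```

Unlike coverage, density is not bounded by 1, which is why some Dns values in the benchmark tables exceed 1.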

5. Precision and Recall (PR: F_1/8=Precision, F_8=Recall, Will be deprecated)

Precision measures how accurately the generator learns the target distribution, and recall measures how completely the generator covers it. Like IS and FID, calculating precision and recall requires the pre-trained Inception-V3 model. StudioGAN uses the same hyperparameter settings as the original Precision and Recall implementation and calculates the F-beta scores suggested by Sajjadi et al.
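The F-beta score combines a precision/recall pair. A beta above 1 emphasizes recall (F_8) and a beta below 1 emphasizes precision (F_1/8). The sketch below implements the per-point formula. It assumes the precision-recall curve of Sajjadi et al. is already available as (precision, recall) points and is summarized by the maximum score along it. That summarization is an assumption about the reference code rather than StudioGAN's own implementation, and the function names are hypothetical.

```python
def f_beta(precision, recall, beta):
    """F_beta = (1 + beta^2) * p * r / (beta^2 * p + r); 0 when p = r = 0."""
    if precision < 0 or recall < 0:
        raise ValueError("precision and recall must be non-negative")
    denom = beta ** 2 * precision + recall
    if denom == 0:
        return 0.0
    return (1 + beta ** 2) * precision * recall / denom


def max_f_beta_pair(curve, beta=8.0):
    """Summarize a curve of (precision, recall) points as
    (max F_{1/beta}, max F_beta), i.e. (F_1/8, F_8) for beta = 8."""
    f_precision = max(f_beta(p, r, 1.0 / beta) for p, r in curve)
    f_recall = max(f_beta(p, r, beta) for p, r in curve)
    return f_precision, f_recall


# A point with perfect precision but half recall: F_8 stays near the recall
# (about 0.504), while F_1/8 stays near the precision (about 0.985).
print(f_beta(1.0, 0.5, 8.0), f_beta(1.0, 0.5, 1.0 / 8.0))
```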

Benchmark

※ We always welcome your contributions if you find any incorrect implementation, bug, or misreported score.

We report the best IS, FID, and F_beta values of various GANs. B. S. means batch size for training.

To download all checkpoints reported in StudioGAN, Please click here.

CR, ICR, DiffAugment, ADA, and LO refer to regularization or optimization techniques: CR (Consistency Regularization), ICR (Improved Consistency Regularization), DiffAugment (Differentiable Augmentation), ADA (Adaptive Discriminator Augmentation), and LO (Latent Optimization), respectively.

CIFAR10 (3x32x32)

When training and evaluating, we used the command below.

With a single TITAN RTX GPU, training BigGAN takes about 13-15 hours.

CUDA_VISIBLE_DEVICES=0 python3 src/main.py -t -hdf5 -l -batch_stat -metrics is fid prdc -ref "test" -cfg CONFIG_PATH -data DATA_PATH -save SAVE_PATH

IS, FID, and F_beta values are computed using 10K test and 10K generated images.

| Method | Reference | IS(⭡) | FID(⭣) | F_1/8(⭡) | F_8(⭡) | Cfg | Log | Weights |
|---|---|---|---|---|---|---|---|---|
| DCGAN | StudioGAN | 6.638 | 49.030 | 0.833 | 0.795 | Cfg | Log | Link |
| LSGAN | StudioGAN | 5.577 | 66.686 | 0.757 | 0.720 | Cfg | Log | Link |
| GGAN | StudioGAN | 6.227 | 42.714 | 0.916 | 0.822 | Cfg | Log | Link |
| WGAN-WC | StudioGAN | 2.579 | 159.090 | 0.190 | 0.199 | Cfg | Log | Link |
| WGAN-GP | StudioGAN | 7.458 | 25.852 | 0.962 | 0.929 | Cfg | Log | Link |
| WGAN-DRA | StudioGAN | 6.432 | 41.586 | 0.922 | 0.863 | Cfg | Log | Link |
| ACGAN-Mod | StudioGAN | 6.629 | 45.571 | 0.857 | 0.847 | Cfg | Log | Link |
| ProjGAN | StudioGAN | 7.539 | 33.830 | 0.952 | 0.855 | Cfg | Log | Link |
| SNGAN | StudioGAN | 8.677 | 13.248 | 0.983 | 0.978 | Cfg | Log | Link |
| SAGAN | StudioGAN | 8.680 | 14.009 | 0.982 | 0.970 | Cfg | Log | Link |
| BigGAN | Paper | 9.224 | 14.73 | - | - | - | - | - |
| BigGAN + CR | Paper | - | 11.5 | - | - | - | - | - |
| BigGAN + ICR | Paper | - | 9.2 | - | - | - | - | - |
| BigGAN + DiffAugment | Repo | 9.24 | 8.7 | - | - | - | - | - |
| BigGAN-Mod | StudioGAN | 9.746 | 8.034 | 0.995 | 0.994 | Cfg | Log | Link |
| BigGAN-Mod + CR | StudioGAN | 10.380 | 7.178 | 0.994 | 0.993 | Cfg | Log | Link |
| BigGAN-Mod + ICR | StudioGAN | 10.153 | 7.430 | 0.994 | 0.993 | Cfg | Log | Link |
| BigGAN-Mod + DiffAugment | StudioGAN | 9.775 | 7.157 | 0.996 | 0.993 | Cfg | Log | Link |
| LOGAN | StudioGAN | TBA | TBA | TBA | TBA | Cfg | TBA | TBA |
| ContraGAN | StudioGAN | 9.729 | 8.065 | 0.993 | 0.992 | Cfg | Log | Link |
| ContraGAN + CR | StudioGAN | 9.812 | 7.685 | 0.995 | 0.993 | Cfg | Log | Link |
| ContraGAN + ICR | StudioGAN | 10.117 | 7.547 | 0.996 | 0.993 | Cfg | Log | Link |
| ContraGAN + DiffAugment | StudioGAN | 9.996 | 7.193 | 0.995 | 0.990 | Cfg | Log | Link |
| ReACGAN | StudioGAN | 9.974 | 7.792 | 0.995 | 0.990 | Cfg | Log | Link |
| ReACGAN + CR | StudioGAN | 9.833 | 7.176 | 0.996 | 0.993 | Cfg | Log | Link |
| ReACGAN + DiffAugment | StudioGAN | 10.181 | 6.717 | 0.996 | 0.994 | Cfg | Log | Link |

CIFAR10 (3x32x32) using StyleGAN2

When training and evaluating, we used the command below.

CUDA_VISIBLE_DEVICES=0,1 python3 src/main.py -t -hdf5 -l -mpc -metrics is fid prdc -ref "train" -cfg CONFIG_PATH -data DATA_PATH -save SAVE_PATH

IS, FID, Dns, and Cvg values are computed using 50K train and 50K generated images.

| Method | Reference | IS(⭡) | FID(⭣) | Dns(⭡) | Cvg(⭡) | Cfg | Log | Weights |
|---|---|---|---|---|---|---|---|---|
| StyleGAN2[5] | Paper | 9.534 | 6.96 | - | - | - | - | - |
| StyleGAN2 + ADA[5] | Paper | 10.144 | 2.42 | - | - | - | - | - |
| StyleGAN2 | StudioGAN | 10.149 | 3.889 | 0.979 | 0.893 | Cfg | Log | Link |
| StyleGAN2 + D2D-CE | StudioGAN | 10.320 | 3.385 | 0.974 | 0.899 | Cfg | Log | Link |
| StyleGAN2 + ADA | StudioGAN | 10.477 | 2.316 | 1.049 | 0.929 | Cfg | Log | Link |
| StyleGAN2 + ADA + D2D-CE | StudioGAN | 10.548 | 2.325 | 1.052 | 0.929 | Cfg | Log | Link |

Tiny ImageNet (3x64x64)

When training and evaluating, we used the command below.

With 4 TITAN RTX GPUs, training BigGAN takes about 2 days.

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -t -hdf5 -l -batch_stat -metrics is fid prdc -ref "valid" -cfg CONFIG_PATH -data DATA_PATH -save SAVE_PATH

IS, FID, and F_beta values are computed using 10K validation and 10K generated images.

| Method | Reference | IS(⭡) | FID(⭣) | F_1/8(⭡) | F_8(⭡) | Cfg | Log | Weights |
|---|---|---|---|---|---|---|---|---|
| DCGAN | StudioGAN | 5.640 | 91.625 | 0.606 | 0.391 | Cfg | Log | Link |
| LSGAN | StudioGAN | 5.381 | 90.008 | 0.638 | 0.390 | Cfg | Log | Link |
| GGAN | StudioGAN | 5.146 | 102.094 | 0.503 | 0.307 | Cfg | Log | Link |
| WGAN-WC | StudioGAN | 9.696 | 41.454 | 0.940 | 0.735 | Cfg | Log | Link |
| WGAN-GP | StudioGAN | 1.322 | 311.805 | 0.016 | 0.000 | Cfg | Log | Link |
| WGAN-DRA | StudioGAN | 9.564 | 40.655 | 0.938 | 0.724 | Cfg | Log | Link |
| ACGAN-Mod | StudioGAN | 6.342 | 78.513 | 0.668 | 0.518 | Cfg | Log | Link |
| ProjGAN | StudioGAN | 6.224 | 89.175 | 0.626 | 0.428 | Cfg | Log | Link |
| SNGAN | StudioGAN | 8.412 | 53.590 | 0.900 | 0.703 | Cfg | Log | Link |
| SAGAN | StudioGAN | 8.342 | 51.414 | 0.898 | 0.698 | Cfg | Log | Link |
| BigGAN-Mod | StudioGAN | 11.998 | 31.920 | 0.956 | 0.879 | Cfg | Log | Link |
| BigGAN-Mod + CR | StudioGAN | 14.887 | 21.488 | 0.969 | 0.936 | Cfg | Log | Link |
| BigGAN-Mod + ICR | StudioGAN | 5.605 | 91.326 | 0.525 | 0.399 | Cfg | Log | Link |
| BigGAN-Mod + DiffAugment | StudioGAN | 17.075 | 16.338 | 0.979 | 0.971 | Cfg | Log | Link |
| ContraGAN | StudioGAN | 13.494 | 27.027 | 0.975 | 0.902 | Cfg | Log | Link |
| ContraGAN + CR | StudioGAN | 15.623 | 19.716 | 0.983 | 0.941 | Cfg | Log | Link |
| ContraGAN + ICR | StudioGAN | 15.830 | 21.940 | 0.980 | 0.944 | Cfg | Log | Link |
| ContraGAN + DiffAugment | StudioGAN | 17.303 | 15.755 | 0.984 | 0.962 | Cfg | Log | Link |
| ReACGAN | StudioGAN | 14.162 | 26.586 | 0.975 | 0.897 | Cfg | Log | Link |
| ReACGAN + CR | StudioGAN | 16.505 | 20.251 | 0.982 | 0.934 | Cfg | Log | Link |
| ReACGAN + DiffAugment | StudioGAN | 20.479 | 14.348 | 0.988 | 0.971 | Cfg | Log | Link |

ImageNet (3x128x128)

When training, we used the command below.

With 8 TESLA V100 GPUs, training BigGAN2048 takes about a month.

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -t -hdf5 -l -sync_bn -metrics is fid prdc --eval_type "valid" -cfg CONFIG_PATH -std_stat -std_max STD_MAX -std_step STD_STEP -data DATA_PATH -save SAVE_PATH

IS, FID, and F_beta values are computed using 50K validation and 50K generated images.

| Method | Reference | IS(⭡) | FID(⭣) | F_1/8(⭡) | F_8(⭡) | Cfg | Log | Weights |
|---|---|---|---|---|---|---|---|---|
| SNGAN | StudioGAN | 32.247 | 26.792 | 0.938 | 0.913 | Cfg | Log | Link |
| SAGAN | StudioGAN | 29.848 | 34.726 | 0.849 | 0.914 | Cfg | Log | Link |
| BigGAN | Paper | 98.84 | 8.7 | - | - | - | - | - |
| BigGAN + TTUR | Paper | - | 21.072 | - | - | Cfg | - | - |
| BigGAN | StudioGAN | 28.633 | 24.684 | 0.941 | 0.921 | Cfg | Log | Link |
| BigGAN | StudioGAN | 99.705 | 7.893 | 0.985 | 0.989 | Cfg | Log | Link |
| ContraGAN + TTUR | Paper | 31.101 | 19.693 | 0.951 | 0.927 | Cfg | Log | Link |
| ContraGAN | StudioGAN | 25.249 | 25.161 | 0.947 | 0.855 | Cfg | Log | Link |
| ReACGAN | StudioGAN | 67.416 | 13.907 | 0.977 | 0.977 | Cfg | Log | Link |
| ReACGAN | StudioGAN | 96.299 | 8.206 | 0.989 | 0.989 | Cfg | Log | Link |

AFHQ (3x512x512) using StyleGAN2

When training and evaluating, we used the command below.

CUDA_VISIBLE_DEVICES=0,1,2,3 python3 src/main.py -t -hdf5 -l -mpc -metrics is fid prdc -ref "train" -cfg CONFIG_PATH -data DATA_PATH -save SAVE_PATH

IS, FID, Dns, and Cvg values are computed using 14,630 train and 14,630 generated images.

| Method | Reference | IS(⭡) | FID(⭣) | Dns(⭡) | Cvg(⭡) | Cfg | Log | Weights |
|---|---|---|---|---|---|---|---|---|
| StyleGAN2 + ADA | StudioGAN | 12.907 | 4.992 | 1.282 | 0.835 | Cfg | Log | Link |
| StyleGAN2 + ADA + D2D-CE | StudioGAN | 12.792 | 4.950 | - | - | Cfg | Log | Link |

StudioGAN thanks the following repositories for sharing their code:

Exponential Moving Average: https://github.com/ajbrock/BigGAN-PyTorch

Synchronized BatchNorm: https://github.com/vacancy/Synchronized-BatchNorm-PyTorch

Self-Attention module: https://github.com/voletiv/self-attention-GAN-pytorch

Implementation Details: https://github.com/ajbrock/BigGAN-PyTorch

Architecture Details: https://github.com/google/compare_gan

StyleGAN2: https://github.com/NVlabs/stylegan2

DiffAugment: https://github.com/mit-han-lab/data-efficient-gans

Adaptive Discriminator Augmentation: https://github.com/NVlabs/stylegan2

Tensorflow IS: https://github.com/openai/improved-gan

Tensorflow FID: https://github.com/bioinf-jku/TTUR

Pytorch FID: https://github.com/mseitzer/pytorch-fid

Tensorflow Precision and Recall: https://github.com/msmsajjadi/precision-recall-distributions

PyTorch Improved Precision and Recall: https://github.com/clovaai/generative-evaluation-prdc

PyTorch Density and Coverage: https://github.com/clovaai/generative-evaluation-prdc

License

PyTorch-StudioGAN is an open-source library under the MIT license (MIT). However, portions of the library are available under distinct license terms: StyleGAN and StyleGAN-ADA are licensed under the NVIDIA source code license, synchronized batch normalization is licensed under the MIT license, the HDF5 generator is licensed under the MIT license, and differentiable SimCLR-style augmentations are licensed under the MIT license.

Citation

StudioGAN is established for the following research projects. Please cite our work if you use StudioGAN.

@inproceedings{kang2020ContraGAN,
  title = {{ContraGAN: Contrastive Learning for Conditional Image Generation}},
  author = {Minguk Kang and Jaesik Park},
  booktitle = {Conference on Neural Information Processing Systems (NeurIPS)},
  year = {2020}
}

@inproceedings{kang2021ReACGAN,
  title = {{Rebooting ACGAN: Auxiliary Classifier GANs with Stable Training}},
  author = {Minguk Kang and Woohyeon Shim and Minsu Cho and Jaesik Park},
  booktitle = {Conference on Neural Information Processing Systems (NeurIPS)},
  year = {2021}
}

[1] Experiments on Tiny ImageNet are conducted using the ResNet architecture instead of CNN.

[2] Our re-implementation of ACGAN (ICML'17) with slight modifications, which substantially improve performance in the CIFAR10 experiment.

[3] Our re-implementation of BigGAN/BigGAN-Deep (ICLR'19) with slight modifications, which substantially improve performance in the CIFAR10 experiment.

[4] IS is computed using Tensorflow official code.

[5] The difference in FID values between the original StyleGAN2 and StudioGAN implementation is caused by the presence of random flip augmentation.