carpedm20 / Spiral Tensorflow

Licence: other

in progress

Stars: ✭ 117

Programming Languages

python


Projects that are alternatives to or similar to Spiral Tensorflow

Accel Brain Code

The purpose of this repository is to make prototypes as case studies in the context of proof of concept (PoC) and research and development (R&D) that I have written about on my website. The main research topics are Auto-Encoders in relation to representation learning, statistical machine learning for energy-based models, generative adversarial networks (GANs), Deep Reinforcement Learning such as Deep Q-Networks, semi-supervised learning, and neural network language models for natural language processing.

Stars: ✭ 166 (+41.88%)

Mutual labels: reinforcement-learning, generative-adversarial-network

Pytorch Rl

This repository contains model-free deep reinforcement learning algorithms implemented in Pytorch

Stars: ✭ 394 (+236.75%)

Mutual labels: reinforcement-learning, generative-adversarial-network

Gail Tf

Tensorflow implementation of generative adversarial imitation learning

Stars: ✭ 179 (+52.99%)

Mutual labels: reinforcement-learning, generative-adversarial-network

Mlds2018spring

Machine Learning and having it Deep and Structured (MLDS) in 2018 spring

Stars: ✭ 124 (+5.98%)

Mutual labels: reinforcement-learning, generative-adversarial-network

Udacity Deep Learning Nanodegree

This is just a collection of projects made during my Deep Learning Nanodegree by Udacity

Stars: ✭ 15 (-87.18%)

Mutual labels: reinforcement-learning, generative-adversarial-network

Kbgan

Code for "KBGAN: Adversarial Learning for Knowledge Graph Embeddings" https://arxiv.org/abs/1711.04071

Stars: ✭ 186 (+58.97%)

Mutual labels: reinforcement-learning, generative-adversarial-network

Awesome Tensorlayer

A curated list of dedicated resources and applications

Stars: ✭ 248 (+111.97%)

Mutual labels: reinforcement-learning, generative-adversarial-network

Leakgan

Code for the paper "Long Text Generation via Adversarial Training with Leaked Information" (AAAI 2018). Text generation using GAN and Hierarchical Reinforcement Learning.

Stars: ✭ 533 (+355.56%)

Mutual labels: reinforcement-learning, generative-adversarial-network

Pytorch Rl

PyTorch implementation of Deep Reinforcement Learning: Policy Gradient methods (TRPO, PPO, A2C) and Generative Adversarial Imitation Learning (GAIL). Fast Fisher vector product TRPO.

Stars: ✭ 658 (+462.39%)

Mutual labels: reinforcement-learning, generative-adversarial-network

Exposure

Learning infinite-resolution image processing with GAN and RL from unpaired image datasets, using a differentiable photo editing model.

Stars: ✭ 605 (+417.09%)

Mutual labels: reinforcement-learning, generative-adversarial-network

Deterministic Gail Pytorch

PyTorch implementation of Deterministic Generative Adversarial Imitation Learning (GAIL) for Off Policy learning

Stars: ✭ 44 (-62.39%)

Mutual labels: reinforcement-learning, generative-adversarial-network

Conversational Ai

Conversational AI Reading Materials

Stars: ✭ 34 (-70.94%)

Mutual labels: reinforcement-learning, generative-adversarial-network

Chemgan Challenge

Code for the paper: Benhenda, M. 2017. ChemGAN challenge for drug discovery: can AI reproduce natural chemical diversity? arXiv preprint arXiv:1708.08227.

Stars: ✭ 98 (-16.24%)

Mutual labels: reinforcement-learning, generative-adversarial-network

Studybook

Study E-Book (Computer Vision, Deep Learning, Machine Learning, Math, NLP, Python, Reinforcement Learning)

Stars: ✭ 1,457 (+1145.3%)

Mutual labels: reinforcement-learning

A Nice Mc

Code for "A-NICE-MC: Adversarial Training for MCMC"

Stars: ✭ 115 (-1.71%)

Mutual labels: generative-adversarial-network

Ctc Executioner

Master Thesis: Limit order placement with Reinforcement Learning

Stars: ✭ 112 (-4.27%)

Mutual labels: reinforcement-learning

Gpnd

Generative Probabilistic Novelty Detection with Adversarial Autoencoders

Stars: ✭ 112 (-4.27%)

Mutual labels: generative-adversarial-network

Reinforcement Learning An Introduction

Python Implementation of Reinforcement Learning: An Introduction

Stars: ✭ 11,042 (+9337.61%)

Mutual labels: reinforcement-learning

Hccg Cyclegan

Handwritten Chinese Characters Generation

Stars: ✭ 115 (-1.71%)

Mutual labels: generative-adversarial-network

Textsum Gan

Tensorflow re-implementation of GAN for text summarization

Stars: ✭ 111 (-5.13%)

Mutual labels: generative-adversarial-network

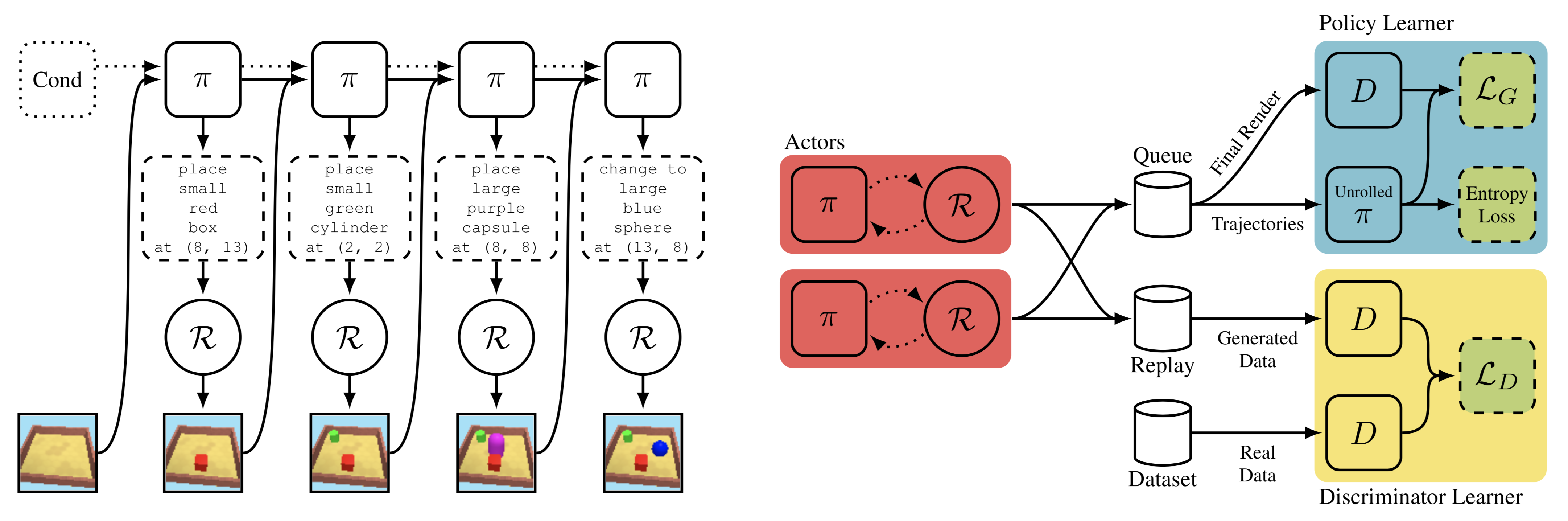

SPIRAL in TensorFlow (in progress)

TensorFlow implementation of Synthesizing Programs for Images using Reinforced Adversarial Learning (SPIRAL).

SPIRAL is an adversarially trained agent that generates a program, which a graphics engine then executes to interpret and sample images. The agent is trained to fool a discriminator using distributed reinforcement learning, without any supervision.
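To make the generate, execute, and score loop concrete, here is a minimal NumPy sketch. The grid "renderer", the uniform placeholder policy, and the rule that only the final canvas is rewarded are assumptions made for illustration; the repository itself draws MyPaint strokes and learns the policy with a neural network. `render`, `rollout`, and `uniform_policy` are hypothetical names.

```python
import numpy as np


def render(program, size=8):
    """Hypothetical toy renderer: each action is a flat index into a
    size x size grid, and executing it paints that cell with ink (1.0)."""
    canvas = np.zeros((size, size), dtype=np.float32)
    for action in program:
        row, col = divmod(int(action), size)
        canvas[row, col] = 1.0
    return canvas


def rollout(policy, score_fn, episode_length=3, size=8, seed=0):
    """Sample a program one action at a time, execute it, and score only the result."""
    rng = np.random.default_rng(seed)
    program = []
    for _ in range(episode_length):
        probs = policy(program)  # distribution over the size * size actions
        program.append(int(rng.choice(size * size, p=probs)))
    canvas = render(program, size)
    rewards = np.zeros(episode_length, dtype=np.float32)
    rewards[-1] = score_fn(canvas)  # assumption: intermediate steps get zero reward
    return program, canvas, rewards


def uniform_policy(program):
    """Placeholder policy that ignores the history and picks any cell uniformly."""
    return np.full(64, 1.0 / 64)


program, canvas, rewards = rollout(uniform_policy, score_fn=lambda c: c.mean())
```

In the adversarial setting, `score_fn` would be the discriminator; here it is just the fraction of inked cells so the sketch stays self-contained.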

In short, Distributed RL + GAN + Program synthesis.
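The "distributed RL" part means many actors generate episodes in parallel while a learner consumes them. The repository builds on openai/universe-starter-agent and TensorFlow's distributed runtime; the sketch below swaps in plain Python threads and a `queue.Queue` purely to show the actor/learner data flow. `actor` and `learner` are hypothetical functions, and the "rollouts" are dummy dictionaries.

```python
import queue
import threading


def actor(worker_id, num_episodes, rollouts):
    """Hypothetical actor: produce rollouts and hand them to the learner."""
    for episode in range(num_episodes):
        # A real actor would run the policy in its own painting environment here.
        rollouts.put({"worker": worker_id, "episode": episode, "reward": float(episode)})


def learner(rollouts, total, batch_size):
    """Hypothetical learner: consume rollouts in batches (one update per batch)."""
    batches = []
    received = 0
    while received < total:
        batch = [rollouts.get() for _ in range(min(batch_size, total - received))]
        received += len(batch)
        batches.append(batch)  # a real learner would compute gradients here
    return batches


num_workers, episodes_per_worker = 4, 5
rollouts = queue.Queue(maxsize=8)  # bounded, so fast actors block instead of racing ahead
threads = [threading.Thread(target=actor, args=(i, episodes_per_worker, rollouts))
           for i in range(num_workers)]
for t in threads:
    t.start()
batches = learner(rollouts, num_workers * episodes_per_worker, batch_size=6)
for t in threads:
    t.join()
```

The bounded queue is a simple form of back-pressure: actors cannot get arbitrarily far ahead of the learner's current policy.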

Prerequisites

- Python 2.7

- MyPaint 1.2.x

- TensorFlow 1.6.0
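MyPaint is the graphics engine that turns the agent's actions into pixels. As a rough illustration only (a NumPy toy, not libmypaint), the sketch below draws one stroke as a quadratic Bézier curve from the current pen position through a control point to an end point. Treating `--curve=False` as "straight segments only" and `--jump=True` as "the pen may move without drawing" is our reading of the flags used under Usage, not something this README states; `quadratic_bezier` and `apply_stroke` are hypothetical helpers, and points are `(x, y)` indexed as `canvas[y, x]`.

```python
import numpy as np


def quadratic_bezier(p0, p1, p2, num_points=32):
    """Points on B(t) = (1-t)^2 p0 + 2(1-t)t p1 + t^2 p2 for t in [0, 1]."""
    t = np.linspace(0.0, 1.0, num_points)[:, None]
    p0, p1, p2 = (np.asarray(p, dtype=np.float64) for p in (p0, p1, p2))
    return (1 - t) ** 2 * p0 + 2 * (1 - t) * t * p1 + t ** 2 * p2


def apply_stroke(canvas, pen, control, end, jump=False, curve=True):
    """Draw from the current pen position to `end` and return the new pen position.

    Assumed flag semantics: jump=True moves the pen without ink;
    curve=False ignores `control` and draws a straight segment.
    """
    if not jump:
        if not curve:
            # Putting the control point at the midpoint makes the Bezier a line.
            control = (np.asarray(pen, float) + np.asarray(end, float)) / 2.0
        for x, y in np.rint(quadratic_bezier(pen, control, end)).astype(int):
            if 0 <= y < canvas.shape[0] and 0 <= x < canvas.shape[1]:
                canvas[y, x] = 1.0
    return end


canvas = np.zeros((32, 32))
pen = (2, 2)
pen = apply_stroke(canvas, pen, control=(30, 2), end=(30, 30))          # curved stroke
pen = apply_stroke(canvas, pen, control=(0, 0), end=(5, 25), jump=True)  # pen-up move
```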

Usage

Install prerequisites with:

./install.sh

pip install -r requirements.txt

To debug a SPIRAL model:

python run.py --num_workers 8 --env simple --episode_length=1 \
--location_size=8 --conditional=True \
--loss=l2 --policy_batch_size=1
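With `--conditional=True` the agent is asked to reproduce a given target image, and `--loss=l2` presumably replaces the discriminator with a pixel-distance reward. A minimal sketch of such a reward, assuming negative mean squared error on the final canvas (the exact normalization in `run.py` may differ; `l2_reward` is a hypothetical name):

```python
import numpy as np


def l2_reward(canvas, target):
    """Negative mean squared error between the final canvas and the target.

    Higher (closer to 0) is better; a perfect reconstruction scores 0.
    """
    canvas = np.asarray(canvas, dtype=np.float32)
    target = np.asarray(target, dtype=np.float32)
    return -float(np.mean((canvas - target) ** 2))


target = np.zeros((8, 8), dtype=np.float32)
target[3, 4] = 1.0                 # a single inked cell to reproduce
blank = np.zeros_like(target)      # an agent that drew nothing
```

A fixed distance like this is easy to debug, but it rewards blurry "average" drawings; that limitation is the usual motivation for switching to a learned discriminator (`--loss=gan`).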

To train a SPIRAL model:

python run.py --num_workers 16 --env simple_mnist --episode_length=3 \
--color_channel=1 --location_size=32 --loss=gan --num_gpu=1 \
--disc_dim=8 --conditional=False \
--mnist_nums=1,7 --jump=False --curve=False
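With `--loss=gan` the reward comes from a discriminator that learns to tell real MNIST digits from the agent's drawings. The sketch below swaps in a single logistic-regression layer (the repository uses a neural network discriminator) and toy "real" and "fake" canvases, just to show the two roles: the discriminator trains on real versus generated images, and its probability that a canvas is real becomes the agent's reward. `LogisticDiscriminator` is a hypothetical class.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class LogisticDiscriminator:
    """Hypothetical stand-in discriminator: logistic regression on flattened canvases."""

    def __init__(self, num_pixels, lr=0.5):
        self.w = np.zeros(num_pixels)
        self.b = 0.0
        self.lr = lr

    def prob_real(self, images):
        x = images.reshape(len(images), -1)
        return sigmoid(x @ self.w + self.b)

    def train_step(self, real, fake):
        """One gradient step on binary cross-entropy (real -> 1, fake -> 0)."""
        x = np.concatenate([real, fake]).reshape(len(real) + len(fake), -1)
        y = np.concatenate([np.ones(len(real)), np.zeros(len(fake))])
        grad = sigmoid(x @ self.w + self.b) - y  # d(BCE)/d(logit)
        self.w -= self.lr * x.T @ grad / len(y)
        self.b -= self.lr * grad.mean()

    def reward(self, canvas):
        """Agent reward for one final canvas: the probability that it is real."""
        return float(self.prob_real(canvas[None])[0])


rng = np.random.default_rng(0)
real = np.zeros((16, 8, 8))
real[:, :, 4] = 1.0                                        # toy "digits": a vertical bar
fake = (rng.random((16, 8, 8)) < 0.1).astype(np.float64)  # sparse random ink
disc = LogisticDiscriminator(num_pixels=64)
for _ in range(200):
    disc.train_step(real, fake)
```

In full training the two steps alternate: the agent improves to raise `reward`, and the discriminator is retrained on its newer, harder fakes.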

python run.py --num_workers 24 --env simple_mnist --episode_length=6 \
--color_channel=1 --location_size=32 --loss=gan --num_gpu=2 \
--disc_dim=64 --conditional=False \
--mnist_nums=0,1,2,3,4,5,6,7,8,9 --jump=True

python run.py --num_workers 12 --env simple_mnist --episode_length=2 \
--color_channel=1 --location_size=32 --conditional=True \
--mnist_nums=1 --loss=gan

python run.py --num_workers 24 --env simple_mnist --episode_length=3 \
--color_channel=1 --location_size=32 --conditional=True \
--mnist_nums=1,2,7 --loss=l2

python run.py --num_workers 24 --env simple_mnist --episode_length=3 \
--color_channel=1 --location_size=32 --conditional=True \
--mnist_nums=1,2,7 --loss=gan --num_gpu=2

python run.py --num_workers 24 --env simple_mnist --episode_length=5 \
--color_channel=1 --location_size=32 --conditional=True \
--mnist_nums=0,1,2,7 --loss=gan --num_gpu=2
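The commands above vary `--mnist_nums`, which appears to restrict training to the listed digit classes. Assuming that meaning, the sketch below parses the comma-separated flag value and filters an image/label array pair; `parse_mnist_nums` and `select_digits` are hypothetical helpers and the "images" are dummy arrays, not MNIST.

```python
import numpy as np


def parse_mnist_nums(flag_value):
    """Turn a flag string such as "1,2,7" into a sorted list of unique digit labels."""
    digits = sorted({int(tok) for tok in flag_value.split(",") if tok.strip()})
    if any(d < 0 or d > 9 for d in digits):
        raise ValueError(f"MNIST labels must be 0-9, got {digits}")
    return digits


def select_digits(images, labels, flag_value):
    """Keep only the examples whose label appears in the flag value."""
    mask = np.isin(labels, parse_mnist_nums(flag_value))
    return images[mask], labels[mask]


images = np.arange(10 * 4).reshape(10, 2, 2)  # ten dummy 2x2 "images"
labels = np.arange(10)                         # one example of each digit
subset, subset_labels = select_digits(images, labels, "1,2,7")
```

Fewer classes (for example `--mnist_nums=1,7`) give the agent a narrower target distribution, which is presumably why the smaller configurations are listed first.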

Results

(in progress)

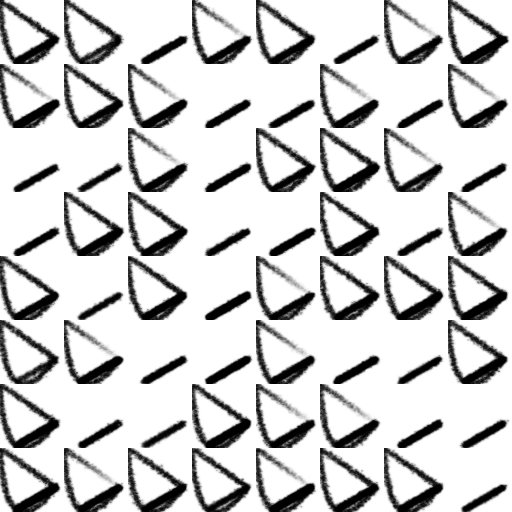

Randomly generated samples at an early stage:

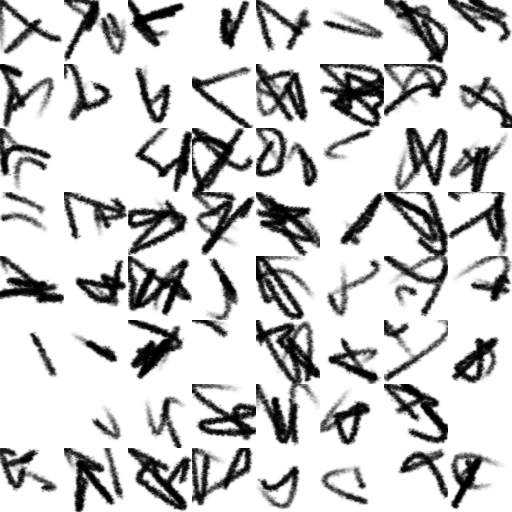

Incorrectly converged samples at an early stage:

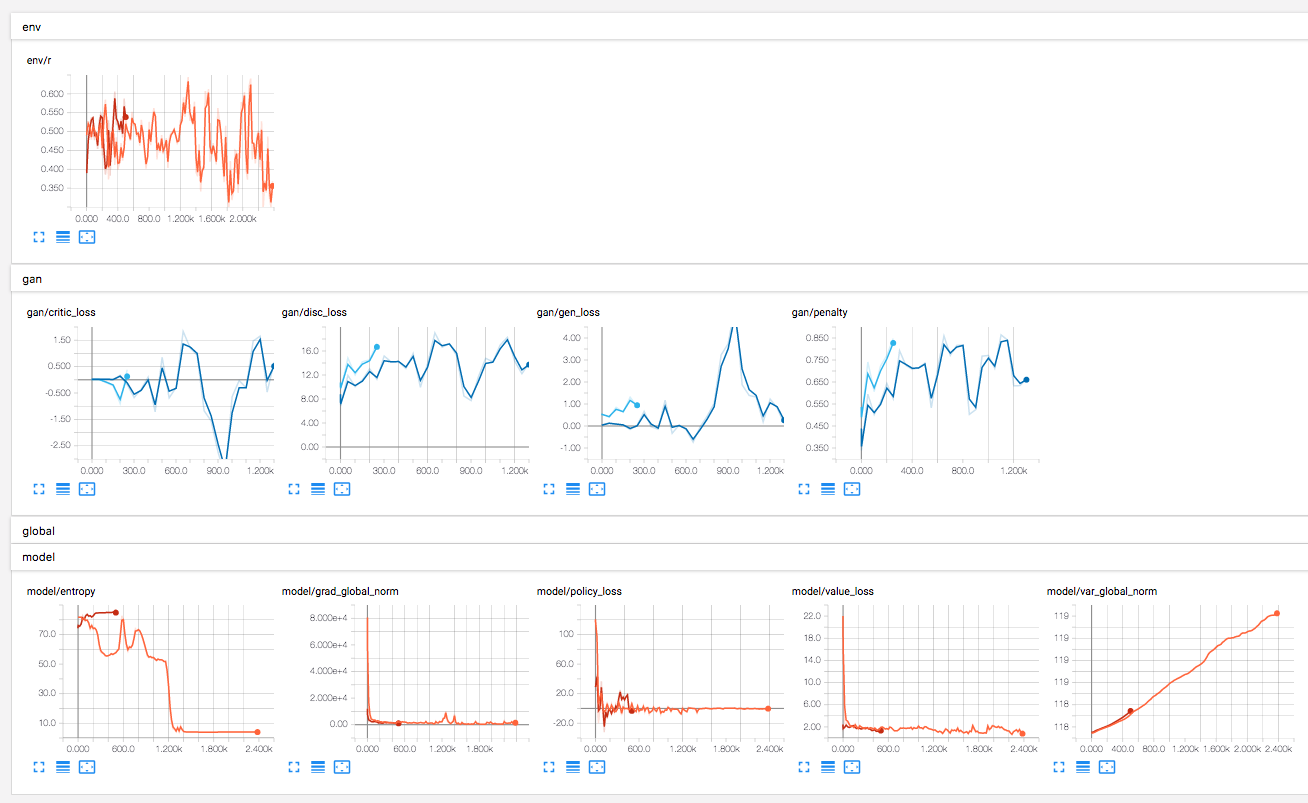

TensorBoard:

To-do

- [x] IMPALA A2C

- [ ] IMPALA V-trace

- [x] Simple environment (debugging)

- [x] Find a correct libmypaint setting

- [x] MNIST environment

- [x] ReplayThread (--loss=gan)

- [x] --num_gpu=2 test

- [x] --conditional=True (needs more details)

- [ ] Replay memory needs more detailed information

- [ ] Population Based Training (to be honest, I have no plans for this)
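The ReplayThread and replay memory items suggest that the discriminator is fed generated canvases from a buffer rather than only from the latest batch. One plausible shape for such a buffer is sketched below; `ReplayMemory` is a hypothetical class, the canvases are placeholder strings, and the lock exists because actors push while another thread samples.

```python
import random
import threading
from collections import deque


class ReplayMemory:
    """Hypothetical fixed-size store of rendered canvases for discriminator training.

    Actors push final canvases; a separate thread samples "fake" mini-batches,
    so the discriminator sees a mix of recent and slightly older outputs.
    """

    def __init__(self, capacity, seed=None):
        self.buffer = deque(maxlen=capacity)  # oldest canvases are evicted first
        self.lock = threading.Lock()          # push and sample may run on different threads
        self.rng = random.Random(seed)

    def push(self, canvas):
        with self.lock:
            self.buffer.append(canvas)

    def sample(self, batch_size):
        with self.lock:
            if len(self.buffer) < batch_size:
                raise ValueError("not enough canvases in replay memory")
            return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        with self.lock:
            return len(self.buffer)


memory = ReplayMemory(capacity=5, seed=0)
for step in range(8):
    memory.push(f"canvas-{step}")  # stand-ins for rendered images
batch = memory.sample(3)
```

Sampling without replacement keeps each mini-batch free of duplicates; a larger capacity trades freshness for diversity.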

References

This code is heavily based on openai/universe-starter-agent.

- Population Based Training of Neural Networks

- Asynchronous Methods for Deep Reinforcement Learning

- IMPALA: Scalable Distributed Deep-RL with Importance Weighted Actor-Learner Architectures
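The To-do list marks IMPALA V-trace as not yet implemented. For orientation, the sketch below computes V-trace value targets for one trajectory following the IMPALA paper's backward recursion with λ = 1, v_s − V(x_s) = δ_s + γ c_s (v_{s+1} − V(x_{s+1})), where δ_t = ρ_t (r_t + γ V(x_{t+1}) − V(x_t)), ρ_t = min(ρ̄, π/μ), and c_t = min(c̄, π/μ). It is a NumPy illustration, not code from this repository; `vtrace_targets` is a hypothetical name.

```python
import numpy as np


def vtrace_targets(rewards, values, bootstrap_value, log_rhos,
                   gamma=0.99, rho_bar=1.0, c_bar=1.0):
    """V-trace value targets v_s for a single trajectory of length T.

    log_rhos[t] = log pi(a_t | x_t) - log mu(a_t | x_t), i.e. learner policy
    versus the (possibly stale) actor policy that generated the data.
    """
    rewards = np.asarray(rewards, dtype=np.float64)
    values = np.asarray(values, dtype=np.float64)
    rhos = np.exp(np.asarray(log_rhos, dtype=np.float64))
    clipped_rhos = np.minimum(rho_bar, rhos)  # truncation for the TD errors
    cs = np.minimum(c_bar, rhos)              # truncated "trace cutting" coefficients
    next_values = np.append(values[1:], bootstrap_value)
    deltas = clipped_rhos * (rewards + gamma * next_values - values)

    vs_minus_v = np.zeros_like(values)
    acc = 0.0  # v_{s+1} - V(x_{s+1}); zero past the end, where we bootstrap
    for t in reversed(range(len(rewards))):
        acc = deltas[t] + gamma * cs[t] * acc
        vs_minus_v[t] = acc
    return values + vs_minus_v


rewards, values = [1.0, 0.0, 2.0], [0.5, 0.2, 0.1]
on_policy = vtrace_targets(rewards, values, 3.0, np.zeros(3), gamma=0.9)
```

When the actor and learner policies agree (all log-ratios zero), the targets reduce to ordinary bootstrapped n-step returns; here that gives 4.807, 4.23, and 4.7.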

Author

Taehoon Kim / @carpedm20
