
Spark on Kubernetes Cluster Helm Chart

This repo contains the Helm chart for a fully functional, production-ready Spark on Kubernetes cluster setup integrated with the Spark History Server, JupyterHub and the Prometheus stack.

Refer to the design concept below for the implementation details.

Getting Started

Initialize Helm (for Helm 2.x)

To deploy the Spark on Kubernetes cluster with Helm charts, we first need to initialize the Helm client, which also installs Tiller, the Helm 2.x server-side component.

kubectl create serviceaccount tiller --namespace kube-system
kubectl create clusterrolebinding tiller --clusterrole cluster-admin --serviceaccount=kube-system:tiller
helm init --upgrade --service-account tiller --tiller-namespace kube-system
kubectl get pods --namespace kube-system -w
# Wait until Pod `tiller-deploy-*` moves to Running state
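Instead of watching the Pod list interactively, the wait can be scripted. This is a minimal sketch, assuming `kubectl` points at the target cluster and that `helm init` created a Deployment named `tiller-deploy` in `kube-system` (the Pod name prefix above suggests so); the helper name `wait_for_tiller` is hypothetical.

```bash
# Hypothetical helper: block until the Tiller Deployment created by `helm init`
# has finished rolling out, or fail once the timeout (default 120s) expires.
# Assumes the Deployment is named `tiller-deploy` in the `kube-system` namespace.
wait_for_tiller() {
  local timeout="${1:-120s}"
  kubectl rollout status deployment/tiller-deploy \
    --namespace kube-system --timeout="$timeout"
}
```

For example, `wait_for_tiller 180s && helm version` only queries Helm once Tiller is available, which is convenient in CI scripts where an interactive `-w` watch never returns.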

Install Livy

The basic Spark on Kubernetes setup consists of only the Apache Livy server deployment, which can be installed with the Livy Helm chart.

helm repo add jahstreet https://jahstreet.github.io/helm-charts
helm repo update
kubectl create namespace livy
helm upgrade --install livy --namespace livy jahstreet/livy \
  --set rbac.create=true # If you are running an RBAC-enabled Kubernetes cluster
kubectl get pods --namespace livy -w
# Wait until Pod `livy-0` moves to Running state
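A scripted readiness check can wait for the Pod and then confirm that the Livy REST API answers. This sketch assumes the Pod `livy-0` in namespace `livy` from the commands above and that `curl` is available inside the Livy image (the job submission below relies on that too); `livy_ready_check` is a hypothetical name. On success it prints the `GET /batches` response, a JSON object with `from`, `total` and `sessions` fields.

```bash
# Hypothetical helper: wait until the Livy Pod reports the Ready condition,
# then query GET /batches from inside the Pod to check the REST API responds.
# Defaults follow the install commands above: namespace `livy`, Pod `livy-0`.
livy_ready_check() {
  local ns="${1:-livy}" pod="${2:-livy-0}" timeout="${3:-300s}"
  kubectl wait --namespace "$ns" --for=condition=Ready "pod/$pod" \
    --timeout="$timeout" || return 1
  kubectl exec --namespace "$ns" "$pod" -- \
    curl -s "http://localhost:8998/batches"
}
```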

For more advanced Spark cluster setups, refer to the Documentation page.

Run Spark Job

Now that Livy is up and running, we can submit a Spark job via the Livy REST API.

kubectl exec --namespace livy livy-0 -- \
  curl -s -k -H 'Content-Type: application/json' -X POST \
  -d '{
        "name": "SparkPi-01",
        "className": "org.apache.spark.examples.SparkPi",
        "numExecutors": 2,
        "file": "local:///opt/spark/examples/jars/spark-examples_2.11-2.4.5.jar",
        "args": ["10000"],
        "conf": {
          "spark.kubernetes.namespace": "livy"
        }
      }' "http://localhost:8998/batches" | jq
# Record the "id" field of the response as BATCH_ID
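Recording the batch id can also be automated. The sketch below submits the same payload and extracts the top-level `"id"` field with plain bash pattern matching, so it does not need `jq`; `submit_spark_pi` and `livy_batch_id` are hypothetical names, and the regex assumes the id is the first `"id"` key in the response, as in Livy's batch JSON.

```bash
# Hypothetical helper: print the numeric top-level "id" of a Livy batch response.
# Dependency-free bash regex; with jq installed, `jq -r '.id'` is more robust.
livy_batch_id() {
  local json="$1"
  if [[ "$json" =~ \"id\"[[:space:]]*:[[:space:]]*([0-9]+) ]]; then
    printf '%s\n' "${BASH_REMATCH[1]}"
  else
    return 1
  fi
}

# Hypothetical wrapper: POST the SparkPi batch from above through the Livy Pod
# and print only the id of the created batch.
submit_spark_pi() {
  local body='{"name": "SparkPi-01", "className": "org.apache.spark.examples.SparkPi", "numExecutors": 2, "file": "local:///opt/spark/examples/jars/spark-examples_2.11-2.4.5.jar", "args": ["10000"], "conf": {"spark.kubernetes.namespace": "livy"}}'
  local response
  response=$(kubectl exec --namespace livy livy-0 -- \
    curl -s -H 'Content-Type: application/json' -X POST -d "$body" \
    "http://localhost:8998/batches") || return 1
  livy_batch_id "$response"
}
```

Usage: `BATCH_ID=$(submit_spark_pi) && echo "Submitted batch $BATCH_ID"` leaves the id in the variable used by the tracking commands below.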

Track running job

To track the running Spark job, we can use the standard Kubernetes tools as well as the Livy REST API.

# Watch running Spark Pods
kubectl get pods --namespace livy -w --show-labels
# Check Livy batch status
kubectl exec --namespace livy livy-0 -- curl -s http://localhost:8998/batches/$BATCH_ID | jq
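For unattended runs, the status check can become a polling loop over `GET /batches/<id>/state`, which returns the batch `id` and `state`. A sketch, assuming the same Pod and namespace as above; the helper name, the 10-second default interval and the choice of `success`, `dead`, `killed` and `error` as final states are assumptions made for illustration.

```bash
# Hypothetical helper: poll GET /batches/<id>/state through the Livy Pod until
# the batch reaches a final state, print that state and return 0 on "success",
# 2 on dead/killed/error, 1 if the response cannot be parsed.
wait_for_batch() {
  local batch_id="$1" interval="${2:-10}" response state
  while true; do
    response=$(kubectl exec --namespace livy livy-0 -- \
      curl -s "http://localhost:8998/batches/${batch_id}/state") || return 1
    if [[ "$response" =~ \"state\"[[:space:]]*:[[:space:]]*\"([a-z_]+)\" ]]; then
      state="${BASH_REMATCH[1]}"
    else
      echo "unexpected response: $response" >&2
      return 1
    fi
    case "$state" in
      success) echo "$state"; return 0 ;;
      dead|killed|error) echo "$state"; return 2 ;;
    esac
    sleep "$interval"   # still starting/running: check again later
  done
}
```

For example, `wait_for_batch "$BATCH_ID" 5` blocks until the SparkPi job finishes; if it fails, `GET /batches/$BATCH_ID/log` returns the collected log lines.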

To configure Ingress for direct access to the Livy UI and Spark UI, refer to the Documentation page.

Spark on Kubernetes Cluster Design Concept

Motivation

Running Spark on Kubernetes has been possible since the Spark v2.3.0 release on February 28, 2018. At the time of writing the latest version is v2.4.5, and it still lacks a lot compared to the well-known Yarn setups on Hadoop-like clusters.

According to the official documentation, users can run Spark on Kubernetes via the spark-submit CLI script. In fact, apart from a set of config options, this is the only Kubernetes-related capability built into Apache Spark. The debugging approach proposed in the Apache docs is hard to use and relies on console-based tools only. Scheduler integration is not available either, which makes it tricky to set up convenient pipelines with Spark on Kubernetes out of the box. Yarn-based Hadoop clusters, in turn, have all the UIs, proxies, schedulers and APIs to make your life easier.

On the other hand, using Kubernetes clusters instead of Yarn ones has definite benefits (as of a July 2019 comparison):

- Pricing. Comparing similar cluster setups on Azure Cloud shows that AKS is about 35% cheaper than HDInsight Spark.

- Scaling. Kubernetes clusters in the cloud support elastic autoscaling with many useful related features, e.g. node pools. Scaling Hadoop clusters is far slower; it can be done either manually or automatically (automatic scaling was in preview as of July 2019).

- Integrations. You can run any workload wrapped into a Docker container in a Kubernetes cluster. But do you know anyone who has ever written a Yarn app in the modern world?

- Support. You don't have full control over the cluster setup provided by the cloud vendor, and the latest software versions usually become available only months after their release. With Kubernetes you can build the image on your own.

- Other Kubernetes pros. CI/CD with Helm, monitoring stacks ready to use in one click, huge popularity and community support, good tooling and, of course, HYPE.

All of that makes it worthwhile to improve Spark on Kubernetes usability in order to take full advantage of modern Kubernetes setups.

Design concept

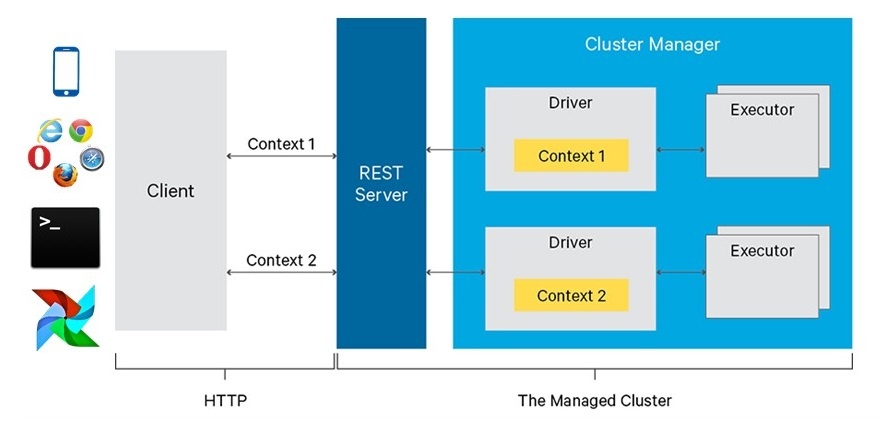

The core of the solution is Apache Livy. Apache Livy is a service that enables easy interaction with a Spark cluster over a REST interface. It is supported by the Apache Incubator community and the Azure HDInsight team, which uses it as a first-class citizen in its Yarn cluster setup and has built many integrations with it. Watch Spark Summit 2016, Cloudera and Microsoft, Livy concepts and motivation for the details.

The downside is that Livy is written for Yarn. But Yarn is just Yet Another resource manager, with a container abstraction that maps onto Kubernetes concepts. Livy is also fully open source, and its codebase is resource-manager-aware enough to allow Yet Another implementation of its interfaces that adds Kubernetes support. So why not? Check the WIP PR with the Kubernetes support proposal for Livy.

The high-level architecture of Livy on Kubernetes is the same as for Yarn.

The Livy server wraps all the logic of interacting with the Spark cluster and provides a simple REST interface.

For example, to submit a Spark job to the cluster you just need to send `POST /batches` with a JSON body containing the Spark config options, which map to the analogous `spark-submit` arguments:

$SPARK_HOME/bin/spark-submit \
  --master k8s://https://<k8s-apiserver-host>:<k8s-apiserver-port> \
  --deploy-mode cluster \
  --name SparkPi \
  --class org.apache.spark.examples.SparkPi \
  --conf spark.executor.instances=5 \
  --conf spark.kubernetes.container.image=<spark-image> \
  local:///path/to/examples.jar

# Has a similar effect to calling Livy via the REST API
curl -H 'Content-Type: application/json' -X POST \
  -d '{
        "name": "SparkPi",
        "className": "org.apache.spark.examples.SparkPi",
        "numExecutors": 5,
        "conf": {
          "spark.kubernetes.container.image": "<spark-image>"
        },
        "file": "local:///path/to/examples.jar"
      }' "http://livy.endpoint.com/batches"

Under the hood, Livy parses the POSTed configs and runs spark-submit for you, passing along the other defaults configured for the Livy server.
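To make the mapping concrete, here is a small sketch that translates a subset of `spark-submit` flags into a `POST /batches` body. The function name and the handled subset (`--name`, `--class`, `--num-executors`, `--conf`, the application file) are assumptions for illustration. `--master` and `--deploy-mode` are dropped on the assumption that the Livy server decides them (`livy.spark.master` and `livy.spark.deploy-mode` in `livy.conf`), and values are not JSON-escaped.

```bash
# Hypothetical helper: translate a subset of spark-submit flags into a Livy
# POST /batches JSON body. spark.executor.instances becomes "numExecutors";
# other --conf key=value pairs go into "conf". Values must not contain
# double quotes or backslashes, because no JSON escaping is done.
spark_submit_to_livy_json() {
  local name="" class="" num_executors="" file="" entry conf_json=""
  local -a conf=()
  while (( $# > 0 )); do
    if [[ "$1" == --* && $# -lt 2 ]]; then
      echo "missing value for $1" >&2; return 1
    fi
    case "$1" in
      --name)          name="$2"; shift 2 ;;
      --class)         class="$2"; shift 2 ;;
      --num-executors) num_executors="$2"; shift 2 ;;
      --conf)
        if [[ "$2" == spark.executor.instances=* ]]; then
          num_executors="${2#*=}"
        else
          conf+=("$2")
        fi
        shift 2 ;;
      --master|--deploy-mode) shift 2 ;;  # assumed to be set on the Livy server
      --*) echo "unsupported flag: $1" >&2; return 1 ;;
      *) file="$1"; shift ;;
    esac
  done
  # Join the remaining --conf entries into "key": "value" pairs.
  for entry in "${conf[@]}"; do
    [[ -n "$conf_json" ]] && conf_json+=", "
    conf_json+="\"${entry%%=*}\": \"${entry#*=}\""
  done
  local body="\"name\": \"${name}\", \"className\": \"${class}\""
  [[ -n "$num_executors" ]] && body+=", \"numExecutors\": ${num_executors}"
  body+=", \"conf\": {${conf_json}}, \"file\": \"${file}\""
  printf '{%s}\n' "$body"
}
```

Fed with the `spark-submit` arguments shown above (with a concrete image name), it produces the same fields as the `curl` example, which can then be POSTed to `/batches`.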

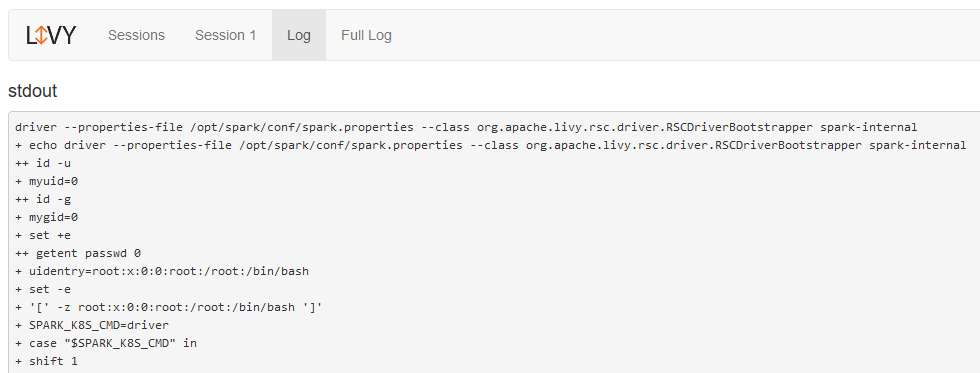

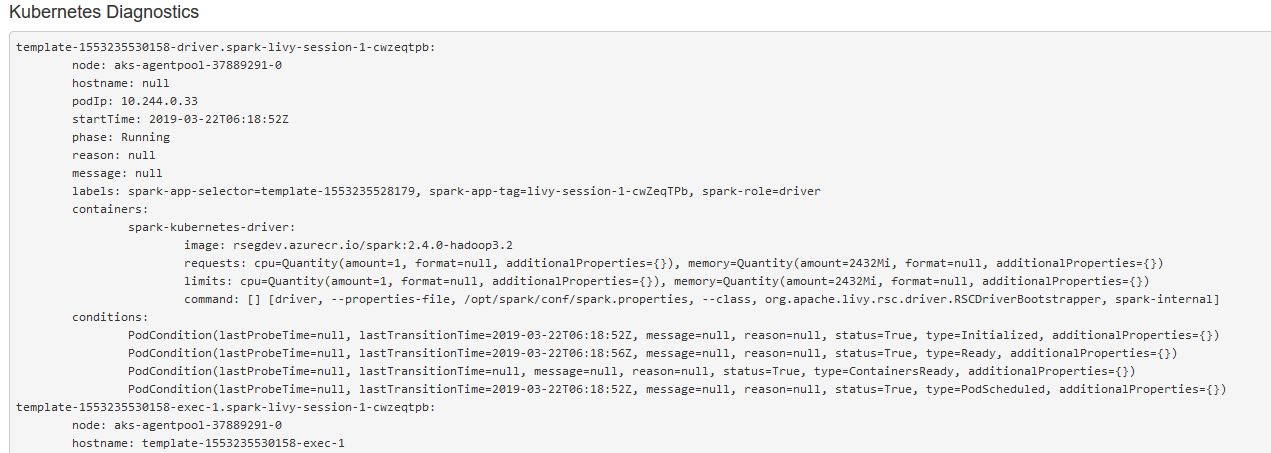

After the job is submitted, Livy uses the Kubernetes API to discover the Spark driver Pod scheduled to the cluster and starts tracking its state. It caches the Spark Pods' logs and detailed descriptions and makes that information available through the Livy REST API, builds routes to the Spark UI, the Spark History Server and monitoring systems with Kubernetes Ingress resources (the Nginx Ingress Controller in particular), and displays the links in the Livy Web UI.
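The driver discovery Livy performs can be reproduced by hand, which is handy when debugging. This is not Livy's own implementation, only a manual equivalent, assuming the `spark-role` Pod label that Spark's Kubernetes backend sets to `driver` or `executor`; the helper names are hypothetical.

```bash
# Hypothetical helper: list Spark Pod names in a namespace by their role,
# using the `spark-role` label set by Spark on driver and executor Pods.
spark_pods() {
  local ns="${1:-livy}" role="${2:-driver}"
  kubectl get pods --namespace "$ns" -l "spark-role=$role" \
    -o jsonpath='{.items[*].metadata.name}'
}

# Hypothetical helper: print the log of a single Spark Pod.
spark_pod_logs() {
  local ns="${1:-livy}" pod="$2"
  kubectl logs --namespace "$ns" "$pod"
}
```

Usage: `for pod in $(spark_pods livy driver); do spark_pod_logs livy "$pod"; done` prints the logs of all driver Pods in the `livy` namespace.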

By providing a REST interface for Spark job orchestration, Livy allows any number of integrations with web/mobile apps and services, and makes it easy to set up flows via job scheduling frameworks.

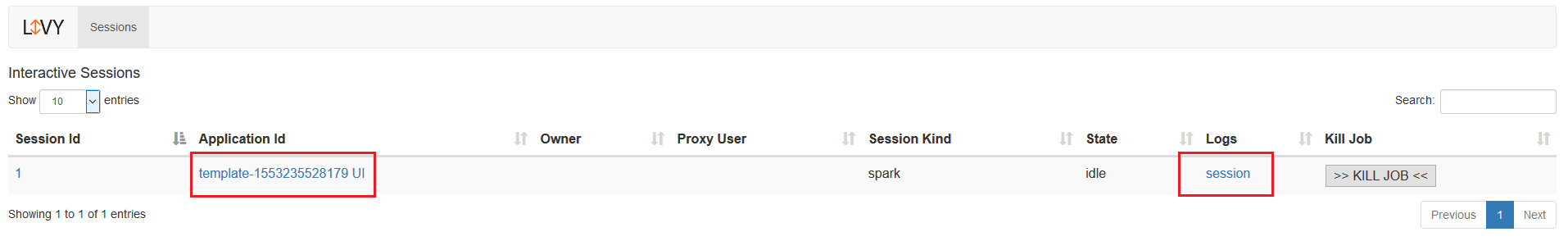

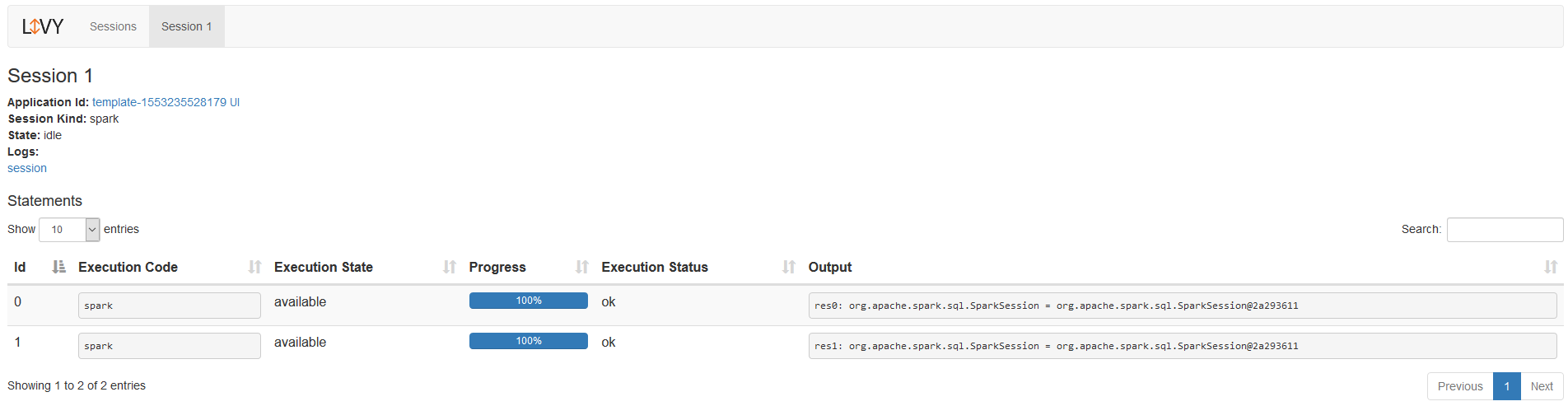

Livy has a built-in lightweight Web UI, which makes it competitive with Yarn in terms of navigation, debugging and cluster discovery.

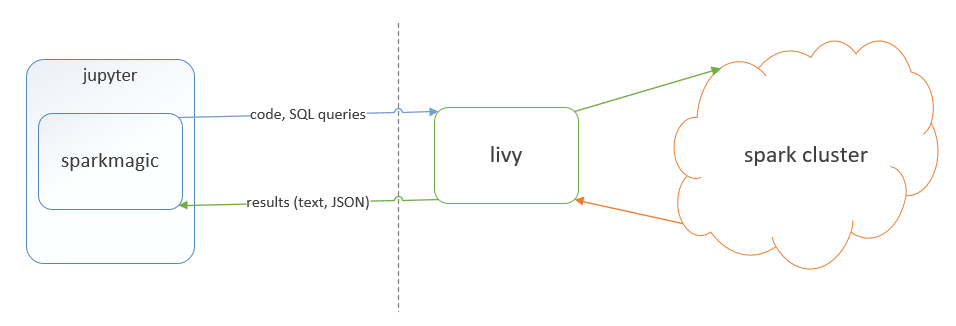

Livy supports interactive sessions with Spark clusters, enabling communication between Spark and application servers and thus the use of Spark in interactive web/mobile applications. Using that feature, Livy integrates with Jupyter Notebook through the Sparkmagic kernel out of the box, giving users an elastic Spark exploratory environment in Scala and Python. Just deploy it to Kubernetes and use it!
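Under the hood, Sparkmagic talks to Livy's session endpoints: `POST /sessions` starts a session of a given kind, `POST /sessions/<id>/statements` runs code in it, and `GET /sessions/<id>/statements/<statement id>` returns the result. A minimal sketch of those calls, assuming Livy is reachable at `LIVY_URL` (for example through `kubectl port-forward --namespace livy livy-0 8998:8998`); the helper names are hypothetical.

```bash
# LIVY_URL is an assumption: a locally reachable Livy endpoint, e.g. after
#   kubectl port-forward --namespace livy livy-0 8998:8998
LIVY_URL="${LIVY_URL:-http://localhost:8998}"

# Hypothetical helper: POST a JSON body ($2) to a Livy API path ($1).
livy_post() {
  curl -s -H 'Content-Type: application/json' -X POST -d "$2" "${LIVY_URL}$1"
}

# Start an interactive session of the given kind, e.g. "spark" or "pyspark".
livy_create_session() {
  livy_post /sessions "{\"kind\": \"$1\"}"
}

# Run code in session $1. The code is embedded unescaped, so it must not
# contain double quotes or backslashes.
livy_run_statement() {
  livy_post "/sessions/$1/statements" "{\"code\": \"$2\"}"
}

# Fetch the state and output of statement $2 in session $1.
livy_statement_result() {
  curl -s "${LIVY_URL}/sessions/$1/statements/$2"
}
```

A typical flow is `livy_create_session pyspark`, waiting until `GET /sessions/<id>/state` reports `idle`, then `livy_run_statement 0 'spark.range(1000).count()'` and polling `livy_statement_result 0 0` until the statement state is `available`.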

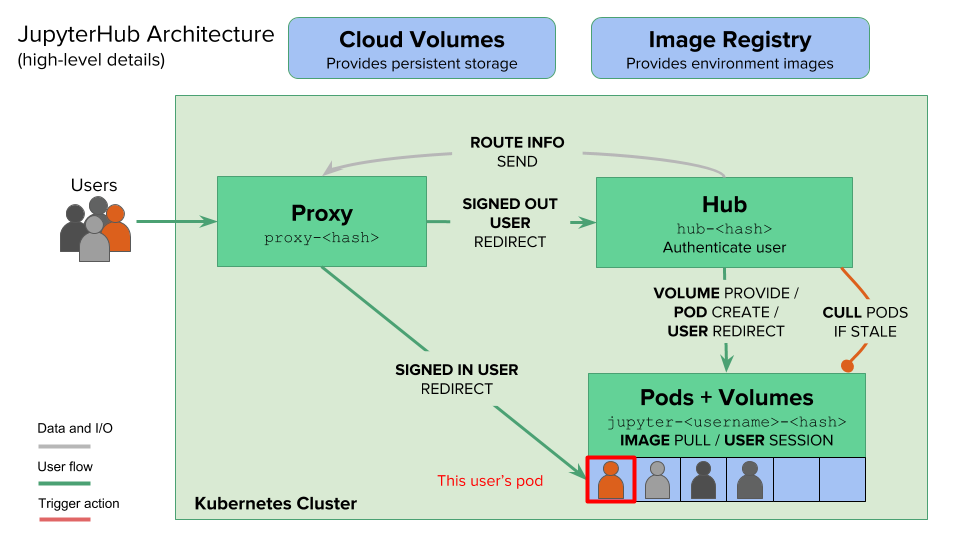

On top of Jupyter it is possible to set up JupyterHub, a multi-user Hub that spawns, manages and proxies multiple instances of the single-user Jupyter notebook server. Follow the video PyData 2018, London, JupyterHub from the Ground Up with Kubernetes - Camilla Montonen to learn the details of the implementation. JupyterHub can set up authentication through Azure AD with the AzureAdOAuthenticator plugin, as well as through many other OAuthenticator plugins.

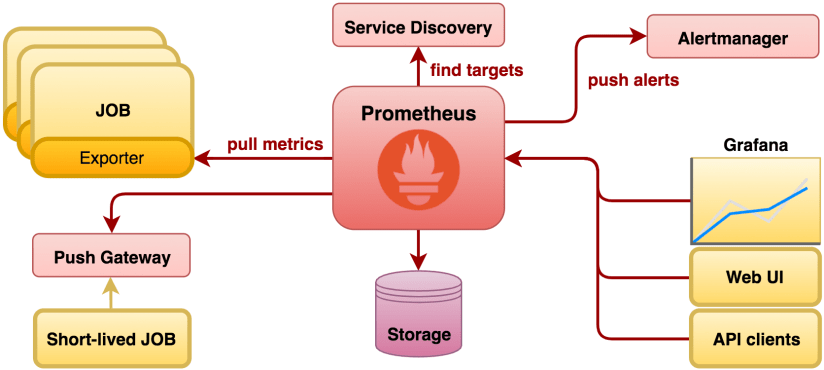

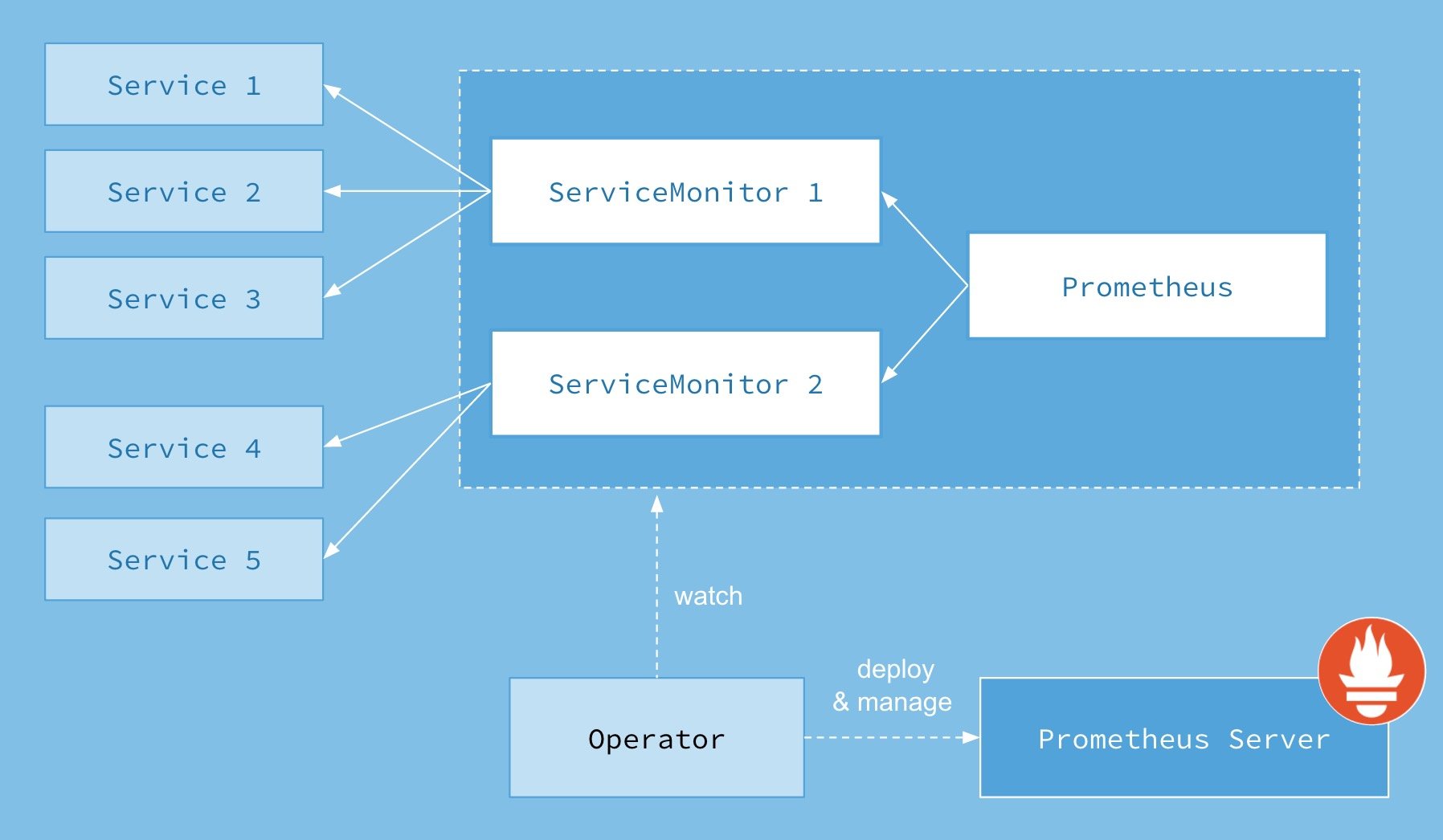

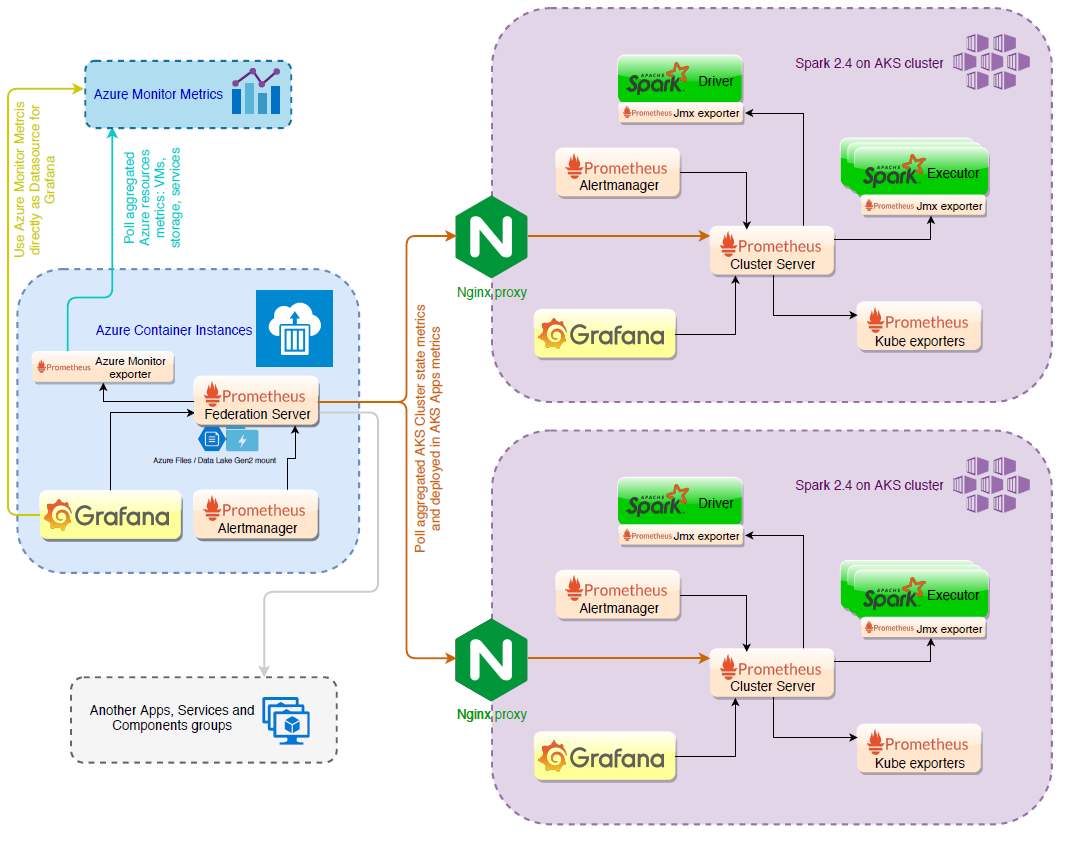

Monitoring of the Kubernetes cluster itself can be set up with the Prometheus Operator stack together with Prometheus Pushgateway and Grafana Loki, using a combined Helm chart that does the work in one click. Learn more about the stack from these videos:

- End to end monitoring with the Prometheus Operator

- Grafana Loki: Like Prometheus, But for logs. - Tom Wilkie, Grafana Labs

The overall monitoring architecture supports both the pull and the push model of metrics collection from the Kubernetes cluster and the services deployed to it. Prometheus Alertmanager provides an interface to set up an alerting system.

With the help of the JMX Exporter or a Pushgateway sink, we can get Spark metrics into the monitoring system. Grafana Loki provides out-of-the-box log aggregation for all Pods in the cluster and integrates natively with Grafana. Using the Grafana Azure Monitor datasource and the Prometheus Federation feature, you can set up a complex global monitoring architecture for your infrastructure.
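For the JMX Exporter route, the Spark job has to register its metrics as MBeans and run the exporter as a Java agent. A sketch that prints a Livy `conf` object doing both, assuming Spark honours `spark.metrics.conf.`-prefixed settings and that the agent jar and its YAML config are already baked into the Spark image; the paths, the port and the helper name are placeholders.

```bash
# Hypothetical helper: print a Livy "conf" JSON object that (1) enables Spark's
# built-in JmxSink for all metric instances and (2) attaches the Prometheus JMX
# Exporter Java agent to the driver and executors. The agent jar path, port
# and exporter config path are caller-supplied placeholders.
spark_jmx_conf_json() {
  local agent_jar="$1" port="$2" exporter_config="$3"
  local agent="-javaagent:${agent_jar}=${port}:${exporter_config}"
  printf '{"spark.metrics.conf.*.sink.jmx.class": "org.apache.spark.metrics.sink.JmxSink", '
  printf '"spark.driver.extraJavaOptions": "%s", ' "$agent"
  printf '"spark.executor.extraJavaOptions": "%s"}\n' "$agent"
}
```

The printed object can be used as the `"conf"` value of a `POST /batches` request; Prometheus then needs to scrape the chosen port on the driver and executor Pods.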

References:

- [LIVY-588][WIP]: Full support for Spark on Kubernetes

- Jupyter Sparkmagic kernel to integrate with Apache Livy

- Spark Summit 2016, Cloudera and Microsoft, Livy concepts and motivation

- PyData 2018, London, JupyterHub from the Ground Up with Kubernetes - Camilla Montonen

- End to end monitoring with the Prometheus Operator

- Grafana Loki: Like Prometheus, But for logs. - Tom Wilkie, Grafana Labs

- NGINX conf 2018, Using NGINX as a Kubernetes Ingress Controller