airalcorn2 / Strike With A Pose


Strike (With) A Pose

This is the companion tool to the paper:

Michael A. Alcorn, Qi Li, Zhitao Gong, Chengfei Wang, Long Mai, Wei-Shinn Ku, and Anh Nguyen. Strike (with) a pose: Neural networks are easily fooled by strange poses of familiar objects. Conference on Computer Vision and Pattern Recognition (CVPR). 2019.

Code to run experiments like those described in the paper can be found in the paper_code directory. The tool allows you to generate adversarial poses of objects with a graphical user interface. Please note that the included jeep object does not meet the realism standards set in the paper. Unfortunately, the school bus object shown in the GIF is proprietary and cannot be distributed with the tool. A browser port of the tool (created by Zhitao Gong) can be found here.

If you use this tool for your own research, please cite:

@article{alcorn-2019-strike-with-a-pose,
    Author = {Alcorn, Michael A. and Li, Qi and Gong, Zhitao and Wang, Chengfei and Mai, Long and Ku, Wei-Shinn and Nguyen, Anh},
    Title = {{Strike (with) a Pose: Neural Networks Are Easily Fooled by Strange Poses of Familiar Objects}},
    Journal = {Conference on Computer Vision and Pattern Recognition (CVPR)},
    Year = {2019}
}


Requirements

- Git (Linux users only)

- OpenGL ≥ 3.3 (many computers satisfy this requirement)

  - On Linux, you can check your OpenGL version with the following command (requires glx-utils on Fedora or mesa-utils on Ubuntu):

    glxinfo | grep "OpenGL version"

  - On Windows, you can use this tool.

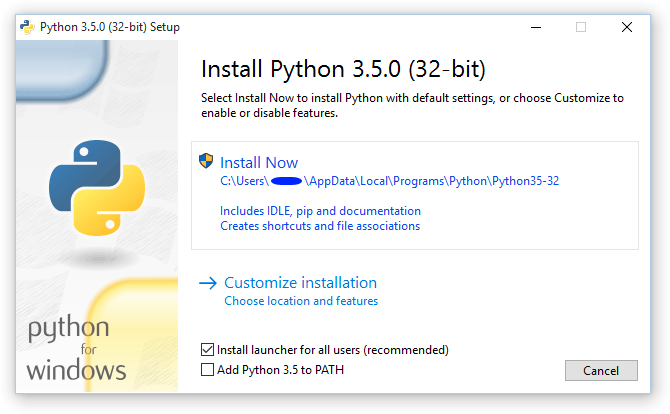

- Python 3 (Mac, Windows)

  - Mac users might also have to install SSL certificates for Python by double-clicking the file found at:

    /Applications/Python\ 3.x/Install\ Certificates.command

    where x is the minor version of your particular Python 3 install. If you used the above link to install Python, the file will be at: /Applications/Python\ 3.6/Install\ Certificates.command

  - Windows users should make sure they select the option to "Add Python 3.x to PATH", where "x" is the minor version of the Python 3 you are installing. If you forget to select this option, you can also add Python to the PATH yourself.
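If you want to script the Linux OpenGL check from the requirements above, the sketch below parses the line printed by `glxinfo | grep "OpenGL version"` and compares it against the 3.3 minimum. The function names are hypothetical (they are not part of Strike (With) A Pose), and the regular expression assumes glxinfo's usual `OpenGL version string: X.Y ...` output format.

```python
import re
from typing import Optional, Tuple

# Minimum OpenGL version listed in the requirements.
REQUIRED = (3, 3)


def parse_opengl_version(line: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) from a glxinfo line such as
    'OpenGL version string: 4.6.0 NVIDIA 535.54.03'.
    Returns None if the line does not have the expected format."""
    match = re.search(r"OpenGL version string:\s*(\d+)\.(\d+)", line)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def meets_requirement(line: str) -> bool:
    """True if the parsed version is at least REQUIRED."""
    version = parse_opengl_version(line)
    # Tuple comparison orders by major version first, then minor.
    return version is not None and version >= REQUIRED
```

For example, you could pass the line printed by glxinfo to `meets_requirement`; a result of False suggests your driver reports an OpenGL version below 3.3 (or that the line was not in the expected format).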

Install/Run

Note: the tool takes a little while to start the first time it's run because it has to download the neural network.

Linux

In the terminal, enter the following commands:

# Clone the strike-with-a-pose repository.

git clone https://github.com/airalcorn2/strike-with-a-pose.git

# Move to the strike-with-a-pose directory.

cd strike-with-a-pose

# Install strike-with-a-pose.

pip3 install .

# Run strike-with-a-pose.

strike-with-a-pose

You can also run the tool (after installing) by starting Python and entering the following:

from strike_with_a_pose import app

app.run_gui()

Mac

- Click here to download the tool ZIP.

- Extract the ZIP somewhere convenient (like your desktop).

- Double-click install.command in the strike-with-a-pose-master/ directory.

  - Note: you may have to adjust your security settings to allow applications from "unidentified developers".

- Double-click strike-with-a-pose.command in the strike-with-a-pose-master/run/ directory.

Windows

- Click here to download the tool ZIP.

- Extract the ZIP somewhere convenient (like your desktop).

- Double-click install.bat in the strike-with-a-pose-master\ directory.

  - Note: you may need to click "More info" and then "Run anyway".

- Double-click strike-with-a-pose.bat in the strike-with-a-pose-master\run\ directory.

For Experts

Using Different Objects and Backgrounds

Users can test their own objects and backgrounds in Strike (With) A Pose by:

- Adding the appropriate files to the scene_files/ directory.
- Modifying the BACKGROUND_F, OBJ_F, and MTL_F variables in settings.py accordingly.
- Running the following command inside the strike-with-a-pose/ directory:

PYTHONPATH=strike_with_a_pose python3 -m strike_with_a_pose.app
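When swapping in your own object, a quick look at the .obj file can catch mismatches before you edit settings.py. The sketch below is a hypothetical helper (not part of the tool) that assumes a standard Wavefront OBJ file: it counts vertices (`v`), texture coordinates (`vt`), vertex normals (`vn`), and faces (`f`), and collects the .mtl files named by `mtllib` statements, which you would typically expect to match the file you set as MTL_F.

```python
from collections import Counter
from typing import Dict, List, Union


def summarize_obj(obj_text: str) -> Dict[str, Union[int, List[str]]]:
    """Count common Wavefront OBJ statements and collect mtllib references."""
    counts: Counter = Counter()
    mtllibs: List[str] = []
    for line in obj_text.splitlines():
        parts = line.split()
        # Skip blank lines and comment lines.
        if not parts or parts[0].startswith("#"):
            continue
        counts[parts[0]] += 1
        if parts[0] == "mtllib":
            # One mtllib statement may name several .mtl files.
            mtllibs.extend(parts[1:])
    return {
        "vertices": counts["v"],
        "texture_coords": counts["vt"],
        "normals": counts["vn"],
        "faces": counts["f"],
        "mtllib": mtllibs,
    }
```

A zero in the "normals" entry, for instance, would be worth investigating, since vertex normals affect how the directional light interacts with the object.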

Using Different Machine Learning Models

Users can experiment with different machine learning models in Strike (With) A Pose by:

- Defining a model class that implements the get_gui_comps, init_scene_comps, predict, render, and clear functions (e.g., image_classifier.py, object_detector.py, image_captioner.py, and class_activation_mapper.py [with major contributions by Qi Li]).
- Setting the MODEL variable in settings.py accordingly.
- Running the following command inside the strike-with-a-pose/ directory:

PYTHONPATH=strike_with_a_pose python3 -m strike_with_a_pose.app
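The list above names the five methods a model class must provide but not their arguments, so the sketch below only checks that a candidate class defines each of them. `REQUIRED_METHODS`, `missing_methods`, and `MyModel` are hypothetical names; the `*args, **kwargs` placeholders stand in for whatever arguments the tool actually passes, which you should copy from an existing model such as image_classifier.py.

```python
from typing import List

# Method names listed in the README; their real signatures live in the
# tool's existing model classes (e.g., image_classifier.py).
REQUIRED_METHODS = ("get_gui_comps", "init_scene_comps", "predict", "render", "clear")


def missing_methods(model_cls: type) -> List[str]:
    """Return the required method names that model_cls does not define as callables."""
    return [name for name in REQUIRED_METHODS if not callable(getattr(model_cls, name, None))]


class MyModel:
    """Hypothetical skeleton; replace *args/**kwargs with the real parameters."""

    def get_gui_comps(self, *args, **kwargs):
        raise NotImplementedError

    def init_scene_comps(self, *args, **kwargs):
        raise NotImplementedError

    def predict(self, *args, **kwargs):
        raise NotImplementedError

    def render(self, *args, **kwargs):
        raise NotImplementedError

    def clear(self, *args, **kwargs):
        raise NotImplementedError


if __name__ == "__main__":
    # An empty list means every required method is present.
    print(missing_methods(MyModel))
```

An empty result only confirms the methods exist; each one still has to be implemented, and MODEL in settings.py has to point at the new class.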

To use the image captioner model, first download and install the COCO API:

git clone https://github.com/pdollar/coco.git

cd coco/PythonAPI/

make

python3 setup.py build

python3 setup.py install
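The COCO API's PythonAPI directory installs a package named pycocotools, so one quick way to confirm the install worked before switching MODEL to the image captioner is to check that Python can locate it. This is a standard-library-only sketch with a hypothetical function name.

```python
import importlib.util


def coco_api_installed() -> bool:
    """Return True if the pycocotools package (the COCO Python API) can be found."""
    # find_spec returns None when a top-level package cannot be located.
    return importlib.util.find_spec("pycocotools") is not None


if __name__ == "__main__":
    print("COCO API found" if coco_api_installed() else "COCO API missing")
```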

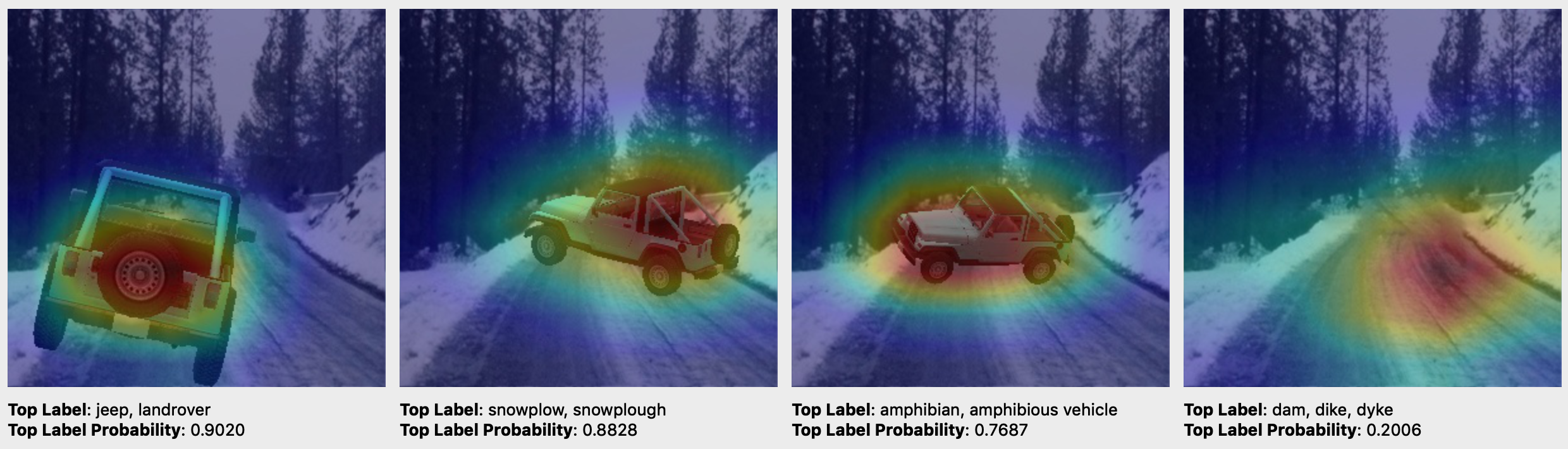

Image Classifier

Object Detector

"The Elephant in the Room"-like (Rosenfeld et al., 2018) examples:

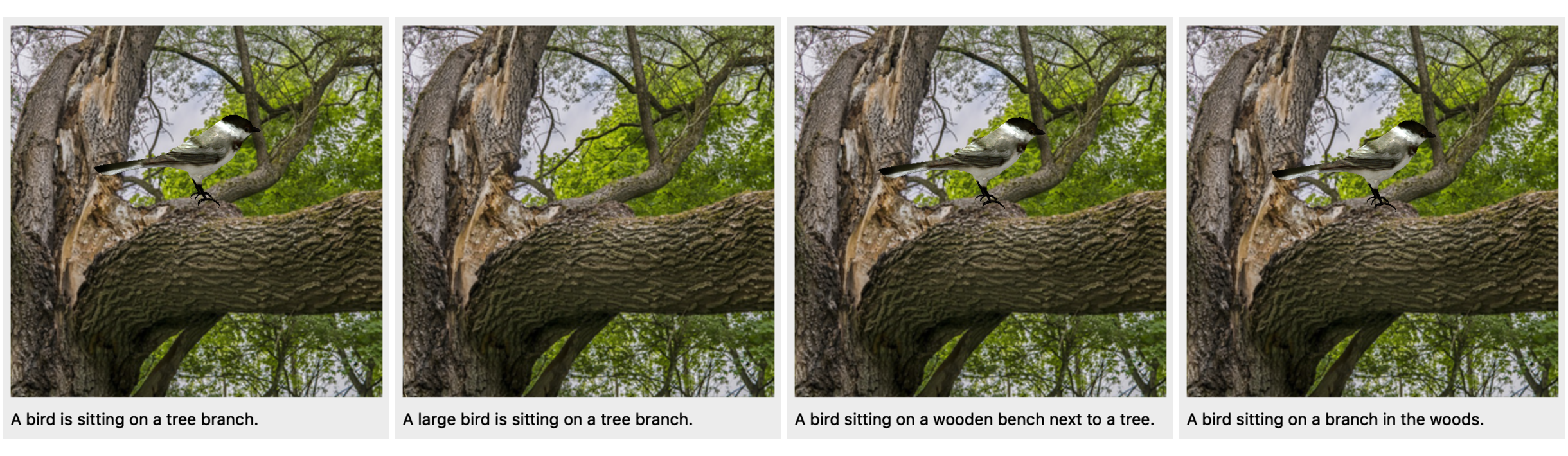

Image Captioner

Class Activation Mapper

"Learning Deep Features for Discriminative Localization"-like (Zhou et al., 2016) examples.

Additional Features

Press L to toggle Live mode. When Live mode is on, the machine learning model continuously generates predictions. Note that Live mode can cause considerable lag unless you have all three of the following: (1) a powerful GPU, (2) CUDA installed, and (3) a PyTorch version with CUDA support installed.
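To see whether PyTorch can actually use a CUDA GPU before turning on Live mode, you can ask it directly. The sketch below uses `torch.version.cuda` (None for CPU-only builds) and `torch.cuda.is_available()`; the function name is hypothetical, and it cannot tell you whether your GPU is powerful enough to avoid lag.

```python
from typing import Dict


def live_mode_check() -> Dict[str, bool]:
    """Report whether PyTorch is installed with CUDA support and can see a GPU."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False, "cuda_build": False, "gpu_available": False}
    return {
        "torch_installed": True,
        # torch.version.cuda is None for CPU-only PyTorch builds.
        "cuda_build": torch.version.cuda is not None,
        # True only if PyTorch can use a CUDA device right now.
        "gpu_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    print(live_mode_check())
```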

Press X to toggle the object's teXture, which is useful for making sure the directional light is properly interacting with your object. If the lighting looks wrong, swapping or negating the vertex normal coordinates can usually fix it. See the fix_normals.py script for an example.
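The sketch below illustrates the kind of swap or negation described above, applied to the `vn` (vertex normal) lines of an .obj file. It is a hypothetical helper, not the repository's fix_normals.py, and which axes to swap or negate depends on how your model was exported, so you may need to try a few combinations and compare the results with the texture toggled off via X.

```python
from typing import Sequence


def fix_normals(obj_text: str, order: Sequence[int] = (0, 1, 2),
                signs: Sequence[int] = (1, 1, 1)) -> str:
    """Reorder and/or negate the x, y, z components of every 'vn' line.

    Component i of each new normal is signs[i] * old[order[i]], so
    order=(0, 2, 1) swaps y and z, and signs=(1, 1, -1) negates the new z.
    All other lines are passed through unchanged.
    """
    out = []
    for line in obj_text.splitlines():
        parts = line.split()
        if len(parts) >= 4 and parts[0] == "vn":
            old = [float(p) for p in parts[1:4]]
            new = [signs[i] * old[order[i]] for i in range(3)]
            # ':g' keeps up to 6 significant digits, plenty for unit normals.
            out.append("vn " + " ".join(f"{c:g}" for c in new))
        else:
            out.append(line)
    result = "\n".join(out)
    # splitlines() drops the final newline, so restore it if it was there.
    return result + "\n" if obj_text.endswith("\n") else result
```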

Press F to toggle back-Face culling, which is necessary when rendering certain models (like some found in ShapeNet).
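For intuition about what the F toggle controls: with OpenGL's default settings, a triangle is front-facing when its projected vertices are ordered counter-clockwise, and back-face culling discards the clockwise (back-facing) ones. The sketch below applies that winding-order test to a single 2D triangle; in an OpenGL renderer this test is performed by the GPU rather than by Python code, so this only illustrates the idea, and the function name is hypothetical.

```python
from typing import Tuple

Point = Tuple[float, float]


def is_back_facing(a: Point, b: Point, c: Point) -> bool:
    """Return True if the screen-space triangle (a, b, c) has clockwise winding.

    Assumes OpenGL's defaults (counter-clockwise front faces, back faces
    culled) and a y-up coordinate system.
    """
    # Twice the signed area: positive for counter-clockwise winding.
    signed_area2 = (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])
    return signed_area2 < 0
```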

Press I to bring up the Individual component selector. This feature allows you to display individual object components (as defined by each newmtl in the .mtl file) by themselves.
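Because the Individual component selector works from the newmtl entries in the .mtl file, it can be handy to list those names before loading a new object. The function below is a hypothetical standard-library helper, not part of the tool.

```python
from typing import List


def material_names(mtl_text: str) -> List[str]:
    """Return the material names declared by 'newmtl' statements, in file order."""
    names = []
    for line in mtl_text.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0] == "newmtl":
            # Rejoin the remainder in case an exporter used spaces in a name.
            names.append(" ".join(parts[1:]))
    return names
```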

Press C to Capture a screenshot of the current render. Screenshots are saved in the directory where the tool is started.