Switchable Normalization

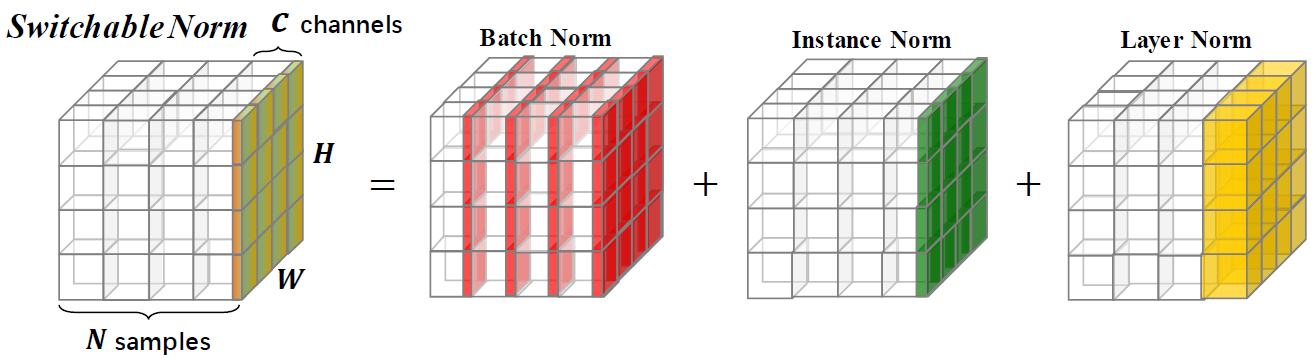

Switchable Normalization (SN) is a normalization technique that learns a different normalization operation for each normalization layer of a deep neural network, in an end-to-end manner.
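A minimal sketch of the idea, assuming the formulation in the paper cited below: each layer mixes the statistics of instance (IN), layer (LN), and batch (BN) normalization with softmax weights that are learned per layer. The function names, the nested-list layout `x[n][c]`, and the omission of the learned scale and shift are simplifications for illustration; this is not the repository's implementation.

```python
import math


def softmax(logits):
    """Turn per-layer control logits into mixing weights that sum to 1."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mean_var(values):
    """Mean and (biased) variance of a flat list of activations."""
    mu = sum(values) / len(values)
    var = sum((v - mu) ** 2 for v in values) / len(values)
    return mu, var


def switchable_norm(x, mean_logits, var_logits, eps=1e-5):
    """Normalize x, where x[n][c] is the flat list of spatial activations
    of sample n and channel c. Logits are ordered (IN, LN, BN)."""
    num_samples, num_channels = len(x), len(x[0])
    w_mean = softmax(mean_logits)
    w_var = softmax(var_logits)
    # LN statistics: one (mean, var) per sample, over all channels and positions.
    ln = [mean_var([v for c in range(num_channels) for v in x[n][c]])
          for n in range(num_samples)]
    # BN statistics: one (mean, var) per channel, over the batch and positions.
    bn = [mean_var([v for n in range(num_samples) for v in x[n][c]])
          for c in range(num_channels)]
    out = []
    for n in range(num_samples):
        sample = []
        for c in range(num_channels):
            # IN statistics: one (mean, var) per sample and channel.
            in_mu, in_var = mean_var(x[n][c])
            mu = w_mean[0] * in_mu + w_mean[1] * ln[n][0] + w_mean[2] * bn[c][0]
            var = w_var[0] * in_var + w_var[1] * ln[n][1] + w_var[2] * bn[c][1]
            sample.append([(v - mu) / math.sqrt(var + eps) for v in x[n][c]])
        out.append(sample)
    return out
```

Because the weights come from a softmax over learnable logits, gradient descent can push one layer toward BN and another toward IN or LN. In this sketch, a batch containing a single sample makes the BN statistics coincide with the IN statistics.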

Update

- 2019/3/21: Released a distributed training framework and a face recognition framework. We also released a PyTorch implementation of SyncBN and SyncSN for small-batch tasks such as segmentation and detection. For more details about SyncBN and SyncSN, refer to this.

- 2018/7/27: The pretrained models of ResNet50+SN(8,1) and SN(8,4) have been released. These models may help in the finetuning stage when the batch size of a target task is constrained to be small. We also released the pretrained model of ResNet101v2+SN, which achieves 78.81%/94.16% top-1/top-5 accuracy on ImageNet. More pretrained models will be released soon!

- 2018/7/26: The code for object detection has been released in the repository of SwitchNorm_Detection.

- 2018/7/9: We would like to explain the merit behind SN. See html preview or this blog (in Chinese).

- 2018/7/4: Model zoo updated!

- 2018/7/2: The code of image classification and a pretrained model on ImageNet are released.

Citation

This repository provides ImageNet classification results and models trained with Switchable Normalization. You are encouraged to cite the following paper if you use SN in your research.

@article{SwitchableNorm,

title={Differentiable Learning-to-Normalize via Switchable Normalization},

author={Ping Luo and Jiamin Ren and Zhanglin Peng and Ruimao Zhang and Jingyu Li},

journal={International Conference on Learning Representations (ICLR)},

year={2019}

}

Overview of Results

Image Classification in ImageNet

Comparison of top-1 accuracies on the ImageNet validation set for ResNet50 trained with SN, BN, and GN under different batch-size settings. The bracket (·, ·) denotes (#GPUs, #samples per GPU). In the bottom rows, “GN−BN” denotes the accuracy of GN minus that of BN, and likewise for “SN−BN” and “SN−GN”. The “–” in the (8,1) column for BN indicates that training does not converge.

| | (8,32) | (8,16) | (8,8) | (8,4) | (8,2) | (1,16) | (1,32) | (8,1) | (1,8) |
|---|---|---|---|---|---|---|---|---|---|
| BN | 76.4 | 76.3 | 75.2 | 72.7 | 65.3 | 76.2 | 76.5 | – | 75.4 |
| GN | 75.9 | 75.8 | 76.0 | 75.8 | 75.9 | 75.9 | 75.8 | 75.5 | 75.5 |
| SN | 76.9 | 76.7 | 76.7 | 75.9 | 75.6 | 76.3 | 76.6 | 75.0* | 75.9 |
| GN−BN | -0.5 | -0.5 | 0.8 | 3.1 | 10.6 | -0.3 | -0.7 | – | 0.1 |
| SN−BN | 0.5 | 0.4 | 1.5 | 3.2 | 10.3 | 0.1 | 0.1 | – | 0.5 |
| SN−GN | 1.0 | 0.9 | 0.7 | 0.1 | -0.3 | 0.4 | 0.8 | -0.5 | 0.4 |
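The difference rows follow directly from the accuracy rows. The short sketch below recomputes them from the reported top-1 numbers; the names `settings`, `top1`, and `gap` are illustrative only, and `None` stands for the BN run that did not converge.

```python
# Top-1 accuracies copied from the table above, one entry per batch setting.
settings = ["(8,32)", "(8,16)", "(8,8)", "(8,4)", "(8,2)",
            "(1,16)", "(1,32)", "(8,1)", "(1,8)"]
top1 = {
    "BN": [76.4, 76.3, 75.2, 72.7, 65.3, 76.2, 76.5, None, 75.4],
    "GN": [75.9, 75.8, 76.0, 75.8, 75.9, 75.9, 75.8, 75.5, 75.5],
    "SN": [76.9, 76.7, 76.7, 75.9, 75.6, 76.3, 76.6, 75.0, 75.9],
}


def gap(a, b):
    """Accuracy of method a minus method b per setting, rounded to 0.1.
    Returns None where either run has no reported accuracy."""
    return [
        None if x is None or y is None else round(x - y, 1)
        for x, y in zip(top1[a], top1[b])
    ]


for name_a, name_b in [("GN", "BN"), ("SN", "BN"), ("SN", "GN")]:
    row = dict(zip(settings, gap(name_a, name_b)))
    print(f"{name_a}-{name_b}: {row}")
```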

Model Zoo

We provide models pretrained with SN on ImageNet and list models pretrained with BN as a reference. If you use these models in research, please cite the SN paper. The configuration of SN is denoted as (#GPUs, #images per GPU).

| Model | Top-1* | Top-5* | Epochs | LR Scheduler | Weight Decay | Download |
|---|---|---|---|---|---|---|
| ResNet101v2+SN (8,32) | 78.81% | 94.16% | 120 | warmup + cosine lr | 1e-4 | [Google Drive] [Baidu Pan] |
| ResNet101v1+SN (8,32) | 78.54% | 94.10% | 120 | warmup + cosine lr | 1e-4 | [Google Drive] [Baidu Pan] |
| ResNet50v2+SN (8,32) | 77.57% | 93.65% | 120 | warmup + cosine lr | 1e-4 | [Google Drive] [Baidu Pan] |
| ResNet50v1+SN (8,32) | 77.49% | 93.32% | 120 | warmup + cosine lr | 1e-4 | [Google Drive] [Baidu Pan] |
| ResNet50v1+SN (8,32) | 76.92% | 93.26% | 100 | Initial lr=0.1 decay=0.1 steps[30,60,90,10] | 1e-4 | [Google Drive] [Baidu Pan] |
| ResNet50v1+SN (8,4) | 75.85% | 92.7% | 100 | Initial lr=0.0125 decay=0.1 steps[30,60,90,10] | 1e-4 | [Google Drive] [Baidu Pan] |
| ResNet50v1+SN (8,1)† | 75.94% | 92.7% | 100 | Initial lr=0.003125 decay=0.1 steps[30,60,90,10] | 1e-4 | [Google Drive] [Baidu Pan] |
| ResNet50v1+BN | 75.20% | 92.20% | -- | stepwise decay | -- | [TensorFlow models] |
| ResNet50v1+BN | 76.00% | 92.98% | -- | stepwise decay | -- | [PyTorch Vision] |
| ResNet50v1+BN | 75.30% | 92.20% | -- | stepwise decay | -- | [MSRA] |
| ResNet50v1+BN | 75.99% | 92.98% | -- | stepwise decay | -- | [FB Torch] |

*single-crop validation accuracy on ImageNet (a 224x224 center crop from resized image with shorter side=256)

†For (8,1), SN contains IN and LN without BN, because BN is identical to IN during training when each GPU holds a single sample. When using this model, add using_bn : False to the YAML file.
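The initial learning rates listed for the stepwise-decay ResNet50v1+SN models are consistent with scaling the learning rate linearly with the total batch size, taking lr=0.1 at (8,32) as the reference. The README does not state this rule, so treat the sketch below as an observation about the table rather than the authors' recipe; `scaled_lr` and its parameters are hypothetical names.

```python
def scaled_lr(num_gpus, samples_per_gpu, base_lr=0.1, base_batch=8 * 32):
    """Scale the learning rate in proportion to the total batch size.

    base_lr and base_batch are taken from the (8,32) row of the table;
    the linear rule itself is an assumption.
    """
    total_batch = num_gpus * samples_per_gpu
    return base_lr * total_batch / base_batch


for num_gpus, samples_per_gpu in [(8, 32), (8, 4), (8, 1)]:
    lr = scaled_lr(num_gpus, samples_per_gpu)
    print(f"({num_gpus},{samples_per_gpu}): initial lr = {lr}")
```

When finetuning from the (8,4) or (8,1) models with a different total batch size, the same proportional adjustment is a reasonable starting point, though the best value still depends on the task.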

License

All materials in this repository are released under the CC-BY-NC 4.0 LICENSE.