DmitryUlyanov / Texture_nets

Texture Networks + Instance normalization: Feed-forward Synthesis of Textures and Stylized Images

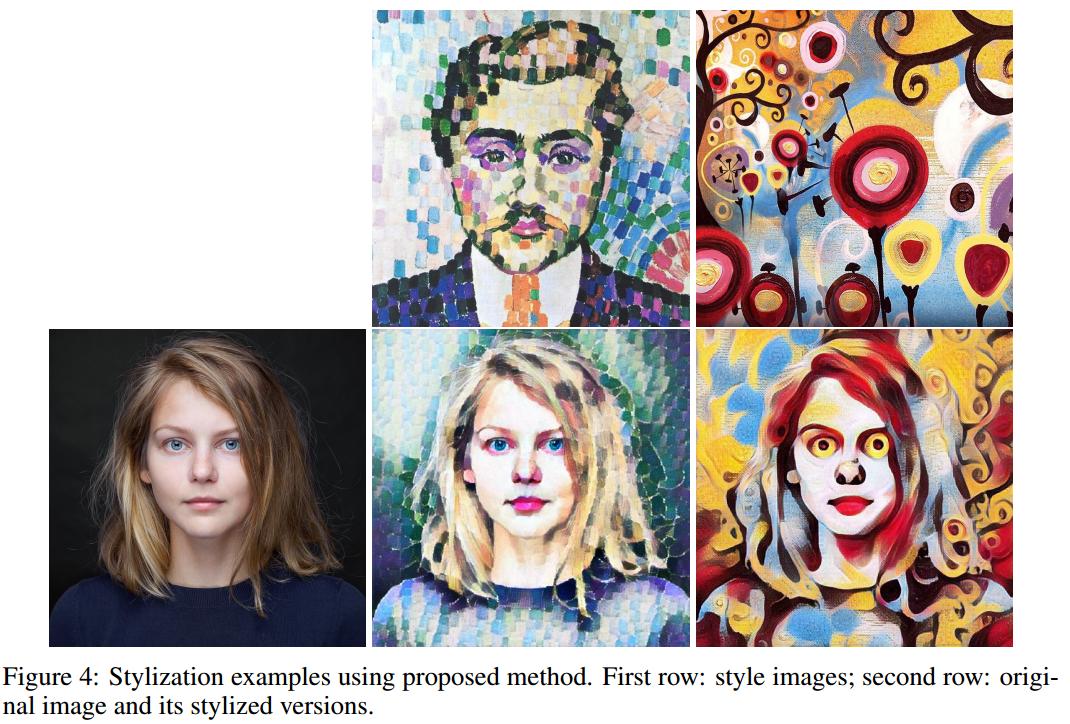

In the paper Texture Networks: Feed-forward Synthesis of Textures and Stylized Images we describe a faster way to generate textures and stylize images. It requires training a feed-forward generator with the loss function proposed by Gatys et al. Once the model is trained, a texture sample or stylized image of any size can be generated in a single forward pass.
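To make the loss mentioned above more concrete: the style term of Gatys et al. compares Gram matrices (channel-by-channel correlations) of feature maps from the generated image and the style image. The following is a minimal Python sketch, not the Lua code in this repository. The function names `gram_matrix` and `style_loss`, the nested-list feature layout, and the normalization by the number of spatial positions are assumptions made for illustration.

```python
def gram_matrix(features):
    """Gram matrix of one layer's feature maps.

    `features` is a list of C channels, each a flat list of N activations
    (the spatial positions of that channel, in any fixed order).
    Dividing by N is one common normalization, assumed here for illustration.
    """
    n = len(features[0])
    return [
        [sum(a * b for a, b in zip(fi, fj)) / n for fj in features]
        for fi in features
    ]


def style_loss(generated, target):
    """Sum of squared differences between the two Gram matrices."""
    g = gram_matrix(generated)
    t = gram_matrix(target)
    return sum(
        (gv - tv) ** 2
        for g_row, t_row in zip(g, t)
        for gv, tv in zip(g_row, t_row)
    )
```

Because the Gram matrix sums over spatial positions, it does not depend on where a pattern appears in the image, only on which channels fire together. This is why it captures texture rather than layout.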

Improved Texture Networks: Maximizing Quality and Diversity in Feed-forward Stylization and Texture Synthesis presents a better architectural design for the generator network. By replacing batch normalization with instance normalization we make training easier, which results in much better quality.
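The difference between the two normalization schemes can be sketched in a few lines of plain Python. This is only an illustration, not the Lua layers used in this repository. The function names, the `batch[n][c]` nested-list layout, and the `eps` value are assumptions, and the learned scale and shift parameters are left out for brevity.

```python
import math


def instance_norm(batch, eps=1e-5):
    """Normalize every channel of every sample using its own statistics.

    batch[n][c] is a flat list of spatial activations for sample n, channel c.
    """
    out = []
    for sample in batch:
        normed = []
        for channel in sample:
            mean = sum(channel) / len(channel)
            var = sum((x - mean) ** 2 for x in channel) / len(channel)
            normed.append([(x - mean) / math.sqrt(var + eps) for x in channel])
        out.append(normed)
    return out


def batch_norm(batch, eps=1e-5):
    """Normalize each channel using statistics pooled over the whole batch."""
    n_channels = len(batch[0])
    out = [[None] * n_channels for _ in batch]
    for c in range(n_channels):
        values = [x for sample in batch for x in sample[c]]
        mean = sum(values) / len(values)
        var = sum((x - mean) ** 2 for x in values) / len(values)
        for n, sample in enumerate(batch):
            out[n][c] = [(x - mean) / math.sqrt(var + eps) for x in sample[c]]
    return out
```

With instance normalization, the output for one image does not depend on the other images in the batch, so a bright image and a dark image with the same structure are mapped to nearly the same normalized features.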

This code also implements the stylization part from Perceptual Losses for Real-Time Style Transfer and Super-Resolution.

You can find an online demo here (thanks to RiseML).

Prerequisites

Download VGG-19.

cd data/pretrained && bash download_models.sh && cd ../..

Stylization

Training

Preparing image dataset

You can use an image dataset of any kind. In my experiments I used the ImageNet and MS COCO datasets. The folder structure should be as follows:

dataset/train

dataset/train/dummy

dataset/val/

dataset/val/dummy

The dummy folders should contain images. The dataloader is based on the one used in fb.resnet.torch.

Here is a quick example for MS COCO:

wget http://msvocds.blob.core.windows.net/coco2014/train2014.zip

wget http://msvocds.blob.core.windows.net/coco2014/val2014.zip

unzip train2014.zip

unzip val2014.zip

mkdir -p dataset/train

mkdir -p dataset/val

ln -s `pwd`/val2014 dataset/val/dummy

ln -s `pwd`/train2014 dataset/train/dummy
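Before starting a long training run it can help to confirm that the folder layout described above is in place. The following Python helper is a hypothetical sketch and not part of this repository. The name `count_images` and the set of accepted file extensions are assumptions, and the actual dataloader may accept other formats.

```python
from pathlib import Path

# Assumed set of image extensions; adjust to match your dataset.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def count_images(root):
    """Count image files in <root>/train/dummy and <root>/val/dummy.

    Raises FileNotFoundError if either folder is missing. Symlinked
    folders (as created with `ln -s`) are followed.
    """
    counts = {}
    for split in ("train", "val"):
        folder = Path(root) / split / "dummy"
        if not folder.is_dir():
            raise FileNotFoundError(f"missing folder: {folder}")
        counts[split] = sum(
            1 for p in folder.iterdir() if p.suffix.lower() in IMAGE_EXTENSIONS
        )
    return counts
```

For example, `count_images("dataset")` returns a dictionary with the number of images found for the `train` and `val` splits. A count of zero usually means a symlink points to the wrong place.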

Training a network

Basic usage:

th train.lua -data <path to any image dataset> -style_image path/to/img.jpg

These parameters work for me:

th train.lua -data <path to any image dataset> -style_image path/to/img.jpg -style_size 600 -image_size 512 -model johnson -batch_size 4 -learning_rate 1e-2 -style_weight 10 -style_layers relu1_2,relu2_2,relu3_2,relu4_2 -content_layers relu4_2

Check out the issues tab; you will find some useful advice there.

To achieve the results from the paper you need to play with -image_size, -style_size, -style_layers, -content_layers, -style_weight, -tv_weight.
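Since finding good settings takes some trial and error, one option is to generate the training commands for a small grid of values and run them one by one. The helper below is a hypothetical Python sketch. It only uses flags that appear in this README, and the function name `sweep_commands` and the example values are assumptions, not recommended settings.

```python
import itertools
import shlex


def sweep_commands(data_dir, style_image, grid):
    """Build one `th train.lua` command line per combination in `grid`.

    `grid` maps a flag name without the leading dash (e.g. "style_weight")
    to a list of values to try. Flag names are processed in sorted order.
    """
    names = sorted(grid)
    commands = []
    for values in itertools.product(*(grid[name] for name in names)):
        args = ["th", "train.lua", "-data", data_dir, "-style_image", style_image]
        for name, value in zip(names, values):
            args += ["-" + name, str(value)]
        commands.append(shlex.join(args))  # shlex.join needs Python 3.8+
    return commands
```

For instance, `sweep_commands("dataset", "path/to/img.jpg", {"style_weight": [5, 10], "style_size": [256, 600]})` yields four command lines, one per combination, which can be printed and pasted into a shell.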

Do not hesitate to set -batch_size to one, but remember that the larger the -batch_size, the larger the -learning_rate you can use.

Testing

th test.lua -input_image path/to/image.jpg -model_t7 data/checkpoints/model.t7

Play with -image_size here. Pass the -cpu flag to run the processing on the CPU.

You can find a pretrained model here. It is not the model from the paper.

Generating textures

soon

Hardware

- The code was tested with 12GB NVIDIA Titan X GPU and Ubuntu 14.04.

- You may decrease batch_size and image_size if the model does not fit in your GPU memory.

- The pretrained models do not need much memory to sample.

Credits

The code is based on Justin Johnson's great code for artistic style.