lilianweng / Transformer Tensorflow

Implementation of Transformer Model in Tensorflow

Stars: ✭ 286

Programming Languages

python


Projects that are alternatives to or similar to Transformer Tensorflow

galerkin-transformer

[NeurIPS 2021] Galerkin Transformer: a linear attention without softmax

Stars: ✭ 111 (-61.19%)

Mutual labels: transformer

Deep Learning In Production

In this repository, I will share some useful notes and references about deploying deep learning-based models in production.

Stars: ✭ 3,104 (+985.31%)

Mutual labels: tensorflow-models

Transformer

Implementation of Transformer model (originally from Attention is All You Need) applied to Time Series.

Stars: ✭ 273 (-4.55%)

Mutual labels: transformer

Swin-Transformer-Tensorflow

Unofficial implementation of "Swin Transformer: Hierarchical Vision Transformer using Shifted Windows" (https://arxiv.org/abs/2103.14030)

Stars: ✭ 45 (-84.27%)

Mutual labels: transformer

bert in a flask

A dockerized flask API, serving ALBERT and BERT predictions using TensorFlow 2.0.

Stars: ✭ 32 (-88.81%)

Mutual labels: transformer

Multiple Relations Extraction Only Look Once

Multiple-Relations-Extraction-Only-Look-Once. Just look at the sentence once and extract the multiple pairs of entities and their corresponding relations. An end-to-end joint multi-relation extraction model that can be used for the information extraction task at http://lic2019.ccf.org.cn/kg.

Stars: ✭ 269 (-5.94%)

Mutual labels: tensorflow-models

TextPruner

A PyTorch-based model pruning toolkit for pre-trained language models

Stars: ✭ 94 (-67.13%)

Mutual labels: transformer

ai challenger 2018 sentiment analysis

Fine-grained Sentiment Analysis of User Reviews --- AI CHALLENGER 2018

Stars: ✭ 16 (-94.41%)

Mutual labels: transformer

uformer-pytorch

Implementation of Uformer, Attention-based Unet, in Pytorch

Stars: ✭ 54 (-81.12%)

Mutual labels: transformer

AITQA

resources for the IBM Airlines Table-Question-Answering Benchmark

Stars: ✭ 12 (-95.8%)

Mutual labels: transformer

Allrank

allRank is a framework for training learning-to-rank neural models based on PyTorch.

Stars: ✭ 269 (-5.94%)

Mutual labels: transformer

SwinIR

SwinIR: Image Restoration Using Swin Transformer (official repository)

Stars: ✭ 1,260 (+340.56%)

Mutual labels: transformer

SIGIR2021 Conure

One Person, One Model, One World: Learning Continual User Representation without Forgetting

Stars: ✭ 23 (-91.96%)

Mutual labels: transformer

Nlp Interview Notes

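Study notes and materials for natural language processing (NLP) interview preparation, compiled by the authors from their personal interviews and experience. The collection currently contains interview questions covering the various areas of natural language processing.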

Stars: ✭ 207 (-27.62%)

Mutual labels: transformer

Viewpagertransition

viewpager with parallax pages, together with vertical sliding (or click) and activity transition

Stars: ✭ 3,017 (+954.9%)

Mutual labels: transformer

Bmw Tensorflow Inference Api Gpu

This is a repository for an object detection inference API using the Tensorflow framework.

Stars: ✭ 277 (-3.15%)

Mutual labels: tensorflow-models

Remi

"Pop Music Transformer: Beat-based Modeling and Generation of Expressive Pop Piano Compositions", ACM Multimedia 2020

Stars: ✭ 273 (-4.55%)

Mutual labels: transformer

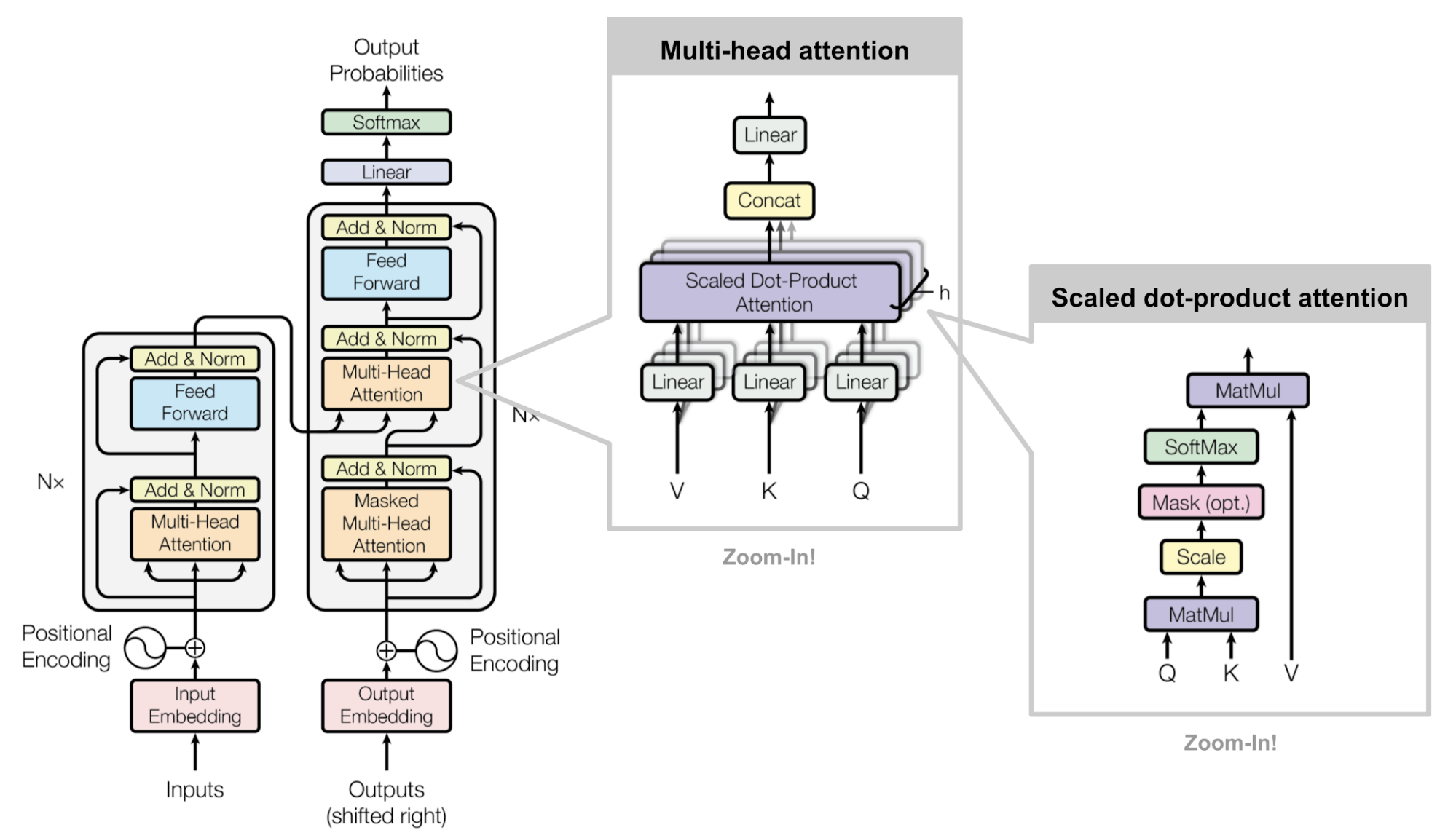

Transformer

Implementation of the Transformer model in the paper:

Ashish Vaswani, et al. "Attention is all you need." NIPS 2017.
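The central operation of the model in the paper is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V. The following is a minimal pure-Python sketch of that formula on small lists of vectors. It is not code from this repository, which works on TensorFlow tensors. The function names and the boolean mask convention (False means "do not attend") are assumptions made for this illustration.

```python
import math
from typing import List, Optional

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix,
                                 mask: Optional[List[List[bool]]] = None) -> Matrix:
    """Compute softmax(Q K^T / sqrt(d_k)) V for a single attention head.

    q: [len_q][d_k], k: [len_k][d_k], v: [len_k][d_v].
    mask[i][j] == False blocks query i from attending to key j
    (a hypothetical convention chosen for this sketch).
    """
    d_k = len(k[0])
    output = []
    for i, q_row in enumerate(q):
        scores = []
        for j, k_row in enumerate(k):
            s = sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k)
            if mask is not None and not mask[i][j]:
                s = -1e9  # large negative score -> (near-)zero weight after softmax
            scores.append(s)
        weights = softmax(scores)
        # Output row i is the attention-weighted sum of the value vectors.
        output.append([sum(w * v_row[c] for w, v_row in zip(weights, v))
                       for c in range(len(v[0]))])
    return output


q = [[1.0, 0.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[10.0, 0.0], [0.0, 10.0]]
print(scaled_dot_product_attention(q, k, v))
print(scaled_dot_product_attention(q, k, v, mask=[[True, False]]))
```

The query is closer to the first key, so the first value vector receives the larger weight. When the mask hides the second key, essentially all of the weight moves to the first value vector. The same masking mechanism is what the padding and look-ahead masks in the implementation notes feed into.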

Check out my blog post on attention and the Transformer:

Implementations that helped me:

- https://github.com/Kyubyong/transformer/

- https://github.com/tensorflow/tensor2tensor/blob/master/tensor2tensor/models/transformer.py

- http://nlp.seas.harvard.edu/2018/04/01/attention.html

Setup

$ git clone https://github.com/lilianweng/transformer-tensorflow.git

$ cd transformer-tensorflow

$ pip install -r requirements.txt

Train a Model

# Check the help message:

$ python train.py --help

Usage: train.py [OPTIONS]

Options:

--seq-len INTEGER Input sequence length. [default: 20]

--d-model INTEGER d_model [default: 512]

--d-ff INTEGER d_ff [default: 2048]

--n-head INTEGER n_head [default: 8]

--batch-size INTEGER Batch size [default: 128]

--max-steps INTEGER Max train steps. [default: 300000]

--dataset [iwslt15|wmt14|wmt15] Which translation dataset to use. [default: iwslt15]

--help Show this message and exit.
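The help text only echoes the parameter names. In the paper, d_model is the width of every token representation, d_ff is the hidden width of the position-wise feed-forward layer, and n_head is the number of attention heads. Each head works in a subspace of size d_model / n_head, which is 64 with the defaults. The sketch below derives those sizes from the default option values and gives a rough per-layer weight count. The count assumes the paper's layout: four d_model x d_model projections (Q, K, V, output) and two feed-forward matrices, all with biases, and no layer-normalization parameters. This repository's code may differ. The Config class is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Config:
    # Defaults copied from `python train.py --help`.
    seq_len: int = 20
    d_model: int = 512
    d_ff: int = 2048
    n_head: int = 8

    @property
    def d_head(self) -> int:
        """Per-head dimension d_k = d_v = d_model / n_head, as in the paper."""
        if self.d_model % self.n_head != 0:
            raise ValueError("d_model must be divisible by n_head")
        return self.d_model // self.n_head

    def attention_params(self) -> int:
        """Q, K, V and output projections: d_model x d_model weights plus a bias each (assumed)."""
        return 4 * (self.d_model * self.d_model + self.d_model)

    def feed_forward_params(self) -> int:
        """Two dense layers d_model -> d_ff -> d_model, with biases (assumed)."""
        first = self.d_model * self.d_ff + self.d_ff
        second = self.d_ff * self.d_model + self.d_model
        return first + second


cfg = Config()
print(cfg.d_head)                 # 64
print(cfg.attention_params())     # 1050624
print(cfg.feed_forward_params())  # 2099712
```

Under these assumptions the feed-forward sublayer holds roughly twice as many weights as the attention sublayer, because d_ff = 4 * d_model.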

# Train a model on dataset WMT14:

$ python train.py --dataset wmt14

Evaluate a Trained Model

Suppose the model is saved in the folder transformer-wmt14-seq20-d512-head8-1541573730 inside the checkpoints folder.

$ python eval.py transformer-wmt14-seq20-d512-head8-1541573730
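The example folder name appears to encode the training configuration: the dataset, the sequence length, d_model, the number of heads, and a trailing number that looks like a Unix timestamp. The helper below parses names with that shape. The pattern is inferred from this single example, not taken from the repository's code, so parse_checkpoint_name and its regular expression should be treated as hypothetical.

```python
import re
from datetime import datetime, timezone

# Pattern inferred from the example folder name; not taken from the repository.
CHECKPOINT_RE = re.compile(
    r"^transformer-(?P<dataset>[a-z0-9]+)-seq(?P<seq_len>\d+)"
    r"-d(?P<d_model>\d+)-head(?P<n_head>\d+)-(?P<timestamp>\d+)$"
)


def parse_checkpoint_name(name: str) -> dict:
    """Split a checkpoint folder name into its configuration fields."""
    match = CHECKPOINT_RE.match(name)
    if match is None:
        raise ValueError(f"unrecognized checkpoint name: {name!r}")
    fields = match.groupdict()
    info = {"dataset": fields["dataset"]}
    for key in ("seq_len", "d_model", "n_head"):
        info[key] = int(fields[key])
    # Assumes the trailing number counts seconds since the Unix epoch.
    info["created"] = datetime.fromtimestamp(int(fields["timestamp"]), tz=timezone.utc)
    return info


print(parse_checkpoint_name("transformer-wmt14-seq20-d512-head8-1541573730"))
```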

With the default configuration, this implementation reaches a BLEU score of about 20 on the WMT14 test set.
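BLEU combines clipped n-gram precisions (n = 1 to 4) through a geometric mean and multiplies the result by a brevity penalty. The README does not say which BLEU implementation eval.py uses or whether the score is computed at corpus level. The code below is therefore only a minimal, unsmoothed, corpus-level BLEU with one reference per sentence, intended to show how the number is formed. Standard tools such as sacreBLEU also fix the tokenization, so their scores will not match this sketch exactly.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    """Count all contiguous n-grams of a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses: List[List[str]], references: List[List[str]],
                max_n: int = 4) -> float:
    """Unsmoothed corpus-level BLEU on a 0-100 scale, one reference per hypothesis."""
    matches = [0] * max_n  # clipped n-gram matches, summed over the corpus
    totals = [0] * max_n   # hypothesis n-gram counts, summed over the corpus
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        hyp_len += len(hyp)
        ref_len += len(ref)
        for n in range(1, max_n + 1):
            hyp_counts = ngrams(hyp, n)
            ref_counts = ngrams(ref, n)
            # Clip each n-gram count by how often it occurs in the reference.
            matches[n - 1] += sum(min(c, ref_counts[g]) for g, c in hyp_counts.items())
            totals[n - 1] += sum(hyp_counts.values())
    if min(matches) == 0:
        return 0.0  # some precision is zero, so the geometric mean is zero
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: penalize output that is shorter than the references.
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100.0 * bp * math.exp(log_precision)


ref = "the cat sat on the mat".split()
print(corpus_bleu([ref], [ref]))  # 100.0
print(corpus_bleu(["the cat sat on the".split()], [ref]))
```

In the second call every n-gram of the hypothesis matches the reference, but the hypothesis is one token short, so the brevity penalty exp(1 - 6/5) lowers the score to about 82.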

Implementation Notes

[WIP] A few tricky points in the implementation:

- How to construct the mask correctly?

- How to correctly shift decoder input (as training input) and decoder target (as ground truth in the loss function)?

- How to make the prediction in an autoregressive way?

- Keeping the embedding of <pad> as a constant zero vector is rather important.
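The points above can be illustrated without TensorFlow. The following is a plain-Python sketch that assumes hypothetical special-token ids PAD_ID = 0, START_ID = 1 and END_ID = 2; the repository's actual vocabulary ids and shifting convention may differ. It does five things:

- builds a padding mask, so <pad> keys are hidden;
- builds a look-ahead mask, so position i only sees positions up to i;
- shifts a target sentence into a decoder input and a ground truth;
- runs a greedy autoregressive loop, with a toy next_token function standing in for the trained decoder;
- returns a zero vector for <pad> during embedding lookup.

```python
from typing import Callable, List, Tuple

PAD_ID, START_ID, END_ID = 0, 1, 2  # hypothetical special-token ids


def padding_mask(tokens: List[int]) -> List[bool]:
    """True where a key position holds a real token, False where it is <pad>."""
    return [t != PAD_ID for t in tokens]


def look_ahead_mask(length: int) -> List[List[bool]]:
    """mask[i][j] is True iff query position i may attend to key position j (j <= i)."""
    return [[j <= i for j in range(length)] for i in range(length)]


def decoder_mask(tokens: List[int]) -> List[List[bool]]:
    """Combine the look-ahead mask with the padding mask of the decoder input."""
    pad = padding_mask(tokens)
    causal = look_ahead_mask(len(tokens))
    return [[causal[i][j] and pad[j] for j in range(len(tokens))]
            for i in range(len(tokens))]


def shift_target(target: List[int]) -> Tuple[List[int], List[int]]:
    """Split a target sentence into decoder input and ground truth.

    The decoder input is prefixed with <start>; the ground truth ends with <end>,
    so at step i the decoder has seen target[:i] and must predict target[i].
    """
    return [START_ID] + target, target + [END_ID]


def greedy_decode(next_token: Callable[[List[int]], int], max_len: int) -> List[int]:
    """Autoregressive prediction: each predicted token is fed back as input."""
    output = [START_ID]
    for _ in range(max_len):
        token = next_token(output)  # the model only sees what it has produced so far
        if token == END_ID:
            break
        output.append(token)
    return output[1:]  # drop the <start> token


def embed(token: int, table: List[List[float]]) -> List[float]:
    """Embedding lookup that always returns a zero vector for <pad>."""
    if token == PAD_ID:
        return [0.0] * len(table[0])
    return table[token]


print(shift_target([5, 6, 7]))                 # ([1, 5, 6, 7], [5, 6, 7, 2])
print(decoder_mask([START_ID, 5, PAD_ID]))
# Toy "model" that emits 5, 6, 7 and then <end>, regardless of its input.
script = [5, 6, 7, END_ID]
print(greedy_decode(lambda prefix: script[len(prefix) - 1], max_len=10))  # [5, 6, 7]
```

Training uses the shifted pair in a single parallel pass, with the look-ahead mask preventing each position from peeking at later targets. At inference time no target exists, so the loop must feed its own predictions back one token at a time. A zero <pad> embedding keeps padded positions from injecting a learned, non-zero signal into the sums that the masks do not cover.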
