akoksal / Turkish Word2vec

Turkish Pre-trained Word2Vec Model

(The Turkish version is below.)

This tutorial shows how to train a word2vec model for Turkish from a Wikipedia dump. The code is written in Python 3 using the gensim library. Turkish is an agglutinative language, so the Wikipedia corpus contains many words that share a lemma but carry different suffixes. I plan to write a Turkish lemmatizer to improve the quality of the model.
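Before training, the extracted article text has to be split into lists of tokens. One Turkish-specific pitfall is lowercasing: Python's built-in `str.lower()` maps "I" to "i" instead of the dotless "ı", and maps "İ" to "i" followed by a combining dot (U+0307). The following is a minimal, stdlib-only sketch, assuming the article text has already been extracted from the dump as plain strings; the function names and the one-sentence-per-line layout are hypothetical and not taken from this repository's code.

```python
import re
from typing import Iterable, Iterator, List

# Turkish has two i's. Map the capitals explicitly before calling lower():
# "I" -> dotless "ı" and "İ" -> dotted "i". Plain str.lower() would give
# "i" for "I" and "i" + U+0307 (combining dot above) for "İ".
_TR_CAPITAL_I = str.maketrans({"I": "ı", "İ": "i"})

# A token is a run of letters: Unicode word characters minus digits and "_".
_TOKEN_RE = re.compile(r"[^\W\d_]+")


def turkish_lower(text: str) -> str:
    """Lowercase text using the Turkish dotted/dotless i rules."""
    return text.translate(_TR_CAPITAL_I).lower()


def tokenize(text: str) -> List[str]:
    """Lowercase text and split it into alphabetic tokens.

    Apostrophes and punctuation act as separators, so "Türkiye'nin"
    becomes ["türkiye", "nin"].
    """
    return _TOKEN_RE.findall(turkish_lower(text))


def to_sentences(articles: Iterable[str]) -> Iterator[List[str]]:
    """Yield one token list per non-empty line of each article (hypothetical layout)."""
    for article in articles:
        for line in article.splitlines():
            tokens = tokenize(line)
            if tokens:
                yield tokens


if __name__ == "__main__":
    sample = ["İstanbul büyük bir şehirdir.\nIşık ve ısı."]
    for sentence in to_sentences(sample):
        print(sentence)
```

Each yielded list is one "sentence", which is the input shape gensim's `Word2Vec` expects for its `sentences` argument. Splitting at apostrophes is only one possible choice; keeping "türkiye'nin" as a single token would be another.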

See the GitHub wiki page for more details. If you only want the pretrained model, you can download it from this link; usage examples are on the "5. Using Word2Vec Model and Examples" page of the GitHub wiki. Some of them are below:

word_vectors.most_similar(positive=["kral","kadın"],negative=["erkek"])

This is a classic word2vec example. As expected, the word vector most similar to king + woman - man is queen (kraliçe). The second is kralı and the third is kralın ("the king's"), both suffixed forms of kral. If the model had been trained with a Turkish lemmatization tool, the results would be cleaner.

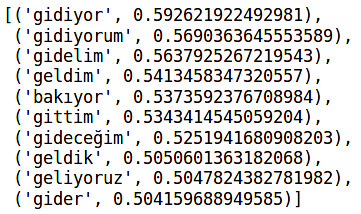
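`most_similar(positive=..., negative=...)` answers the analogy by vector arithmetic. The stdlib-only sketch below shows, in simplified form, roughly what gensim's `KeyedVectors.most_similar` does: it scales each query vector to unit length, adds the positive ones and subtracts the negative ones, normalizes the result, ranks every other word by cosine similarity to it, and skips the query words themselves. The 3-dimensional vectors are made-up toy values chosen so the analogy works; they are not taken from the pretrained model, and gensim's real implementation is vectorized and has more options.

```python
import math


def normalize(vec):
    """Return vec scaled to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def most_similar(vectors, positive, negative=(), topn=3):
    """Rank words by cosine similarity to (+positive, -negative).

    A simplified sketch of the idea behind gensim's
    KeyedVectors.most_similar, not gensim's implementation.
    """
    dim = len(next(iter(vectors.values())))
    combined = [0.0] * dim
    for words, sign in ((positive, 1.0), (negative, -1.0)):
        for word in words:
            for i, x in enumerate(normalize(vectors[word])):
                combined[i] += sign * x
    # Averaging only rescales the sum, so normalizing gives the same direction.
    target = normalize(combined)

    query = set(positive) | set(negative)
    scores = []
    for word, vec in vectors.items():
        if word in query:  # never return the words we asked about
            continue
        unit = normalize(vec)
        scores.append((word, sum(a * b for a, b in zip(unit, target))))
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:topn]


# Toy 3-d vectors: [royalty, female, male]. Made-up values for illustration.
toy_vectors = {
    "kral": [1.0, 0.0, 1.0],      # king
    "kraliçe": [1.0, 1.0, 0.0],   # queen
    "prens": [1.0, 0.0, 0.8],     # prince
    "prenses": [1.0, 0.8, 0.0],   # princess
    "erkek": [0.0, 0.0, 1.0],     # man
    "kadın": [0.0, 1.0, 0.0],     # woman
}

if __name__ == "__main__":
    result = most_similar(toy_vectors, positive=["kral", "kadın"], negative=["erkek"])
    for word, score in result:
        print(f"{word}\t{score:.3f}")
    # kraliçe ranks first, then prenses, then prens
```

With real vectors the same ranking step runs over the whole vocabulary, which is why suffixed forms such as kralı and kralın can appear among the top results.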

word_vectors.most_similar(positive=["geliyor","gitmek"],negative=["gelmek"])

Turkish is an agglutinative language, and I wanted to see how this property shows up in the model. I looked for the vector most similar to geliyor (he/she/it is coming) - gelmek (to come) + gitmek (to go). As expected, the most similar vector is gidiyor (he/she/it is going). The second is "I am going" and the third is "let's go". So the effects of tense and person suffixes are visible in word2vec models.
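Both examples show neighbours that are the same word with different suffixes, and the text notes that a lemmatizer would give cleaner results. As a rough illustration of what lemmatization means here, the sketch below maps surface forms to a lemma using a tiny hand-written lexicon and suffix list. Both lists, and the function names, are hypothetical examples rather than anything from this repository; a real Turkish lemmatizer needs a full morphological analyzer to handle vowel harmony, suffix chains and ambiguous parses.

```python
# Hypothetical mini-lexicon: known stem -> dictionary form (lemma).
LEXICON = {
    "kral": "kral",        # king
    "kraliçe": "kraliçe",  # queen
    "kadın": "kadın",      # woman
    "erkek": "erkek",      # man
    "gel": "gelmek",       # to come
    "git": "gitmek",       # to go
}

# Hypothetical, incomplete list of inflectional endings (case, tense,
# person, infinitive). Longer endings are tried first.
SUFFIXES = sorted(
    ["ı", "i", "u", "ü", "ın", "in", "un", "ün", "nın", "nin",
     "mak", "mek", "iyor", "iyorum", "elim", "alım"],
    key=len,
    reverse=True,
)

# Stem-final b/c/d/ğ often come from p/ç/t/k before a vowel (git -> gidiyor).
_UNSOFTEN = {"b": "p", "c": "ç", "d": "t", "ğ": "k"}


def _stem_candidates(stem):
    """Yield the stem as-is, then with a softened final consonant undone."""
    yield stem
    if stem and stem[-1] in _UNSOFTEN:
        yield stem[:-1] + _UNSOFTEN[stem[-1]]


def lemmatize(word):
    """Map a lowercased surface form to a lemma, or return it unchanged."""
    if word in LEXICON:  # e.g. "kadın" must not be read as "kad" + "ın"
        return LEXICON[word]
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) > len(suffix):
            for candidate in _stem_candidates(word[: -len(suffix)]):
                if candidate in LEXICON:
                    return LEXICON[candidate]
    return word


if __name__ == "__main__":
    for w in ["kralı", "kralın", "kadın", "geliyor", "gidiyor", "gidiyorum"]:
        print(w, "->", lemmatize(w))
```

Lemmatizing tokens before training would merge kral, kralı and kralın into one vector, but it would also erase the tense and person information that the geliyor/gidiyor example relies on, so whether to lemmatize depends on what the vectors will be used for.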

Pre-trained Turkish Word2Vec Model (Turkish version, translated)

This work explains how a Turkish word2vec model can be built from the Turkish articles on Wikipedia. The code is written in Python 3 using the gensim library. In the future, the model's quality will be improved with a Turkish lemmatization algorithm, so that words with the same root and derivational suffixes but different inflectional suffixes map to the same word.

See the GitHub wiki page for details. If you only want to use the trained model, you can download it here. For examples, see the "5. Word2Vec Modelini Kullanmak/Örnekler" (5. Using the Word2Vec Model/Examples) page on the GitHub wiki. Some examples are below:

word_vectors.most_similar(positive=["kral","kadın"],negative=["erkek"])

This is a classic word2vec example. When the erkek (man) vector is subtracted from the kral (king) vector and kadın (woman) is added, the nearest word vector is kraliçe (queen). Most of the other similar words are suffixed forms of kral and kraliçe. Because Turkish is an agglutinative language, some results may not come out as expected. If we could train word2vec on the lemmas of the words, we could get much cleaner results.
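The same query can be written as an equation. In the usual formulation (a sketch, with \(\hat{v}_w\) denoting the unit-length vector of word \(w\)), the query vectors are combined into a target direction \(t\), and the answer \(w^{*}\) is the vocabulary word, other than the three query words, with the highest cosine similarity to \(t\):

```latex
t = \frac{\hat{v}_{\text{kral}} + \hat{v}_{\text{kadın}} - \hat{v}_{\text{erkek}}}
         {\bigl\lVert \hat{v}_{\text{kral}} + \hat{v}_{\text{kadın}} - \hat{v}_{\text{erkek}} \bigr\rVert},
\qquad
w^{*} = \operatorname*{arg\,max}_{w \notin \{\text{kral},\ \text{kadın},\ \text{erkek}\}} \; \hat{v}_w \cdot t
```

In the example above, \(w^{*}\) is kraliçe (queen).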

word_vectors.most_similar(positive=["geliyor","gitmek"],negative=["gelmek"])

In this example, we examined the effect of tense suffixes on verbs. The most similar word vectors were consistent with the expected result.