dddzg / Up Detr

UP-DETR: Unsupervised Pre-training for Object Detection with Transformers

This is the official PyTorch implementation and models for the UP-DETR paper:

    @article{dai2020up-detr,
      author  = {Zhigang Dai and Bolun Cai and Yugeng Lin and Junying Chen},
      title   = {UP-DETR: Unsupervised Pre-training for Object Detection with Transformers},
      journal = {arXiv preprint arXiv:2011.09094},
      year    = {2020},
    }

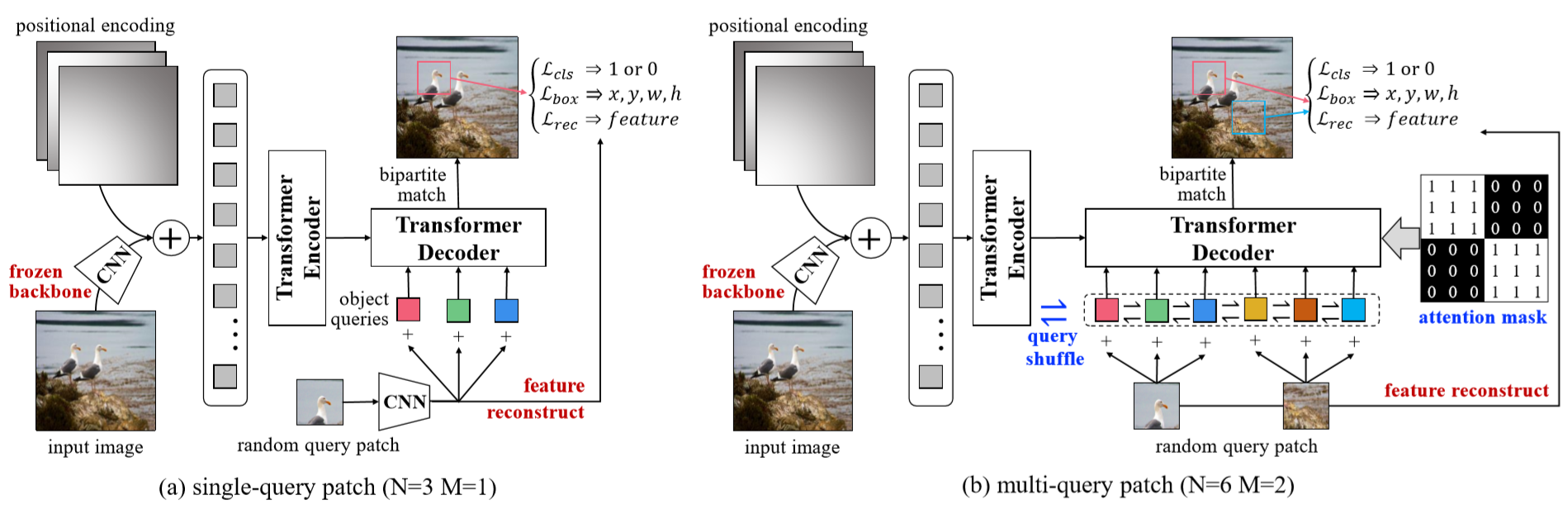

In UP-DETR, we introduce a novel pretext task named random query patch detection to pre-train transformers for object detection. UP-DETR inherits from DETR, with the same ResNet-50 backbone, the same Transformer encoder and decoder, and the same codebase. Because the CNN backbone is also pre-trained without supervision, the whole UP-DETR model requires no human annotations for pre-training. UP-DETR achieves 43.1 AP on COCO with 300 epochs of fine-tuning. The AP of the open-source version is slightly higher than the number reported in the paper.
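To make the pretext task concrete, here is a minimal sketch of how random query patches and their box targets could be sampled. It is not the code used in this repository: the function name `sample_query_patches`, the patch size range (`min_frac`, `max_frac`) and the normalized `(cx, cy, w, h)` target format are assumptions made for illustration. Only the default of 10 patches mirrors the `--num_patches 10` flag used in the pre-training command.

```python
import random


def sample_query_patches(img_w, img_h, num_patches=10,
                         min_frac=0.1, max_frac=0.5, seed=None):
    """Sample random patches from an image of size (img_w, img_h).

    Returns two lists of equal length:
      crops   -- pixel boxes (x0, y0, x1, y1) to cut out and use as query patches
      targets -- the same boxes as normalized (cx, cy, w, h), i.e. what a
                 detector would be asked to predict for each query patch

    The size range and the target format are illustrative assumptions.
    """
    rng = random.Random(seed)
    crops, targets = [], []
    for _ in range(num_patches):
        # Pick a patch size as a random fraction of the image size.
        w = rng.uniform(min_frac, max_frac) * img_w
        h = rng.uniform(min_frac, max_frac) * img_h
        # Pick a top-left corner so the patch stays inside the image.
        x0 = rng.uniform(0, img_w - w)
        y0 = rng.uniform(0, img_h - h)
        crops.append((x0, y0, x0 + w, y0 + h))
        targets.append(((x0 + w / 2) / img_w, (y0 + h / 2) / img_h,
                        w / img_w, h / img_h))
    return crops, targets


crops, targets = sample_query_patches(640, 480, num_patches=10, seed=0)
```

No labels are involved at any point: the targets come from the crop coordinates themselves, which is what makes the task unsupervised.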

Model Zoo

We provide pre-trained and fine-tuned UP-DETR models, and plan to include more in the future. The evaluation metric is the same as for DETR.

Here is the UP-DETR model pre-trained on ImageNet without labels. The CNN weights are initialized from SwAV and kept fixed during transformer pre-training:

| name | backbone | epochs | url | size | md5 |
|---|---|---|---|---|---|
| UP-DETR | R50 (SwAV) | 60 | model / logs | 164 MB | 49f01f8b |
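The md5 column can be used to check a downloaded checkpoint. The sketch below assumes that the eight characters in the table are the leading hex digits of the file's full MD5 digest; the helper name `md5_prefix_matches` and the path in the usage comment are hypothetical.

```python
import hashlib


def md5_prefix_matches(path, expected_prefix, chunk_size=1 << 20):
    """Return True if the MD5 hex digest of the file starts with expected_prefix."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        # Read in chunks so a large checkpoint is never loaded into memory at once.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest().startswith(expected_prefix.lower())


# Usage (the path is a placeholder for wherever the checkpoint was saved):
# ok = md5_prefix_matches("path/to/checkpoint.pth", "49f01f8b")
```

A mismatch usually points to an interrupted or corrupted download rather than a wrong model.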

Comparison with DETR:

| name | backbone (pre-train) | epochs | box AP | url | size |
|---|---|---|---|---|---|
| DETR | R50 (Supervised) | 500 | 42.0 | - | 159 MB |
| DETR | R50 (SwAV) | 300 | 42.1 | - | 159 MB |
| UP-DETR | R50 (SwAV) | 300 | 43.1 | model / logs | 159 MB |

COCO val5k evaluation results of UP-DETR can be found in this gist.

Usage - Object Detection

There are no extra compiled components in UP-DETR, and the package dependencies are the same as for DETR. We provide instructions on how to install the dependencies via conda:

    git clone tbd
    conda install -c pytorch pytorch torchvision
    conda install cython scipy
    pip install -U 'git+https://github.com/cocodataset/cocoapi.git#subdirectory=PythonAPI'
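After installation, a quick check that the packages can be found may save a failed launch later. This is a small sketch, not part of the repository. The module names are the usual import names for the packages installed above (`Cython` for cython, `pycocotools` for the COCO API), and the helper `missing_modules` is hypothetical.

```python
import importlib.util


def missing_modules(names):
    """Return the subset of top-level module names that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


required = ["torch", "torchvision", "scipy", "Cython", "pycocotools"]
missing = missing_modules(required)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All dependencies found.")
```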

UP-DETR follows two steps: pre-training and fine-tuning. We present the model pre-trained on ImageNet and then fine-tuned on COCO.

Unsupervised Pre-training

Data Preparation

Download and extract the ILSVRC2012 training set.

We expect the directory structure to be the following:

    path/to/imagenet/
      n06785654/                  # category directory
        n06785654_16140.JPEG      # images
      n04584207/                  # category directory
        n04584207_14322.JPEG      # images

The images do not need to be sorted into the correct category directories, because our pre-training is unsupervised and ignores the labels.
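Since the category directories carry no meaning for pre-training, a loader only needs the image paths. The following sketch collects them recursively. It illustrates the idea and is not the dataset code used by `main.py`; the function name `list_images` and the accepted extensions are assumptions.

```python
from pathlib import Path


def list_images(root, extensions=(".jpeg", ".jpg", ".png")):
    """Return all image files under root, ignoring which directory they are in."""
    root = Path(root)
    return sorted(
        p for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in extensions
    )


# Usage (placeholder path):
# image_paths = list_images("path/to/imagenet")
```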

Pre-training

To pre-train UP-DETR on a single node with 8 GPUs for 60 epochs, run:

    python -m torch.distributed.launch --nproc_per_node=8 --use_env main.py \
        --lr_drop 40 \
        --epochs 60 \
        --pre_norm \
        --num_patches 10 \
        --batch_size 32 \
        --feature_recon \
        --fre_cnn \
        --imagenet_path path/to/imagenet \
        --output_dir path/to/save_model

Because the pre-training images are relatively small, we can use a large batch size.

One epoch takes about 2 hours, so 60 epochs of pre-training take about 5 days with 8 V100 GPUs.

In further ablation experiments, we found that object query shuffle is not helpful, so we removed it from the open-source version.
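The `--lr_drop 40` and `--epochs 60` flags in the pre-training command can be read as a step learning-rate schedule. The sketch below assumes that `--lr_drop` behaves as it does in DETR, where it is the step size of a scheduler that multiplies the learning rate by 0.1. The base learning rate of `1e-4` is also an assumption, since the command does not set it.

```python
def step_lr(epoch, base_lr=1e-4, lr_drop=40, gamma=0.1):
    """Learning rate at a given epoch under a step schedule.

    The rate is multiplied by gamma once every lr_drop epochs.
    base_lr and gamma are assumed values, not read from the repository.
    """
    return base_lr * gamma ** (epoch // lr_drop)


# Print the assumed schedule at a few points of a 60-epoch run.
for epoch in (0, 39, 40, 59):
    print(epoch, step_lr(epoch))
```

Under these assumptions the first 40 epochs run at the base rate and the last 20 at one tenth of it.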

Fine-tuning

Data Preparation

Download and extract the COCO 2017 train and val datasets.

The directory structure is expected as follows:

    path/to/coco/
      annotations/  # annotation json files
      train2017/    # train images
      val2017/      # val images
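Before starting a multi-day run, it can help to confirm that this layout is in place. The sketch below checks only the three directories listed above; the helper name `missing_coco_dirs` is hypothetical and the check is not part of the repository.

```python
from pathlib import Path


def missing_coco_dirs(coco_root,
                      expected=("annotations", "train2017", "val2017")):
    """Return the expected sub-directories that are absent under coco_root."""
    root = Path(coco_root)
    return [name for name in expected if not (root / name).is_dir()]


# Usage (placeholder path):
# missing = missing_coco_dirs("path/to/coco")
# if missing:
#     raise SystemExit(f"COCO layout incomplete, missing: {missing}")
```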

Fine-tuning

To fine-tune UP-DETR with 8 GPUs for 300 epochs, run:

    python -m torch.distributed.launch --nproc_per_node=8 --use_env detr_main.py \
        --lr_drop 200 \
        --epochs 300 \
        --lr_backbone 5e-4 \
        --pre_norm \
        --coco_path path/to/coco \
        --pretrain path/to/save_model/checkpoint.pth

The fine-tuning cost is exactly the same as for DETR: one epoch takes about 28 minutes with 8 V100 GPUs, so 300 epochs of training take about 6 days.

The model can also be extended to panoptic segmentation; see DETR for more details.
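The time estimates for both stages follow from simple arithmetic, sketched below. The sketch reads the 28-minute figure as the cost of one fine-tuning epoch and uses the 2 hours per epoch quoted for pre-training; the function name `total_days` is only for illustration.

```python
def total_days(minutes_per_epoch, epochs):
    """Wall-clock training time in days for a fixed per-epoch cost."""
    return minutes_per_epoch * epochs / (60 * 24)


pretrain_days = total_days(120, 60)   # 2 hours per epoch, 60 epochs
finetune_days = total_days(28, 300)   # 28 minutes per epoch, 300 epochs
print(f"pre-training: {pretrain_days:.1f} days, fine-tuning: {finetune_days:.1f} days")
```

This gives 5.0 days for pre-training and about 5.8 days for fine-tuning, which matches the rounded figures of 5 and 6 days.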

Notebook

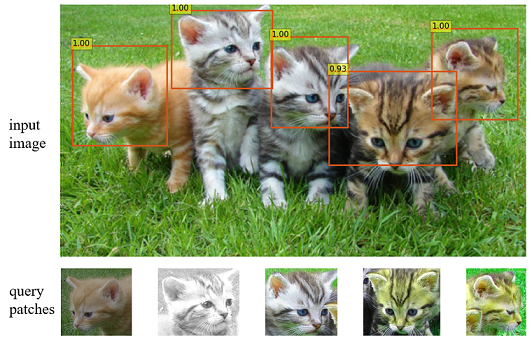

We provide a Colab notebook that reproduces the visualization results in the paper:

- Visualization Notebook: This notebook shows how to perform query patch detection with the pre-trained model (without any fine-tuning on annotations).

License

UP-DETR is released under the Apache 2.0 license. Please see the LICENSE file for more information.