RasaHQ / Whatlies

whatlies

A library that tries to help you to understand (note the pun).

"What lies in word embeddings?"

This small library offers tools that make it easier to visualise both word embeddings and operations on them.

Feedback is welcome.

Produced

This project was initiated at Rasa as a by-product of our efforts in the developer advocacy and research teams. It's an open source project and community contributions are very welcome!

Features

This library has tools to help you understand what lies in word embeddings. This includes:

- simple tools to create (interactive) visualisations

- an API for vector arithmetic that you can visualise

- support for many dimensionality reduction techniques like PCA, UMAP and t-SNE

- support for many language backends including spaCy, fastText, tfhub, huggingface and bpemb

- lightweight scikit-learn featurizer support for all these backends
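To give a feel for the kind of vector arithmetic the API is meant to make visible, here is a minimal NumPy sketch of the classic `king - man + woman` analogy. It does not use whatlies: the `toy` table holds made-up three-dimensional vectors (not real embeddings), and `cosine` and `analogy` are hypothetical helpers written only for this illustration.

```python
import numpy as np

# Hypothetical toy "embeddings": dimensions loosely mean (royalty, male, female).
toy = {
    "king": np.array([1.0, 1.0, 0.0]),
    "queen": np.array([1.0, 0.0, 1.0]),
    "man": np.array([0.0, 1.0, 0.0]),
    "woman": np.array([0.0, 0.0, 1.0]),
    "dog": np.array([0.0, 0.5, 0.5]),
}

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def analogy(a, b, c, table):
    """Return the word closest to table[a] - table[b] + table[c], excluding the inputs."""
    target = table[a] - table[b] + table[c]
    candidates = {w: v for w, v in table.items() if w not in {a, b, c}}
    return max(candidates, key=lambda w: cosine(target, candidates[w]))

print(analogy("king", "man", "woman", toy))  # queen
```

Real embeddings are noisier than this toy table, which is exactly why being able to plot the intermediate vectors helps.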

Installation

You can install the package via pip:

```bash
pip install whatlies
```

This will install the base dependencies. Depending on the transformers and language backends that you'll be using, you may want to install more. Here are the possible installation options:

```bash
pip install whatlies[tfhub]
pip install whatlies[transformers]
```

If you want everything, you can also install via:

```bash
pip install whatlies[all]
```

Note that this will install dependencies but it will not install all the language models you might want to visualise. For example, you might still need to manually download spaCy models if you intend to use that backend.

Getting Started

For a quick overview, check out our introductory video on YouTube. More in-depth getting started guides can be found on the documentation page.

Examples

The idea is that you can load embeddings from a language backend and use mathematical operations on them.

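```python
from whatlies import EmbeddingSet
from whatlies.language import SpacyLanguage

# The spaCy model must be downloaded separately before this works.
lang = SpacyLanguage("en_core_web_md")

words = ["cat", "dog", "fish", "kitten", "man", "woman",
         "king", "queen", "doctor", "nurse"]

# Look up each word in the backend and collect the embeddings in one set.
emb = EmbeddingSet(*[lang[w] for w in words])

# Plot every word against the "man" and "woman" embeddings as axes.
emb.plot_interactive(x_axis=emb["man"], y_axis=emb["woman"])
```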

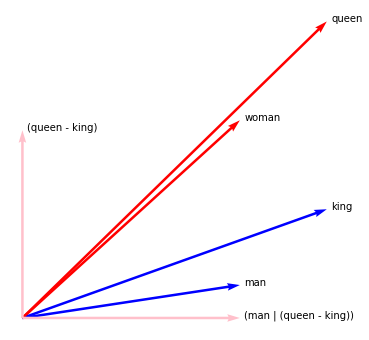
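One way to read a chart like the one above, where `x_axis=emb["man"]` and `y_axis=emb["woman"]`, is that each word is placed according to how far its vector points along each axis vector. The NumPy sketch below assumes the scalar projection `(v · a) / (a · a)` as that measure; whatlies may use a different or configurable metric, and the vectors and the `scalar_projection`/`coordinates` helpers are hypothetical, so treat this purely as an illustration.

```python
import numpy as np

def scalar_projection(v, axis):
    """Length of v along axis, in units of axis: (v . axis) / (axis . axis)."""
    return float(v @ axis / (axis @ axis))

def coordinates(vectors, x_axis, y_axis):
    """Map each named vector to an (x, y) point measured against two axis vectors."""
    return {name: (scalar_projection(v, x_axis), scalar_projection(v, y_axis))
            for name, v in vectors.items()}

# Hypothetical 3-d vectors standing in for real word embeddings.
man = np.array([1.0, 0.0, 0.5])
woman = np.array([0.0, 1.0, 0.5])
words = {"king": np.array([2.0, 0.0, 1.0]), "queen": np.array([0.0, 2.0, 1.0])}

print(coordinates(words, man, woman))  # {'king': (2.0, 0.4), 'queen': (0.4, 2.0)}
```

Under this reading, a word sitting close to the diagonal is roughly equally aligned with both axis words.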

You can even do fancy operations, like projecting onto and away from vector embeddings! You can perform these on single embeddings as well as on sets of embeddings. In the example below we attempt to filter away gender bias using linear algebra operations.

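```python
# Chart of the original embeddings.
orig_chart = emb.plot_interactive('man', 'woman')

# Project every embedding away from the king-queen direction.
new_ts = emb | (emb['king'] - emb['queen'])
new_chart = new_ts.plot_interactive('man', 'woman')

# Altair charts can be shown side by side with `|`.
orig_chart | new_chart
```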
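Geometrically, projecting a vector v away from a direction u means subtracting v's projection onto u, which leaves only the part of v that is orthogonal to u. The NumPy sketch below shows that linear algebra on made-up vectors; it illustrates vector projection and rejection, not whatlies' own implementation, and `project_onto`/`project_away` are hypothetical helper names.

```python
import numpy as np

def project_onto(v, u):
    """Component of v that lies along u."""
    return (v @ u) / (u @ u) * u

def project_away(v, u):
    """Component of v orthogonal to u, i.e. v with its u-direction removed."""
    return v - project_onto(v, u)

# Hypothetical vectors: `direction` plays the role of emb['king'] - emb['queen'].
direction = np.array([1.0, -1.0, 0.0])
doctor = np.array([0.6, 0.2, 0.9])

cleaned = project_away(doctor, direction)
print(cleaned)
print(cleaned @ direction)  # close to 0, up to floating-point error
```

Because `cleaned` has no component left along `direction`, differences between words along that direction disappear, which is the intuition behind the bias-filtering example.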

There are also dimensionality reduction techniques like PCA and UMAP.

```python
from whatlies.transformers import Pca, Umap

# Reduce the embeddings to two dimensions before plotting.
pca_plot = emb.transform(Pca(2)).plot_interactive()
umap_plot = emb.transform(Umap(2)).plot_interactive()

# Show both charts side by side.
pca_plot | umap_plot
```
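Conceptually, reducing embeddings to two dimensions with PCA means centring them and projecting onto the two directions of largest variance. The NumPy sketch below shows that idea via a singular value decomposition; it is a stand-in to explain the technique, the random data is hypothetical, and whatlies' `Pca` transformer may well delegate to a library implementation (such as scikit-learn's) rather than anything like `pca_2d`.

```python
import numpy as np

def pca_2d(X):
    """Project the rows of X onto their first two principal components."""
    centered = X - X.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T

# Hypothetical stand-in for 10 embeddings of dimension 300.
rng = np.random.default_rng(0)
X = rng.normal(size=(10, 300))
points = pca_2d(X)
print(points.shape)  # (10, 2)
```

UMAP, by contrast, is a non-linear method, so it does not reduce to a single matrix projection like this.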

Scikit-Learn Support

Every language backend in this library is also available as a scikit-learn featurizer.

```python
import numpy as np
from whatlies.language import BytePairLanguage
from sklearn.pipeline import Pipeline
from sklearn.linear_model import LogisticRegression

# The language backend turns each text into a vector; the classifier learns on top.
pipe = Pipeline([
    ("embed", BytePairLanguage("en")),
    ("model", LogisticRegression())
])

X = [
    "i really like this post",
    "thanks for that comment",
    "i enjoy this friendly forum",
    "this is a bad post",
    "i dislike this article",
    "this is not well written"
]

# 1 = positive, 0 = negative
y = np.array([1, 1, 1, 0, 0, 0])

pipe.fit(X, y)
pipe.predict(["this forum is friendly"])
```
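In a pipeline like this one, scikit-learn calls `fit` and `transform` on the intermediate step to turn a list of texts into a numeric matrix. The sketch below shows that contract with a hypothetical `MeanWordVectorFeaturizer` that averages made-up word vectors; it is not how `BytePairLanguage` is implemented, and a production transformer would typically also subclass `sklearn.base.BaseEstimator` and `TransformerMixin` so that utilities like `clone` and `get_params` work.

```python
import numpy as np

class MeanWordVectorFeaturizer:
    """Hypothetical featurizer: averages toy word vectors per sentence."""

    def __init__(self, table, dim):
        self.table = table  # maps word -> vector (made up for this sketch)
        self.dim = dim      # vector size, used for texts with no known words

    def fit(self, X, y=None):
        # Nothing to learn: the word vectors are fixed, as with a pretrained backend.
        return self

    def transform(self, X):
        rows = []
        for text in X:
            vectors = [self.table[w] for w in text.split() if w in self.table]
            # Fall back to a zero vector when no word in the text is known.
            rows.append(np.mean(vectors, axis=0) if vectors else np.zeros(self.dim))
        return np.vstack(rows)

table = {"like": np.array([1.0, 0.0]), "bad": np.array([0.0, 1.0])}
feat = MeanWordVectorFeaturizer(table, dim=2)
print(feat.fit(["i like this"]).transform(["i like this", "bad post", "hello"]))
# rows: [1, 0], [0, 1], [0, 0]
```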

Documentation

To learn more and for a getting started guide, check out the documentation.

Similar Projects

There are some similar projects out there, and we figured it fair to mention and compare them here.

Julia Bazińska & Piotr Migdal Web App

The original inspiration for this project came from this web app and this PyData talk. It is a web app that takes a while to load, but it is really fun to play with. The goal of this project is to make it easier to make similar charts from Jupyter using different language backends.

TensorFlow Projector

From Google there's the TensorFlow Projector. It offers highly interactive 3D visualisations as well as some transformations via TensorBoard.

- The TensorFlow Projector creates projections in TensorBoard, which you can also load into a Jupyter notebook, whereas whatlies makes visualisations directly.

- The TensorFlow Projector supports interactive 3D visuals, which whatlies currently doesn't.

- Whatlies offers lego bricks that you can chain together to get a visualisation started. This also means that you're more flexible when it comes to transforming data before visualising it.

Parallax

From Uber AI Labs there's Parallax, which is described in a paper here. The two tools share a common mindset: the goal is to use arbitrary user-defined projections to understand embedding spaces better. That said, there are some differences worth mentioning.

- Parallax relies on Bokeh as a visualisation backend and offers a lot of visualisation types (like radar plots). Whatlies uses Altair and tries to stick to simple scatter charts. Altair can export interactive HTML/SVG, but it will not scale as well if you're drawing many points at the same time.

- Parallax is meant to be run as a stand-alone app from the command line, while Whatlies is meant to be run from a Jupyter notebook.

- Parallax gives a full user interface, while Whatlies offers lego bricks that you can chain together to get a visualisation started.

- Whatlies relies on language backends (like spaCy, huggingface) to fetch word embeddings. Parallax instead allows you to load raw files from disk.

- Parallax has been around for a while; Whatlies is newer and therefore more experimental.

Local Development

If you want to develop locally, you can start by running this command:

```bash
make develop
```

Documentation

The documentation is generated via:

```bash
make docs
```

Citation

Please use the following citation if you found whatlies helpful for any of your work (find the whatlies paper here):

```bibtex
@inproceedings{warmerdam-etal-2020-going,
    title = "Going Beyond {T}-{SNE}: Exposing whatlies in Text Embeddings",
    author = "Warmerdam, Vincent and
      Kober, Thomas and
      Tatman, Rachael",
    booktitle = "Proceedings of Second Workshop for NLP Open Source Software (NLP-OSS)",
    month = nov,
    year = "2020",
    address = "Online",
    publisher = "Association for Computational Linguistics",
    url = "https://www.aclweb.org/anthology/2020.nlposs-1.8",
    doi = "10.18653/v1/2020.nlposs-1.8",
    pages = "52--60",
    abstract = "We introduce whatlies, an open source toolkit for visually inspecting word and sentence embeddings. The project offers a unified and extensible API with current support for a range of popular embedding backends including spaCy, tfhub, huggingface transformers, gensim, fastText and BytePair embeddings. The package combines a domain specific language for vector arithmetic with visualisation tools that make exploring word embeddings more intuitive and concise. It offers support for many popular dimensionality reduction techniques as well as many interactive visualisations that can either be statically exported or shared via Jupyter notebooks. The project documentation is available from https://rasahq.github.io/whatlies/.",
}
```