ADAPT

Awesome Domain Adaptation Python Toolbox

ADAPT is a Python library that provides several domain adaptation methods implemented with TensorFlow and scikit-learn.

Documentation Website

Details of all implemented methods, along with illustrative examples, are available on the ADAPT Documentation Website.

Installation

This package is available on PyPI and can be installed with the following command:

pip install adapt

The following dependencies are required and will be installed with the library:

- numpy
- scipy
- tensorflow (>= 2.0)
- scikit-learn
- cvxopt

If, for some reason, these packages fail to install, you can install them manually with:

pip install numpy scipy tensorflow scikit-learn cvxopt
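If an install fails, it can help to see which of the dependencies listed above are already present. The following is a minimal sketch that uses only the Python standard library; the helper name `installed_versions` is hypothetical and is not part of ADAPT.

```python
from importlib import metadata

# Distribution names taken from the dependency list above.
DEPENDENCIES = ["numpy", "scipy", "tensorflow", "scikit-learn", "cvxopt"]


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(DEPENDENCIES).items():
        print(f"{name}: {version or 'not installed'}")
```

Any package reported as `not installed` is a candidate for the manual `pip install` command above.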

Finally, import the module in your Python scripts with:

import adapt

Reference

If you use this library in your research, please cite ADAPT using the following reference: https://arxiv.org/pdf/2107.03049.pdf

@article{de2021adapt,

title={ADAPT: Awesome Domain Adaptation Python Toolbox},

author={de Mathelin, Antoine and Deheeger, Fran{\c{c}}ois and Richard, Guillaume and Mougeot, Mathilde and Vayatis, Nicolas},

journal={arXiv preprint arXiv:2107.03049},

year={2021}

}

Quick Start

import numpy as np

from adapt.feature_based import DANN

np.random.seed(0)

# Xs and Xt are shifted along the second feature.

Xs = np.concatenate((np.random.random((100, 1)),

np.zeros((100, 1))), 1)

Xt = np.concatenate((np.random.random((100, 1)),

np.ones((100, 1))), 1)

ys = 0.2 * Xs[:, 0]

yt = 0.2 * Xt[:, 0]

# With lambda set to zero, no adaptation is performed.

model = DANN(lambda_=0., random_state=0)

model.fit(Xs, ys, Xt=Xt, epochs=100, verbose=0)

print(model.evaluate(Xt, yt)) # This gives the target score at the last training epoch.

>>> 0.0231

# With lambda set to 0.1, the shift is corrected and the target score improves.

model = DANN(lambda_=0.1, random_state=0)

model.fit(Xs, ys, Xt=Xt, epochs=100, verbose=0)

print(model.evaluate(Xt, yt))

>>> 0.0011

Examples

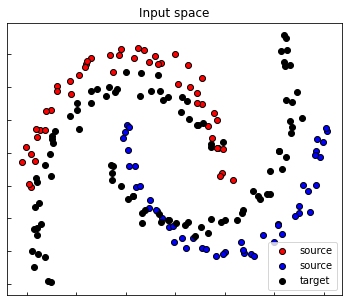

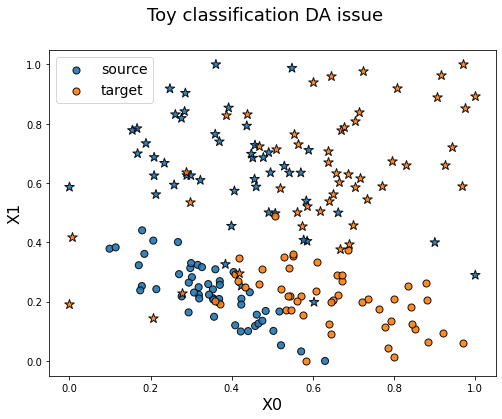

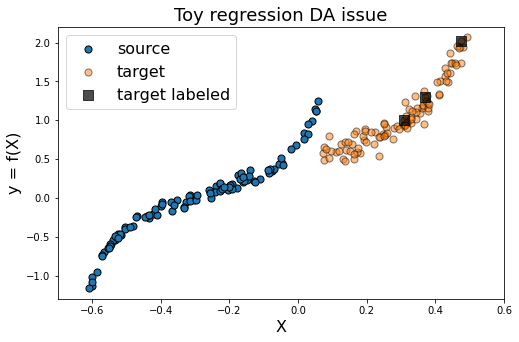

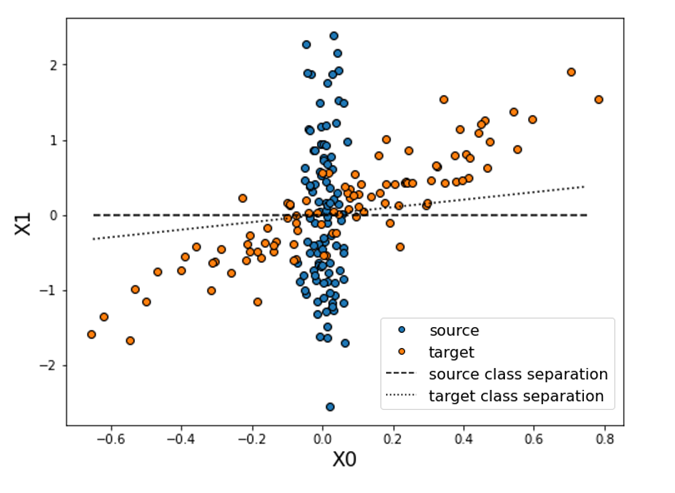

| Two Moons | Classification | Regression |
|---|---|---|

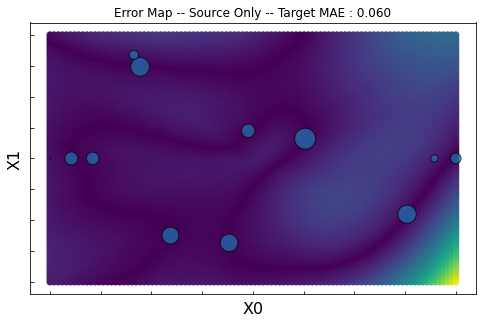

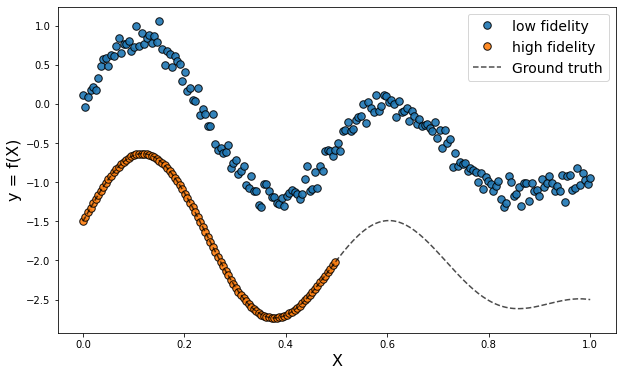

| Sample Bias | Multi-Fidelity | Rotation |
|---|---|---|

Content

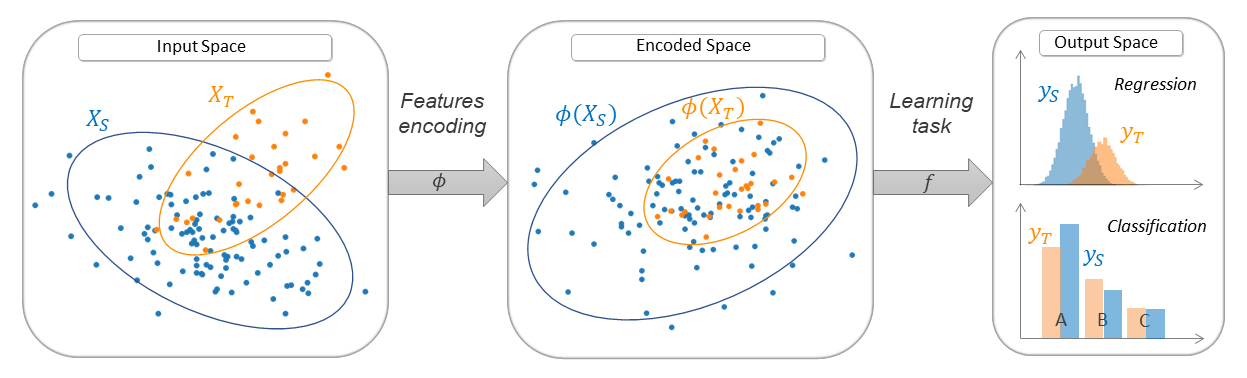

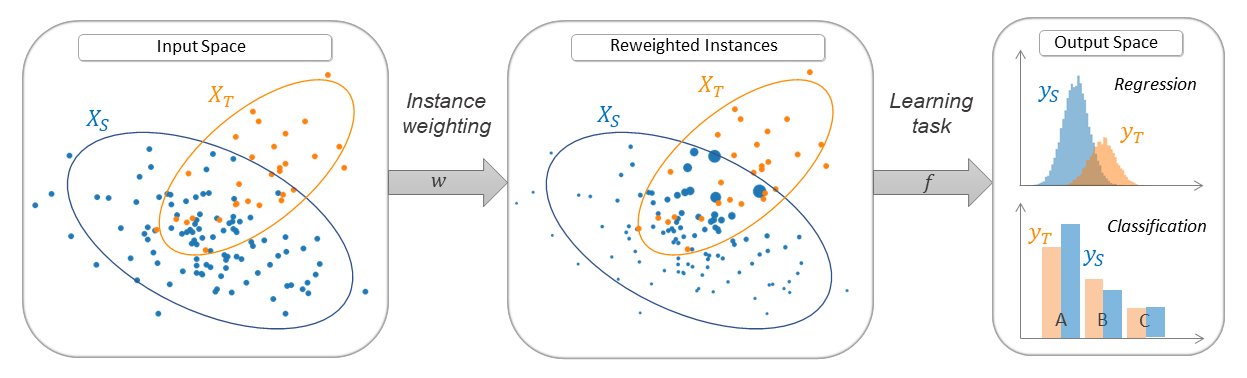

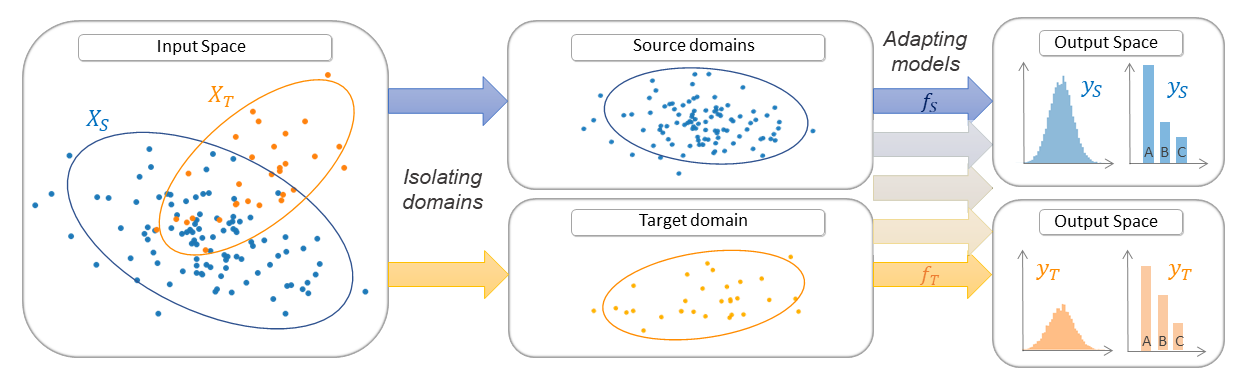

The ADAPT package is divided into three sub-modules, which contain the following domain adaptation methods:

Feature-based methods

- FE (Frustratingly Easy Domain Adaptation) [paper]

- mSDA (marginalized Stacked Denoising Autoencoder) [paper]

- DANN (Domain-Adversarial Neural Network) [paper]

- ADDA (Adversarial Discriminative Domain Adaptation) [paper]

- CORAL (CORrelation ALignment) [paper]

- DeepCORAL (Deep CORrelation ALignment) [paper]

- MCD (Maximum Classifier Discrepancy) [paper]

- MDD (Margin Disparity Discrepancy) [paper]

- WDGRL (Wasserstein Distance Guided Representation Learning) [paper]
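To give a feel for what a feature-based method does, here is a minimal NumPy sketch of the feature augmentation described in the Frustratingly Easy Domain Adaptation paper: each input is copied into a shared block and a domain-specific block. The function names `augment_source` and `augment_target` are hypothetical, and ADAPT's `FE` class may order or handle the blocks differently.

```python
import numpy as np


def augment_source(X):
    """Map source rows x to [x, x, 0]: shared block, source block, empty target block."""
    X = np.asarray(X, dtype=float)
    return np.hstack([X, X, np.zeros_like(X)])


def augment_target(X):
    """Map target rows x to [x, 0, x]: shared block, empty source block, target block."""
    X = np.asarray(X, dtype=float)
    return np.hstack([X, np.zeros_like(X), X])


if __name__ == "__main__":
    print(augment_source([[1, 2]]))  # [[1. 2. 1. 2. 0. 0.]]
    print(augment_target([[3, 4]]))  # [[3. 4. 0. 0. 3. 4.]]
```

Any standard estimator can then be trained on the stacked augmented source and target rows; the shared block captures what the two domains have in common, while the other blocks capture domain-specific effects.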

Instance-based methods

- KMM (Kernel Mean Matching) [paper]

- KLIEP (Kullback–Leibler Importance Estimation Procedure) [paper]

- TrAdaBoost (Transfer AdaBoost) [paper]

- TrAdaBoostR2 (Transfer AdaBoost for Regression) [paper]

- TwoStageTrAdaBoostR2 (Two Stage Transfer AdaBoost for Regression) [paper]
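Instance-based methods work by reweighting training instances. As an illustration, the sketch below shows one reweighting step in the style of the TrAdaBoost paper: misclassified source instances are down-weighted, while misclassified target instances are up-weighted. The function name `tradaboost_reweight` and its arguments are hypothetical; this is not ADAPT's implementation, and the clipping of the error is a guard added for this sketch.

```python
import numpy as np


def tradaboost_reweight(w_src, w_tgt, err_src, err_tgt, n_rounds):
    """One TrAdaBoost-style weight update.

    err_src and err_tgt hold the per-instance absolute errors |h(x) - y|
    of the current learner, each in [0, 1]. n_rounds is the total number
    of boosting rounds planned.
    """
    w_src = np.asarray(w_src, dtype=float)
    w_tgt = np.asarray(w_tgt, dtype=float)
    err_src = np.asarray(err_src, dtype=float)
    err_tgt = np.asarray(err_tgt, dtype=float)

    # Weighted error of the current learner, measured on target data only.
    eps = np.sum(w_tgt * err_tgt) / np.sum(w_tgt)
    # The update assumes an error below 1/2; clip to keep the sketch well defined.
    eps = np.clip(eps, 1e-10, 0.499)

    # Fixed shrink factor for source weights, round-dependent factor for target.
    beta_src = 1.0 / (1.0 + np.sqrt(2.0 * np.log(len(w_src)) / n_rounds))
    beta_tgt = eps / (1.0 - eps)

    new_src = w_src * beta_src ** err_src      # shrink misclassified source weights
    new_tgt = w_tgt * beta_tgt ** (-err_tgt)   # grow misclassified target weights
    return new_src, new_tgt


if __name__ == "__main__":
    w = np.full(4, 0.125)
    errors = [1, 0, 0, 0]  # only the first instance of each domain is misclassified
    new_src, new_tgt = tradaboost_reweight(w, w, errors, errors, n_rounds=10)
    print(new_src, new_tgt)
```

The returned weights are not normalized; in a full boosting loop they would be rescaled to sum to one before fitting the next learner.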

Parameter-based methods

- RegularTransferLR (Regular Transfer with Linear Regression) [paper]

- RegularTransferLC (Regular Transfer with Linear Classification) [paper]

- RegularTransferNN (Regular Transfer with Neural Network) [paper]
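Parameter-based methods adapt the parameters of a model trained on the source domain. A minimal sketch for the linear regression case, assuming the objective is the target squared error plus a penalty `lam` on the distance to the source coefficients, min ||X b - y||^2 + lam ||b - b_src||^2, which has the closed-form solution used below. The function name `regular_transfer_lr` is hypothetical, and ADAPT's `RegularTransferLR` may differ in details such as intercept handling.

```python
import numpy as np


def regular_transfer_lr(X_tgt, y_tgt, beta_src, lam):
    """Solve min_b ||X_tgt b - y_tgt||^2 + lam * ||b - beta_src||^2 in closed form."""
    X_tgt = np.asarray(X_tgt, dtype=float)
    y_tgt = np.asarray(y_tgt, dtype=float)
    beta_src = np.asarray(beta_src, dtype=float)
    n_features = X_tgt.shape[1]
    # Normal equations: (X^T X + lam I) b = X^T y + lam * beta_src
    lhs = X_tgt.T @ X_tgt + lam * np.eye(n_features)
    rhs = X_tgt.T @ y_tgt + lam * beta_src
    return np.linalg.solve(lhs, rhs)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(50, 2))
    y = X @ np.array([1.0, -2.0])   # noiseless target data
    beta_src = np.zeros(2)          # stand-in for coefficients fitted on source data
    print(regular_transfer_lr(X, y, beta_src, lam=0.0))   # ordinary least squares
    print(regular_transfer_lr(X, y, beta_src, lam=1e12))  # pulled toward beta_src
```

With `lam=0` the source coefficients are ignored, and as `lam` grows the solution moves toward `beta_src`, so `lam` controls how much the target model is allowed to depart from the source model.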

Acknowledgement

This work has been funded by Michelin and the Industrial Data Analytics and Machine Learning chair from ENS Paris-Saclay, Borelli center.