liuwei16 / Alfnet


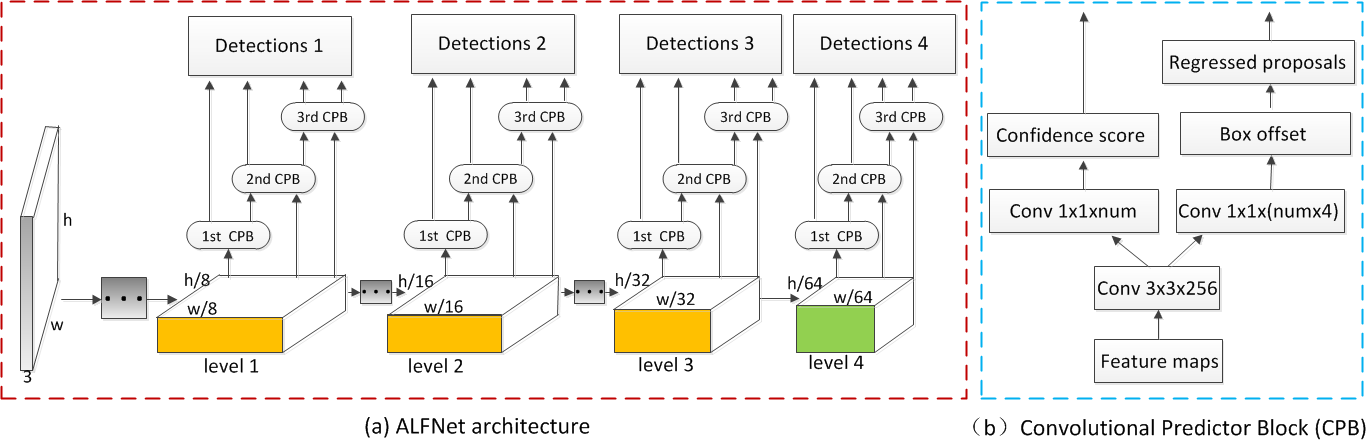

Learning Efficient Single-stage Pedestrian Detectors by Asymptotic Localization Fitting

Keras implementation of ALFNet, accepted at ECCV 2018.

Introduction

This paper is a step forward in pedestrian detection for both speed and accuracy. Specifically, a structurally simple but effective module called Asymptotic Localization Fitting (ALF) is proposed, which stacks a series of predictors to directly evolve the default anchor boxes, step by step, into increasingly accurate detection results. As a result, during training the later predictors receive more and higher-quality positive samples, while harder negatives can be mined with increasing IoU thresholds. On top of this, an efficient single-stage pedestrian detection architecture (denoted ALFNet) is designed, achieving state-of-the-art performance on CityPersons and Caltech. For more details, please refer to our paper.
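The effect of stacking predictors with increasing IoU thresholds can be illustrated with a toy NumPy sketch. It is not the ALFNet implementation: `toy_refine` is a hypothetical stand-in for a trained ALF predictor (it simply moves every box coordinate halfway toward the ground truth), and the per-step thresholds (0.3, 0.5, 0.7) are assumed for illustration only. The sketch labels boxes at each step, refines them, and reports how many positives each step gets and their mean IoU.

```python
import numpy as np

def iou(boxes, gt):
    """IoU between each row of `boxes` (N, 4) and one ground-truth box `gt` (4,).
    Boxes use (x1, y1, x2, y2) pixel coordinates."""
    x1 = np.maximum(boxes[:, 0], gt[0])
    y1 = np.maximum(boxes[:, 1], gt[1])
    x2 = np.minimum(boxes[:, 2], gt[2])
    y2 = np.minimum(boxes[:, 3], gt[3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area_boxes = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    area_gt = (gt[2] - gt[0]) * (gt[3] - gt[1])
    return inter / (area_boxes + area_gt - inter)

def toy_refine(boxes, gt, rate=0.5):
    """HYPOTHETICAL stand-in for a trained ALF predictor: moves every box
    coordinate a fraction `rate` of the way toward the ground truth."""
    return boxes + rate * (gt - boxes)

def alf_label_stats(anchors, gt, thresholds=(0.3, 0.5, 0.7)):
    """At each step, mark boxes with IoU >= that step's (assumed) threshold as
    positive, then refine all boxes before the next step.
    Returns a list of (num_positives, mean_positive_iou) per step."""
    boxes = anchors.astype(float)
    stats = []
    for thr in thresholds:
        overlaps = iou(boxes, gt)
        pos = overlaps >= thr
        mean_iou = float(overlaps[pos].mean()) if pos.any() else 0.0
        stats.append((int(pos.sum()), mean_iou))
        boxes = toy_refine(boxes, gt)
    return stats

# A pedestrian-shaped ground truth and four anchors shifted horizontally.
gt = np.array([0.0, 0.0, 40.0, 100.0])
anchors = np.array([[dx, 0, dx + 40, 100] for dx in (0, 10, 20, 30)], dtype=float)
stats = alf_label_stats(anchors, gt)
raw_pos_at_07 = int((iou(anchors, gt) >= 0.7).sum())
```

With the raw anchors only one box clears the 0.7 threshold, while after two toy refinement steps three boxes do, and the mean IoU of the positives rises at every step. This mirrors, in miniature, why the later predictors see more and better-quality positives.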

Dependencies

- Python 2.7

- NumPy

- TensorFlow 1.x

- Keras 2.0.6

- OpenCV


Installation

- Get the code. We will refer to the cloned directory as '$ALFNet'.

git clone https://github.com/liuwei16/ALFNet.git

- Install the requirements.

pip install -r requirements.txt

Preparation

- Download the dataset. We trained and tested our model on the CityPersons pedestrian detection dataset, so you should first download it. By default, we assume the dataset is stored in '$ALFNet/data/cityperson/'.

- Prepare the dataset. We have provided the cache files of the training and validation subsets. Optionally, you can also use ./generate_data.py to create the cache files for training and validation. By default, we assume the cache files are stored in '$ALFNet/data/cache/cityperson/'.

- Download the initialized models. We use ResNet-50 and MobileNet_v1 as backbones in our experiments. By default, we assume the weight files are stored in '$ALFNet/data/models/'.

Models

To help reproduce the results in our paper, we have provided models trained on the training subset with different ALF steps and backbone architectures:

- For ResNet-50:

ALFNet-1s: city_res50_1step.hdf5

ALFNet-2s: city_res50_2step.hdf5

ALFNet-3s: city_res50_3step.hdf5

- For MobileNet:

MobNet-1s: city_mobnet_1step.hdf5

MobNet-2s: city_mobnet_2step.hdf5
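If you script your experiments, the released checkpoints above can be kept in a small lookup table. This is a hypothetical sketch: the file names come from the list above, but the backbone keys 'resnet50' and 'mobilenet' are labels chosen here and may not match the values the repository expects in 'self.network'.

```python
# Released checkpoints listed in this README, keyed by (backbone, ALF steps).
# The backbone keys are hypothetical labels, not confirmed config values.
RELEASED_MODELS = {
    ('resnet50', 1): 'city_res50_1step.hdf5',
    ('resnet50', 2): 'city_res50_2step.hdf5',
    ('resnet50', 3): 'city_res50_3step.hdf5',
    ('mobilenet', 1): 'city_mobnet_1step.hdf5',
    ('mobilenet', 2): 'city_mobnet_2step.hdf5',
}

def released_model(backbone, steps):
    """Return the checkpoint file name for a backbone / ALF-step combination."""
    try:
        return RELEASED_MODELS[(backbone, steps)]
    except KeyError:
        raise ValueError('no released model for %s with %d ALF steps' % (backbone, steps))
```

The returned file would then be passed to Keras' `model.load_weights(...)` on a model built with the matching backbone and number of steps; note that no 3-step MobileNet model is listed.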

Training

Optionally, you can set the training parameters in ./keras_alfnet/config.py.
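The two parameters this section refers to in ./keras_alfnet/config.py, 'self.network' and 'self.steps', determine where trained weights end up. The sketch below is a hypothetical stand-in for that config object: the attribute names follow this README, but the default values and the exact directory naming ('2steps') are assumptions based on the '(network)/(num of)steps' pattern described here, and train.py may format the path differently.

```python
import os

class Config(object):
    """Hypothetical stand-in for the config in ./keras_alfnet/config.py.
    Only the attribute names come from the README; the defaults are assumptions."""
    def __init__(self):
        self.network = 'resnet50'  # backbone name (assumed value)
        self.steps = 2             # number of ALF steps

def output_dir(cfg, root='.'):
    """Default weight directory: $ALFNet/output/valmodels/(network)/(num of)steps."""
    return os.path.join(root, 'output', 'valmodels', cfg.network, '%dsteps' % cfg.steps)
```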

- Train with different backbone networks.

Run ./train.py to start training. You can modify the parameter 'self.network' in ./keras_alfnet/config.py to select a different backbone network. By default, the output weight files will be saved in '$ALFNet/output/valmodels/(network)/'.

- Train with different ALF steps.

Run ./train.py to start training. You can modify the parameter 'self.steps' in ./keras_alfnet/config.py to select a different number of ALF steps. By default, the output weight files will be saved in '$ALFNet/output/valmodels/(network)/(num of)steps'.

- Update: Train with weight moving average (WMA)

We also provide an example of training ALFNet-2s with WMA (./train_2step_wma.py).

WMA was first proposed in Mean Teacher.

We find that WMA helps achieve more stable results; the results of one trial are given in ./results_2step_wma.txt.
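The weight moving average used in Mean Teacher is an exponential moving average of the model weights, updated after every training step. The sketch below shows that update on plain NumPy arrays; it is not taken from ./train_2step_wma.py, and the decay `alpha` = 0.999 is an assumed default rather than the value the repository uses.

```python
import numpy as np

def update_wma(avg_weights, new_weights, alpha=0.999):
    """One Mean-Teacher-style moving-average step, per weight tensor:
    avg <- alpha * avg + (1 - alpha) * new.
    alpha = 0.999 is an assumed default, not a value from this repo."""
    return [alpha * a + (1.0 - alpha) * w for a, w in zip(avg_weights, new_weights)]

# NumPy arrays standing in for the list returned by a Keras model.get_weights().
avg = [np.zeros((2, 2)), np.zeros(3)]
for _ in range(10):
    current = [np.ones((2, 2)), np.ones(3)]  # pretend these are freshly trained weights
    avg = update_wma(avg, current, alpha=0.9)
# Starting from zero and tracking constant ones, each entry equals 1 - 0.9**10.
```

In a Keras training loop, one would initialize `avg = model.get_weights()`, call `avg = update_wma(avg, model.get_weights())` after each `model.train_on_batch(...)`, and load the averaged weights into a separate evaluation model with `set_weights(avg)` before testing. Since `get_weights()` also returns non-trainable tensors such as batch-norm statistics, this sketch averages those too.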

Test

Run ./test.py to get the detection results. By default, the output .txt files will be saved in '$ALFNet/output/valresults/(network)/(num of)steps'.

Evaluation

- Use the MATLAB script ./evaluation/dt_txt2json.m to convert the '.txt' files to '.json'.

- Run ./evaluation/eval_script/eval_demo.py to get the Miss Rate (MR) results of the models. By default, the models are evaluated under the Reasonable setting. Optionally, you can modify the parameters in ./evaluation/eval_script/eval_MR_multisetup.py to evaluate the models under different settings, such as different occlusion levels and IoU thresholds.
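Pedestrian benchmarks such as Caltech and CityPersons usually summarize the miss-rate curve as the log-average miss rate: the miss rate is sampled at nine FPPI (false positives per image) values evenly spaced in log space between 10^-2 and 10^0, and the samples are averaged geometrically. The sketch below illustrates that standard metric under stated assumptions; it is not the code in eval_MR_multisetup.py. It assumes `fppi` is sorted in increasing order, and it prepends a (0 FPPI, miss rate 1.0) point so that reference FPPI values below the curve's start count as complete misses.

```python
import numpy as np

def log_average_miss_rate(fppi, miss_rate, num_refs=9):
    """Geometric mean of the miss rate sampled at `num_refs` FPPI values
    evenly spaced in log space over [1e-2, 1e0].
    Assumes `fppi` is increasing and aligned with `miss_rate`."""
    # Assumed convention: with no detections, FPPI is 0 and the miss rate is 1.
    fppi = np.concatenate([[0.0], np.asarray(fppi, dtype=float)])
    miss_rate = np.concatenate([[1.0], np.asarray(miss_rate, dtype=float)])
    refs = np.logspace(-2.0, 0.0, num_refs)
    sampled = np.empty(num_refs)
    for i, ref in enumerate(refs):
        # Miss rate at the largest FPPI not exceeding the reference value;
        # never empty thanks to the prepended 0.
        idx = np.where(fppi <= ref)[0][-1]
        sampled[i] = miss_rate[idx]
    # Clamp to avoid log(0) for a perfect detector.
    return float(np.exp(np.mean(np.log(np.maximum(sampled, 1e-10)))))
```

For example, a curve whose only point is (FPPI 0.5, MR 0.2) scores 0.2 at the two reference values 10^-0.25 and 10^0 and 1.0 at the other seven, giving 0.2^(2/9).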

Citation

If you find our work useful in your research, please consider citing:

@InProceedings{Liu_2018_ECCV,
  author = {Liu, Wei and Liao, Shengcai and Hu, Weidong and Liang, Xuezhi and Chen, Xiao},
  title = {Learning Efficient Single-stage Pedestrian Detectors by Asymptotic Localization Fitting},
  booktitle = {The European Conference on Computer Vision (ECCV)},
  month = {September},
  year = {2018}
}