One Policy to Control Them All: Shared Modular Policies for Agent-Agnostic Control

ICML 2020

[Project Page] [Paper] [Demo Video] [Long Oral Talk]

Wenlong Huang¹, Igor Mordatch², Deepak Pathak³ ⁴

¹University of California, Berkeley, ²Google Brain, ³Facebook AI Research, ⁴Carnegie Mellon University

This is a PyTorch-based implementation of our Shared Modular Policies. Conventional RL trains a separate policy for each agent, which is laborious. We instead explore learning general-purpose controllers for diverse robotic systems: our approach trains a single policy across a wide variety of agents, and that policy can then generalize to unseen agent shapes at test time without any further training.

If you find this work useful in your research, please cite using the following BibTeX:

@inproceedings{huang2020smp,
  Author = {Huang, Wenlong and Mordatch, Igor and Pathak, Deepak},
  Title = {One Policy to Control Them All: Shared Modular Policies for Agent-Agnostic Control},
  Booktitle = {ICML},
  Year = {2020}
}

Setup

Requirements

- Python-3.6

- PyTorch-1.1.0

- CUDA-9.0

- CUDNN-7.6

- MuJoCo-200: download binaries, put license file inside, and add path to .bashrc

Setting up repository

git clone https://github.com/huangwl18/modular-rl.git

cd modular-rl/

python3.6 -m venv mrEnv

source $PWD/mrEnv/bin/activate

Installing Dependencies

pip install --upgrade pip

pip install -r requirements.txt

Running Code

| Flags and Parameters | Description |
|---|---|
| --morphologies <List of STRING> | Find existing environments matching each keyword for training (e.g. walker, hopper, humanoid, and cheetah; see examples below) |
| --custom_xml <PATH> | Path to custom xml file for training the modular policy. When <PATH> is a file, train with that xml morphology only. When <PATH> is a directory, train on all xml morphologies found in the directory. |
| --td | Enable top-down message passing (pass --td --bu for both-way message passing) |
| --bu | Enable bottom-up message passing (pass --td --bu for both-way message passing) |
| --expID <INT> | Experiment ID for creating saving directory |
| --seed <INT> | (Optional) Seed for Gym, PyTorch and Numpy |
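
The flags above could be declared with argparse roughly as follows. This is a minimal sketch based only on the table: the `build_parser` helper and all defaults are assumptions, and the repository's actual argument handling may differ.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the flags in the table above."""
    parser = argparse.ArgumentParser(description="Shared modular policy flags (sketch)")
    # One or more keywords, e.g. --morphologies walker hopper
    parser.add_argument("--morphologies", nargs="*", type=str, default=[])
    # A single xml file or a directory of xml files
    parser.add_argument("--custom_xml", type=str, default=None)
    # Message-passing switches: off unless the flag is given
    parser.add_argument("--td", action="store_true")
    parser.add_argument("--bu", action="store_true")
    # Parsed as an integer per the table, so "001" becomes 1 in this sketch
    parser.add_argument("--expID", type=int, default=0)
    # Default seed value is an assumption
    parser.add_argument("--seed", type=int, default=0)
    return parser


args = build_parser().parse_args(
    ["--expID", "001", "--td", "--bu", "--morphologies", "walker"]
)
print(args.expID, args.td, args.bu, args.morphologies)
```

Using `store_true` for --td and --bu matches the table's description of the flags as switches: leaving a flag off the command line disables that direction of message passing.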

Train with existing environment

- Train both-way SMP on Walker++ (12 variants of walker):

python main.py --expID 001 --td --bu --morphologies walker

- Train both-way SMP on Humanoid++ (8 variants of 2d humanoid):

python main.py --expID 002 --td --bu --morphologies humanoid

- Train both-way SMP on Cheetah++ (15 variants of cheetah):

python main.py --expID 003 --td --bu --morphologies cheetah

- Train both-way SMP on Hopper++ (3 variants of hopper):

python main.py --expID 004 --td --bu --morphologies hopper

- To train both-way SMP for only one environment (e.g. walker_7_main), specify the full name of the environment without the .xml suffix:

python main.py --expID 005 --td --bu --morphologies walker_7_main
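
One way to picture how a keyword such as walker expands to several environments, while a full name selects just one, is substring matching against the available environment names. The sketch below assumes that rule and uses a hypothetical `match_environments` helper; the repository's actual lookup may differ.

```python
def match_environments(keywords, available):
    """Return environment names containing any keyword (assumed substring rule)."""
    matched = []
    for name in available:
        if any(keyword in name for keyword in keywords) and name not in matched:
            matched.append(name)
    return matched


# A few of the provided environment names, without the .xml suffix.
available = [
    "walker_6_main",
    "walker_7_main",
    "walker_7_flipped",
    "hopper_3",
    "humanoid_2d_9_full",
]

print(match_environments(["walker"], available))
print(match_environments(["walker_7_main"], available))
```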

To run with one-way message passing, omit --td for bottom-up-only message passing or omit --bu for top-down-only message passing.

To run without any message passing, omit both --td and --bu.
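
To illustrate what the two flags toggle, here is a toy sketch of bottom-up and top-down message passing over a limb tree. It is not the repository's implementation: the tree, the `run_message_passing` helper, and the scalar sum-based messages are all assumptions chosen for readability, whereas the real policy is a trained network.

```python
def run_message_passing(children, root, obs, bu=False, td=False):
    """Toy message passing on a tree; returns one output value per node.

    children: dict mapping node -> list of child nodes
    obs: dict mapping node -> scalar local observation
    """
    up = {}  # bottom-up message each node sends to its parent

    def bottom_up(node):
        # A node's upward message is its observation plus its children's messages.
        total = obs[node]
        for child in children.get(node, []):
            total += bottom_up(child)
        up[node] = total
        return total

    if bu:
        bottom_up(root)

    out = {}

    def top_down(node, msg_from_parent):
        # Each node combines its local information with the parent's message.
        local = up[node] if bu else obs[node]
        out[node] = local + msg_from_parent
        for child in children.get(node, []):
            # A message is forwarded downward only when top-down passing is on.
            top_down(child, out[node] if td else 0)

    top_down(root, 0)
    return out


children = {"torso": ["left_leg", "right_leg"], "left_leg": ["left_foot"]}
obs = {"torso": 1, "left_leg": 2, "right_leg": 3, "left_foot": 4}

print(run_message_passing(children, "torso", obs))                    # no message passing
print(run_message_passing(children, "torso", obs, bu=True))           # bottom-up only
print(run_message_passing(children, "torso", obs, td=True))           # top-down only
print(run_message_passing(children, "torso", obs, bu=True, td=True))  # both-way
```

With neither flag, every node sees only its own observation. With bottom-up passing the torso aggregates information from the leaves, and with top-down passing the leaves receive information that originated at the torso.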

Train with custom environment

- Train both-way SMP for only one environment:

python main.py --expID 006 --td --bu --custom_xml <PATH_TO_XML_FILE>

- Train both-way SMP for multiple environments (xml files must be in the same directory):

python main.py --expID 007 --td --bu --custom_xml <PATH_TO_XML_DIR>
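
The file-versus-directory behavior of --custom_xml can be sketched with pathlib. The `collect_xml_files` helper is hypothetical, and the sketch assumes that only files ending in .xml directly inside the directory are used (no recursion into subdirectories).

```python
from pathlib import Path


def collect_xml_files(custom_xml):
    """Return the xml files selected by a --custom_xml style path (sketch)."""
    path = Path(custom_xml)
    if path.is_file():
        # A single file: train on that morphology only.
        return [path]
    if path.is_dir():
        # A directory: use every .xml file directly inside it, in sorted order.
        return sorted(p for p in path.iterdir() if p.is_file() and p.suffix == ".xml")
    raise FileNotFoundError(f"No such file or directory: {custom_xml}")
```

Sorting the directory listing keeps the order of morphologies stable between runs, which may matter when comparing experiments across seeds.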

Note that the current implementation assumes all custom MuJoCo agents are 2D planar and contain only one body tag with name torso attached to worldbody.
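
A quick pre-flight check for the torso constraint might look like the following. It runs the standard-library XML parser on MuJoCo-style markup; `has_single_torso_body` is a hypothetical helper, the example model is made up for illustration, and the check covers only the body-tag constraint, not whether the agent is planar.

```python
import xml.etree.ElementTree as ET


def has_single_torso_body(xml_text):
    """Check that worldbody has exactly one direct body child, named torso."""
    root = ET.fromstring(xml_text)
    worldbody = root.find("worldbody")
    if worldbody is None:
        return False
    bodies = worldbody.findall("body")  # direct children only, not nested limbs
    return len(bodies) == 1 and bodies[0].get("name") == "torso"


# Illustrative model: one torso under worldbody, with a nested limb.
example = """
<mujoco>
  <worldbody>
    <geom name="floor" type="plane" size="40 40 40"/>
    <body name="torso">
      <body name="thigh"/>
    </body>
  </worldbody>
</mujoco>
"""
print(has_single_torso_body(example))
```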

Visualization

- To visualize all walker environments with the both-way SMP model from experiment expID 001:

python visualize.py --expID 001 --td --bu --morphologies walker

- To visualize only the walker_7_main environment with the both-way SMP model from experiment expID 001:

python visualize.py --expID 001 --td --bu --morphologies walker_7_main

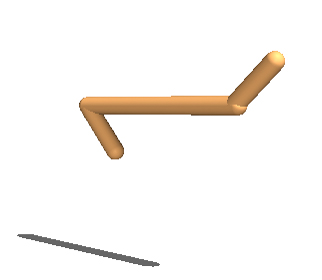

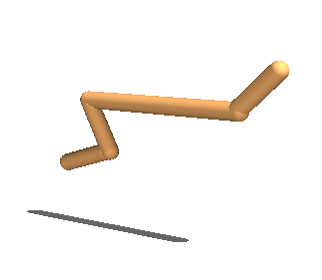

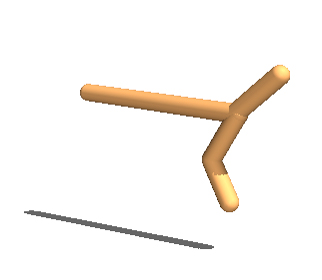

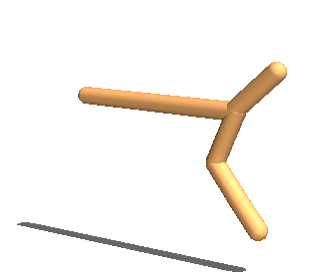

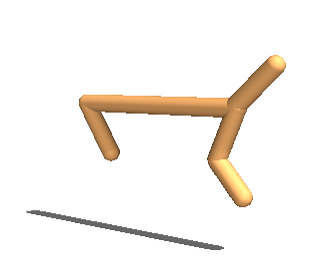

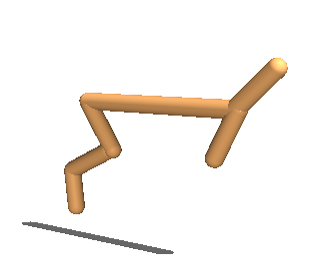

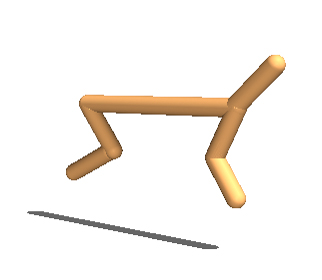

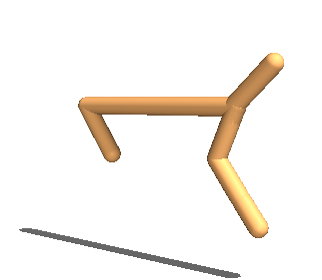

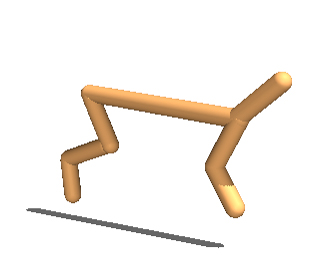

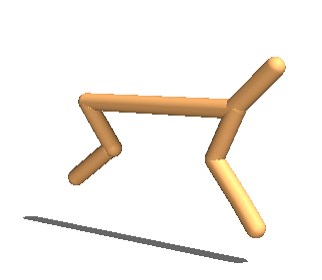

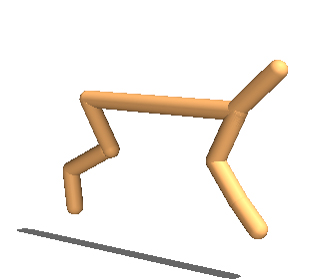

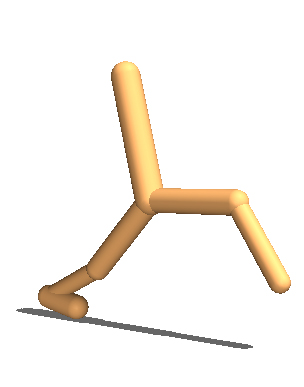

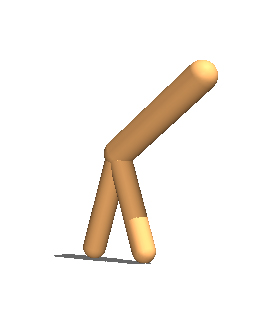

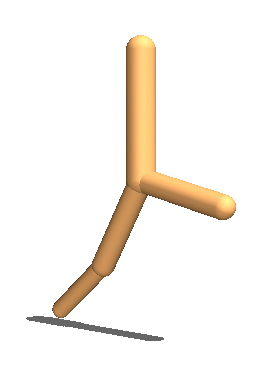

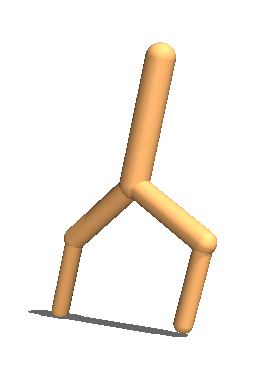

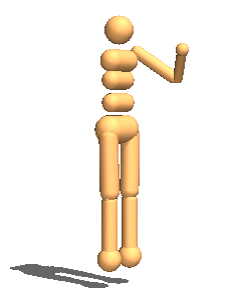

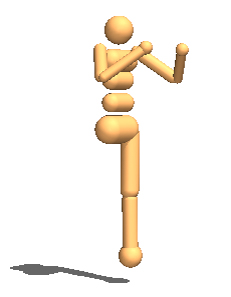

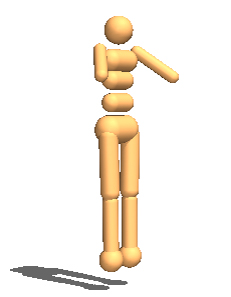

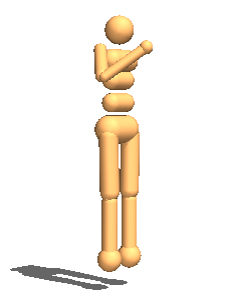

Provided Environments

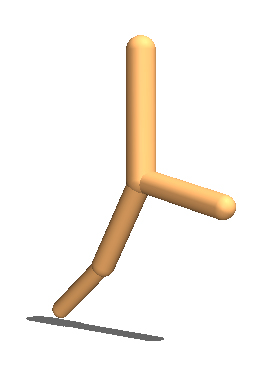

| Walker | | | | | |
|---|---|---|---|---|---|
| walker_2_main | walker_3_main | walker_4_main | walker_5_main | walker_6_main | walker_7_main |
| walker_2_flipped | walker_3_flipped | walker_4_flipped | walker_5_flipped | walker_6_flipped | walker_7_flipped |

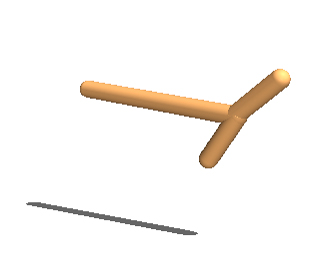

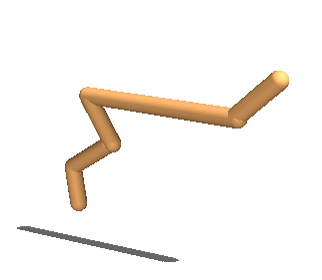

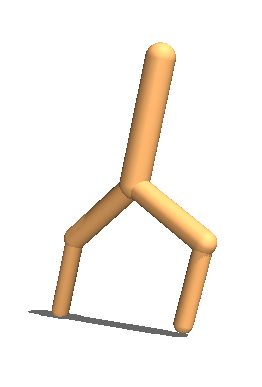

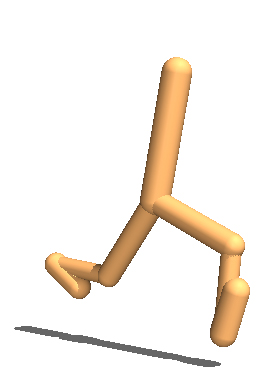

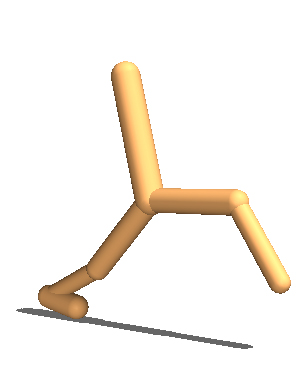

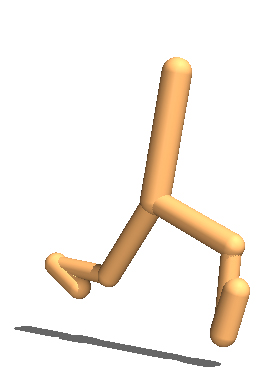

| 2D Humanoid | | | |
|---|---|---|---|
| humanoid_2d_7_left_arm | humanoid_2d_7_left_leg | humanoid_2d_7_lower_arms | humanoid_2d_7_right_arm |
| humanoid_2d_7_right_leg | humanoid_2d_8_left_knee | humanoid_2d_8_right_knee | humanoid_2d_9_full |

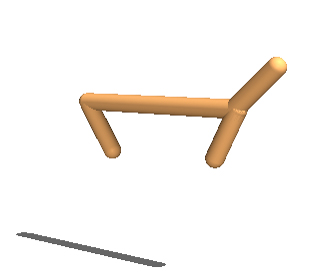

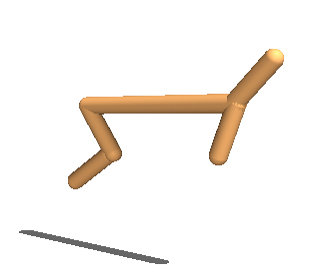

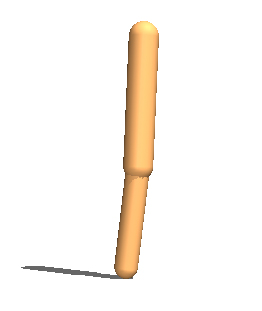

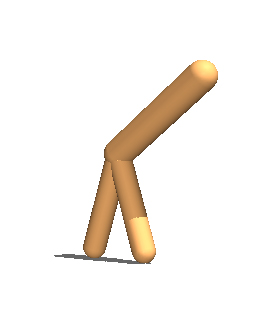

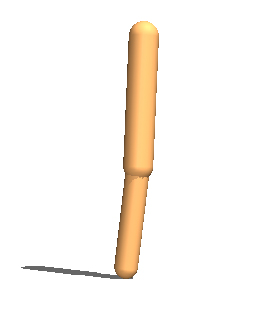

| Hopper | | |
|---|---|---|
| hopper_3 | hopper_4 | hopper_5 |

Note that each walker agent has an identical instance of itself called flipped, for which SMP always flips the torso message passed to both legs (e.g. the message that is passed to the left leg in the main instance is now passed to the right leg).

Acknowledgement

The TD3 code is based on this open-source implementation. The code for Dynamic Graph Neural Networks is adapted from Modular Assemblies (Pathak*, Lu* et al., NeurIPS 2019).