jnhwkim / Ban Vqa

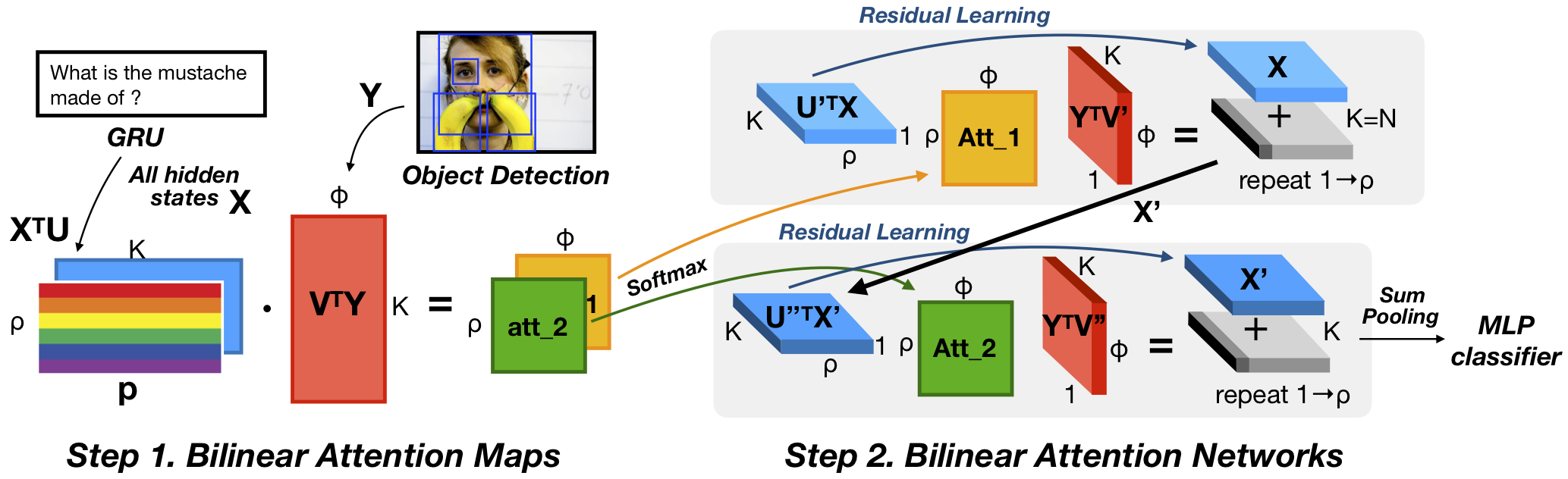

Bilinear Attention Networks

This repository is the implementation of Bilinear Attention Networks for the visual question answering and Flickr30k Entities tasks.

For the visual question answering task, our single model achieved 70.35 and an ensemble of 15 models achieved 71.84 (Test-standard, VQA 2.0). For the Flickr30k Entities task, our single model achieved 69.88 / 84.39 / 86.40 for Recall@1, 5, and 10, respectively (slightly better than the original paper). For details, please refer to our technical report.

This repository is based on and inspired by @hengyuan-hu's work. We sincerely thank them for sharing the code.

Updates

- Bilinear attention networks using torch.einsum, backward-compatible. (12 Mar 2019)
- Now compatible with PyTorch v1.0.1. (12 Mar 2019)
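As a rough illustration of how a bilinear attention map can be written with einsum, here is a minimal sketch. It is not the repository's implementation: the function names, shapes, and the choice to omit nonlinearities and batching are assumptions made for brevity. NumPy is used so the sketch runs without a GPU; torch.einsum accepts the same equation strings.

```python
import numpy as np


def bilinear_attention(x_proj, y_proj, p):
    """Attention over every (object, token) pair.

    x_proj: (num_objects, K) projected image features (assumed shape)
    y_proj: (num_tokens, K) projected question features (assumed shape)
    p:      (K,) pooling vector
    """
    # logits[i, j] = sum_k p[k] * x_proj[i, k] * y_proj[j, k]
    logits = np.einsum("k,ik,jk->ij", p, x_proj, y_proj)
    # Softmax over all pairs, shifted by the maximum for numerical stability.
    weights = np.exp(logits - logits.max())
    return weights / weights.sum()


def attended_feature(x_proj, y_proj, attention):
    """Joint feature: out[k] = sum_ij attention[i, j] * x_proj[i, k] * y_proj[j, k]."""
    return np.einsum("ik,ij,jk->k", x_proj, attention, y_proj)


# Toy inputs: 5 image objects, 3 question tokens, rank K = 8.
rng = np.random.default_rng(0)
x_proj = rng.standard_normal((5, 8))
y_proj = rng.standard_normal((3, 8))
p = rng.standard_normal(8)

att = bilinear_attention(x_proj, y_proj, p)
feat = attended_feature(x_proj, y_proj, att)
print(att.shape, feat.shape)
```

The caller decides whether the two functions receive the same projections or separate ones; the sketch takes no position on that.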

Prerequisites

You may need a machine with 4 GPUs, 64GB memory, and PyTorch v1.0.1 for Python 3.

WARNING: do not use PyTorch v1.0.0, which has a bug that degrades model performance.
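A small guard like the following could catch the problematic release before a long training run. This is only a sketch: the helper name is hypothetical, and it assumes the installed version string may carry a local build suffix after a "+".

```python
def is_supported_torch(version: str) -> bool:
    """Return False only for the release the warning above singles out."""
    # Drop a local build suffix if present (assumed form: "1.0.0+<tag>").
    base = version.split("+")[0]
    return base != "1.0.0"


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
    else:
        if is_supported_torch(torch.__version__):
            print("PyTorch", torch.__version__, "is not the release warned against")
        else:
            print("PyTorch v1.0.0 detected; please install v1.0.1 instead")
```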

VQA

Preprocessing

Our implementation uses the pretrained features from bottom-up-attention (the adaptive 10-100 features per image) as well as the GloVe vectors. For simplicity, the scripts described below take care of downloading and processing them.

All data should be downloaded to a data/ directory in the root directory of this repository.

The easiest way to download the data is to run the provided script tools/download.sh from the repository root. If the script does not work, it should be easy to examine the script and modify the steps outlined in it according to your needs. Then run tools/process.sh from the repository root to process the data to the correct format.
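The two steps above can also be driven from Python, for example inside a larger setup script. This is a hedged sketch: it assumes the scripts can be invoked with bash and that creating data/ beforehand is harmless; the function name and the injectable run parameter are illustrative only.

```python
import subprocess
from pathlib import Path

# Script paths as named in this README, in the order they must run.
STEPS = ["tools/download.sh", "tools/process.sh"]


def prepare_data(repo_root=".", run=subprocess.run):
    """Run the download and processing scripts from the repository root."""
    root = Path(repo_root)
    # All data is expected under data/ in the repository root.
    (root / "data").mkdir(exist_ok=True)
    for script in STEPS:
        # check=True stops at the first failing step instead of continuing.
        run(["bash", script], cwd=root, check=True)


# Usage, from the repository root:
# prepare_data()
```

Passing run as a parameter keeps the function easy to dry-run with a stub before launching the real downloads.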

For now, you need to manually download the data for the options below (used in our best single model).

We use a part of the Visual Genome dataset for data augmentation. The image metadata and the question answers of Version 1.2 need to be placed in data/.

We use MS COCO captions to extract semantically connected words for the extended word embeddings, along with the questions of VQA 2.0 and Visual Genome. You can download them here. Since the contribution of these captions is minor, you can skip the processing of MS COCO captions by removing the cap elements from the target option in this line.

The counting module (Zhang et al., 2018) is integrated in this repository as counting.py for your convenience. The source repository can be found in @Cyanogenoid's vqa-counting.

Training

$ python3 main.py --use_both True --use_vg True

to start training (the two options enable training on the train/val splits and on Visual Genome, respectively). The training and validation scores are printed every epoch, and the best model is saved under the directory "saved_models". The default hyperparameters should give you the best single-model result, which is around 70.04 on the test-dev split.

Validation

If you trained a model with the training split using

$ python3 main.py

then you can run evaluate.py with appropriate options to evaluate its score for the validation split.

Pretrained model

We provide the pretrained model reported as the best single model in the paper (70.04 for test-dev, 70.35 for test-standard).

Please download the model from the link and move it to saved_models/ban/model_epoch12.pth (you may encounter a redirection page to confirm). The training log can be found here.

$ python3 test.py --label mytest

The result json file will be found in the directory results/.
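Before uploading a result file, a quick sanity check can help. The sketch below assumes the file is a JSON list of objects with question_id and answer keys, which is the format the VQA evaluation server expects; the README does not confirm the exact layout or file name, so treat both as assumptions. The function name is hypothetical.

```python
import json
from pathlib import Path


def summarize_results(path):
    """Return (number of entries, number of entries missing a required key)."""
    entries = json.loads(Path(path).read_text())
    # Assumed schema: each entry carries "question_id" and "answer".
    incomplete = [
        e for e in entries if "question_id" not in e or "answer" not in e
    ]
    return len(entries), len(incomplete)


# Usage (the file name under results/ is a placeholder):
# total, bad = summarize_results("results/<your_result_file>.json")
```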

Without Visual Genome augmentation

Without the Visual Genome augmentation, we get 69.50 (the average of 8 models, with a standard deviation of 0.096) on the test-dev split. We use the 8-glimpse model, a learning rate starting at 0.001 (please see this change for better results), 13 epochs, and a batch size of 256.

Flickr30k Entities

Preprocessing

You have to manually download the Annotation and Sentence files to data/flickr30k/Flickr30kEntities.tar.gz. Then run the provided scripts tools/download_flickr.sh and tools/process_flickr.sh from the root of this repository, as in the VQA case. Note that the image features of Flickr30k were generated using the bottom-up-attention pretrained model.

Training

$ python3 main.py --task flickr --out saved_models/flickr

to start training. The --gamma option is not applied. The default hyperparameters should give you approximately 69.6 for Recall@1 on the test split.
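For readers unfamiliar with the metric, here is a minimal sketch of Recall@k for phrase grounding. It assumes the common Flickr30k Entities convention that a phrase counts as correctly grounded when one of the top-k predicted boxes overlaps the ground-truth box with IoU of at least 0.5; the repository's own evaluation code may differ in details, and all names here are illustrative.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def recall_at_k(ranked_boxes, gt_boxes, k, threshold=0.5):
    """Fraction of phrases with a matching box among their top-k predictions.

    ranked_boxes: per phrase, predicted boxes sorted by descending score
    gt_boxes:     per phrase, a single ground-truth box
    """
    hits = sum(
        any(iou(box, gt) >= threshold for box in ranked[:k])
        for ranked, gt in zip(ranked_boxes, gt_boxes)
    )
    return hits / len(gt_boxes)


# Two toy phrases: the first is grounded at rank 1, the second only at rank 2.
ranked = [
    [(0, 0, 10, 10), (20, 20, 30, 30)],
    [(50, 50, 60, 60), (0, 0, 10, 10)],
]
gt = [(0, 0, 10, 10), (0, 0, 9, 10)]
print(recall_at_k(ranked, gt, k=1), recall_at_k(ranked, gt, k=2))
```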

Validation

Please download the model from the link and move it to saved_models/flickr/model_epoch5.pth (you may encounter a redirection page to confirm).

$ python3 evaluate.py --task flickr --input saved_models/flickr --epoch 5

to evaluate the scores for the test split.
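Both pretrained checkpoints mentioned in this README follow the pattern model_epoch<N>.pth inside the output directory. A small helper such as the one below can confirm the file is in place before running evaluation; the helper names are hypothetical, and the naming pattern is inferred from the two paths given here rather than from the code.

```python
from pathlib import Path


def checkpoint_path(out_dir, epoch):
    """Build the expected checkpoint path, e.g. saved_models/flickr/model_epoch5.pth."""
    return Path(out_dir) / f"model_epoch{epoch}.pth"


def require_checkpoint(out_dir, epoch):
    """Return the checkpoint path, or raise if the file has not been placed yet."""
    path = checkpoint_path(out_dir, epoch)
    if not path.is_file():
        raise FileNotFoundError(f"expected a checkpoint at {path}")
    return path


# Usage, from the repository root:
# require_checkpoint("saved_models/flickr", 5)
```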

Troubleshooting

Please check the troubleshooting wiki and the previous issue history.

Citation

If you use this code as part of any published research, we'd really appreciate it if you could cite the following paper:

@inproceedings{Kim2018,
  author = {Kim, Jin-Hwa and Jun, Jaehyun and Zhang, Byoung-Tak},
  booktitle = {Advances in Neural Information Processing Systems 31},
  title = {{Bilinear Attention Networks}},
  pages = {1571--1581},
  year = {2018}
}

License

MIT License