DongjunLee / Transformer Tensorflow

TensorFlow implementation of 'Attention Is All You Need (2017. 6)'

Stars: ✭ 319

Programming Languages

python

Projects that are alternatives of or similar to Transformer Tensorflow

Keras Transformer

Transformer implemented in Keras

Stars: ✭ 273 (-14.42%)

Mutual labels: translation, attention, transformer

Njunmt Tf

An open-source neural machine translation system developed by Natural Language Processing Group, Nanjing University.

Stars: ✭ 97 (-69.59%)

Mutual labels: translation, attention, transformer

seq2seq-pytorch

Sequence to Sequence Models in PyTorch

Stars: ✭ 41 (-87.15%)

Mutual labels: transformer, attention

TRAR-VQA

[ICCV 2021] TRAR: Routing the Attention Spans in Transformers for Visual Question Answering -- Official Implementation

Stars: ✭ 49 (-84.64%)

Mutual labels: transformer, attention

CrabNet

Predict materials properties using only the composition information!

Stars: ✭ 57 (-82.13%)

Mutual labels: transformer, attention

Sockeye

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Stars: ✭ 990 (+210.34%)

Mutual labels: translation, transformer

Onnxt5

Summarization, translation, sentiment-analysis, text-generation and more at blazing speed using a T5 version implemented in ONNX.

Stars: ✭ 143 (-55.17%)

Mutual labels: translation, transformer

learningspoons

nlp lecture-notes and source code

Stars: ✭ 29 (-90.91%)

Mutual labels: transformer, attention

Pytorch Seq2seq

Tutorials on implementing a few sequence-to-sequence (seq2seq) models with PyTorch and TorchText.

Stars: ✭ 3,418 (+971.47%)

Mutual labels: attention, transformer

visualization

a collection of visualization function

Stars: ✭ 189 (-40.75%)

Mutual labels: transformer, attention

Relation-Extraction-Transformer

NLP: Relation extraction with position-aware self-attention transformer

Stars: ✭ 63 (-80.25%)

Mutual labels: transformer, attention

Visual-Transformer-Paper-Summary

Summary of Transformer applications for computer vision tasks.

Stars: ✭ 51 (-84.01%)

Mutual labels: transformer, attention

Rust Bert

Rust native ready-to-use NLP pipelines and transformer-based models (BERT, DistilBERT, GPT2,...)

Stars: ✭ 510 (+59.87%)

Mutual labels: translation, transformer

Pytorch Transformer

pytorch implementation of Attention is all you need

Stars: ✭ 199 (-37.62%)

Mutual labels: translation, transformer

Transformer

A TensorFlow Implementation of the Transformer: Attention Is All You Need

Stars: ✭ 3,646 (+1042.95%)

Mutual labels: translation, transformer

h-transformer-1d

Implementation of H-Transformer-1D, Hierarchical Attention for Sequence Learning

Stars: ✭ 121 (-62.07%)

Mutual labels: transformer, attention

Self Attention Cv

Implementation of various self-attention mechanisms focused on computer vision. Ongoing repository.

Stars: ✭ 209 (-34.48%)

Mutual labels: attention, transformer

transformer

A PyTorch Implementation of "Attention Is All You Need"

Stars: ✭ 28 (-91.22%)

Mutual labels: transformer, attention

pynmt

a simple and complete pytorch implementation of neural machine translation system

Stars: ✭ 13 (-95.92%)

Mutual labels: translation, transformer

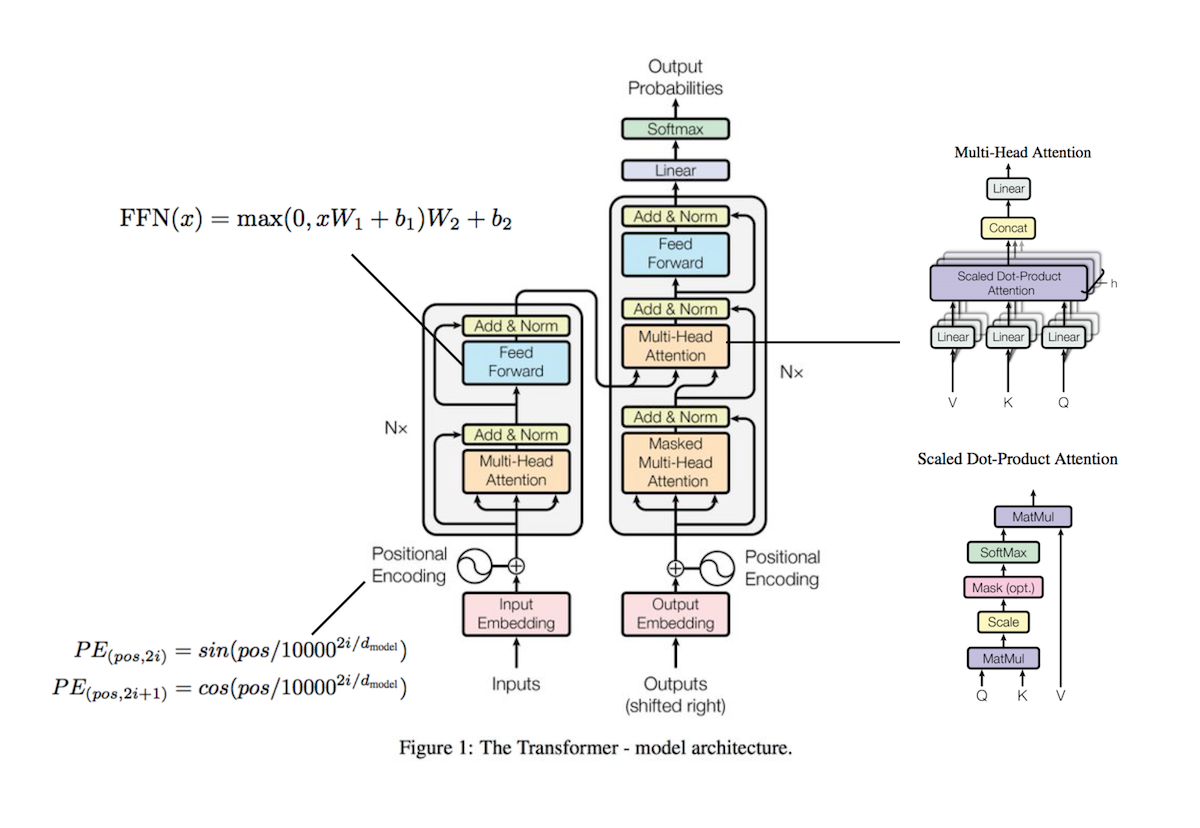

transformer

TensorFlow implementation of Attention Is All You Need. (2017. 6)

Requirements

- Python 3.6

- TensorFlow 1.8

- hb-config (Singleton Config)

- nltk (tokenizer and BLEU score)

- tqdm (progress bar)

- Slack Incoming Webhook URL
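The Slack Incoming Webhook URL is used to send a notification after training. As a rough sketch of what such a notification could look like (this is not the repository's own code; the function name `build_slack_request`, the message text, and the placeholder URL are assumptions), a webhook call is a JSON `POST` with a `text` field:

```python
import json
import urllib.request


def build_slack_request(webhook_url, text):
    """Build (but do not send) a POST request for a Slack Incoming Webhook."""
    payload = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder URL (assumption); a real one comes from the Slack app settings.
request = build_slack_request(
    "https://hooks.slack.com/services/XXX/YYY/ZZZ",
    "check_tiny: training finished",
)
print(request.get_method(), request.data.decode("utf-8"))
# To actually send the message: urllib.request.urlopen(request)
```

The request is only built here, so the sketch runs without network access.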

Project Structure

Project initialized with hb-base

.
├── config                  # Config files (.yml, .json) used with hb-config
├── data                    # dataset path
├── notebooks               # Prototyping with numpy or tf.InteractiveSession
├── transformer             # transformer architecture graphs (from input to logits)
│   ├── __init__.py         # Graph logic
│   ├── attention.py        # Attention (multi-head, scaled_dot_product, etc.)
│   ├── encoder.py          # Encoder logic
│   ├── decoder.py          # Decoder logic
│   └── layer.py            # Layers (FFN)
├── data_loader.py          # raw_data -> processed_data -> generate_batch (using Dataset)
├── hook.py                 # training or test hook feature (e.g. print_variables)
├── main.py                 # define experiment_fn
└── model.py                # define EstimatorSpec

Reference : hb-config, Dataset, experiments_fn, EstimatorSpec
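For orientation, the scaled dot-product attention that `attention.py` is described as implementing can be prototyped in NumPy, in the spirit of the `notebooks` directory. This is a minimal sketch of the formula from the paper, softmax(QK^T / sqrt(d_k))V, not the repository's TensorFlow code; the function names and the tensor shapes are illustrative assumptions.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)


def scaled_dot_product_attention(q, k, v, mask=None):
    """Compute softmax(q k^T / sqrt(d_k)) v.

    q: (..., len_q, d_k), k: (..., len_k, d_k), v: (..., len_k, d_v)
    mask: optional boolean array broadcastable to (..., len_q, len_k);
          positions where mask is False are excluded from attention.
    """
    d_k = q.shape[-1]
    scores = np.matmul(q, np.swapaxes(k, -1, -2)) / np.sqrt(d_k)
    if mask is not None:
        scores = np.where(mask, scores, -1e9)
    weights = softmax(scores, axis=-1)
    return np.matmul(weights, v), weights


# Illustrative shapes (assumption): 4 heads, d_k = 5, d_v = 6.
rng = np.random.default_rng(0)
q = rng.normal(size=(4, 3, 5))   # (heads, len_q, d_k)
k = rng.normal(size=(4, 7, 5))   # (heads, len_k, d_k)
v = rng.normal(size=(4, 7, 6))   # (heads, len_k, d_v)
output, weights = scaled_dot_product_attention(q, k, v)
print(output.shape, weights.shape)
```

Passing a lower-triangular boolean `mask` gives the causal masking a decoder needs, since masked positions receive zero attention weight.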

Todo

- Train and evaluate with 'WMT German-English (2016)' dataset

Config

The config file controls the entire experimental environment.

example: check-tiny.yml

data:
  base_path: 'data/'
  raw_data_path: 'tiny_kor_eng'
  processed_path: 'tiny_processed_data'
  word_threshold: 1

  PAD_ID: 0
  UNK_ID: 1
  START_ID: 2
  EOS_ID: 3

model:
  batch_size: 4
  num_layers: 2
  model_dim: 32
  num_heads: 4
  linear_key_dim: 20
  linear_value_dim: 24
  ffn_dim: 30
  dropout: 0.2

train:
  learning_rate: 0.0001
  optimizer: 'Adam'  # one of 'Adagrad', 'Adam', 'Ftrl', 'Momentum', 'RMSProp', 'SGD'

  train_steps: 15000
  model_dir: 'logs/check_tiny'

  save_checkpoints_steps: 1000
  check_hook_n_iter: 100
  min_eval_frequency: 100

  print_verbose: True
  debug: False

slack:
  webhook_url: ""  # notifies you via Slack webhook after training

- debug mode: uses tfdbg

- check-tiny is a data set of about 30 sentences translated from Korean into English. (Reading through it is recommended :) )
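The model values in the check-tiny example interact: with multi-head attention, the projected key and value dimensions are typically divided across the heads. The sketch below works through that arithmetic for the values shown above. The even-split requirement and the helper name `per_head_dims` are assumptions based on the paper's convention, not something this README states.

```python
# Model values copied from the check-tiny example.
model = {
    "num_layers": 2,
    "model_dim": 32,
    "num_heads": 4,
    "linear_key_dim": 20,
    "linear_value_dim": 24,
    "ffn_dim": 30,
}


def per_head_dims(cfg):
    """Return (key_dim, value_dim) per head, assuming an even split across heads."""
    heads = cfg["num_heads"]
    for name in ("linear_key_dim", "linear_value_dim"):
        if cfg[name] % heads != 0:
            raise ValueError(
                f"{name}={cfg[name]} is not divisible by num_heads={heads}"
            )
    return cfg["linear_key_dim"] // heads, cfg["linear_value_dim"] // heads


key_dim, value_dim = per_head_dims(model)
print(key_dim, value_dim)  # 5 6
```

Under this assumption each of the 4 heads works with 5-dimensional keys and 6-dimensional values, so changing `num_heads` requires picking key and value dimensions that remain divisible by it.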

Usage

Install requirements.

pip install -r requirements.txt

Then, pre-process raw data.

python data_loader.py --config check-tiny

Finally, train and evaluate the model.

python main.py --config check-tiny --mode train_and_evaluate

Or, you can use IWSLT'15 English-Vietnamese dataset.

sh prepare-iwslt15.en-vi.sh # download dataset

python data_loader.py --config iwslt15-en-vi # preprocessing

python main.py --config iwslt15-en-vi --mode train_and_evaluate # start training
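To give a feel for what the pre-processing step produces, here is a small sketch of the `raw_data -> processed_data -> generate_batch` flow using the special IDs from the check-tiny config. It is not the code in `data_loader.py`: whitespace tokenization, the token strings `<pad>`, `<unk>`, `<s>`, `</s>`, the reading of `word_threshold` as a minimum word count, and all function names are assumptions made for illustration.

```python
from collections import Counter

# Special token IDs copied from the check-tiny config.
PAD_ID, UNK_ID, START_ID, EOS_ID = 0, 1, 2, 3


def build_vocab(sentences, word_threshold=1):
    """Map each word seen at least `word_threshold` times to an integer ID."""
    counts = Counter(word for s in sentences for word in s.split())
    vocab = {"<pad>": PAD_ID, "<unk>": UNK_ID, "<s>": START_ID, "</s>": EOS_ID}
    for word, count in sorted(counts.items()):
        if count >= word_threshold:
            vocab[word] = len(vocab)
    return vocab


def encode(sentence, vocab):
    """Convert a sentence to IDs (unknown words become UNK_ID) and append EOS_ID."""
    return [vocab.get(w, UNK_ID) for w in sentence.split()] + [EOS_ID]


def pad_batch(id_lists):
    """Right-pad every sequence with PAD_ID to the longest length in the batch."""
    max_len = max(len(ids) for ids in id_lists)
    return [ids + [PAD_ID] * (max_len - len(ids)) for ids in id_lists]


corpus = ["hello .", "nice to meet you ."]
vocab = build_vocab(corpus, word_threshold=1)
batch = pad_batch([encode(s, vocab) for s in corpus + ["hello there"]])
print(batch)
```

The word `there` is not in the toy vocabulary, so it is encoded as `UNK_ID`, and shorter sentences are filled with `PAD_ID` so the batch is rectangular.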

Predict

After training, you can test the model.

- command

python predict.py --config {config} --src {src_sentence}

- example

$ python predict.py --config check-tiny --src "안녕하세요. 반갑습니다."

------------------------------------

Source: 안녕하세요. 반갑습니다.

> Result: Hello . I'm glad to see you . <\s> vectors . <\s> Hello locations . <\s> will . <\s> . <\s> you . <\s>
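In the example output the decoder keeps emitting tokens after the first `<\s>` marker. If only the first sentence is wanted, the result can be cut at that marker. The helper below is a hypothetical post-processing sketch, not part of `predict.py`, and it assumes that everything after the first marker is decoding residue.

```python
EOS_TOKEN = r"<\s>"  # end-of-sentence marker as printed in the example output


def truncate_at_eos(tokens, eos=EOS_TOKEN):
    """Return the tokens that precede the first end-of-sentence marker."""
    if eos in tokens:
        return tokens[:tokens.index(eos)]
    return tokens


raw = r"Hello . I'm glad to see you . <\s> vectors . <\s> Hello locations . <\s>"
print(" ".join(truncate_at_eos(raw.split())))
```

If no marker is present, the token list is returned unchanged.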

Experiment modes

✅ : Working

◽️ : Not tested yet.

- ✅ evaluate: Evaluate on the evaluation data.

- ◽️ extend_train_hooks: Extends the hooks for training.

- ◽️ reset_export_strategies: Resets the export strategies with the new_export_strategies.

- ◽️ run_std_server: Starts a TensorFlow server and joins the serving thread.

- ◽️ test: Tests training, evaluating and exporting the estimator for a single step.

- ✅ train: Fit the estimator using the training data.

- ✅ train_and_evaluate: Interleaves training and evaluation.

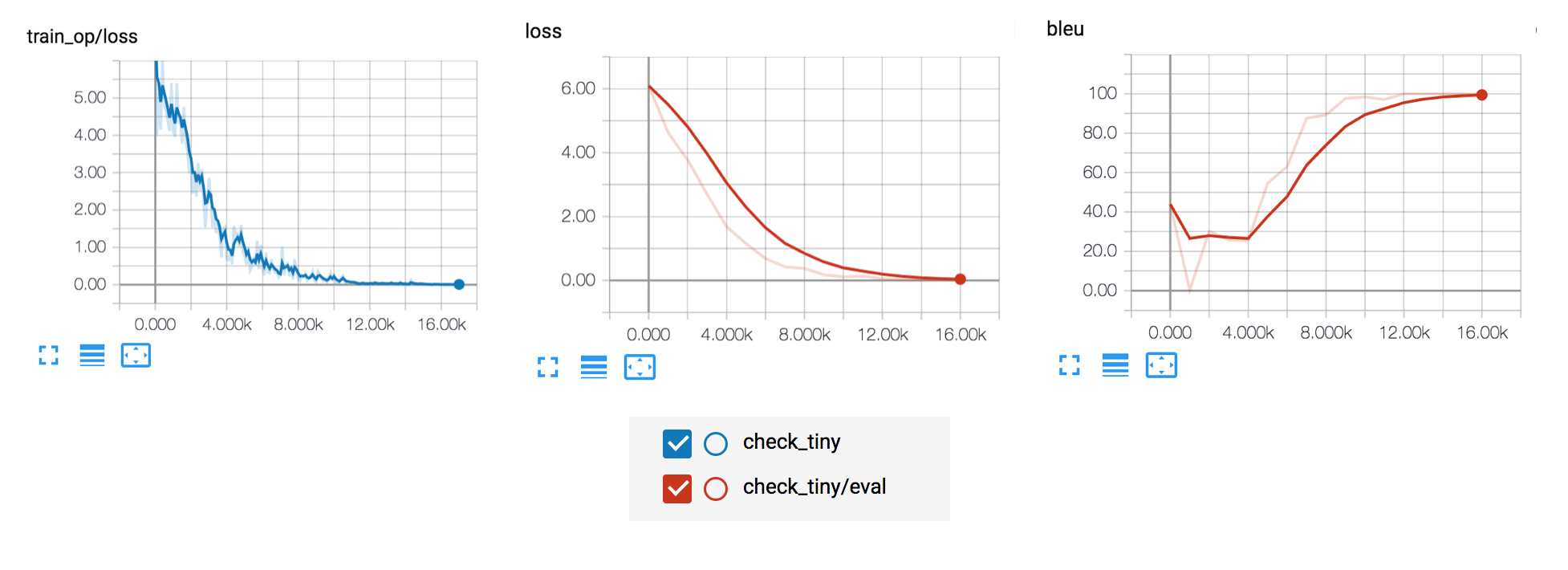

TensorBoard

tensorboard --logdir logs

- check-tiny example

Reference

- hb-research/notes - Attention Is All You Need

- Paper - Attention Is All You Need (2017. 6) by A Vaswani (Google Brain Team)

- tensor2tensor - A library for generalized sequence to sequence models (official code)

Author

Dongjun Lee ([email protected])
