MorvanZhou / Nlp Tutorials


Natural Language Processing Tutorial

A Chinese version of this tutorial is available at mofanpy.com.

This repo includes many simple implementations of models in Natural Language Processing (NLP).

The code in this tutorial is organized as follows:

- Search Engine

- Understand Word (W2V)

- Understand Sentence (Seq2Seq)

- All about Attention

- Pretrained Models

Thanks to @W1Fl for contributing simplified Keras code in simple_realize.

Installation

$ git clone https://github.com/MorvanZhou/NLP-Tutorials

$ cd NLP-Tutorials/

$ sudo pip3 install -r requirements.txt

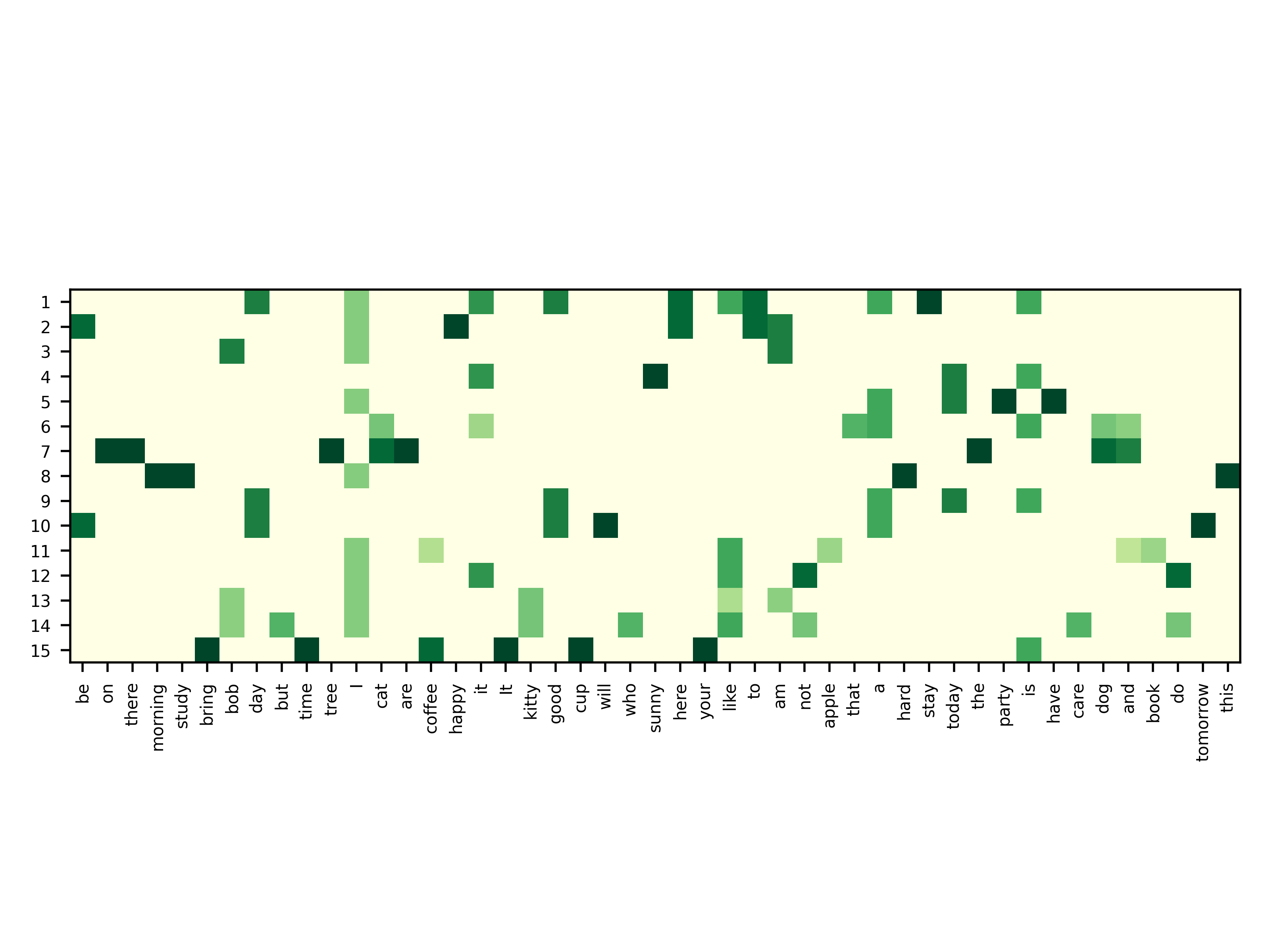

TF-IDF

TF-IDF numpy code

TF-IDF short sklearn code
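
For readers who want a feel for the idea before opening the linked files, here is a minimal NumPy sketch of TF-IDF retrieval. It is not the repo's implementation: the toy corpus, the `search` helper, and the smoothed IDF variant are all assumptions chosen for illustration.

```python
import numpy as np

# Toy corpus, made up for illustration (not taken from the repo).
docs = [
    "it is a good day",
    "it is a bad day",
    "good good study",
]

tokenized = [d.split() for d in docs]
vocab = sorted({w for doc in tokenized for w in doc})
w2i = {w: i for i, w in enumerate(vocab)}

# Term frequency: word counts per document, normalised by document length.
counts = np.zeros((len(docs), len(vocab)))
for i, doc in enumerate(tokenized):
    for w in doc:
        counts[i, w2i[w]] += 1
tf = counts / counts.sum(axis=1, keepdims=True)

# Inverse document frequency with add-one smoothing (one common variant, assumed here).
df = (counts > 0).sum(axis=0)
idf = np.log((1 + len(docs)) / (1 + df)) + 1

tfidf = tf * idf  # shape: (n_docs, n_vocab)


def search(query, top_k=2):
    """Hypothetical helper: rank documents by cosine similarity to the query."""
    q = np.zeros(len(vocab))
    for w in query.split():
        if w in w2i:  # words outside the vocabulary are ignored
            q[w2i[w]] += 1
    if q.sum() == 0:
        return []
    q = q / q.sum() * idf
    scores = tfidf @ q / (np.linalg.norm(tfidf, axis=1) * np.linalg.norm(q))
    order = np.argsort(-scores)[:top_k]
    return [(int(i), float(scores[i])) for i in order]
```

Calling `search("good study")` returns document indices with their scores, best match first. In practice the sklearn route (`TfidfVectorizer`) is shorter, but its exact weighting may differ from this sketch.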

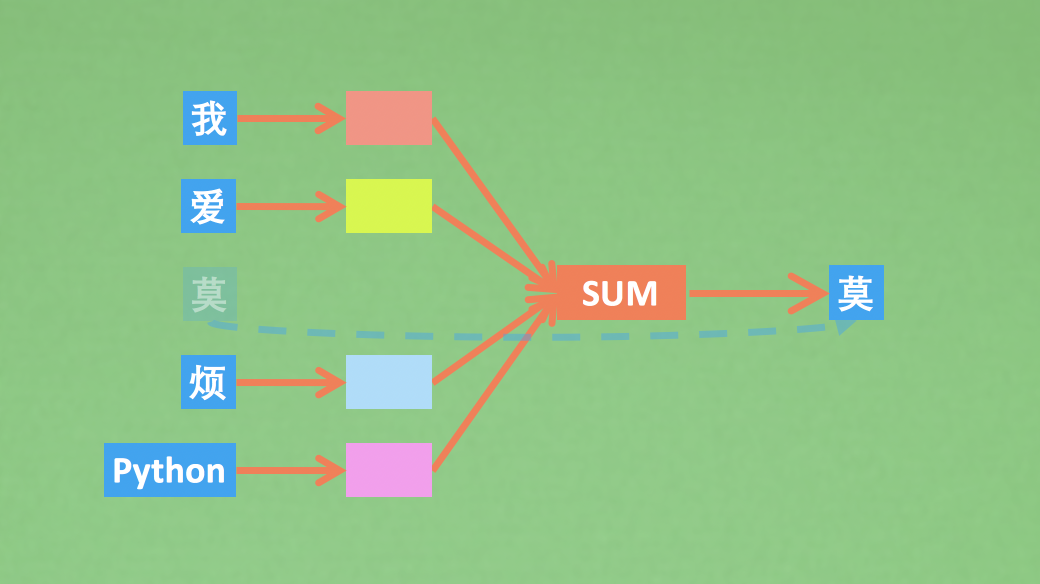

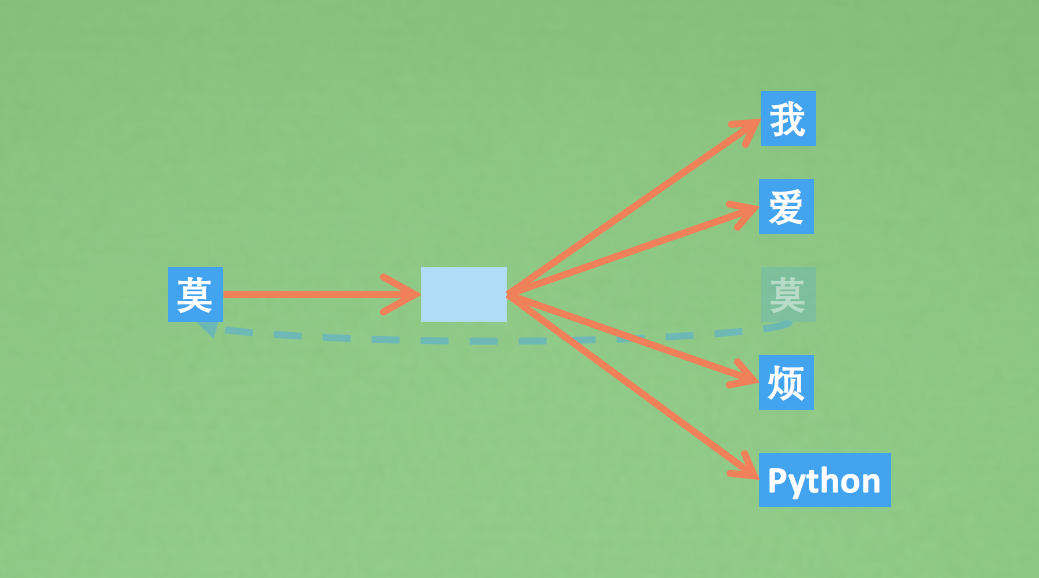

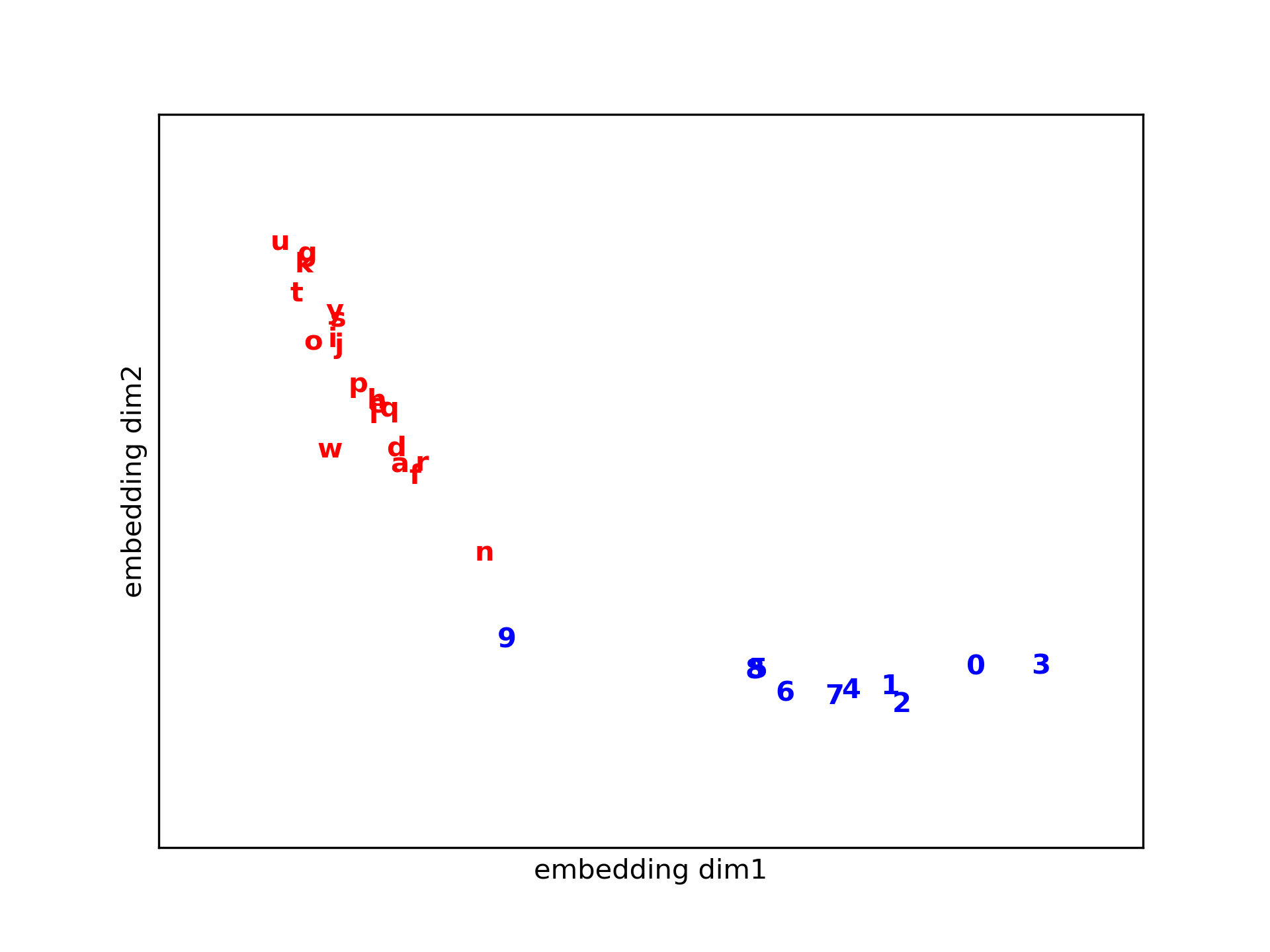

Word2Vec

Efficient Estimation of Word Representations in Vector Space

Skip-Gram code

CBOW code
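
The two Word2Vec variants differ mainly in how training examples are built from a sliding window. The sketch below shows that difference in plain Python. The function names and the handling of windows at sentence edges are assumptions for illustration and may not match the linked code.

```python
def skip_gram_pairs(tokens, window=2):
    """Skip-Gram: each centre word predicts every word in its window.

    Returns a list of (centre, context) pairs.
    """
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def cbow_pairs(tokens, window=2):
    """CBOW: the words in the window jointly predict the centre word.

    Returns a list of (context_words, centre) pairs. Windows are truncated
    at the sentence edges here (an assumption; some implementations skip
    incomplete windows instead).
    """
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        pairs.append((context, center))
    return pairs
```

For example, with `window=1` the sentence `["a", "b", "c"]` yields four Skip-Gram pairs but only three CBOW examples, since CBOW produces one example per centre word.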

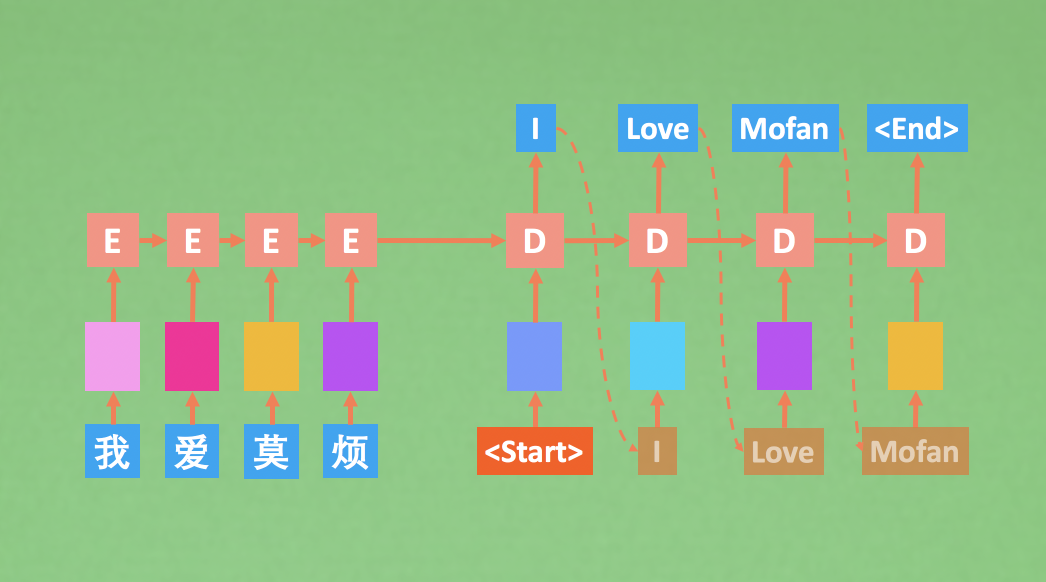

Seq2Seq

Sequence to Sequence Learning with Neural Networks

Seq2Seq code

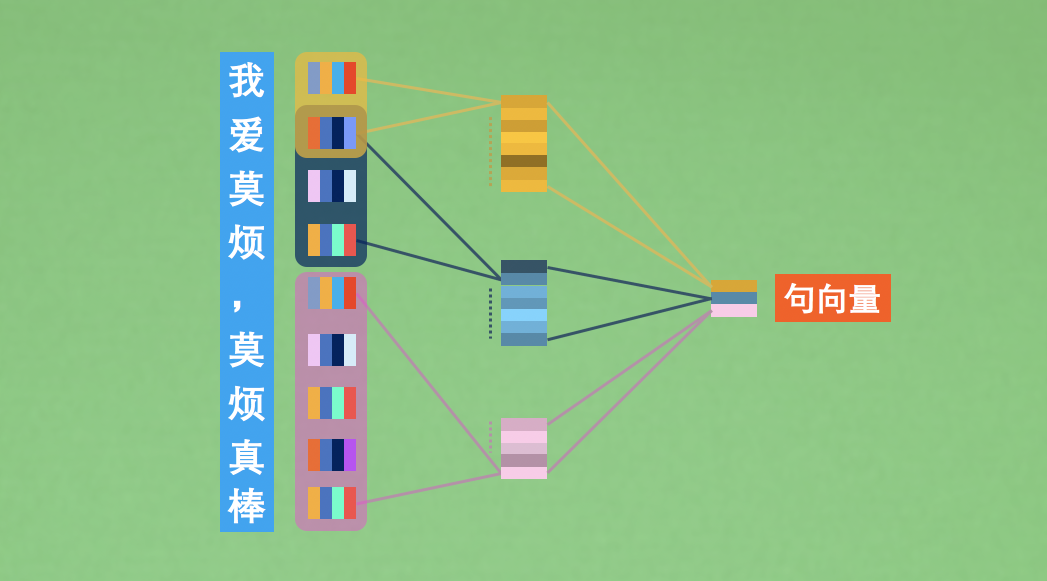

CNNLanguageModel

Convolutional Neural Networks for Sentence Classification

CNN language model code

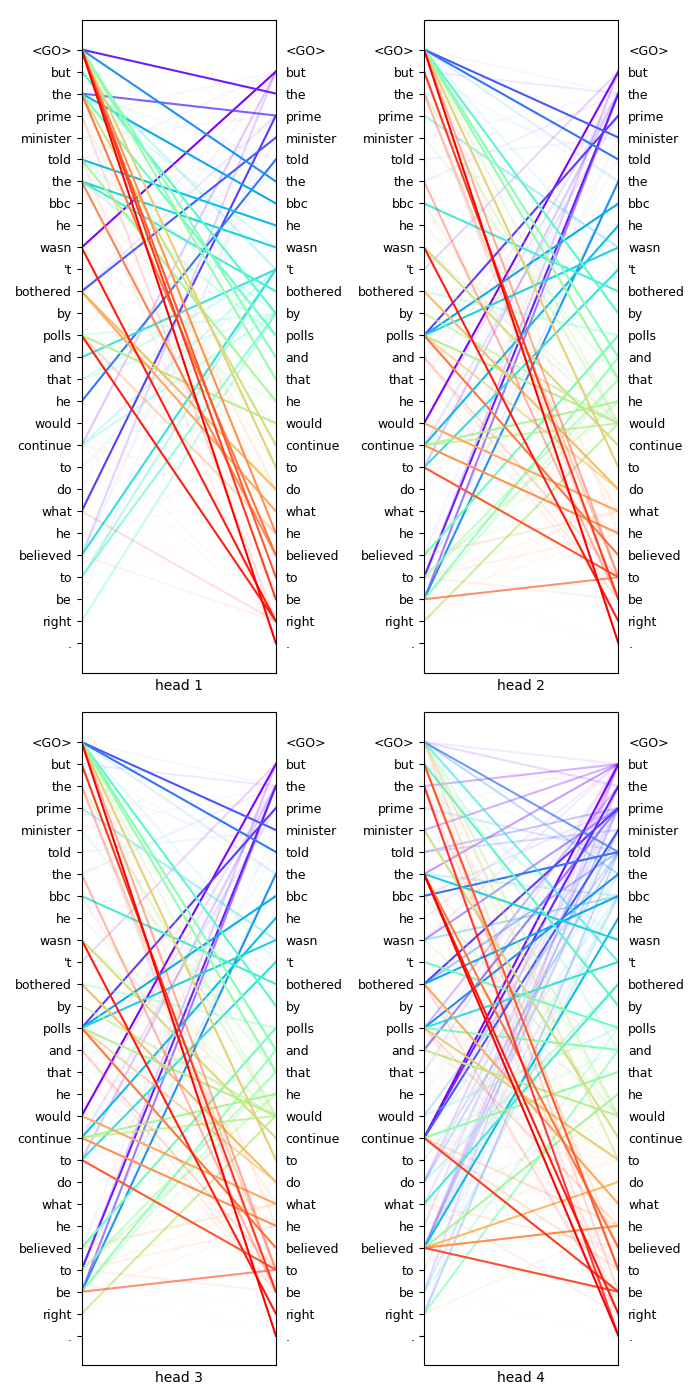

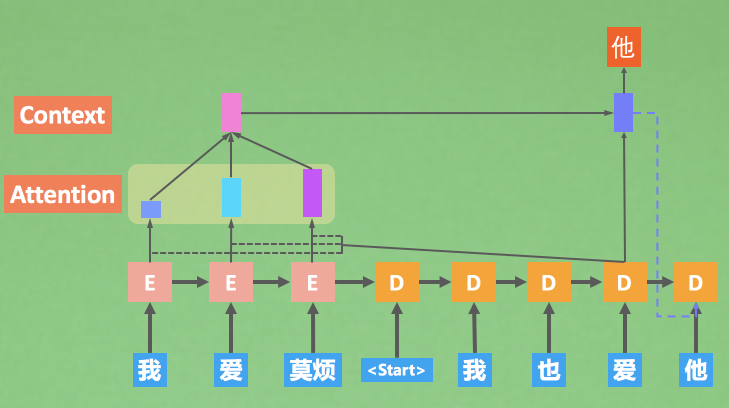

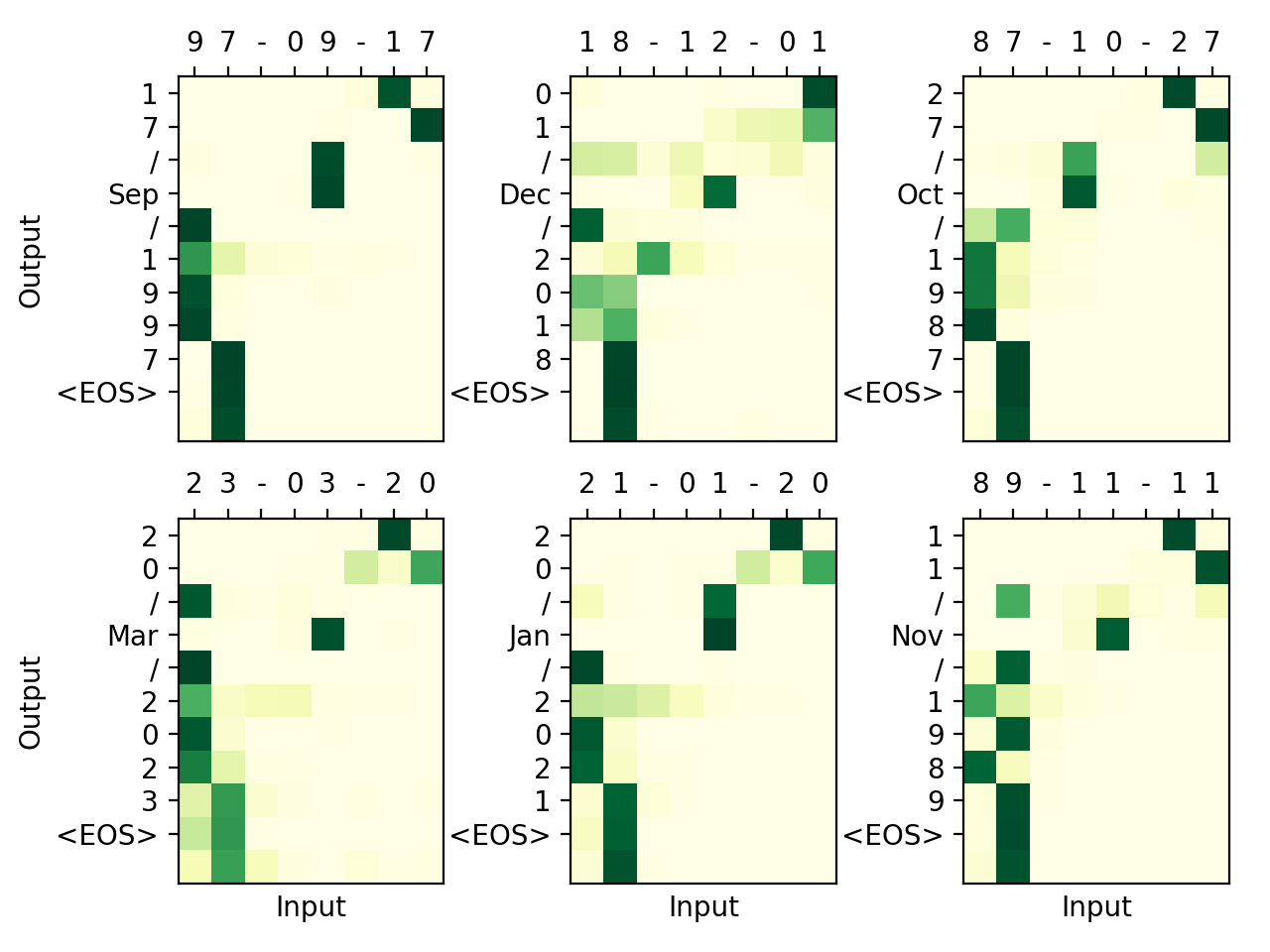

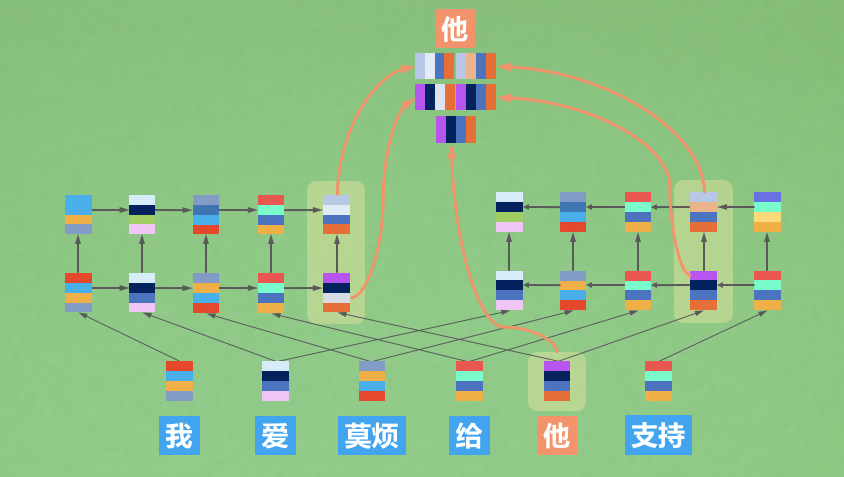

Seq2SeqAttention

Effective Approaches to Attention-based Neural Machine Translation

Seq2Seq Attention code

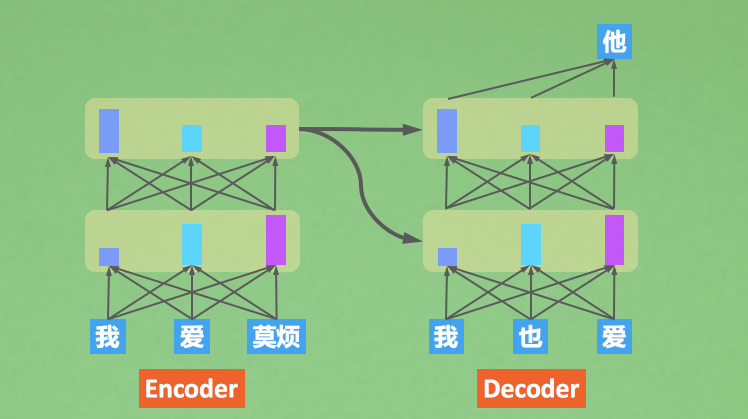

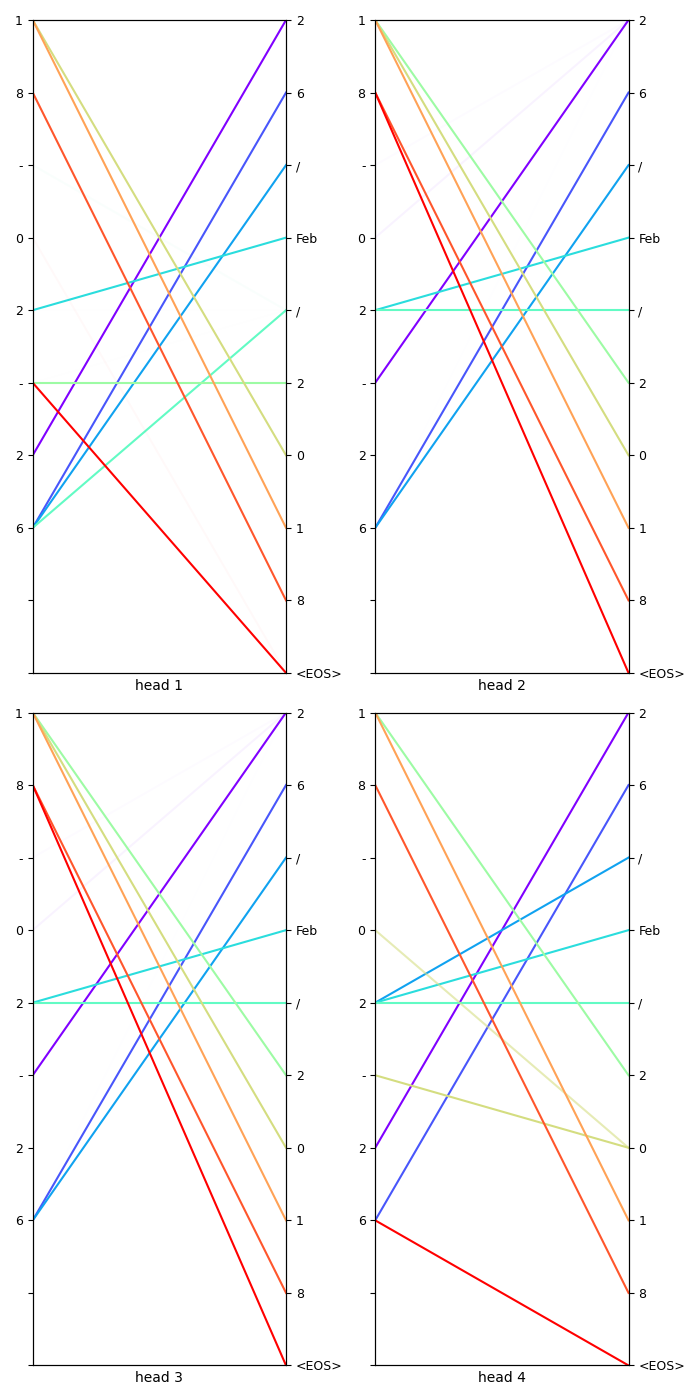

Transformer

Transformer code
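
The core operation of the Transformer is scaled dot-product attention. The following NumPy sketch illustrates that single step only, without multiple heads, learned projections, or training. The function names and the boolean mask convention (`True` means "may attend") are assumptions, not the repo's API.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(q, k, v, mask=None):
    """q, k, v: arrays of shape (..., seq_len, d_k).

    mask: optional boolean array broadcastable to (..., seq_len, seq_len);
    positions where mask is False receive (effectively) zero weight.
    """
    d_k = q.shape[-1]
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)
    if mask is not None:
        scores = np.where(mask, scores, -1e9)
    weights = softmax(scores, axis=-1)
    return weights @ v, weights
```

A causal (look-ahead) mask for a decoder can be built with `np.tril(np.ones((seq_len, seq_len), dtype=bool))`, which lets each position attend only to itself and earlier positions.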

ELMO

Deep contextualized word representations

ELMO code

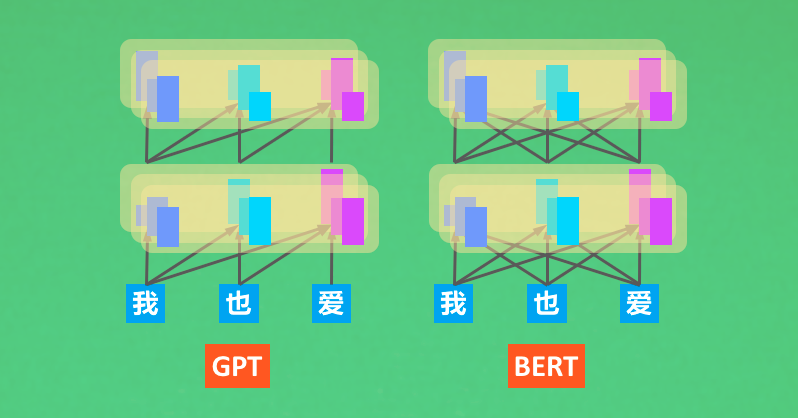

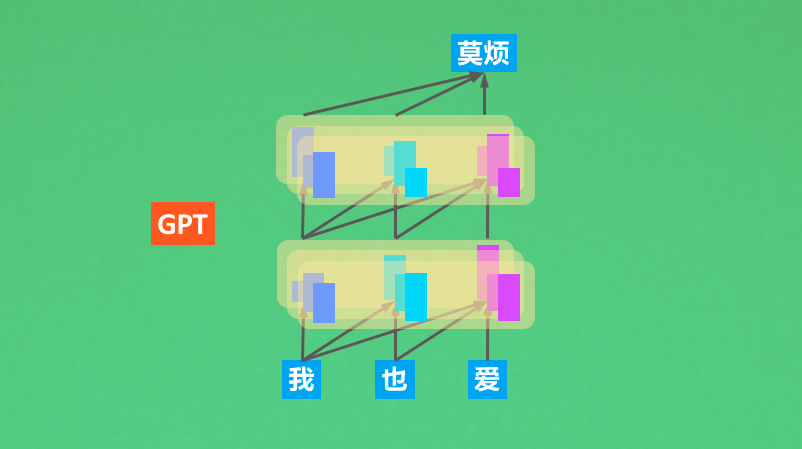

GPT

Improving Language Understanding by Generative Pre-Training

GPT code

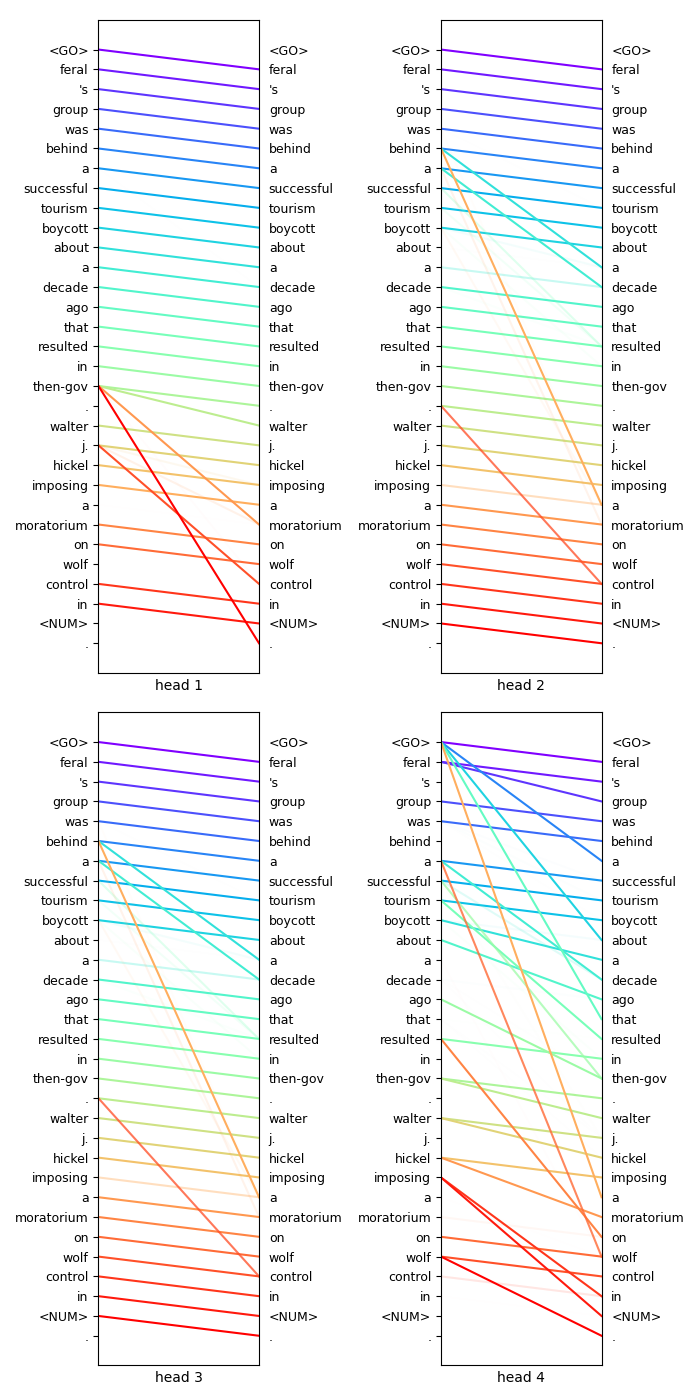

BERT

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

BERT code

My new attempt: BERT with window mask