cnn-quantization

Dependencies

- python3.x

- pytorch

- torchvision to load the datasets, perform image transforms

- pandas for logging to csv

- bokeh for training visualization

- scikit-learn for kmeans clustering

- mlflow for logging

- tqdm for progress

HW requirements

NVIDIA GPU / cuda support

Data

- To run this code you need the validation set of the ILSVRC2012 data.

- Set the dataset path with --data "PATH_TO_ILSVRC", or copy the ILSVRC directory to ~/datasets/ILSVRC2012.

- To get the ILSVRC2012 data, register for access on the ImageNet site: http://www.image-net.org/

Prepare environment

- Clone source code

git clone https://github.com/submission2019/cnn-quantization.git

cd cnn-quantization

- Create virtual environment for python3 and activate:

virtualenv --system-site-packages -p python3 venv3

. ./venv3/bin/activate

- Install dependencies

pip install torch torchvision bokeh pandas scikit-learn mlflow tqdm

Building cuda kernels for GEMMLOWP

To improve performance, GEMMLOWP quantization is implemented in CUDA, so the kernels must be compiled before use.

- build kernels

cd kernels

./build_all.sh

cd ../

Run inference experiments

Post-training quantization of Res50

Note that accuracy results may vary by about 0.5% due to data shuffling.

- Experiment W4A4 naive:

python inference/inference_sim.py -a resnet50 -b 512 -pcq_w -pcq_a -sh --qtype int4 -qw int4

- Prec@1 62.154 Prec@5 84.252

- Experiment W4A4 + ACIQ + Bit Alloc(A) + Bit Alloc(W) + Bias correction:

python inference/inference_sim.py -a resnet50 -b 512 -pcq_w -pcq_a -sh --qtype int4 -qw int4 -c laplace -baa -baw -bcw

- Prec@1 73.330 Prec@5 91.334

ACIQ: Analytical Clipping for Integer Quantization

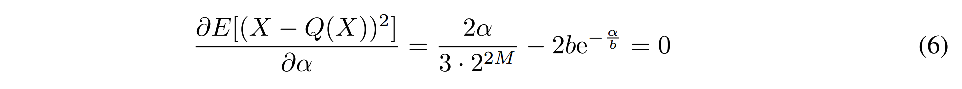

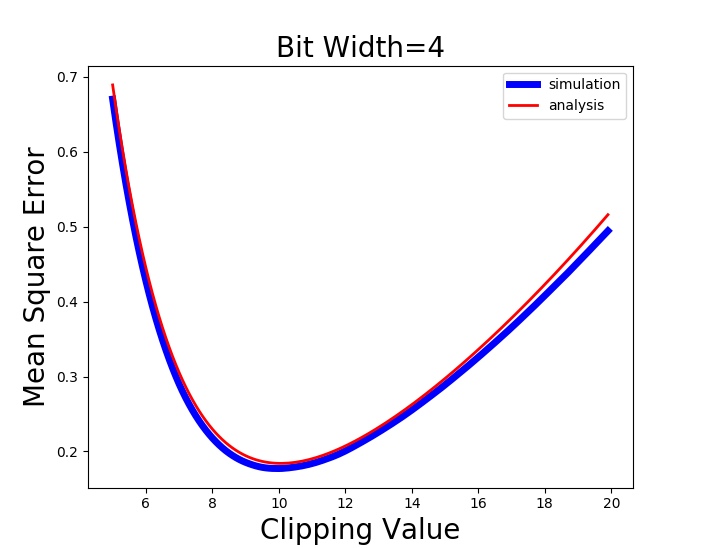

We solve eq. 6 numerically to find the optimal clipping value α for both the Laplace and the Gaussian prior.

Solving eq. 6 numerically for bit-widths 2, 3 and 4 yields optimal clipping values of 2.83*b, 3.86*b and 5.03*b respectively, where b is the mean absolute deviation of the activations from their expected value.

Numerical solution source code:

mse_analysis.py
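
A minimal sketch of such a numerical solution for the Laplace prior. It assumes that eq. 6 (shown only as an image above) has the usual ACIQ form, clipping noise 2b²·exp(−α/b) plus quantization noise α²/(3·2^(2M)). The function names are illustrative, and this is not the contents of mse_analysis.py.

```python
import math

def laplace_mse(alpha, b, num_bits):
    """Assumed form of eq. 6: clipping noise plus quantization noise."""
    clipping_noise = 2 * b ** 2 * math.exp(-alpha / b)
    quantization_noise = alpha ** 2 / (3 * 2 ** (2 * num_bits))
    return clipping_noise + quantization_noise

def optimal_laplace_clip(b, num_bits, tol=1e-10):
    """Find the alpha where d(MSE)/d(alpha) = 0 by bisection."""
    def d_mse(alpha):
        return (-2 * b * math.exp(-alpha / b)
                + 2 * alpha / (3 * 2 ** (2 * num_bits)))

    # The derivative is increasing in alpha, negative at 0 and (for
    # practical bit-widths) positive at 50*b, so it has a single root.
    lo, hi = 0.0, 50.0 * b
    while hi - lo > tol * b:
        mid = 0.5 * (lo + hi)
        if d_mse(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

for bits in (2, 3, 4):
    print(bits, round(optimal_laplace_clip(b=1.0, num_bits=bits), 2))
```

Under this assumed form the roots for b = 1 land within a few hundredths of the clipping values quoted above.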

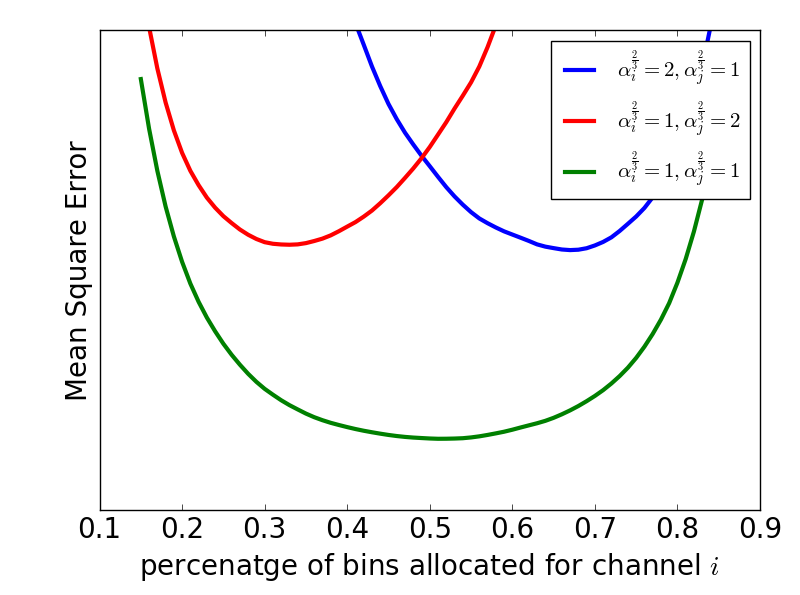

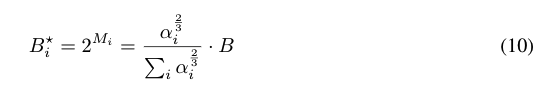

Per-channel bit allocation

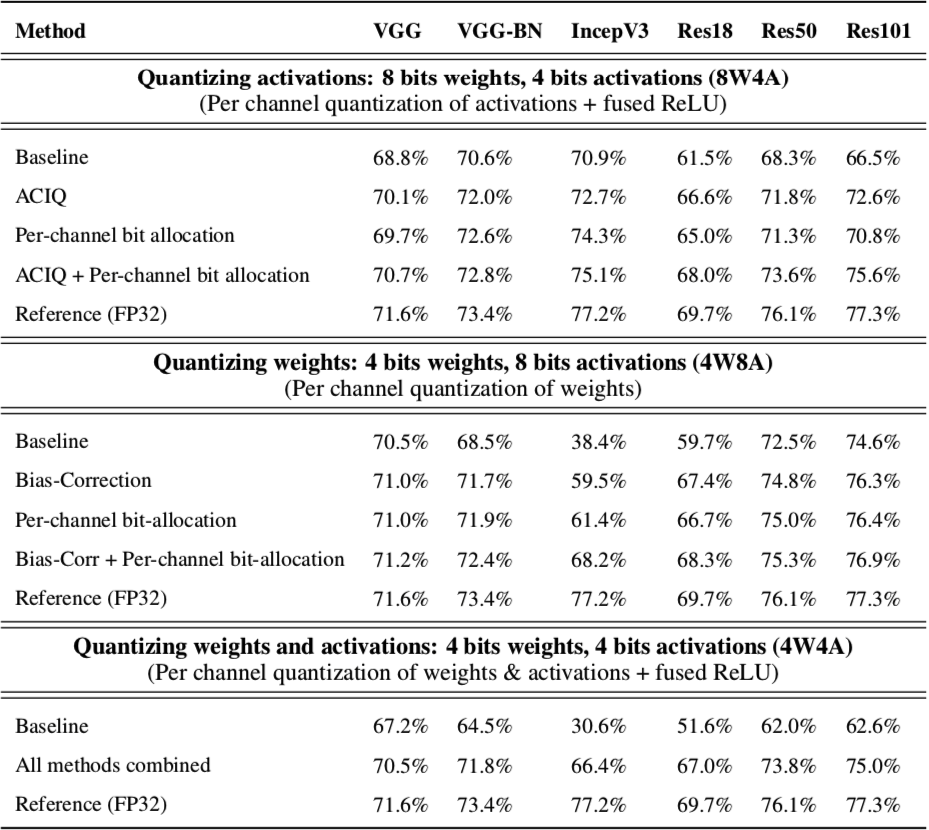

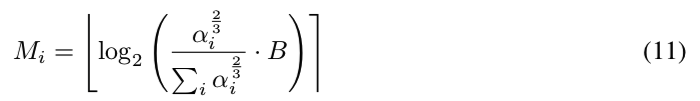

Given a quota on the total number of bits allowed to be written to memory, the optimal bit-width assignment Mi for channel i is given by eq. 11.

bit_allocation_synthetic.py
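
The allocation can be sketched as follows. This assumes that the equations (shown only as images here) take the ACIQ form: each channel receives a share of the bin quota B proportional to α_i^(2/3), and Mi is the rounded log2 of that share. The quota B = n·2^M for n channels at a target of M bits, and the function name, are assumptions for illustration, not the code in bit_allocation_synthetic.py.

```python
import math

def allocate_bits(alphas, target_bits):
    """Per-channel bit widths from per-channel clipping values.

    alphas must be positive; target_bits is the average bit budget.
    """
    total_bins = len(alphas) * 2 ** target_bits       # assumed quota B
    weights = [a ** (2.0 / 3.0) for a in alphas]      # alpha_i^(2/3)
    norm = sum(weights)
    bins = [w / norm * total_bins for w in weights]   # bins B_i per channel
    # Round log2(B_i) to the nearest integer bit width, never below 0.
    return [max(0, round(math.log2(b))) for b in bins]

print(allocate_bits([8.0, 1.0, 1.0, 1.0], target_bits=4))
```

Channels with a wider clipping range receive more bits, while channels with equal ranges each receive the target bit width.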

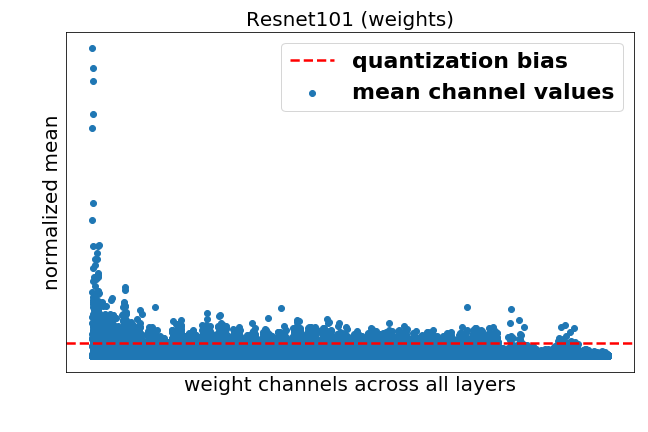

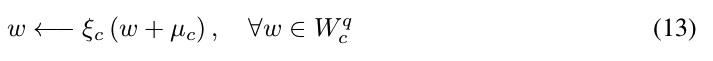

Bias correction

We observe an inherent bias in the mean and the variance of the weight values following their quantization.

bias_correction.ipynb

We calculate this bias using equation 12.

Then, we compensate for the bias for each channel of W as follows:
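
The exact expressions (eq. 12 and the per-channel correction) are not reproduced in this text. Purely as an illustration, and as an assumption rather than the code in bias_correction.ipynb, one way to remove such a bias is to re-center and re-scale each quantized channel so that its mean and standard deviation match those of the original weights:

```python
import statistics

def correct_channel_bias(w_orig, w_quant):
    """Match the mean and std of one quantized channel to the original.

    Hypothetical helper: w_orig and w_quant are flat lists holding the
    weights of a single channel before and after quantization.
    """
    mean_o = statistics.fmean(w_orig)
    mean_q = statistics.fmean(w_quant)
    std_o = statistics.pstdev(w_orig)
    std_q = statistics.pstdev(w_quant)
    scale = std_o / std_q if std_q > 0 else 1.0   # variance correction
    # Shift to zero mean, rescale, then restore the original mean.
    return [(w - mean_q) * scale + mean_o for w in w_quant]

w = [-0.9, -0.2, 0.1, 0.4, 1.1]
w_q = [-1.0, 0.0, 0.0, 0.5, 1.0]
print(correct_channel_bias(w, w_q))
```

Note that the corrected values generally no longer sit on the quantization grid, so in practice such a correction has to be folded into per-channel scale and offset parameters.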

Quantization

We use the GEMMLOWP quantization scheme, which we implemented in PyTorch. We optimize this scheme by applying ACIQ to reduce the quantization range and by optimally allocating bits to each channel.

Quantization code can be found in int_quantizer.py
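
For orientation, a minimal sketch of uniform asymmetric (min/max) quantization in the spirit of this scheme. It is not the code in int_quantizer.py; the function names and the optional clip argument (a hypothetical way to pass a clipping range such as one derived from ACIQ) are assumptions.

```python
def quantize(values, num_bits, clip=None):
    """Map floats to integers in [0, 2**num_bits - 1].

    clip is an optional (low, high) range; values outside it saturate.
    """
    lo, hi = (min(values), max(values)) if clip is None else clip
    levels = 2 ** num_bits - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    # Round to the nearest level, then clamp to the representable range.
    q = [min(levels, max(0, round((v - lo) / scale))) for v in values]
    return q, scale, lo

def dequantize(q, scale, lo):
    """Recover approximate float values from the integer codes."""
    return [qi * scale + lo for qi in q]

codes, scale, lo = quantize([-1.0, -0.25, 0.0, 0.5, 1.0], num_bits=4)
print(codes, dequantize(codes, scale, lo))
```

Narrowing the clip range shrinks the step size (scale) for values inside the range, at the cost of saturating the values outside it.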

Additional use cases and experiments

Inference using offline statistics

Collect statistics on 32 images

python inference/inference_sim.py -a resnet50 -b 1 --qtype int8 -sm collect -ac -cs 32

Run inference experiment W4A4 + ACIQ + Bit Alloc(A) + Bit Alloc(W) + Bias correction using offline statistics.

python inference/inference_sim.py -a resnet50 -b 512 -pcq_w -pcq_a --qtype int4 -qw int4 -c laplace -baa -baw -bcw -sm use

- Prec@1 74.2 Prec@5 91.932

4-bit quantization with clipping thresholds of 2 std

python inference/inference_sim.py -a resnet50 -b 512 -pcq_w -pcq_a -sh --qtype int4 -c 2std

- Prec@1 15.440 Prec@5 34.646

ACIQ with layer-wise quantization

python inference/inference_sim.py -a resnet50 -b 512 --qtype int4 -c laplace -sm use

- Prec@1 71.404 Prec@5 90.248

Bin allocation and Variable Length Coding

Given a quota on the total number of bits allowed to be written to memory, the optimal number of bins Bi for channel i is derived from eq. 10.

We evaluate the effect of Huffman coding on activations and weights by measuring the average entropy over all layers.

python inference/inference_sim.py -a vgg16 -b 32 --device_ids 4 -pcq_w -pcq_a -sh --qtype int4 -qw int4 -c laplace -baa -baw -bcw -bata 5.3 -batw 5.3 -mtq -me -ss 1024

- Prec@1 70.801 Prec@5 91.211

Average bit rate: avg.entropy.act - 2.215521374096473
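
The Shannon entropy of the quantized values is a lower bound on the average code length that Huffman coding can reach (Huffman coding stays within one bit of it), which is why entropy serves as the bit-rate estimate. A minimal sketch of such a measurement follows. The function names and the plain per-layer average are assumptions for illustration, not the repository's measurement code.

```python
import math
from collections import Counter

def entropy_bits(symbols):
    """Shannon entropy, in bits per symbol, of a sequence of integer codes."""
    counts = Counter(symbols)
    total = len(symbols)
    return -sum(c / total * math.log2(c / total) for c in counts.values())

def average_entropy(layers):
    """Unweighted mean of the per-layer entropies."""
    return sum(entropy_bits(layer) for layer in layers) / len(layers)

# Two toy "layers" of quantized values: one uses two codes equally often,
# the other uses a single code.
print(average_entropy([[0, 0, 1, 1], [3, 3, 3, 3]]))
```

A skewed distribution of quantized values has an entropy below the nominal bit width, which is the room that variable-length coding exploits.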