li-xirong / COCO-CN

Licence: MIT

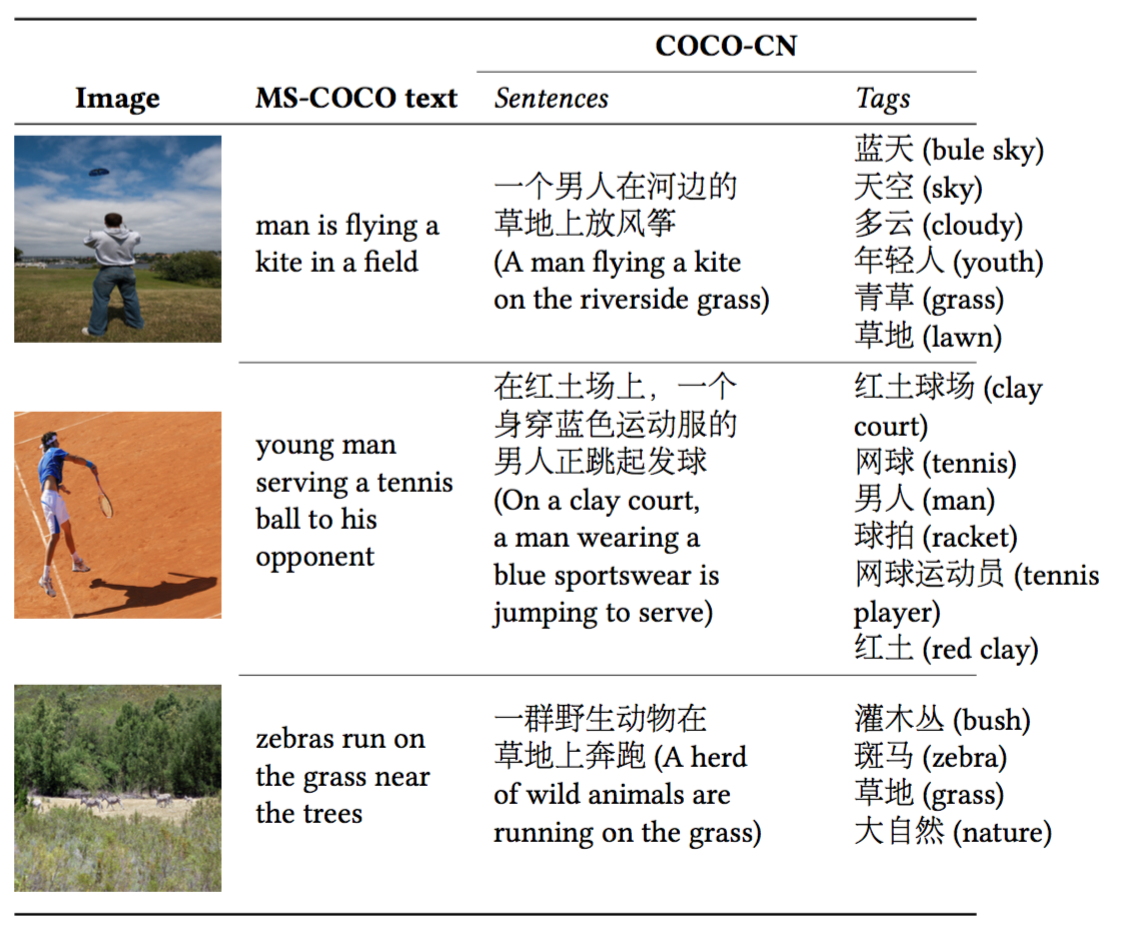

Enriching MS-COCO with Chinese sentences and tags for cross-lingual multimedia tasks

Stars: ✭ 57


Projects that are alternatives to or similar to COCO-CN

captioning chainer

A fast implementation of Neural Image Caption by Chainer

Stars: ✭ 17 (-70.18%)

Mutual labels: image-captioning

Im2p

TensorFlow implementation of the paper: A Hierarchical Approach for Generating Descriptive Image Paragraphs

Stars: ✭ 15 (-73.68%)

Mutual labels: image-captioning

cvpr18-caption-eval

Learning to Evaluate Image Captioning. CVPR 2018

Stars: ✭ 79 (+38.6%)

Mutual labels: image-captioning

Cs231

Complete Assignments for CS231n: Convolutional Neural Networks for Visual Recognition

Stars: ✭ 317 (+456.14%)

Mutual labels: image-captioning

Omninet

Official Pytorch implementation of "OmniNet: A unified architecture for multi-modal multi-task learning" | Authors: Subhojeet Pramanik, Priyanka Agrawal, Aman Hussain

Stars: ✭ 448 (+685.96%)

Mutual labels: image-captioning

CS231n

My solutions for Assignments of CS231n: Convolutional Neural Networks for Visual Recognition

Stars: ✭ 30 (-47.37%)

Mutual labels: image-captioning

Image captioning

Generate captions for images using a CNN-RNN model that is trained on the Microsoft Common Objects in COntext (MS COCO) dataset

Stars: ✭ 51 (-10.53%)

Mutual labels: image-captioning

Virtex

[CVPR 2021] VirTex: Learning Visual Representations from Textual Annotations

Stars: ✭ 323 (+466.67%)

Mutual labels: image-captioning

Neural Image Captioning

Implementation of a Neural Image Captioning model using Keras with the Theano backend

Stars: ✭ 12 (-78.95%)

Mutual labels: image-captioning

Image Captioning

Image Captioning using InceptionV3 and beam search

Stars: ✭ 290 (+408.77%)

Mutual labels: image-captioning

Scan

PyTorch source code for "Stacked Cross Attention for Image-Text Matching" (ECCV 2018)

Stars: ✭ 306 (+436.84%)

Mutual labels: image-captioning

Self Critical.pytorch

Unofficial PyTorch implementation of Self-critical Sequence Training for Image Captioning, and others.

Stars: ✭ 716 (+1156.14%)

Mutual labels: image-captioning

im2p

TensorFlow implementation of the paper: A Hierarchical Approach for Generating Descriptive Image Paragraphs

Stars: ✭ 43 (-24.56%)

Mutual labels: image-captioning

Punny captions

An implementation of the NAACL 2018 paper "Punny Captions: Witty Wordplay in Image Descriptions".

Stars: ✭ 31 (-45.61%)

Mutual labels: image-captioning

stylenet

A PyTorch implementation of "StyleNet: Generating Attractive Visual Captions with Styles"

Stars: ✭ 58 (+1.75%)

Mutual labels: image-captioning

Neuralmonkey

An open-source tool for sequence learning in NLP built on TensorFlow.

Stars: ✭ 400 (+601.75%)

Mutual labels: image-captioning

Image Captioning

Image Captioning: Implementing the Neural Image Caption Generator with Python

Stars: ✭ 52 (-8.77%)

Mutual labels: image-captioning

Bottom Up Attention

Bottom-up attention model for image captioning and VQA, based on Faster R-CNN and Visual Genome

Stars: ✭ 989 (+1635.09%)

Mutual labels: image-captioning

Show Attend And Tell

TensorFlow Implementation of "Show, Attend and Tell"

Stars: ✭ 869 (+1424.56%)

Mutual labels: image-captioning

COCO-CN

COCO-CN is a bilingual image description dataset enriching MS-COCO with manually written Chinese sentences and tags. The new dataset can be used for multiple tasks including image tagging, captioning and retrieval, all in a cross-lingual setting.

| Chinese sentences | COCO-CN train | COCO-CN val | COCO-CN test |
|---|---|---|---|
| human written | ✅ | ✅ | ✅ |
| human translation | ❌ | ❌ | ✅ |
| machine translation (Baidu) | ✅ | ✅ | ✅ |
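
For scripts that iterate over the splits, the availability table above can be mirrored as a small lookup structure. This is a minimal sketch only: the source keys (`human_written`, `human_translation`, `machine_translation_baidu`) and the split names are hypothetical labels chosen for illustration, not identifiers taken from the released COCO-CN files.

```python
from typing import Dict, List

SPLITS = ("train", "val", "test")

# Hypothetical mirror of the availability table; the key names are
# illustrative and do not come from the released data files.
AVAILABILITY: Dict[str, Dict[str, bool]] = {
    "human_written": {"train": True, "val": True, "test": True},
    "human_translation": {"train": False, "val": False, "test": True},
    "machine_translation_baidu": {"train": True, "val": True, "test": True},
}


def sources_for(split: str) -> List[str]:
    """Return the Chinese sentence sources available for one split."""
    if split not in SPLITS:
        raise ValueError(f"unknown split: {split!r}")
    # Dicts keep insertion order, so sources come back in table order.
    return [src for src, per_split in AVAILABILITY.items() if per_split[split]]


if __name__ == "__main__":
    for split in SPLITS:
        print(split, sources_for(split))
```

Because human translations exist only for the test split, a loader built this way will not return them for train or val, rather than silently substituting machine translations.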

Progress

- Version 201805: 20,341 images (training / validation / test: 18,341 / 1,000 / 1,000), associated with 22,218 manually written Chinese sentences and 5,000 manually translated sentences. The data is freely available upon request; please submit your request via the Google Form.

- Precomputed image features: ResNeXt-101

- COCO-CN-Results-Viewer: A lightweight tool to inspect the results of different image captioning systems on the COCO-CN test set, developed by Emiel van Miltenburg at Tilburg University.

- NUS-WIDE100: An extra test set.

- 2018-12-16: Code for cross-lingual image tagging and captioning released.

- 2018-12-20: Code for cross-lingual image retrieval and our image annotation system released.

- 2019-01-13: The COCO-CN paper was accepted as a regular paper by IEEE Transactions on Multimedia (T-MM).

- 2021-02-03: Release of new annotations (4,573 images and 4,712 manually written sentences) collected via our iCap interactive image captioning system. The images have no overlap with the previously released dataset.
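
The figures listed for version 201805 can be sanity-checked with simple arithmetic, and the 2021 release is described as having no image overlap with the earlier data. The sketch below shows both checks under stated assumptions: the counts come from the list above, while `old_release` and `new_release` are toy placeholder ID sets, since the ID format of the released files is not described here.

```python
from typing import Dict, Set

# Counts reported for COCO-CN version 201805.
SPLIT_SIZES: Dict[str, int] = {"train": 18_341, "val": 1_000, "test": 1_000}
TOTAL_IMAGES = 20_341
HUMAN_WRITTEN_SENTENCES = 22_218
HUMAN_TRANSLATED_SENTENCES = 5_000


def check_split_sizes(sizes: Dict[str, int], total: int) -> bool:
    """True if the per-split image counts add up to the reported total."""
    return sum(sizes.values()) == total


def overlapping_ids(released: Set[str], new: Set[str]) -> Set[str]:
    """Image IDs present in both releases (expected to be empty)."""
    return released & new


if __name__ == "__main__":
    print(check_split_sizes(SPLIT_SIZES, TOTAL_IMAGES))
    # Average human-written sentences per image across all splits.
    print(round(HUMAN_WRITTEN_SENTENCES / TOTAL_IMAGES, 2))
    # Human translations exist only for test: average per test image.
    print(HUMAN_TRANSLATED_SENTENCES / SPLIT_SIZES["test"])

    # Toy placeholder IDs; real IDs would be read from the released files.
    old_release = {"img_0001", "img_0002"}
    new_release = {"img_1001", "img_1002"}
    print(overlapping_ids(old_release, new_release))
```

On real data, a non-empty result from `overlapping_ids` would contradict the stated disjointness between the two releases.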

Citation

If you find COCO-CN useful, please consider citing the following paper:

- Xirong Li, Chaoxi Xu, Xiaoxu Wang, Weiyu Lan, Zhengxiong Jia, Gang Yang, Jieping Xu, COCO-CN for Cross-Lingual Image Tagging, Captioning and Retrieval, IEEE Transactions on Multimedia, Volume 21, Number 9, pages 2347-2360, 2019
