lattice-ai / Deeplabv3 Plus

Tensorflow 2.3.0 implementation of DeepLabV3-Plus

Stars: ✭ 32

Projects that are alternatives of or similar to Deeplabv3 Plus

Multiclass Semantic Segmentation Camvid

Tensorflow 2 implementation of complete pipeline for multiclass image semantic segmentation using UNet, SegNet and FCN32 architectures on Cambridge-driving Labeled Video Database (CamVid) dataset.

Stars: ✭ 67 (+109.38%)

Mutual labels: jupyter-notebook, segmentation, semantic-segmentation

Hrnet Semantic Segmentation

The OCR approach is rephrased as Segmentation Transformer: https://arxiv.org/abs/1909.11065. This is an official implementation of semantic segmentation for HRNet. https://arxiv.org/abs/1908.07919

Stars: ✭ 2,369 (+7303.13%)

Mutual labels: segmentation, semantic-segmentation, cityscapes

Icnet Tensorflow

TensorFlow-based implementation of "ICNet for Real-Time Semantic Segmentation on High-Resolution Images".

Stars: ✭ 396 (+1137.5%)

Mutual labels: jupyter-notebook, semantic-segmentation, cityscapes

Cascaded Fcn

Source code for the MICCAI 2016 Paper "Automatic Liver and Lesion Segmentation in CT Using Cascaded Fully Convolutional Neural Networks and 3D Conditional Random Fields"

Stars: ✭ 296 (+825%)

Mutual labels: jupyter-notebook, segmentation, semantic-segmentation

Lightnet

LightNet: Light-weight Networks for Semantic Image Segmentation (Cityscapes and Mapillary Vistas Dataset)

Stars: ✭ 698 (+2081.25%)

Mutual labels: segmentation, semantic-segmentation, cityscapes

3dunet abdomen cascade

Stars: ✭ 91 (+184.38%)

Mutual labels: jupyter-notebook, segmentation, semantic-segmentation

Efficient Segmentation Networks

Lightweight models for real-time semantic segmentation on PyTorch (including SQNet, LinkNet, SegNet, UNet, ENet, ERFNet, EDANet, ESPNet, ESPNetv2, LEDNet, ESNet, FSSNet, CGNet, DABNet, Fast-SCNN, ContextNet, FPENet, etc.)

Stars: ✭ 579 (+1709.38%)

Mutual labels: segmentation, semantic-segmentation, cityscapes

Keras Segmentation Deeplab V3.1

An awesome semantic segmentation model that runs in real time

Stars: ✭ 156 (+387.5%)

Mutual labels: jupyter-notebook, segmentation, semantic-segmentation

LightNet

LightNet: Light-weight Networks for Semantic Image Segmentation (Cityscapes and Mapillary Vistas Dataset)

Stars: ✭ 710 (+2118.75%)

Mutual labels: segmentation, semantic-segmentation, cityscapes

Erfnet pytorch

Pytorch code for semantic segmentation using ERFNet

Stars: ✭ 304 (+850%)

Mutual labels: segmentation, semantic-segmentation, cityscapes

Steal

STEAL - Learning Semantic Boundaries from Noisy Annotations (CVPR 2019)

Stars: ✭ 424 (+1225%)

Mutual labels: jupyter-notebook, semantic-segmentation

Fsgan

FSGAN - Official PyTorch Implementation

Stars: ✭ 420 (+1212.5%)

Mutual labels: jupyter-notebook, segmentation

Probabilistic unet

A U-Net combined with a variational auto-encoder that is able to learn conditional distributions over semantic segmentations.

Stars: ✭ 427 (+1234.38%)

Mutual labels: jupyter-notebook, semantic-segmentation

Pytorch Unet

Simple PyTorch implementations of U-Net/FullyConvNet (FCN) for image segmentation

Stars: ✭ 470 (+1368.75%)

Mutual labels: jupyter-notebook, semantic-segmentation

Fasterseg

[ICLR 2020] "FasterSeg: Searching for Faster Real-time Semantic Segmentation" by Wuyang Chen, Xinyu Gong, Xianming Liu, Qian Zhang, Yuan Li, Zhangyang Wang

Stars: ✭ 438 (+1268.75%)

Mutual labels: semantic-segmentation, cityscapes

Vpgnet

VPGNet: Vanishing Point Guided Network for Lane and Road Marking Detection and Recognition (ICCV 2017)

Stars: ✭ 382 (+1093.75%)

Mutual labels: jupyter-notebook, semantic-segmentation

Tusimple Duc

Understanding Convolution for Semantic Segmentation

Stars: ✭ 567 (+1671.88%)

Mutual labels: semantic-segmentation, cityscapes

Fbrs interactive segmentation

[CVPR2020] f-BRS: Rethinking Backpropagating Refinement for Interactive Segmentation https://arxiv.org/abs/2001.10331

Stars: ✭ 366 (+1043.75%)

Mutual labels: jupyter-notebook, segmentation

Superpoint graph

Large-scale Point Cloud Semantic Segmentation with Superpoint Graphs

Stars: ✭ 533 (+1565.63%)

Mutual labels: segmentation, semantic-segmentation

Bisenet

Add bisenetv2. My implementation of BiSeNet

Stars: ✭ 589 (+1740.63%)

Mutual labels: segmentation, cityscapes

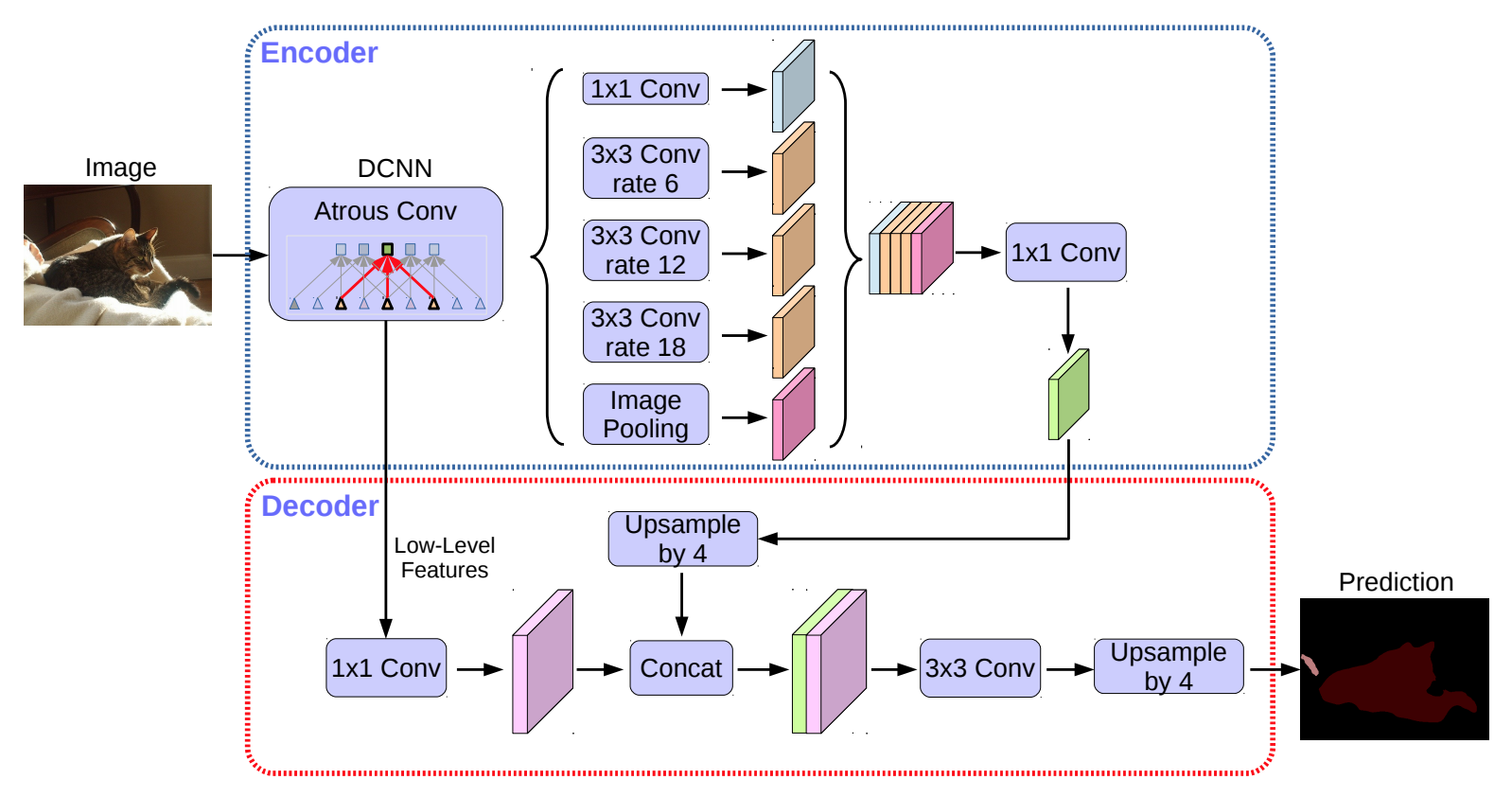

DeepLabV3-Plus (Ongoing)

Tensorflow 2.2.0 implementation of DeepLabV3-Plus architecture as proposed by the paper Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation.
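For readers new to atrous (dilated) convolution, the arithmetic below shows how the dilation rate widens a kernel's field of view without adding weights: a k x k kernel with rate r spans k + (k - 1)(r - 1) pixels per side. The rates 6, 12 and 18 are the ones the cited paper family uses for its ASPP module at output stride 16; whether this repository uses the same rates is an assumption, and the function name is illustrative.

```python
def effective_kernel_size(kernel_size: int, rate: int) -> int:
    """Side length covered by a kernel_size x kernel_size kernel dilated by rate."""
    return kernel_size + (kernel_size - 1) * (rate - 1)


# A 3 x 3 kernel at the standard rate (1) and at typical ASPP rates.
for rate in (1, 6, 12, 18):
    print(f"rate={rate:2d} -> spans {effective_kernel_size(3, rate)} pixels per side")
```

Every dilated kernel above still has only 3 x 3 = 9 weights per channel, which is why atrous convolution is a cheap way to gather wider context.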

Project Link: https://github.com/deepwrex/DeepLabV3-Plus/projects/.

Experiments: https://app.wandb.ai/19soumik-rakshit96/deeplabv3-plus.

Model Architectures can be found here.

Setup Datasets

- CamVid

  cd dataset
  bash camvid.sh

- Multi-Person Human Parsing

  Register on https://www.kaggle.com/.

  Generate Kaggle API Token

  bash download_human_parsing_dataset.sh <kaggle-username> <kaggle-key>
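Kaggle's API token is downloaded as a `kaggle.json` file holding a `username` and a `key`. The following is a minimal sketch, assuming that file layout, of reading the two values and assembling the download command shown above. The helper names are hypothetical, and the sketch only builds the command rather than running it.

```python
import json
import tempfile
from pathlib import Path


def load_kaggle_credentials(token_path):
    """Return (username, key) read from a kaggle.json API token file."""
    data = json.loads(Path(token_path).read_text(encoding="utf-8"))
    return data["username"], data["key"]


def build_download_command(username, key):
    """Return the argument list for the human parsing download script."""
    return ["bash", "download_human_parsing_dataset.sh", username, key]


# Demo with a throwaway token file so that no real credentials are needed.
with tempfile.TemporaryDirectory() as tmp_dir:
    demo_token = Path(tmp_dir) / "kaggle.json"
    demo_token.write_text(
        json.dumps({"username": "demo-user", "key": "demo-key"}),
        encoding="utf-8",
    )
    demo_command = build_download_command(*load_kaggle_credentials(demo_token))

print(" ".join(demo_command))
```

The resulting list can be passed to `subprocess.run` from inside the dataset directory.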

Code to test Model

import tensorflow as tf

from deeplabv3plus.model.deeplabv3_plus import DeeplabV3Plus

model = DeeplabV3Plus(backbone='resnet50', num_classes=20)

# Random input batch: (batch, height, width, channels)
input_shape = (1, 512, 512, 3)
input_tensor = tf.random.normal(input_shape)
result = model(input_tensor)  # build model by one forward pass

model.summary()

Training

Use the trainer.py script as documented with the help description below:

usage: trainer.py [-h] [--wandb_api_key WANDB_API_KEY] config_key

Runs DeeplabV3+ trainer with the given config setting.

Registered config_key values:
  camvid_resnet50
  human_parsing_resnet50

positional arguments:
  config_key            Key to use while looking up configuration from
                        the CONFIG_MAP dictionary.

optional arguments:
  -h, --help            show this help message and exit
  --wandb_api_key WANDB_API_KEY
                        Wandb API Key for logging run on Wandb.
                        If provided, checkpoint_dir is set to wandb://
                        (Model checkpoints are saved to wandb.)
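A command line like the one in the help text above could be built with `argparse` roughly as follows. This is a minimal sketch, not the project's `trainer.py`: the function names `build_parser` and `resolve_checkpoint_dir` are assumptions, and the checkpoint rule only mirrors what the help text states.

```python
import argparse


def build_parser():
    """Build a parser matching the usage line shown in the help text."""
    parser = argparse.ArgumentParser(
        prog="trainer.py",
        description="Runs DeeplabV3+ trainer with the given config setting.",
    )
    parser.add_argument(
        "config_key",
        help="Key to use while looking up configuration from the CONFIG_MAP dictionary.",
    )
    parser.add_argument(
        "--wandb_api_key",
        default=None,
        help="Wandb API Key for logging run on Wandb.",
    )
    return parser


def resolve_checkpoint_dir(args, configured_dir):
    """Use the wandb:// location when an API key is given, else the config value."""
    return "wandb://" if args.wandb_api_key else configured_dir


# Parse an explicit argument list instead of sys.argv to keep the demo runnable.
args = build_parser().parse_args(["camvid_resnet50"])
print(args.config_key, resolve_checkpoint_dir(args, "./checkpoints/"))
```

Keeping the checkpoint decision in its own small function makes the "wandb key overrides checkpoint_dir" rule easy to test without starting a training run.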

If you want to use your own custom training configuration, you can define it in the following way:

- Define your configuration in a Python dictionary as follows:

config/camvid_resnet50.py

#!/usr/bin/env python
"""Module for training deeplabv3plus on camvid dataset."""

from glob import glob

import tensorflow as tf

# Sample Configuration
CONFIG = {
    # We mandate specifying project_name and experiment_name in every config
    # file. They are used for wandb runs if wandb api key is specified.
    'project_name': 'deeplabv3-plus',
    'experiment_name': 'camvid-segmentation-resnet-50-backbone',

    'train_dataset_config': {
        'images': sorted(glob('./dataset/camvid/train/*')),
        'labels': sorted(glob('./dataset/camvid/trainannot/*')),
        'height': 512, 'width': 512, 'batch_size': 8
    },

    'val_dataset_config': {
        'images': sorted(glob('./dataset/camvid/val/*')),
        'labels': sorted(glob('./dataset/camvid/valannot/*')),
        'height': 512, 'width': 512, 'batch_size': 8
    },

    'strategy': tf.distribute.OneDeviceStrategy(device="/gpu:0"),
    'num_classes': 20, 'backbone': 'resnet50', 'learning_rate': 0.0001,

    'checkpoint_dir': "./checkpoints/",
    'checkpoint_file_prefix': "deeplabv3plus_with_resnet50_",

    'epochs': 100
}

- Save this file inside the config directory (as hinted in the file path above).

- Register your config in the __init__.py module like below:

config/__init__.py

#!/usr/bin/env python
# -*- coding: utf-8 -*-
"""__init__ module for configs. Register your config file here by adding its
entry in the CONFIG_MAP as shown.
"""

import config.camvid_resnet50
import config.human_parsing_resnet50

CONFIG_MAP = {
    'camvid_resnet50': config.camvid_resnet50.CONFIG,  # the config file we defined above
    'human_parsing_resnet50': config.human_parsing_resnet50.CONFIG  # another config
}

- Now you can run the trainer script like so (using the camvid_resnet50 config key we registered above):

./trainer.py camvid_resnet50 --wandb_api_key <YOUR_WANDB_API_KEY>

or, if you don't need wandb logging:

./trainer.py camvid_resnet50
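The registration steps above amount to a dictionary lookup by `config_key`. The sketch below shows that lookup with basic validation. It is self-contained and is not the project's `trainer.py`: `validate_config`, `get_config`, `REQUIRED_KEYS` and the trimmed stand-in `CONFIG_MAP` are assumptions for illustration. Only `project_name` and `experiment_name` are described as mandatory by the sample config.

```python
"""Sketch: look up a registered config by key and check its mandatory fields."""

# Keys the sample config describes as mandatory (they are used for wandb runs).
REQUIRED_KEYS = ("project_name", "experiment_name")

# Stand-in for config.CONFIG_MAP; the values are trimmed for illustration.
CONFIG_MAP = {
    "camvid_resnet50": {
        "project_name": "deeplabv3-plus",
        "experiment_name": "camvid-segmentation-resnet-50-backbone",
        "num_classes": 20,
        "backbone": "resnet50",
    },
}


def validate_config(config):
    """Raise ValueError if a mandatory key is missing or empty."""
    missing = [key for key in REQUIRED_KEYS if not config.get(key)]
    if missing:
        raise ValueError("config is missing: " + ", ".join(missing))
    return config


def get_config(config_key, config_map=CONFIG_MAP):
    """Return the validated config registered under config_key."""
    if config_key not in config_map:
        known = ", ".join(sorted(config_map))
        raise KeyError(f"unknown config_key {config_key!r}; registered: {known}")
    return validate_config(config_map[config_key])


print(get_config("camvid_resnet50")["experiment_name"])
```

Failing early on an unknown key or a missing `experiment_name` avoids starting a long training run that cannot be logged or resumed under a sensible name.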

Inference

Sample Inference Code:

from glob import glob

# read_image, infer and plot_samples_matplotlib are helpers from this
# repository and must be imported before running this snippet.

model_file = './dataset/deeplabv3-plus-human-parsing-resnet-50-backbone.h5'
train_images = glob('./dataset/instance-level_human_parsing/Training/Images/*')
val_images = glob('./dataset/instance-level_human_parsing/Validation/Images/*')
test_images = glob('./dataset/instance-level_human_parsing/Testing/Images/*')


def plot_predictions(images_list, size):
    """Run inference on each image and plot it next to its prediction."""
    for image_file in images_list:
        image_tensor = read_image(image_file, size)
        prediction = infer(
            image_tensor=image_tensor,
            model_file=model_file
        )
        plot_samples_matplotlib(
            [image_tensor, prediction], figsize=(10, 6)
        )


plot_predictions(train_images[:4], (512, 512))
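The `infer` helper itself is not shown here. A segmentation network typically emits one score per class for every pixel, and the predicted mask is the per-pixel argmax of those scores. The following is a minimal sketch of that step in plain Python, assuming a height x width x classes layout. `scores_to_labels` is a hypothetical name and this is not the repository's `infer`.

```python
def scores_to_labels(scores):
    """Map an H x W x C nested list of class scores to an H x W list of class ids."""
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]


# Toy 2 x 2 "image" with 3 class scores per pixel.
toy_scores = [
    [[0.1, 0.7, 0.2], [0.9, 0.05, 0.05]],
    [[0.2, 0.2, 0.6], [0.3, 0.4, 0.3]],
]
labels = scores_to_labels(toy_scores)
print(labels)
```

In a real pipeline the same reduction would be done on tensors over the class axis. The nested-list version only makes the logic explicit.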

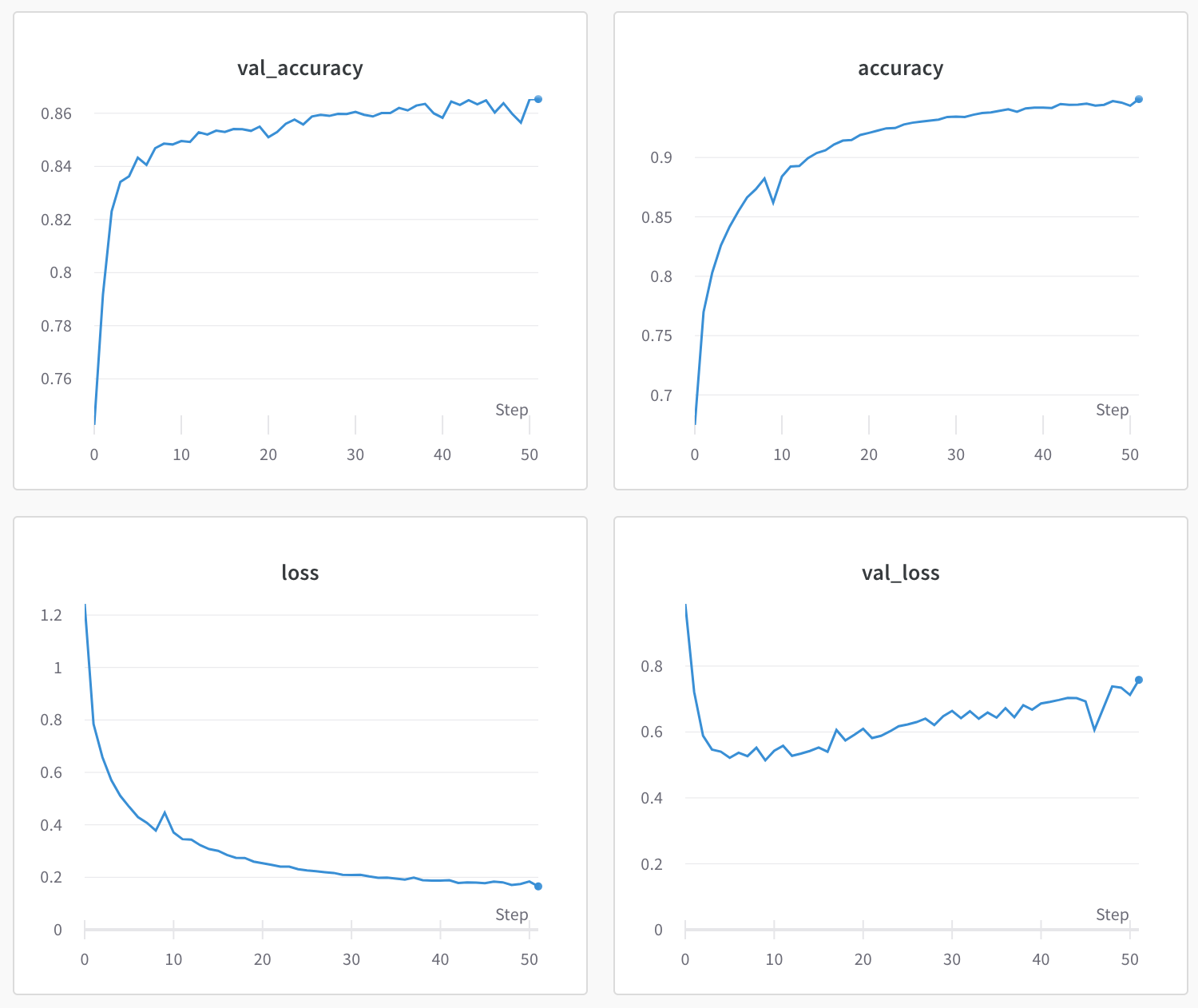

Results

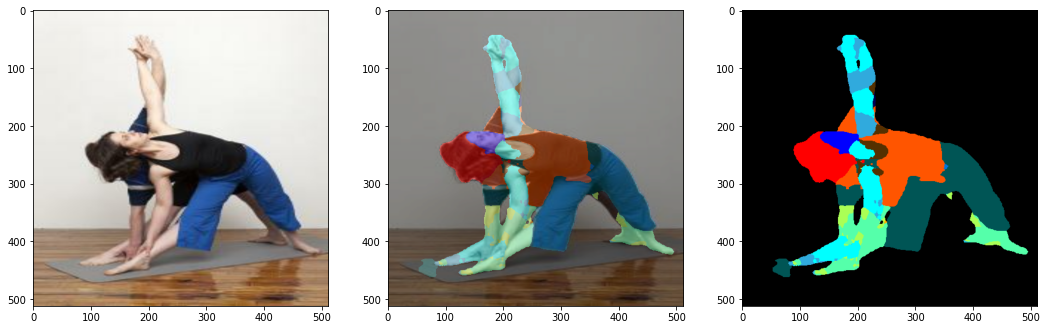

Multi-Person Human Parsing

Training Set Results

Validation Set Results

Test Set Results

Citation

@misc{1802.02611,
    Author = {Liang-Chieh Chen and Yukun Zhu and George Papandreou and Florian Schroff and Hartwig Adam},
    Title = {Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation},
    Year = {2018},
    Eprint = {arXiv:1802.02611},
}
