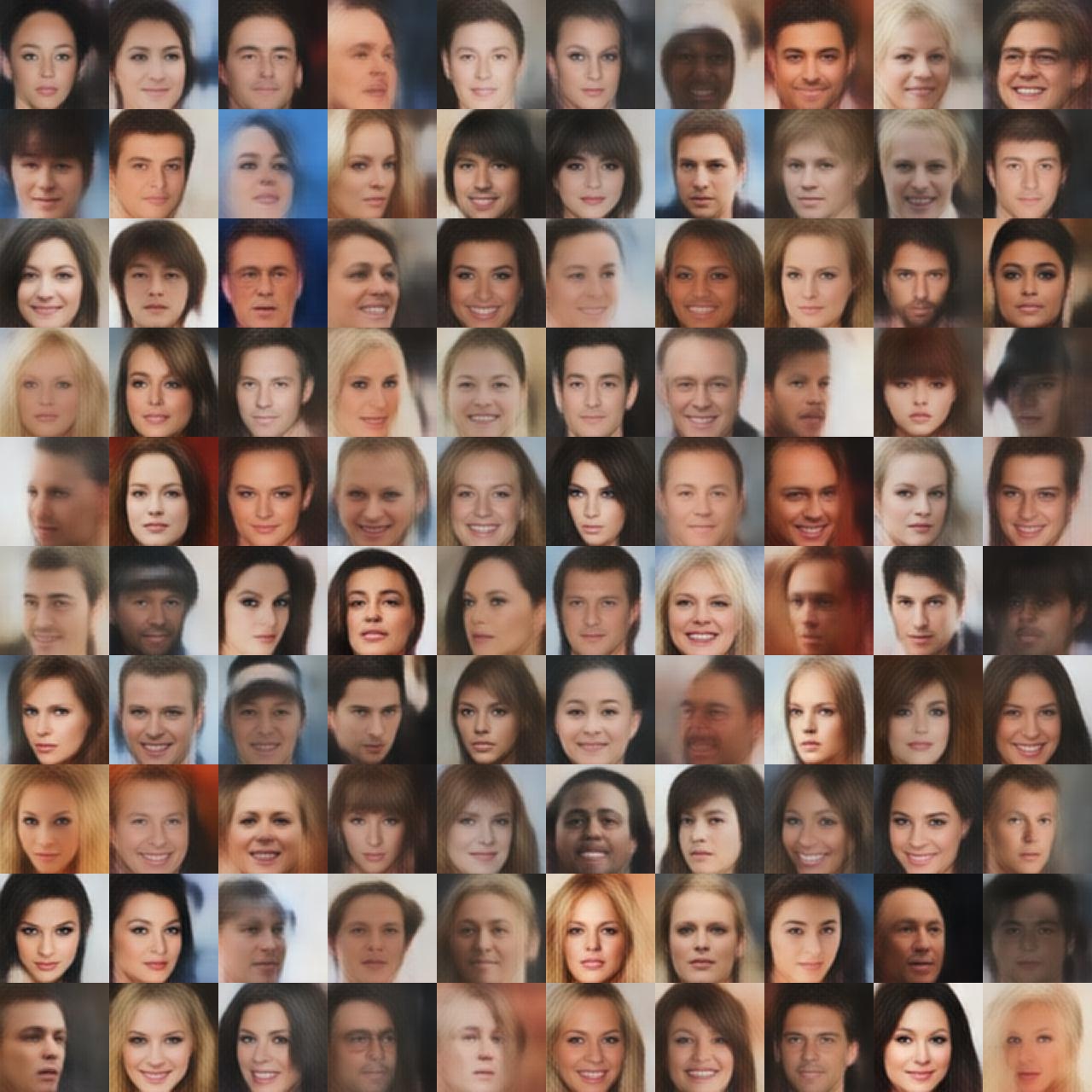

DFC-VAE

This is the code for the paper

Deep Feature Consistent Variational Autoencoder

The paper trains a Variational Autoencoder (VAE) model for face image generation. It also provides a method to manipulate facial attributes using attribute-specific vectors.

- Pretrained model trained on CelebA dataset

- Code for training on GPU

- Code for different analyses

Installation

Our implementation is based on Torch and several dependencies.

After installing Torch according to this tutorial, use the following commands to install the dependencies:

sudo apt-get install libprotobuf-dev protobuf-compiler

luarocks install loadcaffe

luarocks install https://raw.githubusercontent.com/szym/display/master/display-scm-0.rockspec

luarocks install nngraph

sudo apt-get install libmatio2

luarocks install matio

luarocks install manifold

sudo apt-get install libatlas3-base # for manifold

We use an NVIDIA GPU for training and testing, so you also need to install the GPU-related packages:

luarocks install cutorch

luarocks install cunn

luarocks install cudnn

Dataset preparation

cd celebA

Download img_align_celeba.zip from http://mmlab.ie.cuhk.edu.hk/projects/CelebA.html under the link "Align&Cropped Images".

Download list_attr_celeba.txt for annotation.

unzip img_align_celeba.zip; cd ..

DATA_ROOT=celebA th data/crop_celebA.lua

A pretrained VGG-19 model is needed to compute the feature perceptual loss.

cd data/pretrained && bash download_models.sh && cd ../..
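As a rough illustration of what the feature perceptual loss measures, the sketch below sums per-layer squared differences between the feature maps of an input image and its reconstruction. It assumes the per-layer form described in the paper (squared difference scaled by 1/(2·C·H·W)). The `features_x` and `features_rec` dictionaries and their values are hypothetical stand-ins for VGG-19 activations, not output of this repository's Lua code.

```python
# Hypothetical sketch: each feature map is a flat list of floats keyed by
# VGG layer name. Real activations would come from the pretrained VGG-19.
LAYERS = ("relu1_1", "relu2_1", "relu3_1")


def layer_loss(feat_x, feat_rec):
    """Half the mean squared difference between two flattened feature maps."""
    if len(feat_x) != len(feat_rec):
        raise ValueError("feature maps must have the same size")
    squared = sum((a - b) ** 2 for a, b in zip(feat_x, feat_rec))
    return squared / (2.0 * len(feat_x))


def feature_perceptual_loss(features_x, features_rec, layers=LAYERS):
    """Sum the per-layer losses over the chosen layers."""
    return sum(layer_loss(features_x[name], features_rec[name]) for name in layers)


# Toy values only, to show the call shape.
features_x = {"relu1_1": [1.0, 2.0], "relu2_1": [0.5, 0.5], "relu3_1": [3.0]}
features_rec = {"relu1_1": [1.0, 0.0], "relu2_1": [0.5, 0.5], "relu3_1": [1.0]}
loss = feature_perceptual_loss(features_x, features_rec)
```

Comparing images in feature space rather than pixel space is what makes the loss "perceptual": two images that differ slightly per pixel but share the same structure produce similar activations.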

Training

Open a new terminal and start the display server to view images in the browser:

th -ldisplay.start 8000 0.0.0.0

The images can then be seen at http://localhost:8000 in the browser.

Training with feature perceptual loss.

DATA_ROOT=celebA th main_cvae_content.lua

Training with pixel-by-pixel loss.

DATA_ROOT=celebA th main_cvae.lua
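Either reconstruction term is combined with a KL divergence term in a standard VAE objective. The sketch below shows the closed-form KL term for a diagonal Gaussian posterior against a standard normal prior, next to a pixel-by-pixel loss. It assumes mean squared error for the pixel loss. The function names and the weights `alpha` and `beta` are illustrative and are not read from the Lua scripts.

```python
import math


def kl_divergence(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ) in closed form."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


def pixel_loss(x, x_rec):
    """Mean squared error between two flattened images (assumed form)."""
    if len(x) != len(x_rec):
        raise ValueError("images must have the same size")
    return sum((a - b) ** 2 for a, b in zip(x, x_rec)) / len(x)


def vae_loss(reconstruction_term, kl_term, alpha=1.0, beta=1.0):
    """Weighted sum of the KL and reconstruction terms (weights are hypothetical)."""
    return alpha * kl_term + beta * reconstruction_term


# Toy encoder outputs and images, only to show the call shape.
kl = kl_divergence(mu=[0.0, 1.0], log_var=[0.0, 0.0])
rec = pixel_loss([0.0, 0.0], [1.0, 1.0])
total = vae_loss(rec, kl)
```

Swapping `pixel_loss` for a feature-space loss changes only the reconstruction term; the KL term stays the same.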

Generate face images using pretrained Encoder and Decoder

Pretrained model

mkdir checkpoints; cd checkpoints

We provide both the Encoder and the Decoder:

cvae_content_123_encoder.t7 and cvae_content_123_decoder.t7, trained with relu1_1, relu2_1 and relu3_1 in VGG.

Reconstruction with CelebA dataset:

DATA_ROOT=celebA reconstruction=1 th generate.lua

Face images randomly generated from latent variables:

DATA_ROOT=celebA reconstruction=0 th generate.lua
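The two modes differ in where the latent vector comes from. A minimal sketch follows, assuming a standard normal prior for random generation and a reparameterized sample from the encoder outputs for reconstruction. `LATENT_DIM` is an assumed value, the encoder outputs are made up, and the decoder call is omitted because it depends on the pretrained Torch model.

```python
import math
import random

LATENT_DIM = 100  # assumed latent size, not read from the pretrained model


def sample_prior(dim, rng):
    """Random generation: draw z from N(0, I)."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def sample_posterior(mu, log_var, rng):
    """Reconstruction: z = mu + sigma * eps, using (hypothetical) encoder outputs."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
z_random = sample_prior(LATENT_DIM, rng)
# A very small variance keeps the sample close to the encoder mean.
z_recon = sample_posterior([0.5] * LATENT_DIM, [-20.0] * LATENT_DIM, rng)
```

In both cases the resulting vector would then be passed to the decoder to produce an image.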

Following are some examples:

## Linear interpolation between two face images

th linear_walk_two_images.lua
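The idea behind the walk can be sketched as follows, assuming the interpolation happens in latent space between the encodings of the two images. The helper names and the toy vectors are hypothetical; real latent vectors would come from the pretrained encoder.

```python
def lerp(z_a, z_b, alpha):
    """Point on the straight line from z_a (alpha=0) to z_b (alpha=1)."""
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(z_a, z_b)]


def linear_walk(z_a, z_b, steps):
    """Evenly spaced latent vectors from z_a to z_b, endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [lerp(z_a, z_b, i / (steps - 1)) for i in range(steps)]


# Toy 2-D latents, only to show the call shape.
walk = linear_walk([0.0, 2.0], [1.0, 0.0], steps=5)
```

Decoding each vector in `walk` in order would give a sequence of faces that gradually changes from the first image to the second.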

## Vector arithmetic for visual attributes

First, preprocess the CelebA annotations to split the dataset into two parts for each attribute: images that have the attribute and images that do not.

cd celebA

python get_binary_attr.py

# should generate file: 'celebA/attr_binary_list.txt'

cd ..

th linear_walk_attribute_vector.lua
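A minimal sketch of the underlying arithmetic, assuming the attribute vector is the mean latent vector of images with the attribute minus the mean latent vector of images without it. Labels follow the CelebA annotation convention (1 for present, -1 for absent). All function names and toy values are hypothetical and are not taken from `linear_walk_attribute_vector.lua`.

```python
def mean_vector(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def attribute_vector(latents, labels):
    """Mean latent with the attribute minus mean latent without it."""
    with_attr = [z for z, y in zip(latents, labels) if y == 1]
    without_attr = [z for z, y in zip(latents, labels) if y == -1]
    if not with_attr or not without_attr:
        raise ValueError("need at least one example in each group")
    pos, neg = mean_vector(with_attr), mean_vector(without_attr)
    return [p - q for p, q in zip(pos, neg)]


def apply_attribute(z, direction, alpha):
    """Move z along the attribute direction; alpha sets strength and sign."""
    return [v + alpha * d for v, d in zip(z, direction)]


# Toy 2-D latents; real ones would come from the pretrained encoder.
latents = [[1.0, 0.0], [3.0, 0.0], [0.0, 1.0], [0.0, 3.0]]
labels = [1, 1, -1, -1]
direction = attribute_vector(latents, labels)
z_edited = apply_attribute([0.0, 0.0], direction, alpha=0.5)
```

A positive `alpha` adds the attribute and a negative one removes it; stepping `alpha` over a range gives a walk along the attribute direction.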

Here are some examples:

Better face attribute manipulation by incorporating a GAN

Credits

The code is based on neural-style, dcgan.torch, VAE-Torch and texture_nets.

Citation

If you find this code useful for your research, please cite:

@inproceedings{hou2017deep,

title={Deep Feature Consistent Variational Autoencoder},

author={Hou, Xianxu and Shen, Linlin and Sun, Ke and Qiu, Guoping},

booktitle={Applications of Computer Vision (WACV), 2017 IEEE Winter Conference on},

pages={1133--1141},

year={2017},

organization={IEEE}

}

@article{hou2019improving,

title={Improving Variational Autoencoder with Deep Feature Consistent and Generative Adversarial Training},

author={Hou, Xianxu and Sun, Ke and Shen, Linlin and Qiu, Guoping},

journal={Neurocomputing},

volume={341},

pages={183--194},

year={2019},

publisher={Elsevier},

}