aiff22 / Dped

Programming Languages

Labels

Projects that are alternatives of or similar to Dped

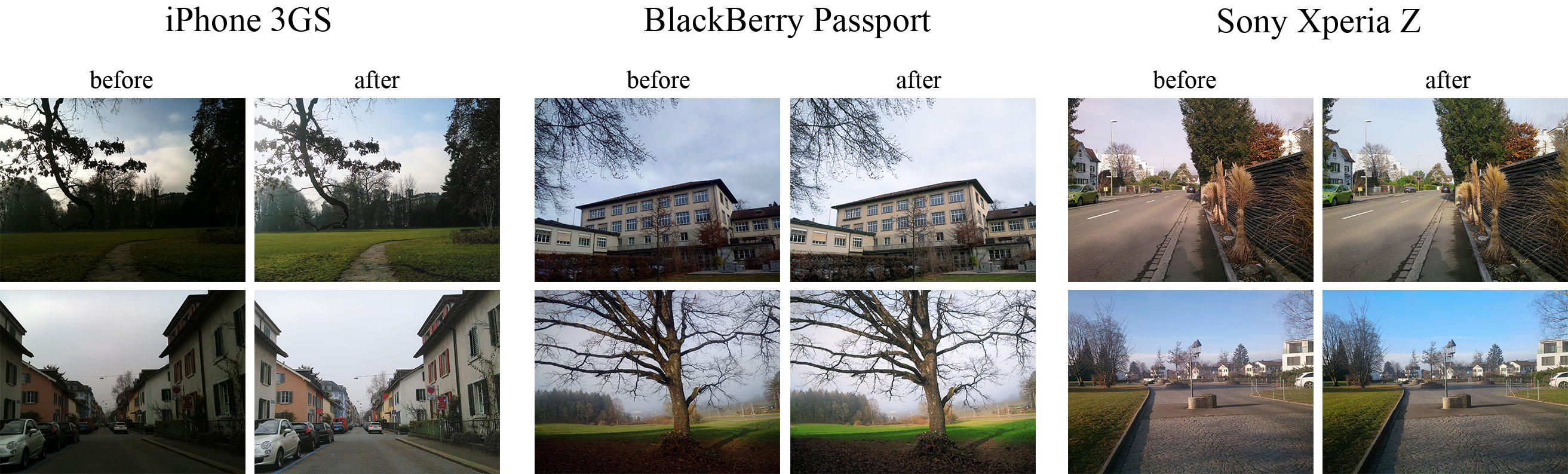

DSLR-Quality Photos on Mobile Devices with Deep Convolutional Networks

1. Overview [Paper] [Project webpage] [Enhancing RAW photos] [Rendering Bokeh Effect]

The provided code implements the paper that presents an end-to-end deep learning approach for translating ordinary photos from smartphones into DSLR-quality images. The learned model can be applied to photos of arbitrary resolution, while the methodology itself is generalized to any type of digital camera. More visual results can be found here.

2. Prerequisites

- Python + Pillow, scipy, numpy, imageio packages

- TensorFlow 1.x / 2.x + CUDA CuDNN

- Nvidia GPU

3. First steps

- Download the pre-trained VGG-19 model Mirror and put it into

vgg_pretrained/folder - Download DPED dataset (patches for CNN training) and extract it into

dped/folder.

This folder should contain three subolders:sony/,iphone/andblackberry/

4. Train the model

python train_model.py model=<model>

Obligatory parameters:

model:iphone,blackberryorsony

Optional parameters and their default values:

batch_size:50- batch size [smaller values can lead to unstable training]

train_size:30000- the number of training patches randomly loaded eacheval_stepiterations

eval_step:1000- eacheval_stepiterations the model is saved and the training data is reloaded

num_train_iters:20000- the number of training iterations

learning_rate:5e-4- learning rate

w_content:10- the weight of the content loss

w_color:0.5- the weight of the color loss

w_texture:1- the weight of the texture [adversarial] loss

w_tv:2000- the weight of the total variation loss

dped_dir:dped/- path to the folder with DPED dataset

vgg_dir:vgg_pretrained/imagenet-vgg-verydeep-19.mat- path to the pre-trained VGG-19 network

Example:

python train_model.py model=iphone batch_size=50 dped_dir=dped/ w_color=0.7

5. Test the provided pre-trained models

python test_model.py model=<model>

Obligatory parameters:

model:iphone_orig,blackberry_origorsony_orig

Optional parameters:

test_subset:full,small- all 29 or only 5 test images will be processed

resolution:orig,high,medium,small,tiny- the resolution of the test images [origmeans original resolution]

use_gpu:true,false- run models on GPU or CPU

dped_dir:dped/- path to the folder with DPED dataset

Example:

python test_model.py model=iphone_orig test_subset=full resolution=orig use_gpu=true

6. Test the obtained models

python test_model.py model=<model>

Obligatory parameters:

model:iphone,blackberryorsony

Optional parameters:

test_subset:full,small- all 29 or only 5 test images will be processed

iteration:allor<number>- get visual results for all iterations or for the specific iteration,

<number>must be a multiple ofeval_step

resolution:orig,high,medium,small,tiny- the resolution of the test images [origmeans original resolution]

use_gpu:true,false- run models on GPU or CPU

dped_dir:dped/- path to the folder with DPED dataset

Example:

python test_model.py model=iphone iteration=13000 test_subset=full resolution=orig use_gpu=true

7. Folder structure

dped/- the folder with the DPED dataset

models/- logs and models that are saved during the training process

models_orig/- the provided pre-trained models foriphone,sonyandblackberry

results/- visual results for small image patches that are saved while training

vgg-pretrained/- the folder with the pre-trained VGG-19 network

visual_results/- processed [enhanced] test images

load_dataset.py- python script that loads training data

models.py- architecture of the image enhancement [resnet] and adversarial networks

ssim.py- implementation of the ssim score

train_model.py- implementation of the training procedure

test_model.py- applying the pre-trained models to test images

utils.py- auxiliary functions

vgg.py- loading the pre-trained vgg-19 network

8. Problems and errors

What if I get an error: "OOM when allocating tensor with shape [...]"?

Your GPU does not have enough memory. If this happens during the training process:

- Decrease the size of the training batch [

batch_size]. Note however that smaller values can lead to unstable training.

If this happens while testing the models:

- Run the model on CPU (set the parameter

use_gputofalse). Note that this can take up to 5 minutes per image.

- Use cropped images, set the parameter

resolutionto:

high- center crop of size1680x1260pixels

medium- center crop of size1366x1024pixels

small- center crop of size1024x768pixels

tiny- center crop of size800x600pixels

The less resolution is - the smaller part of the image will be processed

9. Citation

@inproceedings{ignatov2017dslr,

title={DSLR-Quality Photos on Mobile Devices with Deep Convolutional Networks},

author={Ignatov, Andrey and Kobyshev, Nikolay and Timofte, Radu and Vanhoey, Kenneth and Van Gool, Luc},

booktitle={Proceedings of the IEEE International Conference on Computer Vision},

pages={3277--3285},

year={2017}

}

10. Any further questions?

Please contact Andrey Ignatov ([email protected]) for more information