Benchmarking trajectory inference methods

This repo contains the scripts to reproduce the manuscript:

A comparison of single-cell trajectory inference methods

Wouter Saelens*, Robrecht Cannoodt*, Helena Todorov, Yvan Saeys

doi:10.1038/s41587-019-0071-9

Dynverse

Under the hood, dynbenchmark uses most dynverse packages to run the methods, compare their output to a gold standard, and plot the results. Check out dynverse.org for an overview!

Experiments

From start to finish, the repository is divided into several experiments, each with its own scripts and results. Each experiment is documented with GitHub READMEs, so it can easily be explored by going to the appropriate folder:

| # | id | scripts | results |
|---|---|---|---|
| 1 | Datasets | 📄➡ | 📊➡ |
| 2 | Metrics | 📄➡ | 📊➡ |
| 3 | Methods | 📄➡ | 📊➡ |
| 4 | Method testing | 📄➡ | 📊➡ |
| 5 | Scaling | 📄➡ | 📊➡ |
| 6 | Benchmark | 📄➡ | 📊➡ |
| 7 | Stability | 📄➡ | 📊➡ |
| 8 | Summary | 📄➡ | 📊➡ |
| 9 | Guidelines | 📄➡ | 📊➡ |
| 10 | Benchmark interpretation | 📄➡ | 📊➡ |
| 11 | Example predictions | 📄➡ | 📊➡ |
| 12 | Manuscript | 📄➡ | 📊➡ |
|  | Varia | 📄➡ |  |
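To get a quick overview of which experiments in a local checkout have a README and a results folder, a minimal shell sketch could look like the one below. It assumes, based on the table's columns, that each experiment is a subfolder of `scripts/` with a matching subfolder of the same name under `results/`; the function name `list_experiments` is hypothetical and not part of dynbenchmark.

```shell
#!/bin/sh
# Hypothetical helper: list experiment folders and whether each has a
# README.md and a matching results folder.
# ASSUMPTION: experiments live in <repo>/scripts/<name>/ and <repo>/results/<name>/.
list_experiments() {
  repo=${1:-.}
  for dir in "$repo"/scripts/*/; do
    [ -d "$dir" ] || continue            # glob matched nothing
    name=$(basename "$dir")              # basename drops the trailing slash
    if [ -f "$dir/README.md" ]; then
      readme="README"
    else
      readme="no README"
    fi
    if [ -d "$repo/results/$name" ]; then
      results="results"
    else
      results="no results"
    fi
    echo "$name: $readme, $results"
  done
}
```

Run it from the repository root as `list_experiments .`; it only reads the directory tree and changes nothing.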

We also have several additional subfolders:

- Manuscript: Source files for producing the manuscript.

- Package: An R package with several helper functions for organizing the benchmark and rendering the manuscript.

- Raw: Files generated by hand, such as figures and spreadsheets.

- Derived: Intermediate data files produced by the scripts. These files are not committed to git.

Guidelines

Based on the results of the benchmark, we provide context-dependent user guidelines, available as a shiny app. This app is integrated within the dyno pipeline, which also includes the wrappers used in the benchmarking and other packages for visualising and interpreting the results.

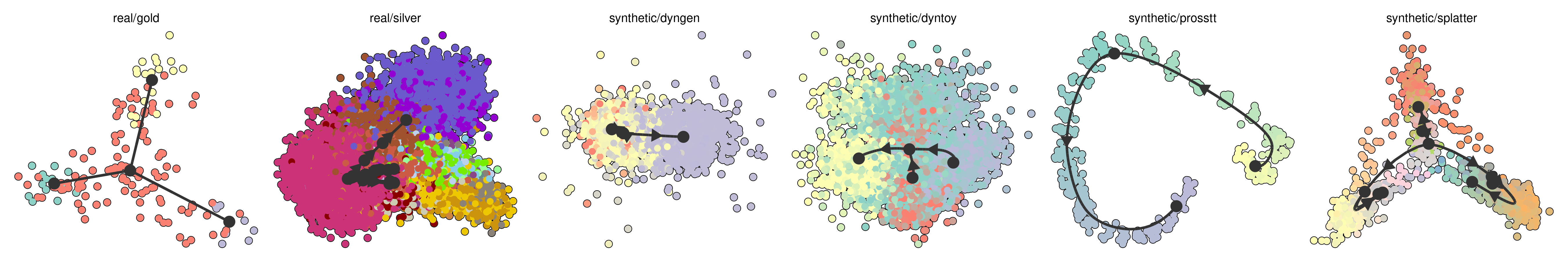

Datasets

The benchmarking pipeline generates (and uses) the following datasets:

- Gold standard single-cell datasets, both real and synthetic, used to evaluate the trajectory inference methods.

- The performance of the methods, used for the results overview figure and the dynguidelines app.

- General information about trajectory inference methods, available as a data frame in dynmethods::methods.

Methods

All methods are wrapped as both Docker and Singularity containers, which can easily be run using dynmethods.

Installation

dynbenchmark has been tested using R version 3.5.1 on Linux. While running the methods also works on Windows and Mac (see dyno), running the benchmark is currently not supported on these operating systems, because many of the commands are Linux-specific.

In R, you can install dynbenchmark's helper package and its dependencies from GitHub using:

# install.packages("devtools")

devtools::install_github("dynverse/dynbenchmark/package")

This will install several other “dynverse” packages. Depending on the number of R packages already installed, this installation should take approximately 5 to 30 minutes.

On Linux, you will need to install udunits and ImageMagick:

- Debian / Ubuntu / Linux Mint:

sudo apt-get install libudunits2-dev imagemagick

- Fedora / CentOS / RHEL:

sudo dnf install udunits2-devel ImageMagick-c++-devel
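If you script your setup, the right command from the list above can be picked from the distribution ID. The sketch below is a hypothetical helper (`sysdeps_cmd` is not part of dynbenchmark); it assumes the ID comes from the `ID` field of `/etc/os-release` and only maps the distributions named above. It prints the command rather than running it, so you can review it before using `sudo`.

```shell
#!/bin/sh
# Hypothetical helper: print the udunits/ImageMagick install command for a
# distribution ID (the ID field of /etc/os-release). Does not run anything.
sysdeps_cmd() {
  case "$1" in
    debian|ubuntu|linuxmint)
      echo "sudo apt-get install libudunits2-dev imagemagick" ;;
    fedora|centos|rhel)
      echo "sudo dnf install udunits2-devel ImageMagick-c++-devel" ;;
    *)
      echo "unsupported distribution: $1" >&2
      return 1 ;;
  esac
}
```

For example, `(. /etc/os-release && sysdeps_cmd "$ID")` prints the command for the current machine; the subshell keeps the variables from `/etc/os-release` out of your session.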

Docker or Singularity (version ≥ 3.0) has to be installed to run TI methods. We suggest Docker on Windows and macOS, while both Docker and Singularity work well on Linux. Singularity is strongly recommended when running the methods on shared computing clusters.

On Windows 10, you can install Docker CE; older Windows installations require the Docker Toolbox.

You can test whether docker is correctly installed by running:

dynwrap::test_docker_installation(detailed = TRUE)

## ✔ Docker is installed

## ✔ Docker daemon is running

## ✔ Docker is at correct version (>1.0): 1.39

## ✔ Docker is in linux mode

## ✔ Docker can pull images

## ✔ Docker can run image

## ✔ Docker can mount temporary volumes

## ✔ Docker test successful -----------------------------------------------------------------

## [1] TRUE

Similarly, for Singularity:

dynwrap::test_singularity_installation(detailed = TRUE)

## ✔ Singularity is installed

## ✔ Singularity is at correct version (>=3.0): v3.0.0-13-g0273e90f is installed

## ✔ Singularity can pull and run a container from Dockerhub

## ✔ Singularity can mount temporary volumes

## ✔ Singularity test successful ------------------------------------------------------------

## [1] TRUE

These commands will give helpful tips if some parts of the installation are missing.
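The dynwrap functions above are the recommended check. For a rough, R-free check of the same requirement (Docker with a reachable daemon, or Singularity ≥ 3.0), a minimal shell sketch is shown below. Both function names are hypothetical, and it assumes the version number is the last field printed by `singularity --version` (e.g. `singularity version 3.0.0-13-g0273e90f` for 3.x, or `2.6.1-dist` for 2.x).

```shell
#!/bin/sh
# Hypothetical helpers for a quick container-runtime check outside R.

# Succeeds if a Singularity version string has major version >= 3.
# Accepts forms such as "3.0.0-13-g0273e90f", "v3.0.0" or "2.6.1-dist".
singularity_version_ok() {
  v=${1#v}                      # drop an optional leading "v"
  major=${v%%.*}                # text before the first dot
  case "$major" in
    ''|*[!0-9]*) return 1 ;;    # empty or non-numeric: reject
  esac
  [ "$major" -ge 3 ]
}

# Prints which runtime was found; fails if neither is usable.
check_container_runtime() {
  # `docker info` fails when the daemon is not running or not reachable.
  if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
    echo "docker"
    return 0
  fi
  if command -v singularity >/dev/null 2>&1; then
    # ASSUMPTION: the last field of `singularity --version` is the version.
    version=$(singularity --version | awk '{print $NF}')
    if singularity_version_ok "$version"; then
      echo "singularity $version"
      return 0
    fi
  fi
  echo "no usable docker or singularity (>= 3.0) found" >&2
  return 1
}
```

Running `check_container_runtime` prints the runtime it found, or an error on stderr with a non-zero exit status. Unlike `dynwrap::test_docker_installation()`, it does not try to pull or run an image.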