
Enjoy-Hamburger 🍔

Official implementation of Hamburger, Is Attention Better Than Matrix Decomposition? (ICLR 2021)

Under construction.

Introduction

This repo provides the official implementation of Hamburger for further research. We sincerely hope that this paper can bring you inspiration about the Attention Mechanism, especially how the low-rankness and the optimization-driven method can help model the so-called Global Information in deep learning.

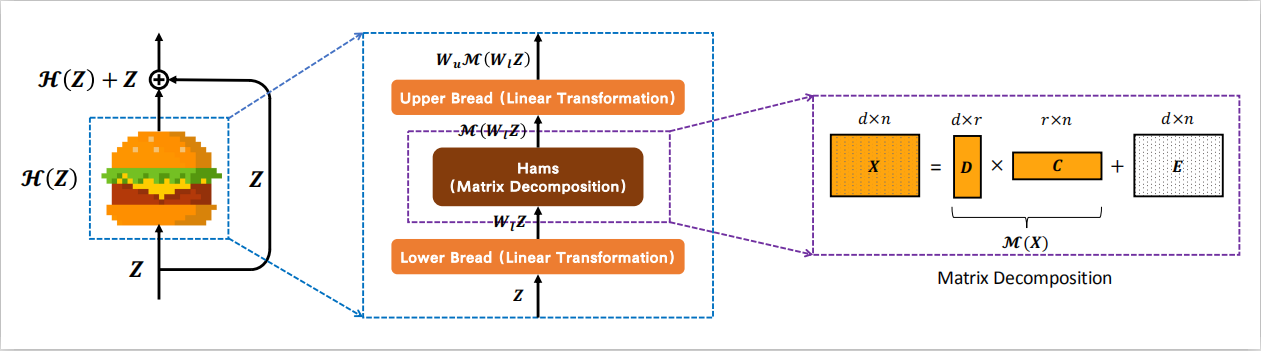

We model the global context issue as a low-rank completion problem and show that its optimization algorithms can help design global information blocks. This paper then proposes a series of Hamburgers, in which we employ the optimization algorithms for solving matrix decompositions (MDs) to factorize the input representations into sub-matrices and reconstruct a low-rank embedding. Hamburgers with different MDs can perform favorably against the popular global context module self-attention when the gradients back-propagated through the MDs are handled carefully.
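The "factorize, then reconstruct a low-rank embedding" step can be sketched as follows. This is a minimal NumPy illustration, not the code released in this repo: it assumes non-negative matrix factorization (NMF) with the standard multiplicative updates as the MD, and the names `nmf_low_rank`, `relative_error`, `rank`, and `steps` are hypothetical. It leaves out the rest of the Hamburger module, including how gradients are back-propagated through the MD.

```python
import numpy as np


def nmf_low_rank(x, rank=4, steps=50, eps=1e-6, seed=0):
    """Return a low-rank reconstruction of a non-negative matrix x of shape (d, n).

    Illustrative sketch only: x is approximated as bases @ codes, where
    bases has shape (d, rank) and codes has shape (rank, n).
    """
    rng = np.random.default_rng(seed)
    d, n = x.shape
    bases = rng.random((d, rank)) + eps  # dictionary, shape (d, rank)
    codes = rng.random((rank, n)) + eps  # coefficients, shape (rank, n)
    for _ in range(steps):
        # Multiplicative updates keep both factors non-negative.
        codes *= (bases.T @ x) / (bases.T @ bases @ codes + eps)
        bases *= (x @ codes.T) / (bases @ (codes @ codes.T) + eps)
    return bases @ codes


def relative_error(x, x_hat):
    """Frobenius-norm reconstruction error relative to the norm of x."""
    return np.linalg.norm(x - x_hat) / np.linalg.norm(x)
```

Calling `nmf_low_rank(x, rank=4)` on a non-negative feature matrix returns a matrix of the same shape whose rank is at most 4; running more `steps` should not increase `relative_error`.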

We are working on some exciting topics. Please wait for our new papers!

Enjoy Hamburger, please!

Organization

This section introduces the organization of this repo.

We strongly recommend reading the blog (coming soon) as a supplement to the paper!

- blog.

  - Some random thoughts about Hamburger and beyond.

  - Possible directions based on Hamburger.

  - FAQ.

- seg.

  - We provide the PyTorch implementation of Hamburger (V1) in the paper and an enhanced version (V2) flavored with Cheese. Some experimental features are included in V2+.

  - We release the codebase for systematic research on the PASCAL VOC dataset, including the two-stage training on the `trainaug` and `trainval` datasets and the MSFlip test.

  - We offer three checkpoints of HamNet: one reaches 85.90+ with the test server link, while the other two reach 85.80+ with the test server link 1 and link 2. You can reproduce the test results using the checkpoints combined with the MSFlip test code.

  - Statistics about HamNet that might ease further research.

- gan.

  - Official implementation of Hamburger in TensorFlow.

  - Data preprocessing code for using ImageNet in tensorflow-datasets. (Possibly useful if you hope to run the JAX code of BYOL or other ImageNet training code with the Cloud TPUs.)

  - Training and evaluation protocol of HamGAN on ImageNet.

  - Checkpoints of HamGAN-strong and HamGAN-baby.
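For readers unfamiliar with the MSFlip test mentioned under seg, the sketch below shows the general idea, assuming MSFlip refers to the common multi-scale plus horizontal-flip test-time averaging used in semantic segmentation. It is not the test code shipped in this repo: `ms_flip_predict`, `resize_nearest`, the `predict` callable, and the default `scales` are all hypothetical, and nearest-neighbour resizing is used only to keep the example dependency-free.

```python
import numpy as np


def resize_nearest(a, h, w):
    """Nearest-neighbour resize of an array shaped (..., H, W) to (..., h, w)."""
    rows = np.arange(h) * a.shape[-2] // h
    cols = np.arange(w) * a.shape[-1] // w
    return a[..., rows[:, None], cols[None, :]]


def ms_flip_predict(predict, image, scales=(0.5, 1.0, 1.5)):
    """Average per-pixel scores over several scales and horizontal flips.

    `predict` is a hypothetical callable mapping an image of shape (C, H, W)
    to class scores of shape (K, H, W). The scale values are placeholders.
    """
    _, h, w = image.shape
    total = None
    for s in scales:
        sh, sw = max(1, round(h * s)), max(1, round(w * s))
        scaled = resize_nearest(image, sh, sw)
        for flip in (False, True):
            inp = scaled[..., ::-1] if flip else scaled
            scores = predict(inp)
            if flip:
                scores = scores[..., ::-1]  # undo the flip on the output
            scores = resize_nearest(scores, h, w)  # back to the input size
            total = scores if total is None else total + scores
    return total / (2 * len(scales))
```

The final label map would then be the per-pixel argmax over the class axis of the averaged scores.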

TODO:

- [ ] README doc for HamGAN.

- [ ] PyTorch Hamburger with less encapsulation.

- [ ] Suggestions for using and further developing Hamburger.

- [ ] Blog in both English and Chinese.

- [ ] We also consider adding a collection of popular context modules to this repo. It depends on the time. No guarantee. Perhaps GuGu 🕊️ (which means standing someone up).

Citation

If you find our work interesting or helpful to your research, please consider citing Hamburger. :)

@inproceedings{
    ham,
    title={Is Attention Better Than Matrix Decomposition?},
    author={Zhengyang Geng and Meng-Hao Guo and Hongxu Chen and Xia Li and Ke Wei and Zhouchen Lin},
    booktitle={International Conference on Learning Representations},
    year={2021},
}

Contact

Feel free to contact me if you have additional questions or are interested in collaboration. Please drop me an email at [email protected]. Find me on Twitter. Thank you!

Responses to recent emails may be delayed until March 26th due to the ICLR deadlines. I am sorry, but people are always deadline-driven. QAQ

Acknowledgments

Our research is supported with Cloud TPUs from Google's TensorFlow Research Cloud (TFRC). It was a nice and joyful experience with the TFRC program. Thank you!

We would like to sincerely thank EMANet, PyTorch-Encoding, YLG, and TF-GAN for their awesome released code.