lucidrains / Isab Pytorch

Licence: MIT

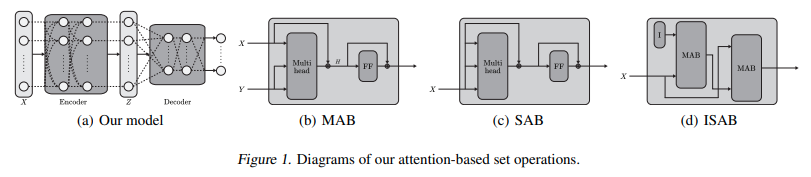

An implementation of (Induced) Set Attention Block, from the Set Transformers paper

Stars: ✭ 21

Programming Languages

python


Projects that are alternatives to or similar to Isab Pytorch

Performer Pytorch

An implementation of Performer, a linear attention-based transformer, in Pytorch

Stars: ✭ 546 (+2500%)

Mutual labels: artificial-intelligence, attention-mechanism, attention

Lambda Networks

Implementation of LambdaNetworks, a new approach to image recognition that reaches SOTA with less compute

Stars: ✭ 1,497 (+7028.57%)

Mutual labels: artificial-intelligence, attention-mechanism, attention

Global Self Attention Network

A Pytorch implementation of Global Self-Attention Network, a fully-attention backbone for vision tasks

Stars: ✭ 64 (+204.76%)

Mutual labels: artificial-intelligence, attention-mechanism, attention

Self Attention Cv

Implementation of various self-attention mechanisms focused on computer vision. Ongoing repository.

Stars: ✭ 209 (+895.24%)

Mutual labels: artificial-intelligence, attention-mechanism, attention

ntua-slp-semeval2018

Deep-learning models of NTUA-SLP team submitted in SemEval 2018 tasks 1, 2 and 3.

Stars: ✭ 79 (+276.19%)

Mutual labels: attention, attention-mechanism

automatic-personality-prediction

[AAAI 2020] Modeling Personality with Attentive Networks and Contextual Embeddings

Stars: ✭ 43 (+104.76%)

Mutual labels: attention, attention-mechanism

NTUA-slp-nlp

💻Speech and Natural Language Processing (SLP & NLP) Lab Assignments for ECE NTUA

Stars: ✭ 19 (-9.52%)

Mutual labels: attention, attention-mechanism

Vit Pytorch

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch

Stars: ✭ 7,199 (+34180.95%)

Mutual labels: artificial-intelligence, attention-mechanism

Hierarchical-Word-Sense-Disambiguation-using-WordNet-Senses

Word Sense Disambiguation using Word Specific models, All word models and Hierarchical models in Tensorflow

Stars: ✭ 33 (+57.14%)

Mutual labels: attention, attention-mechanism

Alphafold2

To eventually become an unofficial Pytorch implementation / replication of Alphafold2, as details of the architecture get released

Stars: ✭ 298 (+1319.05%)

Mutual labels: artificial-intelligence, attention-mechanism

visualization

A collection of visualization functions

Stars: ✭ 189 (+800%)

Mutual labels: attention, attention-mechanism

datastories-semeval2017-task6

Deep-learning model presented in "DataStories at SemEval-2017 Task 6: Siamese LSTM with Attention for Humorous Text Comparison".

Stars: ✭ 20 (-4.76%)

Mutual labels: attention, attention-mechanism

AoA-pytorch

A Pytorch implementation of Attention on Attention module (both self and guided variants), for Visual Question Answering

Stars: ✭ 33 (+57.14%)

Mutual labels: attention, attention-mechanism

Linear-Attention-Mechanism

Attention mechanism

Stars: ✭ 27 (+28.57%)

Mutual labels: attention, attention-mechanism

Timesformer Pytorch

Implementation of TimeSformer from Facebook AI, a pure attention-based solution for video classification

Stars: ✭ 225 (+971.43%)

Mutual labels: artificial-intelligence, attention-mechanism

Bottleneck Transformer Pytorch

Implementation of Bottleneck Transformer in Pytorch

Stars: ✭ 408 (+1842.86%)

Mutual labels: artificial-intelligence, attention-mechanism

Neural sp

End-to-end ASR/LM implementation with PyTorch

Stars: ✭ 408 (+1842.86%)

Mutual labels: attention-mechanism, attention

Pytorch Original Transformer

My implementation of the original transformer model (Vaswani et al.). I've additionally included the playground.py file for visualizing otherwise seemingly hard concepts. Currently included IWSLT pretrained models.

Stars: ✭ 411 (+1857.14%)

Mutual labels: attention-mechanism, attention

h-transformer-1d

Implementation of H-Transformer-1D, Hierarchical Attention for Sequence Learning

Stars: ✭ 121 (+476.19%)

Mutual labels: attention, attention-mechanism

Induced Set Attention Block (ISAB) - Pytorch

A concise implementation of (Induced) Set Attention Block, from the Set Transformer paper. The paper proposes reducing the cost of attention over a set of n elements from O(n²) to O(mn), where m is the number of inducing points (learned queries).
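The O(mn) claim can be made concrete with a minimal sketch of the two-step ISAB computation. The sketch is written against plain PyTorch's `nn.MultiheadAttention`, not the `isab_pytorch` package. The class names `MAB` and `ISABSketch`, the post-norm residual layout, the GELU feedforward, and the "True = real element" mask convention are all our assumptions. They loosely follow the MAB definition in the Set Transformer paper, and the library's internals may differ.

```python
import torch
from torch import nn


class MAB(nn.Module):
    """Hypothetical multihead attention block: queries attend to a key/value set,
    followed by residual + LayerNorm and a two-layer feedforward (post-norm)."""

    def __init__(self, dim: int, heads: int):
        super().__init__()
        self.attn = nn.MultiheadAttention(dim, heads, batch_first=True)
        self.norm1 = nn.LayerNorm(dim)
        self.norm2 = nn.LayerNorm(dim)
        self.ff = nn.Sequential(nn.Linear(dim, dim * 4), nn.GELU(), nn.Linear(dim * 4, dim))

    def forward(self, q, kv, key_padding_mask=None):
        # key_padding_mask follows PyTorch's convention: True marks positions to ignore
        attended, _ = self.attn(q, kv, kv, key_padding_mask=key_padding_mask, need_weights=False)
        h = self.norm1(q + attended)
        return self.norm2(h + self.ff(h))


class ISABSketch(nn.Module):
    """Hypothetical ISAB: m learned inducing points summarize the n set elements,
    then the set elements attend back to that m-element summary."""

    def __init__(self, dim: int, heads: int, num_induced_points: int):
        super().__init__()
        self.induced = nn.Parameter(torch.randn(num_induced_points, dim))
        self.mab1 = MAB(dim, heads)
        self.mab2 = MAB(dim, heads)

    def forward(self, x, mask=None):
        # x: (batch, n, dim); mask: (batch, n) bool, True = real element (assumed convention)
        inducing = self.induced.unsqueeze(0).expand(x.shape[0], -1, -1)  # (batch, m, dim)
        pad = ~mask if mask is not None else None  # flip to PyTorch's True = ignore
        h = self.mab1(inducing, x, key_padding_mask=pad)  # m queries x n keys: O(m*n) scores
        out = self.mab2(x, h)                             # n queries x m keys: O(n*m) scores
        return out, h


model = ISABSketch(dim=512, heads=8, num_induced_points=128)
x = torch.randn(2, 1024, 512)
mask = torch.ones(2, 1024, dtype=torch.bool)
out, h = model(x, mask)
print(out.shape, h.shape)  # torch.Size([2, 1024, 512]) torch.Size([2, 128, 512])
```

Neither attention step ever forms an n × n score matrix. Because the inducing points are the only queries in the first step, the m-element summary `h` does not depend on the order of the set elements.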

Install

```bash
$ pip install isab-pytorch
```

Usage

You can either set the number of induced points, in which case the induced points are created as learned parameters of the module. Their updated values are then returned, along with the output, once cross attention completes.

```python
import torch
from isab_pytorch import ISAB

attn = ISAB(
    dim = 512,
    heads = 8,
    num_induced_points = 128
)

x = torch.randn(1, 1024, 512)     # (batch, set size, dim)
m = torch.ones((1, 1024)).bool()  # boolean mask over the 1024 set elements

out, induced_points = attn(x, mask = m) # (1, 1024, 512), (1, 128, 512)
```
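For the shapes in the example above (n = 1024 set elements, m = 128 induced points), the attention-score counts per head work out as follows. This is simple arithmetic under the assumption that ISAB performs exactly two cross-attentions, as in the paper: points attend to the set, then the set attends to the points. The count ignores the cost of the projections and the feedforward layers. The helper function names are illustrative only.

```python
def full_self_attention_scores(n: int) -> int:
    # every element attends to every element: n x n scores
    return n * n


def isab_attention_scores(n: int, m: int) -> int:
    # inducing points attend to the set (m x n), then the set attends back (n x m)
    return m * n + n * m


n, m = 1024, 128
full = full_self_attention_scores(n)
isab = isab_attention_scores(n, m)
print(full, isab, full / isab)  # 1048576 262144 4.0
```

The gap widens as the set grows. At a fixed m, doubling n doubles the ISAB count but quadruples the full self-attention count.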

Or you can leave the number of induced points unset and pass in the induced points yourself (for example, some global memory that propagates down the transformer).

```python
import torch
from torch import nn
from isab_pytorch import ISAB

attn = ISAB(
    dim = 512,
    heads = 8
)

mem = nn.Parameter(torch.randn(128, 512)) # some memory, passed through multiple ISABs
x = torch.randn(1, 1024, 512)

out, mem_updated = attn(x, mem) # (1, 1024, 512), (1, 128, 512)
```
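The idea of memory "passed through multiple ISABs" can be sketched as follows. Each block updates the memory and hands it to the next, so a single shared set of global slots is refined layer by layer. `ISABWithMemory` is a hypothetical, stripped-down block built on `nn.MultiheadAttention`, with residual connections only and no LayerNorm or feedforward. It is not the `isab_pytorch` class. Broadcasting a 2-D `(m, dim)` memory over the batch is our assumption about how shared memory would be handled.

```python
import torch
from torch import nn


class ISABWithMemory(nn.Module):
    """Hypothetical simplified ISAB whose induced points (memory) are passed in
    rather than owned; residual connections only, no LayerNorm or feedforward."""

    def __init__(self, dim: int, heads: int):
        super().__init__()
        self.read_set = nn.MultiheadAttention(dim, heads, batch_first=True)
        self.read_mem = nn.MultiheadAttention(dim, heads, batch_first=True)

    def forward(self, x, mem):
        if mem.ndim == 2:  # shared (m, dim) memory: broadcast it over the batch
            mem = mem.unsqueeze(0).expand(x.shape[0], -1, -1)
        # memory slots attend to the set elements and are updated
        mem = mem + self.read_set(mem, x, x, need_weights=False)[0]
        # set elements attend to the updated memory
        x = x + self.read_mem(x, mem, mem, need_weights=False)[0]
        return x, mem


blocks = nn.ModuleList([ISABWithMemory(dim=512, heads=8) for _ in range(3)])
mem = nn.Parameter(torch.randn(128, 512))  # learned global memory shared by the stack
x = torch.randn(1, 1024, 512)

out, mem_updated = x, mem
for block in blocks:
    out, mem_updated = block(out, mem_updated)  # each block refines the memory and passes it on

print(out.shape, mem_updated.shape)  # torch.Size([1, 1024, 512]) torch.Size([1, 128, 512])
```

Because `mem` is an `nn.Parameter`, gradients from every block in the stack flow back into the same initial memory.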

Citations

```bibtex
@misc{lee2019set,
    title={Set Transformer: A Framework for Attention-based Permutation-Invariant Neural Networks},
    author={Juho Lee and Yoonho Lee and Jungtaek Kim and Adam R. Kosiorek and Seungjin Choi and Yee Whye Teh},
    year={2019},
    eprint={1810.00825},
    archivePrefix={arXiv},
    primaryClass={cs.LG}
}
```
