lucidrains / Global Self Attention Network

License: MIT

A PyTorch implementation of Global Self-Attention Network, a fully attention-based backbone for vision tasks

Stars: ✭ 64

Programming Languages

python


Projects that are alternatives of or similar to Global Self Attention Network

Bottleneck Transformer Pytorch

Implementation of Bottleneck Transformer in Pytorch

Stars: ✭ 408 (+537.5%)

Mutual labels: artificial-intelligence, image-classification, attention-mechanism

Self Attention Cv

Implementation of various self-attention mechanisms focused on computer vision. Ongoing repository.

Stars: ✭ 209 (+226.56%)

Mutual labels: artificial-intelligence, attention-mechanism, attention

Performer Pytorch

An implementation of Performer, a linear attention-based transformer, in Pytorch

Stars: ✭ 546 (+753.13%)

Mutual labels: artificial-intelligence, attention-mechanism, attention

Vit Pytorch

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch

Stars: ✭ 7,199 (+11148.44%)

Mutual labels: artificial-intelligence, image-classification, attention-mechanism

Lambda Networks

Implementation of LambdaNetworks, a new approach to image recognition that reaches SOTA with less compute

Stars: ✭ 1,497 (+2239.06%)

Mutual labels: artificial-intelligence, attention-mechanism, attention

Isab Pytorch

An implementation of (Induced) Set Attention Block, from the Set Transformers paper

Stars: ✭ 21 (-67.19%)

Mutual labels: artificial-intelligence, attention-mechanism, attention

Sianet

An easy to use C# deep learning library with CUDA/OpenCL support

Stars: ✭ 353 (+451.56%)

Mutual labels: artificial-intelligence, image-classification

Neural sp

End-to-end ASR/LM implementation with PyTorch

Stars: ✭ 408 (+537.5%)

Mutual labels: attention-mechanism, attention

Meme Generator

MemeGen is a web application where the user gives an image as input and our tool generates a meme at one click for the user.

Stars: ✭ 57 (-10.94%)

Mutual labels: artificial-intelligence, image-classification

Structured Self Attention

A Structured Self-attentive Sentence Embedding

Stars: ✭ 459 (+617.19%)

Mutual labels: attention-mechanism, attention

Alphafold2

To eventually become an unofficial Pytorch implementation / replication of Alphafold2, as details of the architecture get released

Stars: ✭ 298 (+365.63%)

Mutual labels: artificial-intelligence, attention-mechanism

Pytorch Original Transformer

My implementation of the original transformer model (Vaswani et al.). I've additionally included the playground.py file for visualizing otherwise seemingly hard concepts. Currently included IWSLT pretrained models.

Stars: ✭ 411 (+542.19%)

Mutual labels: attention-mechanism, attention

The Third Eye

An AI-based application that identifies currency and gives audio feedback.

Stars: ✭ 63 (-1.56%)

Mutual labels: artificial-intelligence, image-classification

Artificio

Deep Learning Computer Vision Algorithms for Real-World Use

Stars: ✭ 326 (+409.38%)

Mutual labels: artificial-intelligence, image-classification

Lightnet

🌓 Bringing pjreddie's DarkNet out of the shadows #yolo

Stars: ✭ 322 (+403.13%)

Mutual labels: artificial-intelligence, image-classification

Seq2seq Summarizer

Pointer-generator reinforced seq2seq summarization in PyTorch

Stars: ✭ 306 (+378.13%)

Mutual labels: attention-mechanism, attention

Caer

High-performance Vision library in Python. Scale your research, not boilerplate.

Stars: ✭ 452 (+606.25%)

Mutual labels: artificial-intelligence, image-classification

Residual Attention Network

Residual Attention Network for Image Classification

Stars: ✭ 525 (+720.31%)

Mutual labels: image-classification, attention

Pytorch Gat

My implementation of the original GAT paper (Veličković et al.). I've additionally included the playground.py file for visualizing the Cora dataset, GAT embeddings, an attention mechanism, and entropy histograms. I've supported both Cora (transductive) and PPI (inductive) examples!

Stars: ✭ 908 (+1318.75%)

Mutual labels: attention-mechanism, attention

Timesformer Pytorch

Implementation of TimeSformer from Facebook AI, a pure attention-based solution for video classification

Stars: ✭ 225 (+251.56%)

Mutual labels: artificial-intelligence, attention-mechanism

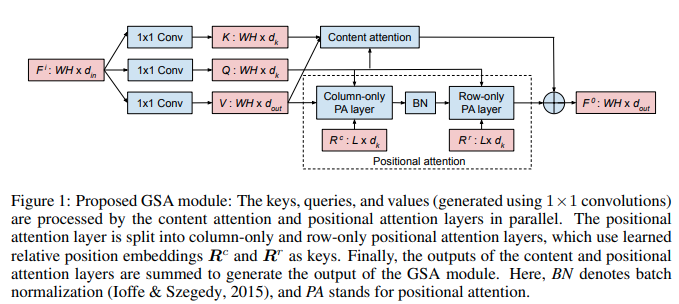

Global Self-attention Network

An implementation of Global Self-Attention Network, which proposes an all-attention vision backbone that achieves better results than convolutions with fewer parameters and less compute.

The authors use a previously discovered linear attention variant with a small modification for further gains (no normalization of the queries), paired with relative positional attention, which is computed axially for efficiency.

The result is an extremely simple circuit composed of 8 einsums, 1 softmax, and normalization.
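The content-attention half of that circuit can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the package's actual code: the function name `content_attention` and the `(batch, heads, channels, positions)` tensor layout are chosen here for readability, and the relative positional branch and the normalization are left out.

```python
import torch

def content_attention(q, k, v):
    """Linear attention over flattened pixels (hypothetical helper, for illustration).

    q, k: (batch, heads, dim_key, n), where n = height * width
    v:    (batch, heads, dim_val, n)
    """
    # Keys are softmax-normalized over the n positions; queries are left as-is.
    k = k.softmax(dim=-1)
    # One (dim_key x dim_val) global context per head, so cost is linear in n.
    context = torch.einsum('bhkn,bhvn->bhkv', k, v)
    # Every position reads the shared context through its own query.
    return torch.einsum('bhkn,bhkv->bhvn', q, context)

# Toy sizes (assumed): a 6 x 6 feature map flattened to 36 positions.
b, h, dk, dv, height, width = 1, 2, 4, 8, 6, 6
n = height * width
q = torch.randn(b, h, dk, n)
k = torch.randn(b, h, dk, n)
v = torch.randn(b, h, dv, n)

out = content_attention(q, k, v)  # shape: (1, 2, 8, 36)
```

Because the keys are multiplied with the values first, the n x n attention map is never materialized, which is what makes attending over every pixel of a feature map affordable.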

Install

$ pip install gsa-pytorch

Usage

import torch
from gsa_pytorch import GSA

gsa = GSA(
    dim = 3,
    dim_out = 64,
    dim_key = 32,
    heads = 8,
    rel_pos_length = 256  # in paper, set to max(height, width). you can also turn this off by omitting this line
)

x = torch.randn(1, 3, 256, 256)
gsa(x) # (1, 64, 256, 256)

Citations

@inproceedings{
    anonymous2021global,
    title={Global Self-Attention Networks},
    author={Anonymous},
    booktitle={Submitted to International Conference on Learning Representations},
    year={2021},
    url={https://openreview.net/forum?id=KiFeuZu24k},
    note={under review}
}
