solarhell / Fontobfuscator

Licence: MIT

Font obfuscation service

Stars: ✭ 125

Programming Languages

python

Projects that are alternatives to, or similar to, Fontobfuscator

Moodle Downloader 2

A Moodle downloader that downloads course content fast from Moodle (eg. lecture pdfs)

Stars: ✭ 118 (-5.6%)

Mutual labels: crawler

Examples Of Web Crawlers

Some very interesting Python crawler examples that are fairly beginner-friendly, mainly scraping sites such as Taobao, Tmall, WeChat, Douban and QQ.

Stars: ✭ 10,724 (+8479.2%)

Mutual labels: crawler

Sentinel Crawler

Xenomorph Crawler, a concise, declarative and observable distributed crawler (Node / Go / Java / Rust) for Web, RDB and OS that can also act as a monitor (with Prometheus) or ETL for infrastructure 💫 Multi-language executor, distributed crawler

Stars: ✭ 118 (-5.6%)

Mutual labels: crawler

Decryptlogin

APIs for logging in to some websites using requests.

Stars: ✭ 1,861 (+1388.8%)

Mutual labels: crawler

Memex Explorer

Viewers for statistics and dashboarding of Domain Search Engine data

Stars: ✭ 115 (-8%)

Mutual labels: crawler

Baiducrawler

Sample of using proxies to crawl baidu search results.

Stars: ✭ 116 (-7.2%)

Mutual labels: crawler

Qqmusicspider

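A Scrapy-based QQ Music crawler (QQ Music Spider) that scrapes song information, lyrics, featured comments and more, and shares a music corpus of 490,000+ items from the top 6,400 mainland China, Hong Kong and Taiwan singers on QQ Music.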

Stars: ✭ 120 (-4%)

Mutual labels: crawler

Docs

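Source code for 《数据采集从入门到放弃》 ("Data Collection: From Beginner to Giving Up"). Contents: introduction to crawlers, the job market, crawler-engineer interview questions; introduction to the HTTP protocol; using Requests; introduction to the XPath parser; MongoDB and MySQL; multithreaded crawlers; introduction to Scrapy; introduction to Scrapy-redis; deploying with Docker; managing Docker clusters with Nomad; querying Docker logs with EFK.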

Stars: ✭ 118 (-5.6%)

Mutual labels: crawler

Black Widow

GUI based offensive penetration testing tool (Open Source)

Stars: ✭ 124 (-0.8%)

Mutual labels: crawler

Skill Share Crawler Dl

Download Skillshare videos by ID or by class.

Stars: ✭ 122 (-2.4%)

Mutual labels: crawler

Php Crawler

A php crawler that finds emails on the internets

Stars: ✭ 119 (-4.8%)

Mutual labels: crawler

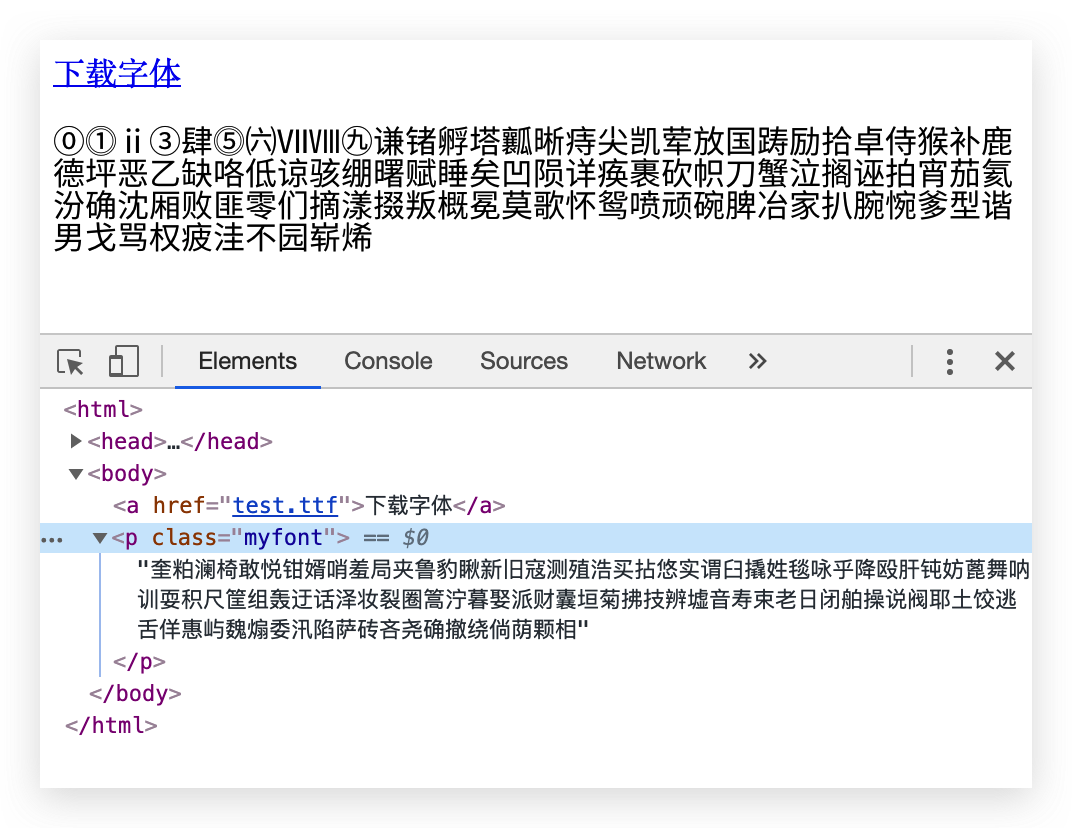

fontObfuscator

You are welcome to join the crawler discussion group: https://t.me/crawler_discussion

Introduction

Thanks to the author of the base font.

Built on the fontTools package, it can obfuscate English letters, digits and most CJK characters.

The generated fonts can be used to counter web scrapers and to protect private information.

The service can output a local font file or base64-encoded font content, and can also upload the font to Alibaba Cloud OSS.
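As a rough illustration of what base64 output is good for: a font can be embedded directly in a page's CSS as a data URI, so no separate font file has to be served. The sketch below is not this project's code; the function name font_face_css is hypothetical, and it assumes the font is a TrueType (.ttf) file.

```python
import base64


def font_face_css(font_bytes: bytes, family: str) -> str:
    """Return a CSS @font-face rule embedding a TrueType font as a base64 data URI.

    Hypothetical helper for illustration; not part of fontObfuscator.
    """
    # Standard base64 (RFC 4648) encoding; the result is pure ASCII.
    encoded = base64.b64encode(font_bytes).decode("ascii")
    return (
        "@font-face {\n"
        f"  font-family: '{family}';\n"
        f"  src: url(data:font/ttf;base64,{encoded}) format('truetype');\n"
        "}\n"
    )


if __name__ == "__main__":
    # Placeholder bytes (the 4-byte TrueType sfnt version tag); in practice,
    # read the generated .ttf file in binary mode and pass its contents.
    print(font_face_css(b"\x00\x01\x00\x00", "obfuscated"))
```

For a WOFF font, the MIME type would be font/woff and the format hint format('woff') instead.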

To use Alibaba Cloud OSS, edit config.py.

Because the Sanic framework depends on uvloop, Windows users should refer to this link: https://github.com/huge-success/sanic#installation

Testing

It is recommended to create and activate a Python venv first:

python3 -m venv venv
source venv/bin/activate
pip install -r requirements.txt
python3 test.py

Then open test/demo.html to see the result.

API

To upload the generated font to Alibaba Cloud OSS, set upload to true.

Standard obfuscation (plaintext + shadow text)

curl -X POST \
  http://127.0.0.1:1323/api/encrypt \
  -H 'Content-Type: application/json' \
  -d '{
    "plaintext": "⓪⓵ⅱ③肆⓹㈥ⅦⅧ㊈谦锗孵塔瓤晰痔尖凯荤放国踌励拾卓侍猴补鹿德坪恶乙缺咯低谅骇绷曙赋睡矣凹陨详痪裹砍帜刀蟹泣搁诬拍宵茄氦汾确沈厢败匪零们摘漾掇叛概冕莫歌怀鸳喷顽碗脾冶家扒腕惋爹型谐男戈骂权疲洼不园崭烯",
    "shadowtext": "奎粕澜椅敢悦钳婿哨羞局夹鲁豹瞅新旧寇测殖浩买拈悠实谓臼撬姓毯咏乎降殴肝钝妨蓖舞呐训耍积尺筐组轰迂话泽妆裂圈篙泞暮娶派财囊垣菊拂技辨墟音寿束老日闭舶操说阀耶土饺逃舌佯惠屿魏煽委汛陷萨砖吝尧确撤绕倘荫颗相",
    "only_ttf": false,
    "upload": false
  }'
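The README does not spell out how the two strings relate. Presumably each shadowtext character is paired with the plaintext character at the same position (both example strings are 100 characters long): the generated font maps the shadow character's code point to the plaintext character's glyph, so a page whose source contains the shadow text displays the plaintext. The pure-Python sketch below models that assumed idea with a dict standing in for a font's cmap table (in fontTools, TTFont.getBestCmap() returns such a {code point: glyph name} dict). It is an illustration under that assumption, not this project's implementation; build_obfuscated_cmap and render are hypothetical names.

```python
from typing import Dict

# A cmap maps Unicode code points to glyph names, like the dict returned by
# fontTools' TTFont.getBestCmap(). Here it is a hand-built stand-in.
Cmap = Dict[int, str]


def build_obfuscated_cmap(base_cmap: Cmap, plaintext: str, shadowtext: str) -> Cmap:
    """Point each shadow character at the glyph of the plaintext character
    in the same position (assumed one-to-one pairing)."""
    if len(plaintext) != len(shadowtext):
        raise ValueError("plaintext and shadowtext must have the same length")
    if len(set(shadowtext)) != len(shadowtext):
        # Two plaintext glyphs cannot share one shadow code point.
        raise ValueError("shadowtext characters must be unique")
    obfuscated: Cmap = {}
    for plain_char, shadow_char in zip(plaintext, shadowtext):
        glyph_name = base_cmap.get(ord(plain_char))
        if glyph_name is None:
            raise KeyError(f"base font has no glyph for {plain_char!r}")
        obfuscated[ord(shadow_char)] = glyph_name
    return obfuscated


def render(cmap: Cmap, glyph_to_char: Dict[str, str], text: str) -> str:
    """Simulate what a browser draws: code point -> glyph -> visible character.
    Code points missing from the cmap show as '?', like a .notdef box."""
    return "".join(glyph_to_char.get(cmap.get(ord(ch), ""), "?") for ch in text)


if __name__ == "__main__":
    # Toy base font covering the digits, with glyphs named uni0030 ... uni0039.
    base = {ord(c): f"uni{ord(c):04X}" for c in "0123456789"}
    glyph_to_char = {name: chr(cp) for cp, name in base.items()}
    cmap = build_obfuscated_cmap(base, "0123", "abcd")
    print(render(cmap, glyph_to_char, "dcba"))  # 3210, while the source holds "dcba"
```

In this model the original characters (here 0 to 3) are no longer mapped at all, so text copied from the page source yields only the shadow characters.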

Enhanced obfuscation (name is obfuscated as well)

curl -X POST \
  http://127.0.0.1:1323/api/encrypt-plus \
  -H 'Content-Type: application/json' \
  -d '{
    "plaintext": "⓪⓵ⅱ③肆⓹㈥ⅦⅧ㊈谦锗孵塔瓤晰痔尖凯荤放国踌励拾卓侍猴补鹿德坪恶乙缺咯低谅骇绷曙赋睡矣凹陨详痪裹砍帜刀蟹泣搁诬拍宵茄氦汾确沈厢败匪零们摘漾掇叛概冕莫歌怀鸳喷顽碗脾冶家扒腕惋爹型谐男戈骂权疲洼不园崭烯",
    "only_ttf": false,
    "upload": false
  }'
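Both curl examples can also be sent from Python using only the standard library. This is a minimal sketch that mirrors the JSON bodies shown above; the helper names build_request and send are hypothetical, the address is the one used in the curl examples, and because the response format is not documented here, send returns the raw response body instead of parsing it.

```python
import json
import urllib.request
from typing import Optional

# Default address taken from the curl examples.
API_BASE = "http://127.0.0.1:1323"


def build_request(plaintext: str, shadowtext: Optional[str] = None,
                  only_ttf: bool = False, upload: bool = False) -> urllib.request.Request:
    """Build a POST request mirroring the curl examples.

    With shadowtext, target /api/encrypt (standard obfuscation);
    without it, target /api/encrypt-plus (enhanced obfuscation).
    """
    payload: dict = {"plaintext": plaintext}
    if shadowtext is not None:
        payload["shadowtext"] = shadowtext
    payload["only_ttf"] = only_ttf
    payload["upload"] = upload  # True uploads the font to Alibaba Cloud OSS
    endpoint = "/api/encrypt" if shadowtext is not None else "/api/encrypt-plus"
    # ensure_ascii=False keeps CJK characters as-is; the body is sent as UTF-8.
    body = json.dumps(payload, ensure_ascii=False).encode("utf-8")
    return urllib.request.Request(
        API_BASE + endpoint,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 10.0) -> str:
    """Send the request and return the raw response body (the server must be running)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

With the server started, send(build_request(plaintext, shadowtext)) corresponds to the first curl command and send(build_request(plaintext)) to the second.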
