GAT

Graph Attention Networks (Veličković et al., ICLR 2018): https://arxiv.org/abs/1710.10903

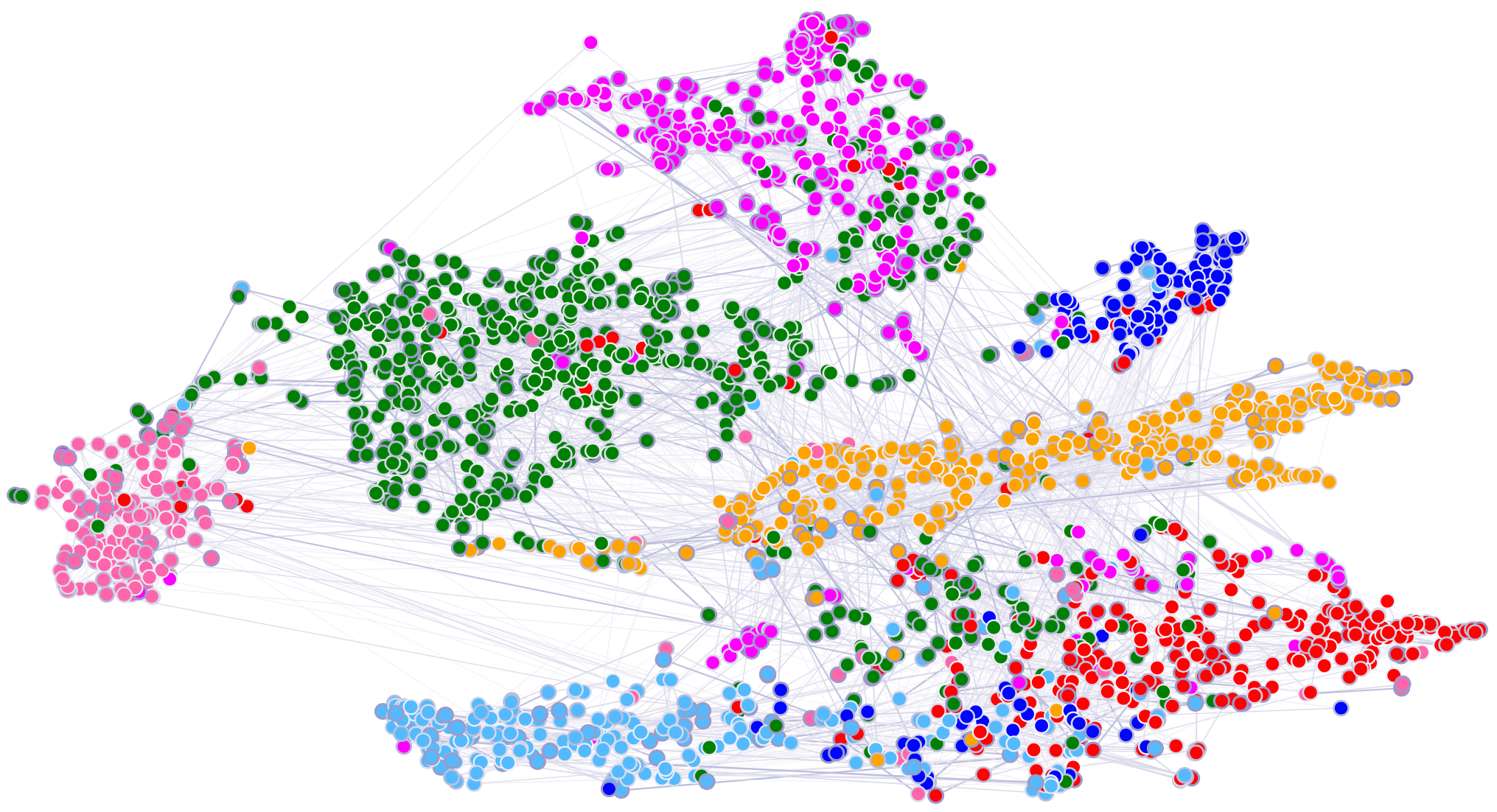

| GAT layer | t-SNE + Attention coefficients on Cora |

|---|---|

|

Overview

Here we provide the implementation of a Graph Attention Network (GAT) layer in TensorFlow, along with a minimal execution example (on the Cora dataset). The repository is organised as follows:

- data/ contains the necessary dataset files for Cora;
- models/ contains the implementation of the GAT network (gat.py);
- pre_trained/ contains a pre-trained Cora model (achieving 84.4% accuracy on the test set);
- utils/ contains:
  - an implementation of an attention head, along with an experimental sparse version (layers.py);
  - preprocessing subroutines (process.py);
  - preprocessing utilities for the PPI benchmark (process_ppi.py).


Finally, execute_cora.py puts all of the above together and may be used to execute a full training run on Cora.
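For orientation, here is a minimal, framework-free sketch of what a single dense attention head computes, following the equations in the paper. Features are transformed by a weight matrix W, each pair of nodes gets an unnormalised score e_ij = LeakyReLU(a^T [W h_i || W h_j]), scores of non-neighbours are masked out with a large negative additive bias (self-loops are kept), a softmax over each neighbourhood yields the coefficients alpha_ij, and the output for node i is the alpha-weighted sum of W h_j passed through an ELU. This is plain Python rather than the TensorFlow code in utils/layers.py. The helper names (adj_to_bias_sketch, attention_head), the list-of-lists matrices and the LeakyReLU slope of 0.2 are illustrative assumptions, and dropout, biases and multiple heads are omitted.

```python
import math
from typing import List

Matrix = List[List[float]]
NEG_INF = -1e9  # large negative bias that masks out non-neighbours


def matmul(x: Matrix, w: Matrix) -> Matrix:
    """Plain matrix product of x (N x F) and w (F x F')."""
    return [[sum(xi[k] * w[k][j] for k in range(len(w))) for j in range(len(w[0]))]
            for xi in x]


def adj_to_bias_sketch(adj: List[List[int]]) -> Matrix:
    """Hypothetical helper: 0.0 where j is a neighbour of i (or i itself), NEG_INF elsewhere."""
    n = len(adj)
    return [[0.0 if (adj[i][j] or i == j) else NEG_INF for j in range(n)]
            for i in range(n)]


def leaky_relu(x: float, slope: float = 0.2) -> float:
    return x if x > 0 else slope * x


def elu(x: float) -> float:
    return x if x > 0 else math.exp(x) - 1.0


def attention_head(h: Matrix, w: Matrix, a: List[float], bias: Matrix, activation=elu):
    """Hypothetical dense single-head GAT layer; returns (outputs, coefficients)."""
    wh = matmul(h, w)  # N x F' transformed features
    f_out = len(w[0])
    # a^T [Wh_i || Wh_j] splits into a_self . Wh_i + a_neigh . Wh_j
    a_self, a_neigh = a[:f_out], a[f_out:]
    f1 = [sum(p * q for p, q in zip(a_self, row)) for row in wh]
    f2 = [sum(p * q for p, q in zip(a_neigh, row)) for row in wh]
    n = len(h)
    alpha = []
    for i in range(n):
        # Masked logits: LeakyReLU score plus the additive bias.
        logits = [leaky_relu(f1[i] + f2[j]) + bias[i][j] for j in range(n)]
        m = max(logits)  # subtract the max for numerical stability
        exps = [math.exp(l - m) for l in logits]
        z = sum(exps)
        alpha.append([e / z for e in exps])
    # Output: attention-weighted sum of transformed neighbour features.
    out = [[activation(sum(alpha[i][j] * wh[j][k] for j in range(n)))
            for k in range(f_out)] for i in range(n)]
    return out, alpha


# Usage on a 3-node path graph 0-1-2 with identity W and a zero attention
# vector, which makes the coefficients uniform over each neighbourhood.
h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
w = [[1.0, 0.0], [0.0, 1.0]]
out, alpha = attention_head(h, w, [0.0] * 4, adj_to_bias_sketch(adj),
                            activation=lambda x: x)
print(alpha)
```

Splitting a into a "self" half and a "neighbour" half means each node's two projections are computed once, so the N x N score matrix is an outer sum of two vectors; the -1e9 bias drives masked entries to zero after the softmax.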

Sparse version

An experimental sparse version is also available; it works only when the batch size is equal to 1.

The sparse model may be found at models/sp_gat.py.

You may execute a full training run of the sparse model on Cora through execute_cora_sparse.py.
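The sparse variant avoids materialising the full N x N score matrix. A rough pure-Python sketch of the idea, assuming the graph is given as a list of directed (i, j) edges: scores are computed only for existing edges (plus self-loops), normalised with a softmax over each node's own neighbours, and used to aggregate neighbour features, so memory grows with the number of edges rather than with N^2. It is not the code in models/sp_gat.py; sparse_attention_head and its edge-list input format are hypothetical, and dropout and the output nonlinearity are left out.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Edge = Tuple[int, int]  # (i, j): node i attends to neighbour j


def sparse_attention_head(h: List[List[float]], w: List[List[float]],
                          a: List[float], edges: List[Edge], slope: float = 0.2):
    """Hypothetical edge-list GAT head; returns (outputs, {edge: coefficient})."""
    n, f_out = len(h), len(w[0])
    # Linear transform W h for every node (N x F').
    wh = [[sum(row[k] * w[k][c] for k in range(len(w))) for c in range(f_out)]
          for row in h]
    a_self, a_neigh = a[:f_out], a[f_out:]
    # Keep only existing edges plus self-loops; nothing is stored for non-edges.
    edge_list = sorted(set(edges) | {(i, i) for i in range(n)})
    by_src: Dict[int, List[Tuple[int, float]]] = defaultdict(list)
    for i, j in edge_list:
        s = (sum(p * q for p, q in zip(a_self, wh[i]))
             + sum(p * q for p, q in zip(a_neigh, wh[j])))
        by_src[i].append((j, s if s > 0 else slope * s))  # LeakyReLU
    # Segment softmax: normalise scores over each node's own neighbourhood.
    alpha: Dict[Edge, float] = {}
    for i, nbrs in by_src.items():
        m = max(s for _, s in nbrs)
        z = sum(math.exp(s - m) for _, s in nbrs)
        for j, s in nbrs:
            alpha[(i, j)] = math.exp(s - m) / z
    # Aggregate: out_i = sum over edges (i, j) of alpha_ij * W h_j.
    out = [[0.0] * f_out for _ in range(n)]
    for (i, j), coef in alpha.items():
        for c in range(f_out):
            out[i][c] += coef * wh[j][c]
    return out, alpha


# Usage: the same 3-node path graph, given as edges in both directions.
h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w = [[1.0, 0.0], [0.0, 1.0]]
edges = [(0, 1), (1, 0), (1, 2), (2, 1)]
out, alpha = sparse_attention_head(h, w, [0.0] * 4, edges)
print(out)
```

Only one dictionary entry exists per edge, which is the point of the sparse formulation; on a dense graph it offers no saving over the masked dense version.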

Dependencies

The script has been tested running under Python 3.5.2, with the following packages installed (along with their dependencies):

- numpy==1.14.1
- scipy==1.0.0
- networkx==2.1
- tensorflow-gpu==1.6.0

In addition, CUDA 9.0 and cuDNN 7 have been used.

Reference

If you make use of the GAT model in your research, please cite the following in your manuscript:

@article{velickovic2018graph,
  title="{Graph Attention Networks}",
  author={Veli{\v{c}}kovi{\'{c}}, Petar and Cucurull, Guillem and Casanova, Arantxa and Romero, Adriana and Li{\`{o}}, Pietro and Bengio, Yoshua},
  journal={International Conference on Learning Representations},
  year={2018},
  url={https://openreview.net/forum?id=rJXMpikCZ},
  note={accepted as poster},
}

For getting started with GATs, as well as graph representation learning in general, we highly recommend the pytorch-GAT repository by Aleksa Gordić. It ships with an inductive (PPI) example as well.

GAT is a popular method for graph representation learning (GRL), with optimised implementations in virtually all standard GRL libraries:

- [PyTorch] PyTorch Geometric

- [PyTorch/TensorFlow] Deep Graph Library

- [TensorFlow] Spektral

- [JAX] jraph

We recommend using one of these (depending on your preferred framework), as their implementations have been more thoroughly battle-tested.

Shortly after release, two unofficial ports of the GAT model to other frameworks surfaced. To honour the effort of their developers as early adopters of the GAT layer, we leave pointers to them here.

- [Keras] keras-gat, developed by Daniele Grattarola;

- [PyTorch] pyGAT, developed by Diego Antognini.

License

MIT