eellak / Gsoc2018 3gm



🚀 Greek Government Gazette Text Mining, Cross Linking and Codification - 3gm

Welcome to the Government Gazette text mining, cross-linking and codification project (3gm for short), which applies Natural Language Processing methods and practices to Greek legislation.

This project aims to provide the most recent version of each law, i.e. an automated codex built with NLP methods and practices.

About the project

We live in a complex regulatory environment. As citizens, we obey government regulations from many authorities. As members of organized societies and groups, we must obey organizational policies and rules. As social beings, we are bound by conventions we make with others. As individuals, we are bound by personal rules of conduct. The sheer number and size of regulations can be daunting. We can agree on some general principles but, at the same time, disagree on how these principles apply to specific situations. To minimize such disagreements, regulators are often obliged to create numerous regulations, or very large ones, to deal with special cases.

In recent years, considerable attention has been paid to analyzing public sector texts with text mining methods. These methods are enabled by modern libraries, algorithms and practices, and have been brought to the forefront by open source projects such as TextBlob, spaCy, SciPy, TensorFlow and NLTK. These collaborative efforts mark a shift towards more efficient understanding of natural language by machines, which can be applied to public documents to provide useful tools for legislators. This emerging field is usually referred to as "Computational Law".

This project was developed under the auspices of the Google Summer of Code 2018 program. It extracts Government Gazette (ΦΕΚ) texts from the National Printing House (ET), cross-links them with each other and, finally, identifies and applies amendments to the legal text. The result is automatic codification of Greek legislation using Natural Language Processing methods and techniques. This will allow the elimination of bureaucratic procedures and great time savings for lawyers looking for the most recent versions of statutes in legal databases. Amendments are detected automatically so that they can be merged into the text of the law they amend, a procedure known as codification of the law. The resulting merged, modified or codified laws show the current text of a law at any moment. This is traditionally done by hand, and our aim was to automate it.
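Codification means folding each amendment into the law it modifies, while still keeping the earlier versions. The sketch below illustrates this under several assumptions:

- A law is modelled as a dict from article ids to article text.
- An amendment is a hypothetical `(action, article, text)` tuple.

`CodifiedLaw`, `apply`, `checkout` and `rollback` are illustrative names, not the project's versioning system.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Hypothetical amendment format: (action, article_id, new_text),
# where action is "add", "replace" or "delete".
Amendment = Tuple[str, str, Optional[str]]


@dataclass
class CodifiedLaw:
    """Every consolidated version of one law, oldest first (illustrative)."""
    law_id: str
    versions: List[Dict[str, str]] = field(default_factory=list)

    def __post_init__(self) -> None:
        if not self.versions:
            self.versions.append({})  # version 0: no articles yet

    @property
    def current(self) -> Dict[str, str]:
        """The codified text as it stands today."""
        return self.versions[-1]

    def apply(self, amendment: Amendment) -> int:
        """Merge one amendment into a new version and return its number."""
        action, article, text = amendment
        snapshot = dict(self.current)  # copy, so older versions stay untouched
        if action in ("add", "replace"):
            if text is None:
                raise ValueError(f"'{action}' needs the article text")
            snapshot[article] = text
        elif action == "delete":
            snapshot.pop(article, None)
        else:
            raise ValueError(f"unknown action: {action!r}")
        self.versions.append(snapshot)
        return len(self.versions) - 1

    def checkout(self, version: int) -> Dict[str, str]:
        """Return a copy of the law as it stood at the given version."""
        return dict(self.versions[version])

    def rollback(self, version: int) -> None:
        """Drop every version newer than the given one."""
        del self.versions[version + 1:]
```

Storing full snapshots keeps `checkout` trivial at the cost of memory. A production system would more likely store diffs.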

Finally, the laws are clustered into topics according to their content using an unsupervised machine learning model (Latent Dirichlet Allocation), to provide a more holistic representation of Greek legislation. PageRank is also used for easier indexing, so that the interconnections between laws are taken into account: the more often a legislative text is referenced by other texts, the more important it is considered.
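The ranking idea can be illustrated with a small, dependency-free power-iteration sketch. It computes roughly what `networkx.pagerank` computes with its default damping factor `alpha=0.85`; the project itself relies on networkx. The citation graph below (each law mapped to the laws it references) and the function name are assumptions made for the example.

```python
from typing import Dict, List


def pagerank(links: Dict[str, List[str]], damping: float = 0.85,
             tol: float = 1e-10, max_iter: int = 200) -> Dict[str, float]:
    """Power-iteration PageRank; links maps each node to the nodes it cites."""
    nodes = set(links)
    for targets in links.values():
        nodes.update(targets)
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(max_iter):
        # Rank held by nodes that cite nothing is spread evenly over all nodes.
        dangling = sum(rank[v] for v in nodes if not links.get(v))
        new_rank = {v: (1.0 - damping) / n + damping * dangling / n for v in nodes}
        for source, targets in links.items():
            if targets:
                share = damping * rank[source] / len(targets)
                for target in targets:
                    new_rank[target] += share  # being cited raises a law's rank
        converged = sum(abs(new_rank[v] - rank[v]) for v in nodes) < tol
        rank = new_rank
        if converged:
            break
    return rank


# Hypothetical citation graph: law -> laws it references.
citations = {
    "ν. 4474/2017": ["ν. 4170/2013"],
    "ν. 4600/2019": ["ν. 4170/2013", "ν. 4474/2017"],
    "ν. 4170/2013": [],
}
ranks = pagerank(citations)
print(max(ranks, key=ranks.get))  # ν. 4170/2013
```

The law cited by both others comes out on top. The law cited by nobody keeps only the baseline share `(1 - damping) / n` plus its portion of the dangling mass.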

Through the analysis, categorization and codification of the GG documents, this project supports key aspects of everyday life, such as reducing bureaucracy and managing public documents efficiently. These tangible solutions save lawyers and citizens considerable time and effort.

A presentation of the project, given at FOSSCOMM 2018 at the University of Crete, is available here.

Demo

The project is hosted at 3gm.ellak.gr or openlaws.ellak.gr. A video presentation of the project is available here.

Timeline

You can view the detailed timeline here. What has been done during the program can be found in the Final Progress Report.

Google Summer of Code 2019

This repository will host the changes and code developed for 3gm as part of the Google Summer of Code 2019. This year's effort mainly aims to enhance the NLP functionality of the project and is based on this project proposal. The timeline of the project is described here, and you can also find a worklog documenting the progress made during development.

The main goals for GSoC-2019 are populating the database with more types of amendments, widening the range of feature extraction and training a new Doc2Vec model and a new NER annotator specifically for our corpus.

Migrating Data

In the first week of GSoC-2019 we carried out a data migration project. Its scope was to mine the website of the Greek National Printing House and upload as many GGG issues as possible to the respective Internet Archive collection. So far, 87,874 issues have been uploaded, in addition to the ~45,000 files the collection originally contained, and this number will continue to grow. The main goal of this endeavour is to make the Greek legislation archive more accessible.

We have tried to document our insights from this process. We would like to turn them into an entry on the project wiki titled "A simple guide to mining the Greek Government Gazette".

NER model Training

After uploading a major part of the Greek Government Gazette issues, including all primary-type issues, it was time to start building a dataset to train a new NER tagger based on the Greek spaCy model. This requires an annotation tool. One tool that is fully compatible with spaCy is Prodigy; we contacted its developers and they provided a research licence for the duration of the project.

To mine, prepare and annotate our data we followed this workflow, applying the annotation guidelines described here.

All the above documents will be incorporated into the project wiki shortly.

As a result of this process we have created a dataset of around 3,000 sentences. A first version of this dataset can be found in the project's data folder. We have also deployed the Prodigy annotator to showcase our progress; you can find it here if you want to support this year's project. All annotations gathered will be used for model training after quality control.

After obtaining a large enough dataset, we trained the small and medium-sized Greek spaCy models using Prodigy's training recipes. The models showed significant improvement after training. A version of the small NER model we trained can be found in the data directory of this repo. Our goal now is to optimize the model and evaluate it properly. As a first step, we will use Prodigy's train-curve recipe to see how the model performs when trained on different portions of our data. Finally, we will develop a Python script to train the spaCy model, document all its metrics and tune its hyperparameters. This process is documented in this report.
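A core metric for such an evaluation script is exact-match, entity-level precision, recall and F1. These are the same kind of NER scores spaCy reports as `ents_p`, `ents_r` and `ents_f`. The sketch below does not use spaCy. It assumes gold and predicted entities are available as `(start_char, end_char, label)` spans per sentence, and the labels in the example are hypothetical.

```python
from typing import Iterable, List, Set, Tuple

Span = Tuple[int, int, str]  # (start_char, end_char, label)


def ner_scores(gold: Iterable[List[Span]],
               pred: Iterable[List[Span]]) -> Tuple[float, float, float]:
    """Exact-match entity-level precision, recall and F1 over a corpus.

    gold and pred must list the same sentences in the same order.
    """
    tp = fp = fn = 0
    for gold_spans, pred_spans in zip(gold, pred):
        g: Set[Span] = set(gold_spans)
        p: Set[Span] = set(pred_spans)
        tp += len(g & p)  # same boundaries and same label
        fp += len(p - g)  # predicted, but absent from the gold annotations
        fn += len(g - p)  # gold entities the model missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


gold = [[(0, 12, "LAW"), (20, 30, "ORG")], [(5, 9, "GPE")]]
pred = [[(0, 12, "LAW"), (20, 28, "ORG")], [(5, 9, "GPE"), (11, 15, "ORG")]]
print(ner_scores(gold, pred))  # precision 0.5, recall 2/3, F1 4/7
```

Note that a span with slightly wrong boundaries counts as both a false positive and a false negative, which is why partial matches hurt exact-match scores twice.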

The final version of the NER model is located in the models folder, alongside a word-embedding model containing around 20,000 word vectors.

The model with the best trade-off between performance and complexity will then be integrated into the 3gm app.

Broadening fact extraction

During this year's GSOC we focused a lot on enhancing the NLP capabilities of the project.

As part of this effort it is vital to broaden fact extraction in the project. Using regular expressions, we will work on the entities file so that the app can identify useful information such as metrics, codes, IDs, contact info etc.

We have created a script to test regular expressions for fact extraction. Unfortunately, there is very little consistency across issues in how such information is written, which makes entity extraction difficult.
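The kind of patterns involved can be sketched with Python's `re` module. The fact types and patterns below are illustrative assumptions, not the contents of the project's entities module:

- e-mail addresses;
- Greek landline and mobile numbers;
- 9-digit tax registration numbers introduced by "ΑΦΜ";
- euro amounts in Greek number notation.

Given how inconsistent real issues are, each pattern would need tuning against the corpus.

```python
import re
from typing import Dict, List

# Illustrative patterns only; the project's entities module may differ.
FACT_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # Greek landline (2xx...) or mobile (69x...) numbers, optional +30 prefix.
    "phone": re.compile(r"(?<!\d)(?:\+30\s?)?(?:2\d{2}|69\d)\s?\d{7}(?!\d)"),
    # 9-digit tax registration number introduced by "ΑΦΜ" or "Α.Φ.Μ.".
    "afm": re.compile(r"Α\.?Φ\.?Μ\.?\s*:?\s*(\d{9})(?!\d)"),
    # Euro amounts in Greek notation, e.g. "1.500,50 ευρώ" or "250 €".
    "amount_eur": re.compile(r"(?<![\d.,])(\d+(?:\.\d{3})*(?:,\d+)?)\s?(?:€|ευρώ|EUR)"),
}


def extract_facts(text: str) -> Dict[str, List[str]]:
    """Return all matches per fact type (capture group 1 when the pattern has one)."""
    facts: Dict[str, List[str]] = {}
    for name, pattern in FACT_PATTERNS.items():
        facts[name] = [m.group(1) if pattern.groups else m.group(0)
                       for m in pattern.finditer(text)]
    return facts


sample = ("Πληροφορίες: τηλ. 210 1234567, e-mail: info@example.gr. "
          "ΑΦΜ: 099999999. Ποσό 1.500,50 ευρώ.")
print(extract_facts(sample))
```

The lookbehind and lookahead guards stop a pattern from matching a fragment of a longer digit run, which is a common source of false positives in OCR'd text.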

After optimizing the extraction queries we integrated them into the entities module, which can be found in the 3gm directory. We now have to use these regular expressions to extract entities in the pparser module, the module responsible for extracting amendments, laws and ratifications.
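Legal-act references are among the entities that a module like pparser has to recognize. A regular-expression sketch for them could look like the one below. The abbreviations follow common Greek citation style:

- ν. for a law;
- ν.δ. for a legislative decree;
- π.δ. for a presidential decree;
- α.ν. for an emergency law.

The pattern, the labels and `find_legal_acts` are assumptions for illustration, not the project's actual entities.

```python
import re
from typing import List, NamedTuple

# Longer abbreviations come first so "ν.δ." is not read as "ν.".
LEGAL_ACT = re.compile(
    r"(?<!\w)(?P<kind>ν\.\s?δ\.|π\.\s?δ\.|α\.\s?ν\.|ν\.)"
    r"\s*(?P<number>\d+)\s*/\s*(?P<year>\d{4})"
)

# Hypothetical entity labels.
LABELS = {
    "ν.": "LAW",
    "ν.δ.": "LEGISLATIVE_DECREE",
    "π.δ.": "PRESIDENTIAL_DECREE",
    "α.ν.": "EMERGENCY_LAW",
}


class LegalAct(NamedTuple):
    label: str
    number: int
    year: int
    text: str


def find_legal_acts(text: str) -> List[LegalAct]:
    """Return every legal-act citation found in the text, in order."""
    acts = []
    for m in LEGAL_ACT.finditer(text):
        kind = re.sub(r"\s", "", m.group("kind"))  # "ν. δ." -> "ν.δ."
        acts.append(LegalAct(LABELS[kind], int(m.group("number")),
                             int(m.group("year")), m.group(0)))
    return acts


sentence = ("Μετά το άρθρο 9Α του ν. 4170/2013, που προστέθηκε με το άρθρο 3 "
            "του ν. 4474/2017, προστίθεται άρθρο 9ΑΑ, ως εξής:")
for act in find_legal_acts(sentence):
    print(act.label, act.number, act.year)
```

On the amendment sentence quoted later in this README, the sketch finds both cited laws. Deciding which one is being amended is left to the amendment-detection heuristics.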

Training a new Doc2vec model

We will train a new Doc2Vec model using the gensim library, following the workflow proposed in the project wiki. We will use the codifier to create a large corpus and then train the gensim model on it. To make sure the model is effective we will need a corpus of several thousand issues, after which we will fine-tune the model's hyperparameters.

For the time being we have created a corpus file containing 2,878 laws and presidential decisions, totalling around 223 MB. We have also trained a Doc2Vec model, which can be found in the models directory. Our goal is to make the corpus as large as possible, which is why we will keep expanding it.
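gensim can stream a corpus stored as one document per line, with tokens already separated by whitespace, through `gensim.models.doc2vec.TaggedLineDocument`, which tags each document with its line number. A minimal sketch of writing such a file from law texts is shown below. The tokenizer, the lower-casing and accent stripping, and the function names are assumptions, not the project's codifier pipeline.

```python
import re
import unicodedata
from pathlib import Path
from typing import Iterable, Iterator, List

TOKEN = re.compile(r"\w+")  # \w matches Greek letters in Python 3 str patterns


def normalize(text: str) -> str:
    """Lower-case and strip Greek accents (tonos, dialytika)."""
    decomposed = unicodedata.normalize("NFD", text.lower())
    return "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")


def tokenize(text: str) -> List[str]:
    return TOKEN.findall(normalize(text))


def corpus_lines(documents: Iterable[str]) -> Iterator[str]:
    """Yield one whitespace-joined token line per non-empty document."""
    for doc in documents:
        tokens = tokenize(doc)
        if tokens:  # empty documents are skipped, so tags follow output lines
            yield " ".join(tokens)


def write_corpus(documents: Iterable[str], path: Path) -> int:
    """Write the corpus file and return the number of documents written."""
    count = 0
    with path.open("w", encoding="utf-8") as out:
        for line in corpus_lines(documents):
            out.write(line + "\n")
            count += 1
    return count


# Training on the file (requires gensim 4.x; not run here):
# from gensim.models.doc2vec import Doc2Vec, TaggedLineDocument
# model = Doc2Vec(TaggedLineDocument("corpus.txt"),
#                 vector_size=100, min_count=2, epochs=20)
```

Stripping accents merges spelling variants such as "εφαρμογής" and "εφαρμογης". This is a deliberate trade-off for a small corpus, but it discards information that a larger model might use.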

Creating a natural language model

Even though it was not included in the initial project proposal, we also decided to create a natural language model that generates text, aiming to make use of the word vectors we had produced earlier with Prodigy. To achieve this we will apply transfer learning techniques.

Our approach trains a variation of a character-level LSTM model on a corpus of GGG texts. The idea is to load the previously produced embeddings into an embedding layer and then stack this model on top of it. To train the model we are using Google Colab with TPU acceleration, on a variation of this notebook provided by the TensorFlow Hub Authors.
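Training a character-level language model starts by turning text into integer ids. Each input window is then paired with the same window shifted by one character, so the model learns to predict the next character. The sketch below shows that step in plain Python. The window length, the helper names and the sample text are assumptions, and the project's notebook may prepare its data differently.

```python
from typing import Dict, List, Tuple


def build_vocab(text: str) -> Tuple[Dict[str, int], List[str]]:
    """Map every distinct character to an id (sorted, so ids are reproducible)."""
    chars = sorted(set(text))
    return {ch: i for i, ch in enumerate(chars)}, chars


def make_examples(text: str, char_to_id: Dict[str, int],
                  seq_len: int) -> List[Tuple[List[int], List[int]]]:
    """Split text into (input, target) pairs; target is input shifted by one."""
    ids = [char_to_id[ch] for ch in text]
    examples = []
    # Each chunk holds seq_len + 1 ids: seq_len inputs plus the next character.
    for start in range(0, len(ids) - seq_len, seq_len):
        chunk = ids[start:start + seq_len + 1]
        examples.append((chunk[:-1], chunk[1:]))
    return examples


text = "abcabcabca"
char_to_id, id_to_char = build_vocab(text)
examples = make_examples(text, char_to_id, seq_len=3)
print(examples[0])  # ([0, 1, 2], [1, 2, 0])
```

These id sequences are what an embedding layer followed by an LSTM would consume. With TensorFlow they would typically be batched through a `tf.data.Dataset`.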

Documentation

As part of our effort to document the changes made during GSoC-2019, we considered it vital to update the project's wiki and integrate these changes into it. You can follow the process in this repo.

Deliverables

The deliverables for the GSOC-2019 include:

- An expanded version of the Internet Archive collection containing a total of 134,113 issues from several issue types.

- A new Named Entity Recognition model trained exclusively on Greek Government Gazette texts.

- An expanded entities.py module with broadened fact extraction functionality

- A new Doc2vec model containing around 3000 vectors

Final Progress Report

You can find the final progress report as a GitHub gist at the following link.

Google Summer of Code 2018

The project met and exceeded its goals for Google Summer of Code 2018. Link

Google Summer of Code participant: Marios Papachristou (papachristoumarios)

Organization: GFOSS - Open Technologies Alliance

Contributors

Mentors for GSOC 2019

- Mentor: Marios Papachristou (papachristoumarios)

- Mentor: Diomidis Spinellis (dspinellis)

- Mentor: Ioannis Anagnostopoulos

- Mentor: Panos Louridas (louridas)

Mentors for GSOC 2018

- Mentor: Diomidis Spinellis (dspinellis)

- Mentor: Sarantos Kapidakis

- Mentor: Marios Papachristou (papachristoumarios)

Development

- Marios Papachristou (Original Developer - Google Summer of Code 2018)

- Theodore Papadopoulos (AngularJS UI)

- Sotirios Papadiamantis (Google Summer of Code 2019)

Overview

- Getting started

- Algorithms

- Datasets and Continuous Integration


Documentation

- API Documentation

- RESTful API

- Help (for web application)

- Development

Technologies used

- The project is written in Python 3.x using the following libraries: spaCy, gensim, selenium, pdfminer.six, networkx, Flask_RESTful, Flask, pytest, numpy, pymongo, sklearn, pyocr, bs4, pillow and wand.

- The information is stored in MongoDB (document-oriented database schema) and is accessible through a RESTful API.

- The UI is based on Angular 7.

Project Features & Production Ready Tools

- Document parser can parse PDFs from Government Gazette Issues (see the data directory for examples). The documents are split into articles in order to detect amendments.

- Parser for existing laws.

- Named Entities for Legal Acts (e.g. Laws, Legislative Decrees etc.) encoded in regular expressions.

- Similarity analyzer using topic models for finding Government Gazette Issues that have the same topics.

- We use an unsupervised model to extract the topics and then group Issues by topics for cross-linking between Government Gazette Documents. Topic modelling is done with the LDA algorithm as illustrated in the Wiki Page. The source code is located at 3gm/topic_models.py.

- There is also a Doc2Vec approach.


- Documented end-to-end procedure in the Project Wiki

- MongoDB Integration

- Fetching Tool for automated fetching of documents from ET

- Parallelized tool for batch conversion of documents with pdf2txt (for newer documents) or Google Tesseract 4.0 (for performing OCR on older documents), using pdfminer.six, tesseract and pyocr.

- Digitized archive of Government Gazette Issues from 1976 to today in PDF and plaintext format. Conversion of documents is done either via pdfminer.six or tesseract (for OCR on older documents).

- Web application written in Flask, located at 3gm/app.py and hosted at 3gm.ellak.gr.

- RESTful API written in flask-restful for providing versions of the laws.

- Unit tests integrated into Travis CI.

- Versioning system for laws with support for checkouts, rollbacks etc.

- Ranking of laws using PageRank provided by the networkx package.

- Summarization module using TextRank for providing summaries in the search results.

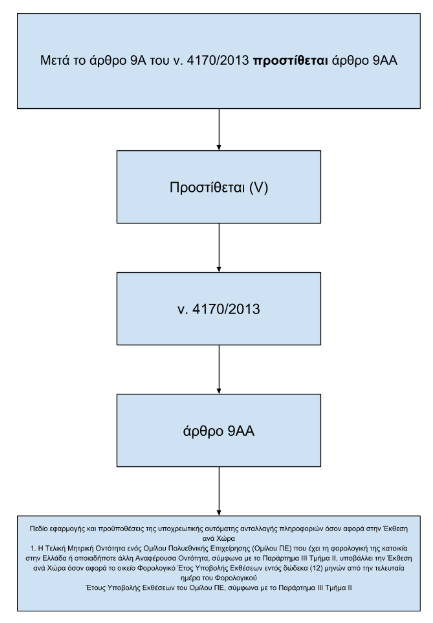

- Amendment Detection Algorithm. For example (taken from Greek Government Gazette):

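Μετά το άρθρο 9Α του ν. 4170/2013, που προστέθηκε με το άρθρο 3 του ν. 4474/2017, προστίθεται άρθρο 9ΑΑ, ως εξής:

(English: After Article 9A of Law 4170/2013, which was added by Article 3 of Law 4474/2017, Article 9AA is added as follows:)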

Main Body / Extract

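Άρθρο 9ΑΑ (Article 9AA)

Πεδίο εφαρμογής και προϋποθέσεις της υποχρεωτικής αυτόματης ανταλλαγής πληροφοριών όσον αφορά στην Έκθεση ανά Χώρα

(English: Scope and conditions of the mandatory automatic exchange of information regarding the Country-by-Country Report)

1. Η Τελική Μητρική Οντότητα ενός Ομίλου Πολυεθνικής Επιχείρησης (Ομίλου ΠΕ) που έχει τη φορολογική της κατοικία στην Ελλάδα ή οποιαδήποτε άλλη Αναφέρουσα Οντότητα, σύμφωνα με το Παράρτημα ΙΙΙ Τμήμα ΙΙ, υποβάλλει την Έκθεση ανά Χώρα όσον αφορά το οικείο Φορολογικό Έτος Υποβολής Εκθέσεων εντός δώδεκα (12) μηνών από την τελευταία ημέρα του Φορολογικού Έτους Υποβολής Εκθέσεων του Ομίλου ΠΕ, σύμφωνα με το Παράρτημα ΙΙΙ Τμήμα ΙΙ.

(English: 1. The Ultimate Parent Entity of a Multinational Enterprise Group (MNE Group) that is resident for tax purposes in Greece, or any other Reporting Entity in accordance with Annex III, Section II, shall file the Country-by-Country Report for its Reporting Fiscal Year within twelve (12) months of the last day of the Reporting Fiscal Year of the MNE Group, in accordance with Annex III, Section II.)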

The above text signifies the addition of an article to an existing law. We use a combination of heuristics and NLP from the spaCy package to detect the keywords (e.g. verbs, subjects etc.):

- Detect keywords for additions, removals, replacements etc.

- Detect the subject, which is in the nominative case in Greek. The subject is also part of some keywords such as article (άρθρο), paragraph (παράγραφος), period (εδάφιο), phrase (φράση) etc. These words form a hierarchy (an article contains paragraphs, a paragraph contains periods, and so on), which means that once the algorithm finds the subject it should look up its predecessors. This results in a nested structure.

- A Python dictionary is generated:

```python
{
    'action': 'αντικαθίσταται',
    'law': {
        'article': {
            '_id': '9AA',
            'content': 'Πεδίο εφαρμογής και προϋποθέσεις της υποχρεωτικής αυτόματης ανταλλαγής πληροφοριών όσον αφορά στην Έκθεση ανά Χώρα 1. Η Τελική Μητρική Οντότητα ενός Ομίλου Πολυεθνικής Επιχείρησης (Ομίλου ΠΕ) που έχει τη φορολογική της κατοικία στην Ελλάδα ή οποιαδήποτε άλλη Αναφέρουσα Οντότητα, σύμφωνα με το Παράρτημα ΙΙΙ Τμήμα ΙΙ, υποβάλλει την Έκθεση ανά Χώρα όσον αφορά το οικείο Φορολογικό Έτος Υποβολής Εκθέσεων εντός δώδεκα (12) μηνών από την τελευταία ημέρα του Φορολογικού Έτους Υποβολής Εκθέσεων του Ομίλου ΠΕ, σύμφωνα με το Παράρτημα ΙΙΙ Τμήμα ΙΙ.'
        },
        '_id': 'ν. 4170/2013'
    },
    '_id': 14
}
```

- The dictionary is then translated into a MongoDB operation (in this case an insertion), and the information is stored in the database.
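The keyword-and-subject heuristic described above can be approximated with regular expressions alone. The sketch below does not use spaCy and has several deliberate limits:

- It recognizes only a few action verbs.
- It handles only the "action, subject, id" word order of the example sentence.
- It assumes the first law cited in the sentence is the one being amended.

It then turns the result into a pymongo-style `(filter, update)` pair that could be passed to `Collection.update_one`. The keyword tables, the field naming (`articles.<id>`) and the document schema are assumptions, not the project's actual MongoDB layout.

```python
import re
from typing import Any, Dict, Optional, Tuple

# Hypothetical keyword tables (Greek verb -> operation, Greek noun -> field).
ACTIONS = {
    "προστίθεται": "add",
    "προστίθενται": "add",
    "αντικαθίσταται": "replace",
    "καταργείται": "delete",
}
SUBJECTS = {"άρθρο": "article", "παράγραφος": "paragraph",
            "εδάφιο": "period", "φράση": "phrase"}

AMENDMENT = re.compile(
    r"(?P<action>" + "|".join(map(re.escape, ACTIONS)) + r")\s+"
    r"(?P<subject>" + "|".join(map(re.escape, SUBJECTS)) + r")\s+(?P<id>\w+)"
)
LAW = re.compile(r"(?<!\w)ν\.\s*(\d+/\d{4})")


def detect_amendment(sentence: str, content: str = "") -> Optional[Dict[str, Any]]:
    """Build a nested dict like the one above, or None if nothing is found."""
    match = AMENDMENT.search(sentence)
    law = LAW.search(sentence)  # heuristic: first cited law is the amended one
    if not match or not law:
        return None
    subject = SUBJECTS[match.group("subject")]
    return {
        "action": match.group("action"),
        "law": {"_id": "ν. " + law.group(1),
                subject: {"_id": match.group("id"), "content": content}},
    }


def to_mongo_update(amendment: Dict[str, Any]) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Return (filter, update) for e.g. collection.update_one(filter, update)."""
    law = amendment["law"]
    subject = next(key for key in law if key != "_id")
    target = law[subject]
    field = f"{subject}s.{target['_id']}"  # hypothetical schema: articles.<id>
    if ACTIONS[amendment["action"]] == "delete":
        update = {"$unset": {field: ""}}
    else:
        update = {"$set": {field: target["content"]}}
    return {"_id": law["_id"]}, update


sentence = ("Μετά το άρθρο 9Α του ν. 4170/2013, που προστέθηκε με το άρθρο 3 "
            "του ν. 4474/2017, προστίθεται άρθρο 9ΑΑ, ως εξής:")
amendment = detect_amendment(sentence, "Πεδίο εφαρμογής ...")
print(to_mongo_update(amendment))
```

Past-tense verbs such as "προστέθηκε" are not in the action table. The sketch therefore skips the historical reference to Law 4474/2017 and picks up only the new addition.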

For more information visit the corresponding Wiki Page
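The Summarization Module listed among the features uses TextRank, which runs PageRank over a graph of sentences instead of laws. A dependency-free sketch follows. Three parts are assumptions, not the module's code:

- a naive sentence splitter;
- a variant of the TextRank word-overlap similarity, with +1 inside the logarithms so that one-word sentences do not cause a division by zero;
- the function names.

```python
import math
import re
from typing import List


def split_sentences(text: str) -> List[str]:
    # Naive splitter: break after ".", ";" (the Greek question mark) or "!".
    return [s.strip() for s in re.split(r"(?<=[.;!])\s+", text) if s.strip()]


def similarity(a: List[str], b: List[str]) -> float:
    """Shared words, normalized by (log) sentence lengths."""
    common = len(set(a) & set(b))
    denom = math.log(len(a) + 1) + math.log(len(b) + 1)
    return common / denom if denom else 0.0


def summarize(text: str, n: int = 2, damping: float = 0.85,
              iterations: int = 50) -> List[str]:
    """Return the n highest-ranked sentences in their original order."""
    sentences = split_sentences(text)
    size = len(sentences)
    if size <= n:
        return sentences
    words = [re.findall(r"\w+", s.lower()) for s in sentences]
    weights = [[similarity(words[i], words[j]) if i != j else 0.0
                for j in range(size)] for i in range(size)]
    out_sum = [sum(row) for row in weights]
    scores = [1.0 / size] * size
    for _ in range(iterations):
        # Weighted PageRank: each sentence passes its score along its edges.
        scores = [
            (1 - damping) / size + damping * sum(
                weights[j][i] / out_sum[j] * scores[j]
                for j in range(size) if out_sum[j] > 0)
            for i in range(size)
        ]
    top = sorted(range(size), key=lambda i: scores[i], reverse=True)[:n]
    return [sentences[i] for i in sorted(top)]


text = "Tax law reform. Tax law changes. Law reform today. Sunny weather."
print(summarize(text, n=1))  # ['Tax law reform.']
```

The sentence sharing the most words with the others wins. The unrelated sentence keeps only the baseline score `(1 - damping) / size`.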

Challenges

- Government Gazette Issues may not always follow guidelines.

- Improving heuristics.

- Gathering Information.

- Digitizing very old articles.

Mailing List

Development Mailing List: [email protected]

License

The project is open-sourced as part of the Google Summer of Code program and vision, under the GNU GPLv3 license. For more information see LICENSE.