AminHP / Gym Anytrading

gym-anytrading

AnyTrading is a collection of OpenAI Gym environments for reinforcement learning-based trading algorithms.

Trading algorithms are mostly implemented in two markets: FOREX and Stock. AnyTrading aims to provide some Gym environments to improve and facilitate the procedure of developing and testing RL-based algorithms in this area. It does so by implementing three Gym environments: TradingEnv, ForexEnv, and StocksEnv.

TradingEnv is an abstract environment which is defined to support all kinds of trading environments. ForexEnv and StocksEnv are simply two environments that inherit and extend TradingEnv. Later sections explain them in more detail, but before that, some environment properties should be discussed.

Installation

Via PIP

pip install gym-anytrading

From Repository

git clone https://github.com/AminHP/gym-anytrading

cd gym-anytrading

pip install -e .

## or

pip install --upgrade --no-deps --force-reinstall https://github.com/AminHP/gym-anytrading/archive/master.zip

Environment Properties

First of all, you can't simply expect an RL agent to do everything for you while you sit back in your chair in such complex trading markets! Things need to be simplified as much as possible so that the agent can learn faster and more efficiently. In all trading algorithms, the first thing that should be done is to define actions and positions. In the two following subsections, I will explain these actions and positions and how to simplify them.

Trading Actions

If you search the Internet for trading algorithms, you will find them using numerous actions such as Buy, Sell, Hold, Enter, Exit, etc. As noted at the start of this section, a typical RL agent can only solve a part of the main problem in this area. If you work in trading markets you will learn that deciding whether to hold, enter, or exit a pair (in FOREX) or stock (in Stocks) is a statistical decision depending on many parameters such as your budget, the pairs or stocks you trade, your money distribution policy across multiple markets, etc. Considering all these parameters is a massive burden for an RL agent, and developing such an agent may take years! In that case, you will certainly not use this environment as it is but will extend it into your own.

So after months of work, I finally found out that these actions just make things complicated with no real positive impact. In fact, they just increase the learning time and an action like Hold will be barely used by a well-trained agent because it doesn't want to miss a single penny. Therefore there is no need to have such numerous actions and only Sell=0 and Buy=1 actions are adequate to train an agent just as well.

Trading Positions

If you're not familiar with trading positions, refer here. It's a very important concept and you should learn it as soon as possible.

Put simply: a Long position buys shares when prices are low and profits by holding them while their value goes up, whereas a Short position sells shares at a high value and uses the proceeds to buy them back at a lower value, keeping the difference as profit.

Again, in some trading algorithms, you may find numerous positions such as Short, Long, Flat, etc. As discussed earlier, I use only Short=0 and Long=1 positions.
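The two-action, two-position scheme described above can be sketched as a pair of enums. The numeric values come from the text; the opposite helper and the next_position rule (a Buy while Short or a Sell while Long flips the position, anything else keeps it) are illustrative assumptions, not a copy of the library's code.

```python
from enum import Enum


class Actions(Enum):
    Sell = 0
    Buy = 1


class Positions(Enum):
    Short = 0
    Long = 1

    def opposite(self):
        # Illustrative helper: return the other position.
        return Positions.Short if self is Positions.Long else Positions.Long


def next_position(position, action):
    """Assumed rule: the position flips only when the action disagrees with it."""
    wants_long = action is Actions.Buy
    is_long = position is Positions.Long
    return position.opposite() if wants_long != is_long else position


# Starting Short, a Buy opens a Long position; a second Buy changes nothing.
position = Positions.Short
position = next_position(position, Actions.Buy)
position = next_position(position, Actions.Buy)
```

With only two actions, "Hold" is expressed implicitly: repeating the action that matches the current position leaves it unchanged.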

Trading Environments

As I mentioned earlier, it's now time to introduce the three environments. Before creating this project, I spent a lot of time searching for a simple and flexible Gym environment for any trading market but didn't find one. Most of what I found was complex code with many unclear parameters that you couldn't simply look at and comprehend. So I decided to implement this project with a strong focus on simplicity, flexibility, and comprehensiveness.

In the three following subsections, I will introduce our trading environments and in the next section, some IPython examples will be mentioned and briefly explained.

TradingEnv

TradingEnv is an abstract class which inherits from gym.Env. This class aims to provide a general-purpose environment for all kinds of trading markets. Here I explain its public properties and methods, but feel free to take a look at the complete source code.

- Properties:

df: An abbreviation for DataFrame. It's a pandas' DataFrame which contains your dataset and is passed in the class' constructor.

prices: Real prices over time. Used to calculate profit and render the environment.

signal_features: Extracted features over time. Used to create Gym observations.

window_size: Number of ticks (current and previous ticks) returned as a Gym observation. It is passed in the class' constructor.

action_space: The Gym action_space property. Containing discrete values of 0=Sell and 1=Buy.

observation_space: The Gym observation_space property. Each observation is a window on signal_features from index current_tick - window_size + 1 to current_tick. So _start_tick of the environment would be equal to window_size. In addition, the initial value for _last_trade_tick is window_size - 1.

shape: Shape of a single observation.

history: Stores the information of all steps.
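The windowing rule given for observation_space can be sketched with plain lists. The function name observation_at and the toy feature rows are hypothetical and exist only for illustration; the index arithmetic follows the description above.

```python
def observation_at(signal_features, current_tick, window_size):
    """Return the rows from current_tick - window_size + 1 to current_tick (inclusive)."""
    start = current_tick - window_size + 1
    if start < 0:
        raise ValueError("current_tick must be at least window_size - 1")
    return signal_features[start:current_tick + 1]


window_size = 3
# Toy feature rows; in a real environment they come from _process_data.
signal_features = [[i, i * 10] for i in range(8)]

start_tick = window_size           # first tick of an episode, per the text
last_trade_tick = window_size - 1  # initial value, per the text

first_observation = observation_at(signal_features, start_tick, window_size)
```

Each observation therefore always has exactly window_size rows, which is why the first window_size ticks of the data serve only as history.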

- Methods:

seed: Typical Gym seed method.

reset: Typical Gym reset method.

step: Typical Gym step method.

render: Typical Gym render method. Renders the information of the environment's current tick.

render_all: Renders the whole environment.

close: Typical Gym close method.

- Abstract Methods:

_process_data: It is called in the constructor and returns prices and signal_features as a tuple. In different trading markets, different features need to be obtained. So this method enables our TradingEnv to be a general-purpose environment and specific features can be returned for specific environments such as FOREX, Stocks, etc.

_calculate_reward: The reward function for the RL agent.

_update_profit: Calculates and updates the total profit which the RL agent has achieved so far. Profit indicates the number of units of currency you end up with when starting with 1.0 unit (Profit = FinalMoney / StartingMoney).

max_possible_profit: The maximum possible profit that an RL agent can obtain regardless of trade fees.
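To make the profit ratio concrete, here is a deliberately simplified sketch of a fee-free upper bound. It assumes a toy market in which money only grows while holding a Long position, so the best possible strategy is to be Long across every rising tick and out of the market otherwise. The function name is hypothetical and this is not the library's max_possible_profit implementation, which differs per environment.

```python
def fee_free_long_only_upper_bound(prices):
    """Profit ratio (FinalMoney / StartingMoney) of a perfect long-only trader.

    Assumption: no trade fees, and gains are made only on rising ticks.
    """
    profit = 1.0  # start with 1.0 unit of currency
    for previous, current in zip(prices, prices[1:]):
        if current > previous:
            # Holding through a rise multiplies the money by the price ratio.
            profit *= current / previous
    return profit


# Rises 10 -> 12 and 11 -> 22 are captured, the drop 12 -> 11 is skipped.
toy_profit = fee_free_long_only_upper_bound([10.0, 12.0, 11.0, 22.0])
```

A value of 1.0 means the money is unchanged; anything an actual agent achieves once fees are included will be lower than such a bound.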

ForexEnv

This is a concrete class which inherits TradingEnv and implements its abstract methods. Also, it has some specific properties for the FOREX market. For more information refer to the source code.

- Properties:

frame_bound: A tuple which specifies the start and end of df. It is passed in the class' constructor.

unit_side: Specifies the side on which you start your trading. It takes the string values left (default value) and right. As you know, there are two sides in a currency pair in FOREX. For example in the EUR/USD pair, when you choose the left side, your currency unit is EUR and you start your trading with 1 EUR. It is passed in the class' constructor.

trade_fee: A default constant fee which is subtracted from the real prices on every trade.

StocksEnv

Same as ForexEnv but for the Stock market. For more information refer to the source code.

- Properties:

frame_bound: A tuple which specifies the start and end of df. It is passed in the class' constructor.

trade_fee_bid_percent: A default constant fee percentage for bids. For example with trade_fee_bid_percent=0.01, you will lose 1% of your money every time you sell your shares.

trade_fee_ask_percent: A default constant fee percentage for asks. For example with trade_fee_ask_percent=0.005, you will lose 0.5% of your money every time you buy some shares.

Besides, you can create your own customized environment by extending TradingEnv or even ForexEnv or StocksEnv with your desired policies for calculating reward, profit, fee, etc.
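As a rough illustration of how the two StocksEnv fee percentages affect profit, the sketch below computes one buy-then-sell round trip. The function name round_trip_profit is hypothetical and the default fee values are simply the example values quoted above; the exact update used by the library should be checked in its source code.

```python
def round_trip_profit(start_money, buy_price, sell_price,
                      ask_fee_percent=0.005, bid_fee_percent=0.01):
    """Profit ratio after buying shares and later selling all of them."""
    # Buying: the ask fee is taken from the money before it becomes shares.
    shares = start_money * (1 - ask_fee_percent) / buy_price
    # Selling: the bid fee is taken from the proceeds of the sale.
    final_money = shares * (1 - bid_fee_percent) * sell_price
    return final_money / start_money


# Even with an unchanged price, one round trip costs about 1.5% in fees.
flat_market = round_trip_profit(1.0, buy_price=100.0, sell_price=100.0)
# A 10% price rise leaves roughly an 8.4% gain after fees.
rising_market = round_trip_profit(1.0, buy_price=100.0, sell_price=110.0)
```

This is why frequent trading is penalized: every round trip multiplies the profit by (1 - ask fee) * (1 - bid fee) before any price movement is counted.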

Examples

Create an environment

import gym

import gym_anytrading

env = gym.make('forex-v0')

# env = gym.make('stocks-v0')

- This will create the default environment. You can change any parameters such as dataset, frame_bound, etc.

Create an environment with custom parameters

I put two default datasets for FOREX and Stocks but you can use your own.

from gym_anytrading.datasets import FOREX_EURUSD_1H_ASK, STOCKS_GOOGL

custom_env = gym.make('forex-v0',

df = FOREX_EURUSD_1H_ASK,

window_size = 10,

frame_bound = (10, 300),

unit_side = 'right')

# custom_env = gym.make('stocks-v0',

# df = STOCKS_GOOGL,

# window_size = 10,

# frame_bound = (10, 300))

- Note that the first element of frame_bound should be greater than or equal to window_size.
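The reason for this constraint shows up in the slicing used by the custom _process_data examples later in this README, where the data starts at frame_bound[0] - window_size. A minimal sketch of that arithmetic, with a hypothetical helper name and an explicit check added for illustration:

```python
def data_slice(window_size, frame_bound):
    """Return the (start, end) indices of the rows an environment would use."""
    first, last = frame_bound
    if first < window_size:
        # Otherwise the start index below would be negative.
        raise ValueError("frame_bound[0] must be >= window_size")
    return first - window_size, last


start, end = data_slice(window_size=10, frame_bound=(10, 300))
used_rows = end - start
```

With window_size=10 and frame_bound=(10, 300) the slice covers rows 0 to 299, that is 300 rows, which agrees with the prices.shape of (300,) reported for custom_env below.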

Print some information

print("env information:")

print("> shape:", env.shape)

print("> df.shape:", env.df.shape)

print("> prices.shape:", env.prices.shape)

print("> signal_features.shape:", env.signal_features.shape)

print("> max_possible_profit:", env.max_possible_profit())

print()

print("custom_env information:")

print("> shape:", custom_env.shape)

print("> df.shape:", custom_env.df.shape)

print("> prices.shape:", custom_env.prices.shape)

print("> signal_features.shape:", custom_env.signal_features.shape)

print("> max_possible_profit:", custom_env.max_possible_profit())

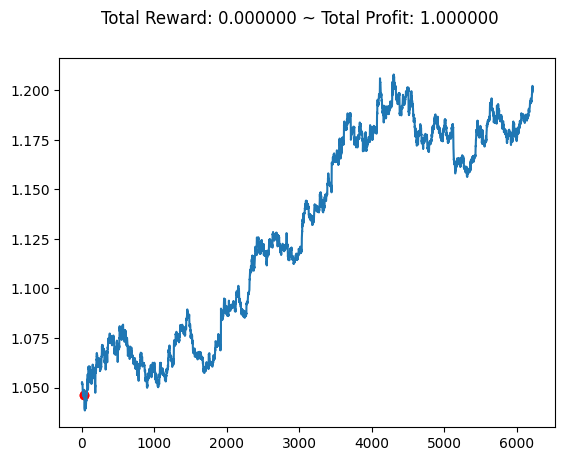

env information:

> shape: (24, 2)

> df.shape: (6225, 5)

> prices.shape: (6225,)

> signal_features.shape: (6225, 2)

> max_possible_profit: 4.054414887146586

custom_env information:

> shape: (10, 2)

> df.shape: (6225, 5)

> prices.shape: (300,)

> signal_features.shape: (300, 2)

> max_possible_profit: 1.122900180008982

- Here max_possible_profit signifies that if the market didn't have trade fees, you could have earned 4.054414887146586 (or 1.122900180008982) units of currency by starting with 1.0. In other words, in the first case your money is roughly quadrupled.

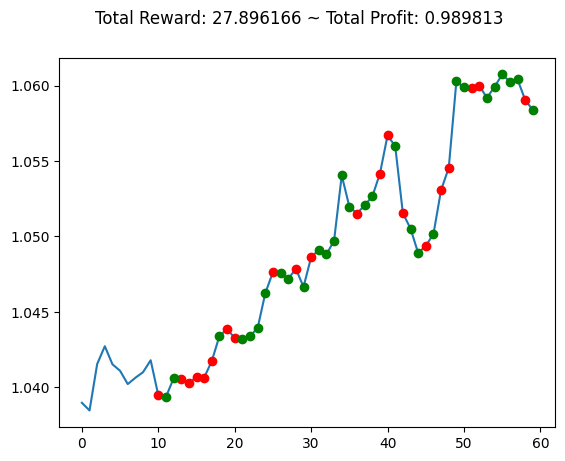

Plot the environment

env.reset()

env.render()

- Short and Long positions are shown in red and green colors.

- As you see, the starting position of the environment is always Short.

A complete example

import gym

import gym_anytrading

from gym_anytrading.envs import TradingEnv, ForexEnv, StocksEnv, Actions, Positions

from gym_anytrading.datasets import FOREX_EURUSD_1H_ASK, STOCKS_GOOGL

import matplotlib.pyplot as plt

env = gym.make('forex-v0', frame_bound=(50, 100), window_size=10)

# env = gym.make('stocks-v0', frame_bound=(50, 100), window_size=10)

observation = env.reset()

while True:

    action = env.action_space.sample()

    observation, reward, done, info = env.step(action)

    # env.render()

    if done:

        print("info:", info)

        break

plt.cla()

env.render_all()

plt.show()

info: {'total_reward': -173.10000000000602, 'total_profit': 0.980652456904312, 'position': 0}

- You can use the render_all method to avoid rendering on each step and save time.

- As you see, the first 10 points (window_size=10) on the plot don't have a position because they aren't involved in calculating reward, profit, etc. They just display the first observations. So the environment's _start_tick and initial _last_trade_tick are 10 and 9.

Mix with stable-baselines and quantstats

Here is an example that mixes gym-anytrading with the mentioned famous libraries and shows how to utilize our trading environments in other RL or trading libraries.

Extend and manipulate TradingEnv

In case you want to process data and extract features outside the environment, it can be simply done by two methods:

Method 1 (Recommended):

def my_process_data(env):

    start = env.frame_bound[0] - env.window_size

    end = env.frame_bound[1]

    prices = env.df.loc[:, 'Low'].to_numpy()[start:end]

    signal_features = env.df.loc[:, ['Close', 'Open', 'High', 'Low']].to_numpy()[start:end]

    return prices, signal_features

class MyForexEnv(ForexEnv):

    _process_data = my_process_data

env = MyForexEnv(df=FOREX_EURUSD_1H_ASK, window_size=12, frame_bound=(12, len(FOREX_EURUSD_1H_ASK)))

Method 2:

def my_process_data(df, window_size, frame_bound):

    start = frame_bound[0] - window_size

    end = frame_bound[1]

    prices = df.loc[:, 'Low'].to_numpy()[start:end]

    signal_features = df.loc[:, ['Close', 'Open', 'High', 'Low']].to_numpy()[start:end]

    return prices, signal_features

class MyStocksEnv(StocksEnv):

    def __init__(self, prices, signal_features, **kwargs):

        self._prices = prices

        self._signal_features = signal_features

        super().__init__(**kwargs)

    def _process_data(self):

        return self._prices, self._signal_features

prices, signal_features = my_process_data(df=STOCKS_GOOGL, window_size=30, frame_bound=(30, len(STOCKS_GOOGL)))

env = MyStocksEnv(prices, signal_features, df=STOCKS_GOOGL, window_size=30, frame_bound=(30, len(STOCKS_GOOGL)))
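Both methods rely on the fact that _process_data is called inside the constructor. The sketch below shows the mechanics without the library: MiniTradingEnv is a hypothetical stand-in that models only that constructor hook, and the toy df is a list of dicts rather than a pandas DataFrame. Method 1 works because a plain function stored on a class becomes a bound method; Method 2 works only if the precomputed arrays are stored before super().__init__ runs.

```python
class MiniTradingEnv:
    """Hypothetical stand-in for TradingEnv; only the constructor hook is modeled."""

    def __init__(self, df, window_size, frame_bound):
        self.df = df
        self.window_size = window_size
        self.frame_bound = frame_bound
        # The hook runs here, inside the constructor.
        self.prices, self.signal_features = self._process_data()

    def _process_data(self):
        raise NotImplementedError


def my_process_data(env):
    start = env.frame_bound[0] - env.window_size
    end = env.frame_bound[1]
    rows = env.df[start:end]
    prices = [row["Low"] for row in rows]
    signal_features = [[row["Close"], row["Low"]] for row in rows]
    return prices, signal_features


class Method1Env(MiniTradingEnv):
    # Stored on the class, the function is bound to the instance on access,
    # so its `env` parameter receives the environment itself.
    _process_data = my_process_data


class Method2Env(MiniTradingEnv):
    def __init__(self, prices, signal_features, **kwargs):
        # These must exist before super().__init__ calls _process_data.
        self._prices = prices
        self._signal_features = signal_features
        super().__init__(**kwargs)

    def _process_data(self):
        return self._prices, self._signal_features


toy_df = [{"Low": 1.0 + i, "Close": 1.5 + i} for i in range(6)]
env1 = Method1Env(df=toy_df, window_size=2, frame_bound=(2, 5))
env2 = Method2Env(env1.prices, env1.signal_features,
                  df=toy_df, window_size=2, frame_bound=(2, 5))
```

Method 1 keeps feature extraction tied to the environment's own df, window_size, and frame_bound, which avoids passing the same bounds twice as Method 2 requires.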