carpedm20 / Lstm Char Cnn Tensorflow

Licence: mit

in progress

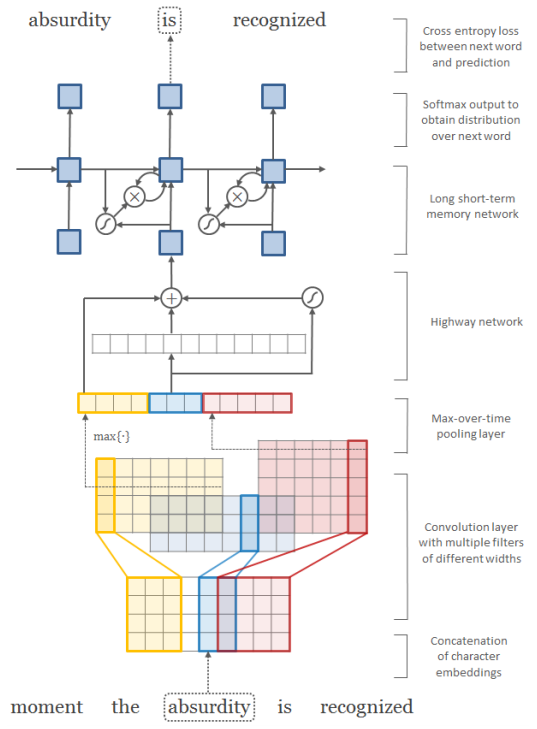

Character-Aware Neural Language Models

A TensorFlow implementation of Character-Aware Neural Language Models. The original code by the paper's author can be found here.

This implementation contains:

- Word-level and Character-level Convolutional Neural Network

- Highway Network

- Recurrent Neural Network Language Model
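As a rough illustration of the highway component listed above, here is a minimal NumPy sketch of one highway layer, `y = t * g(x W_H + b_H) + (1 - t) * x` with transform gate `t = sigmoid(x W_T + b_T)`. It is not taken from this repository: the function name `highway_layer`, the ReLU nonlinearity, and the toy dimensions are assumptions chosen for the example.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def highway_layer(x, w_h, b_h, w_t, b_t):
    """One highway layer: mix a transformed input with the raw input.

    x: (batch, dim); w_h, w_t: (dim, dim); b_h, b_t: (dim,).
    """
    h = np.maximum(0.0, x @ w_h + b_h)   # candidate transform (ReLU, assumed)
    t = sigmoid(x @ w_t + b_t)           # transform gate, values in (0, 1)
    return t * h + (1.0 - t) * x         # (1 - t) acts as the carry gate


# Toy sizes, hypothetical and unrelated to the repository defaults.
rng = np.random.default_rng(0)
dim = 8
x = rng.standard_normal((4, dim))
w_h = rng.standard_normal((dim, dim)) * 0.1
w_t = rng.standard_normal((dim, dim)) * 0.1
b_h = np.zeros(dim)
b_t = np.full(dim, -2.0)  # negative gate bias: start close to the identity

y = highway_layer(x, w_h, b_h, w_t, b_t)
print(y.shape)
```

Because the output is a gated mix of `h` and `x`, the layer needs matching input and output dimensions. A strongly negative gate bias makes the layer behave almost like the identity.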

The current implementation has a performance issue. See #3.

Prerequisites

- Python 2.7 or Python 3.3+

- TensorFlow

Usage

To train a model on the PTB dataset:

$ python main.py --dataset ptb

To test an existing model:

$ python main.py --dataset ptb --forward_only True

To see all training options, run:

$ python main.py --help

which will print:

    usage: main.py [-h] [--epoch EPOCH] [--word_embed_dim WORD_EMBED_DIM]
                   [--char_embed_dim CHAR_EMBED_DIM]
                   [--max_word_length MAX_WORD_LENGTH] [--batch_size BATCH_SIZE]
                   [--seq_length SEQ_LENGTH] [--learning_rate LEARNING_RATE]
                   [--decay DECAY] [--dropout_prob DROPOUT_PROB]
                   [--feature_maps FEATURE_MAPS] [--kernels KERNELS]
                   [--model MODEL] [--data_dir DATA_DIR] [--dataset DATASET]
                   [--checkpoint_dir CHECKPOINT_DIR]
                   [--forward_only [FORWARD_ONLY]] [--noforward_only]
                   [--use_char [USE_CHAR]] [--nouse_char] [--use_word [USE_WORD]]
                   [--nouse_word]

    optional arguments:
      -h, --help            show this help message and exit
      --epoch EPOCH         Epoch to train [25]
      --word_embed_dim WORD_EMBED_DIM
                            The dimension of word embedding matrix [650]
      --char_embed_dim CHAR_EMBED_DIM
                            The dimension of char embedding matrix [15]
      --max_word_length MAX_WORD_LENGTH
                            The maximum length of word [65]
      --batch_size BATCH_SIZE
                            The size of batch images [100]
      --seq_length SEQ_LENGTH
                            The # of timesteps to unroll for [35]
      --learning_rate LEARNING_RATE
                            Learning rate [1.0]
      --decay DECAY         Decay of SGD [0.5]
      --dropout_prob DROPOUT_PROB
                            Probability of dropout layer [0.5]
      --feature_maps FEATURE_MAPS
                            The # of feature maps in CNN
                            [50,100,150,200,200,200,200]
      --kernels KERNELS     The width of CNN kernels [1,2,3,4,5,6,7]
      --model MODEL         The type of model to train and test [LSTM, LSTMTDNN]
      --data_dir DATA_DIR   The name of data directory [data]
      --dataset DATASET     The name of dataset [ptb]
      --checkpoint_dir CHECKPOINT_DIR
                            Directory name to save the checkpoints [checkpoint]
      --forward_only [FORWARD_ONLY]
                            True for forward only, False for training [False]
      --noforward_only
      --use_char [USE_CHAR]
                            Use character-level language model [True]
      --nouse_char
      --use_word [USE_WORD]
                            Use word-level language [False]
      --nouse_word

More options can be found in models/LSTMTDNN and models/TDNN.
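To make the `--kernels` and `--feature_maps` defaults more concrete, the sketch below shows one way a character-level CNN with max-over-time pooling could turn a single word into a fixed-size vector. It is a NumPy illustration under stated assumptions, not the code in `models/`: the function `char_cnn_features`, the "valid" convolution, the `tanh` nonlinearity, and the omitted biases are all choices made for the example.

```python
import numpy as np

# Defaults copied from the --help output above.
KERNELS = [1, 2, 3, 4, 5, 6, 7]
FEATURE_MAPS = [50, 100, 150, 200, 200, 200, 200]
MAX_WORD_LENGTH = 65
CHAR_EMBED_DIM = 15


def char_cnn_features(char_embeds, filters):
    """Convolve each filter bank over the characters, then max-pool over time.

    char_embeds: (word_len, embed_dim)
    filters: list of arrays shaped (width, embed_dim, n_maps)
    Returns a vector of length sum(n_maps).
    """
    pooled = []
    for bank in filters:
        width = bank.shape[0]
        n_positions = char_embeds.shape[0] - width + 1  # "valid" convolution
        conv = np.stack([
            # Contract a (width, embed_dim) window with the filter bank.
            np.tensordot(char_embeds[p:p + width], bank, axes=([0, 1], [0, 1]))
            for p in range(n_positions)
        ])                                              # (n_positions, n_maps)
        pooled.append(np.tanh(conv).max(axis=0))        # max over time
    return np.concatenate(pooled)


rng = np.random.default_rng(0)
word = rng.standard_normal((MAX_WORD_LENGTH, CHAR_EMBED_DIM))
filters = [
    rng.standard_normal((width, CHAR_EMBED_DIM, n_maps)) * 0.1
    for width, n_maps in zip(KERNELS, FEATURE_MAPS)
]
features = char_cnn_features(word, filters)
print(features.shape)
```

Under these assumptions each word vector has `sum(FEATURE_MAPS)` = 1100 entries, regardless of the kernel widths, because pooling keeps one value per feature map.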

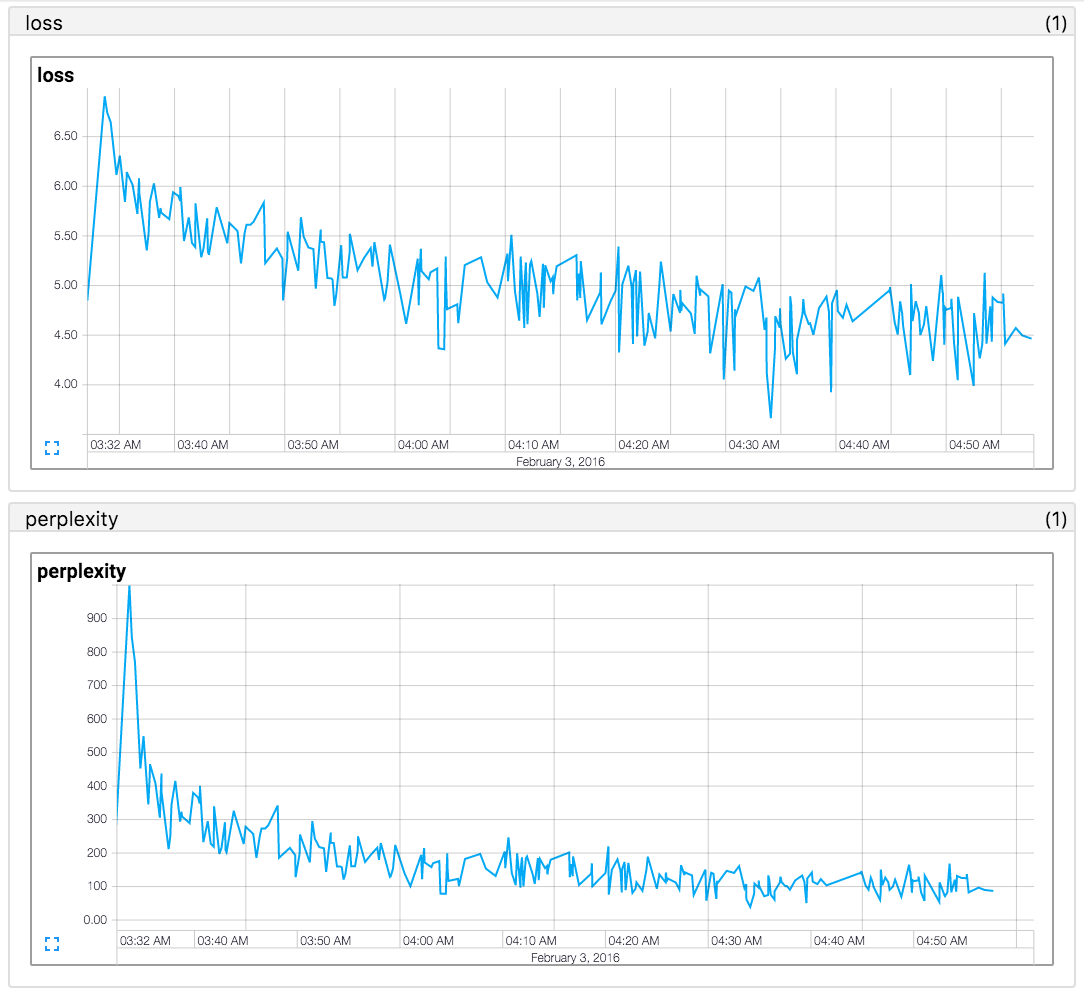

Performance

This implementation has not reproduced the results of the paper (as of 2016.02.12). If you are looking for code that reproduces the paper's results, see https://github.com/mkroutikov/tf-lstm-char-cnn.

Perplexity on the test set of the Penn Treebank (PTB) corpus:

| Name | Character embed | LSTM hidden units | Paper (Y Kim 2016) | This repo |
|---|---|---|---|---|
| LSTM-Char-Small | 15 | 100 | 92.3 | in progress |
| LSTM-Char-Large | 15 | 150 | 78.9 | in progress |
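For readers unfamiliar with the metric in the table, perplexity is the exponential of the average negative log-likelihood per token. The helper below is a minimal standard-library sketch: the function name `perplexity` and its input format (one natural-log probability per test token) are assumptions for illustration, not part of this repository.

```python
import math


def perplexity(token_log_probs):
    """Return exp(mean negative log-probability) over a sequence of tokens.

    token_log_probs: natural-log probabilities the model assigned to each
    observed token in the test set.
    """
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# A model that spreads probability uniformly over 4 choices at every step
# has a perplexity of about 4 (up to floating-point error).
uniform = [math.log(0.25)] * 10
print(perplexity(uniform))
```

Lower is better: a perplexity of 78.9 means the model is, on average, about as uncertain as a uniform choice among roughly 79 words.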

Author

Taehoon Kim / @carpedm20
