sisl / Ngsim_env

Licence: MIT

Learning human driver models from NGSIM data with imitation learning.

Stars: ✭ 96

Projects that are alternatives to or similar to Ngsim env

Awesome Carla

👉 CARLA resources such as tutorials, blogs, code, etc. https://github.com/carla-simulator/carla

Stars: ✭ 246 (+156.25%)

Mutual labels: autonomous-vehicles, reinforcement-learning, deeplearning, imitation-learning

Reinforcement learning tutorial with demo

Reinforcement Learning Tutorial with Demo: DP (Policy and Value Iteration), Monte Carlo, TD Learning (SARSA, QLearning), Function Approximation, Policy Gradient, DQN, Imitation, Meta Learning, Papers, Courses, etc.

Stars: ✭ 442 (+360.42%)

Mutual labels: jupyter-notebook, reinforcement-learning, imitation-learning

Novel Deep Learning Model For Traffic Sign Detection Using Capsule Networks

Capsule networks that achieve outstanding performance on the German traffic sign dataset

Stars: ✭ 88 (-8.33%)

Mutual labels: autonomous-vehicles, jupyter-notebook, deeplearning

Release

Deep Reinforcement Learning for de-novo Drug Design

Stars: ✭ 201 (+109.38%)

Mutual labels: jupyter-notebook, reinforcement-learning, deeplearning

Tensorwatch

Debugging, monitoring and visualization for Python Machine Learning and Data Science

Stars: ✭ 3,191 (+3223.96%)

Mutual labels: jupyter-notebook, reinforcement-learning, deeplearning

Text summurization abstractive methods

Multiple implementations of abstractive text summarization, using Google Colab

Stars: ✭ 359 (+273.96%)

Mutual labels: jupyter-notebook, reinforcement-learning, deeplearning

Basic reinforcement learning

An introductory series to Reinforcement Learning (RL) with comprehensive step-by-step tutorials.

Stars: ✭ 826 (+760.42%)

Mutual labels: jupyter-notebook, reinforcement-learning, deeplearning

Awesome Decision Making Reinforcement Learning

A selection of state-of-the-art research materials on decision making and motion planning.

Stars: ✭ 68 (-29.17%)

Mutual labels: autonomous-vehicles, reinforcement-learning

Rl Workshop

Reinforcement Learning Workshop for Data Science BKK

Stars: ✭ 73 (-23.96%)

Mutual labels: jupyter-notebook, reinforcement-learning

Deep learning for biologists with keras

tutorials made for biologists to learn deep learning

Stars: ✭ 74 (-22.92%)

Mutual labels: jupyter-notebook, deeplearning

Mathy

Tools for using computer algebra systems to solve math problems step-by-step with reinforcement learning

Stars: ✭ 79 (-17.71%)

Mutual labels: jupyter-notebook, reinforcement-learning

Sru Deeplearning Workshop

A 12-hour deep learning course using the Keras framework

Stars: ✭ 66 (-31.25%)

Mutual labels: jupyter-notebook, deeplearning

Pgdrive

PGDrive: an open-ended driving simulator with infinite scenes from procedural generation

Stars: ✭ 60 (-37.5%)

Mutual labels: reinforcement-learning, imitation-learning

Polyaxon Examples

Code for polyaxon tutorials and examples

Stars: ✭ 57 (-40.62%)

Mutual labels: jupyter-notebook, deeplearning

Reinforcement Learning

Reinforcement learning material, code and exercises for Udacity Nanodegree programs.

Stars: ✭ 77 (-19.79%)

Mutual labels: jupyter-notebook, reinforcement-learning

Reinforcement Learning

Implementation of Reinforcement Learning algorithms in Python, based on Sutton and Barto's book (2nd ed.)

Stars: ✭ 55 (-42.71%)

Mutual labels: jupyter-notebook, reinforcement-learning

Mit Deep Learning

Tutorials, assignments, and competitions for MIT Deep Learning related courses.

Stars: ✭ 8,912 (+9183.33%)

Mutual labels: jupyter-notebook, deeplearning

Tensorflow Tutorials

TensorFlow Tutorials with YouTube Videos

Stars: ✭ 8,919 (+9190.63%)

Mutual labels: jupyter-notebook, reinforcement-learning

Hand dapg

Repository to accompany RSS 2018 paper on dexterous hand manipulation

Stars: ✭ 88 (-8.33%)

Mutual labels: reinforcement-learning, imitation-learning

NGSIM Env

- This is an rllab environment for learning human driver models with imitation learning.

Description

- This repository does not contain a GAIL, InfoGAIL, or hGAIL implementation.

- ngsim_env leaves out the GAIL implementation to keep the codebase modular. Because of this design decision, ngsim_env can serve as an environment in which any imitation learning algorithm can be tested. Likewise, GAIL can live in a separate module that can be tested in any environment, be it ngsim_env or otherwise. The installation process below gets the GAIL implementation from sisl/hgail.

- It also does not contain the human driver data you need for the environment to work. The installation process below gets the data from sisl/NGSIM.jl.
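The following is a minimal Python sketch of the modularity argument above. It assumes a simplified reset/step interface, not the actual rllab or ngsim_env signatures. The algorithm-side function touches only `reset` and `step`, so any environment that exposes them can be swapped in. `Env`, `ToyLaneEnv`, and `collect_rollout` are hypothetical names used for illustration only.

```python
from typing import Callable, List, Protocol, Tuple


class Env(Protocol):
    """Assumed minimal interface; NOT the real rllab/ngsim_env API."""

    def reset(self) -> List[float]: ...

    def step(self, action: float) -> Tuple[List[float], float, bool]: ...


class ToyLaneEnv:
    """Hypothetical stand-in environment: a 1-D car steering back to lane center 0.0."""

    def __init__(self, horizon: int = 10) -> None:
        self.horizon = horizon
        self.t = 0
        self.offset = 0.0

    def reset(self) -> List[float]:
        self.t = 0
        self.offset = 1.0  # start one unit away from the lane center
        return [self.offset]

    def step(self, action: float) -> Tuple[List[float], float, bool]:
        self.offset += action
        self.t += 1
        done = self.t >= self.horizon
        # Reward is returned only to mirror a common RL interface; imitation learning may ignore it.
        return [self.offset], -abs(self.offset), done


def collect_rollout(
    env: Env, policy: Callable[[List[float]], float]
) -> List[Tuple[List[float], float]]:
    """Algorithm-side code: relies only on reset/step, so any Env can be plugged in."""
    obs = env.reset()
    trajectory = []
    done = False
    while not done:
        action = policy(obs)
        trajectory.append((obs, action))
        obs, _, done = env.step(action)
    return trajectory
```

For example, `collect_rollout(ToyLaneEnv(horizon=5), lambda obs: -0.5 * obs[0])` returns five (observation, action) pairs. Replacing `ToyLaneEnv` with any other object that has the same two methods requires no change to `collect_rollout`.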

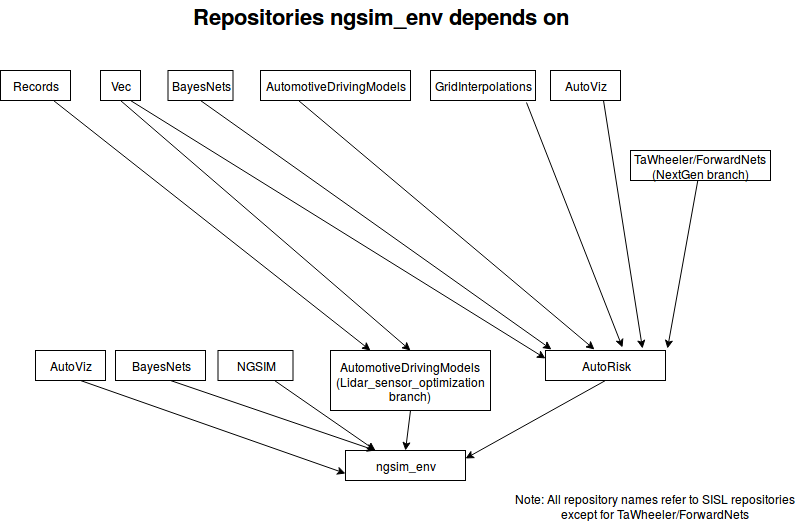

- Figure below shows a diagram of the repositories ngsim_env depends on:

Installation Process

Step-by-step install instructions are at docs/install_env_gail_full.md

Train and run a single-agent GAIL policy:

- Navigate to ngsim_env/scripts/imitation

- Train a policy by running imitate.py:

python imitate.py --exp_name NGSIM-gail --n_itr 1000 --policy_recurrent True

- Run the trained policy by using it to drive a car. This creates trajectories on all NGSIM sections using the trained policy. The training step is called imitate, and this step is called validate.

python validate.py --n_proc 5 --exp_dir ../../data/experiments/NGSIM-gail/ --params_filename itr_1000.npz --random_seed 42

- Visualize the results: open a Jupyter notebook and use the visualize*.ipynb files.

- Each notebook in the visualize family has a header at the top describing what it does.

- visualize.ipynb is for extracting the Root Mean Square Error

- visualize_trajectories.ipynb creates videos such as the one shown below in the demo section

- visualize_emergent.ipynb calculates the emergent metrics such as offroad duration and collision rate
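The notebooks' exact computations are not reproduced here. The sketch below only illustrates the kinds of metrics they report, and it assumes rollouts are stored as per-timestep lists. It computes three things:

- RMSE per timestep across rollouts.
- A collision rate, defined as the fraction of rollouts with any collision.
- Mean offroad duration in seconds, given an assumed step length `dt`.

The function names and these metric definitions are assumptions. They are not necessarily what visualize.ipynb or visualize_emergent.ipynb use.

```python
import math
from typing import List, Sequence


def rmse_per_timestep(
    pred: Sequence[Sequence[float]], true: Sequence[Sequence[float]]
) -> List[float]:
    """RMSE at each timestep, pooled over rollouts (assumed definition).

    pred[i][t] and true[i][t] hold a scalar quantity (e.g. lane offset)
    for rollout i at timestep t.
    """
    n_rollouts = len(pred)
    horizon = len(pred[0])
    out = []
    for t in range(horizon):
        sq_err = sum((pred[i][t] - true[i][t]) ** 2 for i in range(n_rollouts))
        out.append(math.sqrt(sq_err / n_rollouts))
    return out


def collision_rate(collided: Sequence[Sequence[bool]]) -> float:
    """Fraction of rollouts in which a collision occurs at any timestep."""
    return sum(any(r) for r in collided) / len(collided)


def mean_offroad_duration(offroad: Sequence[Sequence[bool]], dt: float) -> float:
    """Average time (seconds) a rollout spends off the road; dt is seconds per step."""
    return sum(sum(r) * dt for r in offroad) / len(offroad)
```

RMSE is kept per timestep rather than collapsed to one number. This shows how error grows with the prediction horizon, which is the usual way such curves are plotted.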

Training process: details

- See docs/training.md

How does this work?

- See the README files in the individual directories for details. A high-level description:

- The Python code uses pyjulia to instantiate a Julia interpreter; see the python directory for details.

- The driving environment is then built in Julia; see the julia directory for details.

- Each time the environment is stepped forward, execution passes from Python to Julia, updating the environment.
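The Python/Julia split described above can be pictured as a thin Python wrapper that holds no simulation state and forwards every call across a bridge. In ngsim_env that bridge is pyjulia. Here a pure-Python stub stands in for the Julia side so the sketch runs without Julia installed. `SimBackend`, `StubBackend`, and `BridgedEnv` are hypothetical names, and the speed-only "scene" is purely illustrative.

```python
from typing import List, Protocol, Tuple


class SimBackend(Protocol):
    """Assumed shape of the simulation side (Julia in ngsim_env)."""

    def reset(self) -> List[float]: ...

    def step(self, action: float) -> Tuple[List[float], bool]: ...


class StubBackend:
    """Pure-Python stand-in for the Julia simulator.

    All simulation state (here, a single speed value) lives in the backend,
    mirroring how the driving scene lives on the Julia side.
    """

    def __init__(self, horizon: int) -> None:
        self.horizon = horizon
        self.t = 0
        self.speed = 0.0

    def reset(self) -> List[float]:
        self.t, self.speed = 0, 10.0
        return [self.speed]

    def step(self, action: float) -> Tuple[List[float], bool]:
        self.t += 1
        self.speed += action  # action treated as a speed change (assumption)
        return [self.speed], self.t >= self.horizon


class BridgedEnv:
    """Thin Python wrapper: every reset/step is forwarded to the backend."""

    def __init__(self, backend: SimBackend) -> None:
        self.backend = backend
        self.crossings = 0  # number of times control passed to the backend

    def reset(self) -> List[float]:
        self.crossings += 1
        return self.backend.reset()

    def step(self, action: float) -> Tuple[List[float], bool]:
        self.crossings += 1
        return self.backend.step(action)
```

With `env = BridgedEnv(StubBackend(horizon=3))`, one `reset` and three `step` calls give `env.crossings == 4`. Each step crosses the language boundary once, which is why per-step overhead on that boundary matters for throughput.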

Demo

To reproduce our experiments for the multi-agent GAIL paper submitted to IROS, see

GAIL in a single-agent environment

Single agent GAIL (top) and PS-GAIL (bottom) in a multi-agent environment

References

If you found this library useful in your research, please consider citing our papers:

@inproceedings{bhattacharyya2018multi,
  title={Multi-agent imitation learning for driving simulation},
  author={Bhattacharyya, Raunak P and Phillips, Derek J and Wulfe, Blake and Morton, Jeremy and Kuefler, Alex and Kochenderfer, Mykel J},
  booktitle={2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  pages={1534--1539},
  year={2018},
  organization={IEEE}
}

@article{bhattacharyya2019simulating,
  title={Simulating Emergent Properties of Human Driving Behavior Using Multi-Agent Reward Augmented Imitation Learning},
  author={Bhattacharyya, Raunak P and Phillips, Derek J and Liu, Changliu and Gupta, Jayesh K and Driggs-Campbell, Katherine and Kochenderfer, Mykel J},
  journal={arXiv preprint arXiv:1903.05766},
  year={2019}
}
