dcmartin / Motion Ai


Motion Ã👁

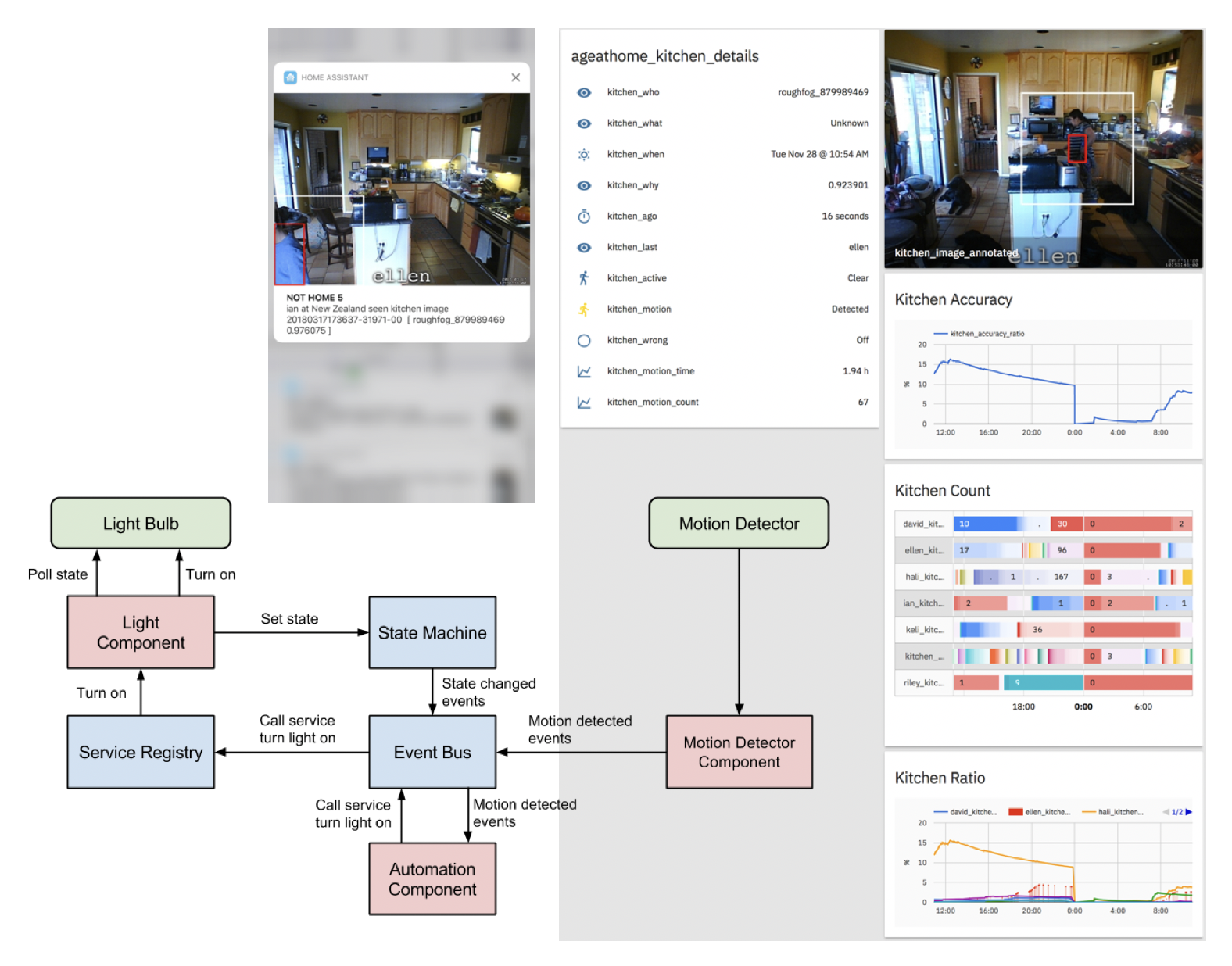

An open-source software solution for situational awareness from a network of video and audio sources. Using Home Assistant, add-ons, the Linux Foundation Open Horizon edge fabric, and edge AI services, the system enables personal AI on low-cost devices, integrating object detection and classification into a dashboard of daily activity.

- Watch the overview video, introduction and installation

- Use the Quick Start (see below) on a RaspberryPi 4, Jetson Nano, or Intel/AMD host; see the guide.

- Visit us on the Web

- Find us on Facebook

- Connect with us on LinkedIn

- Message us on Slack


Quick Start

Start-to-finish takes about thirty (30) minutes with a broadband connection. There are options to consider; a non-executable example script may be used to specify commonly used options. Please edit the example script for your environment.

The following two (2) sets of commands will install motion-ai on the following types of hardware:

- RaspberryPi Model 3B+ or 4 (arm); 2GB recommended

- Ubuntu 18.04 or Debian 10 VM (amd64); 2GB, 2 vCPU recommended

- nVidia Jetson Nano (arm64); 4GB required

The initial configuration presumes a locally attached camera on /dev/video0. Reboot the system after completion; for example:

sudo apt update -qq -y

sudo apt install -qq -y make git curl jq apt-utils ssh network-manager apparmor

git clone http://github.com/motion-ai/motion-ai ~/motion-ai

cd ~/motion-ai

sudo ./sh/get.motion-ai.sh

sudo reboot
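Before running the installer on a new device, a quick pre-flight check can catch missing tools, a missing camera, or too little memory. The POSIX sh sketch below is hypothetical and not part of motion-ai; the function names require_cmds, have_camera, and mem_total_mib are illustrative, and the default device path simply follows the /dev/video0 presumption above.

```shell
#!/bin/sh
# Hypothetical pre-flight helpers; not part of the motion-ai repository.

# Succeed if every named command is on PATH; report each missing one on stderr.
require_cmds() {
  missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Succeed if the camera exists as a character device (default: /dev/video0).
have_camera() {
  [ -c "${1:-/dev/video0}" ]
}

# Print MemTotal in MiB, reading /proc/meminfo-formatted text on stdin.
mem_total_mib() {
  awk '/^MemTotal:/ { print int($2 / 1024) }'
}
```

For example: require_cmds make git curl jq && have_camera && mem_total_mib < /proc/meminfo. Note that MemTotal is somewhat below the nominal RAM size because the kernel reserves memory at boot, so a 2GB board reports less than 2048 MiB.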

When the system reboots, install the official MQTT broker (aka core-mosquitto) and Motion Classic (aka motion-video0) add-ons using the Home Assistant Add-on Store (n.b. the Motion Classic add-on becomes available after adding the repository http://github.com/dcmartin/hassio-addons to the Add-On Store).

Select, install, configure, and start each add-on (see below). When both the MQTT and Motion Classic add-ons are running, return to the command line, start the AIs, and run the make restart command to synchronize the Home Assistant configuration with the Motion Classic add-on; for example:

cd ~/motion-ai

make restart

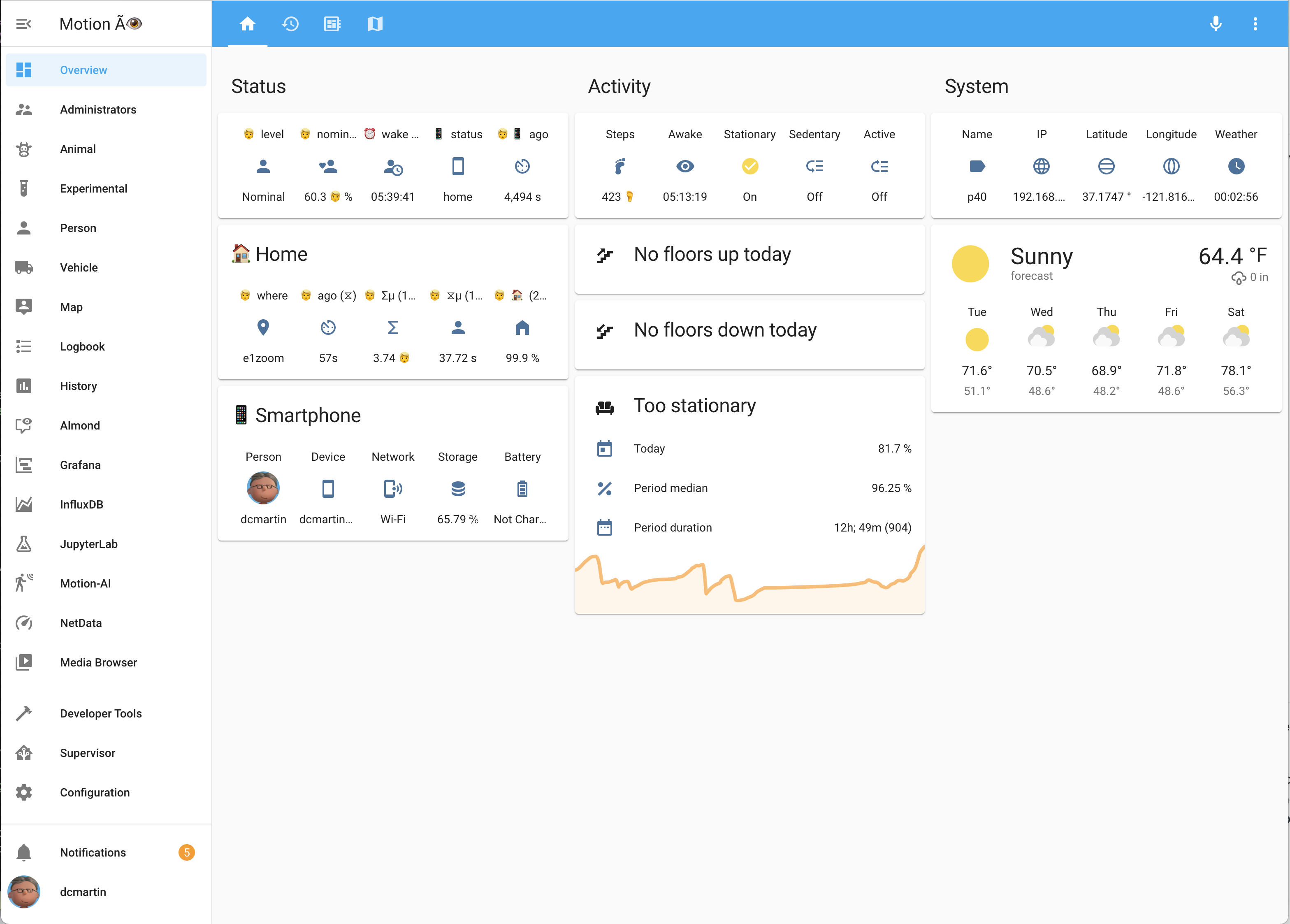

Dashboard

Once the system has rebooted, it displays a default view; note that the image below shows a configured system:

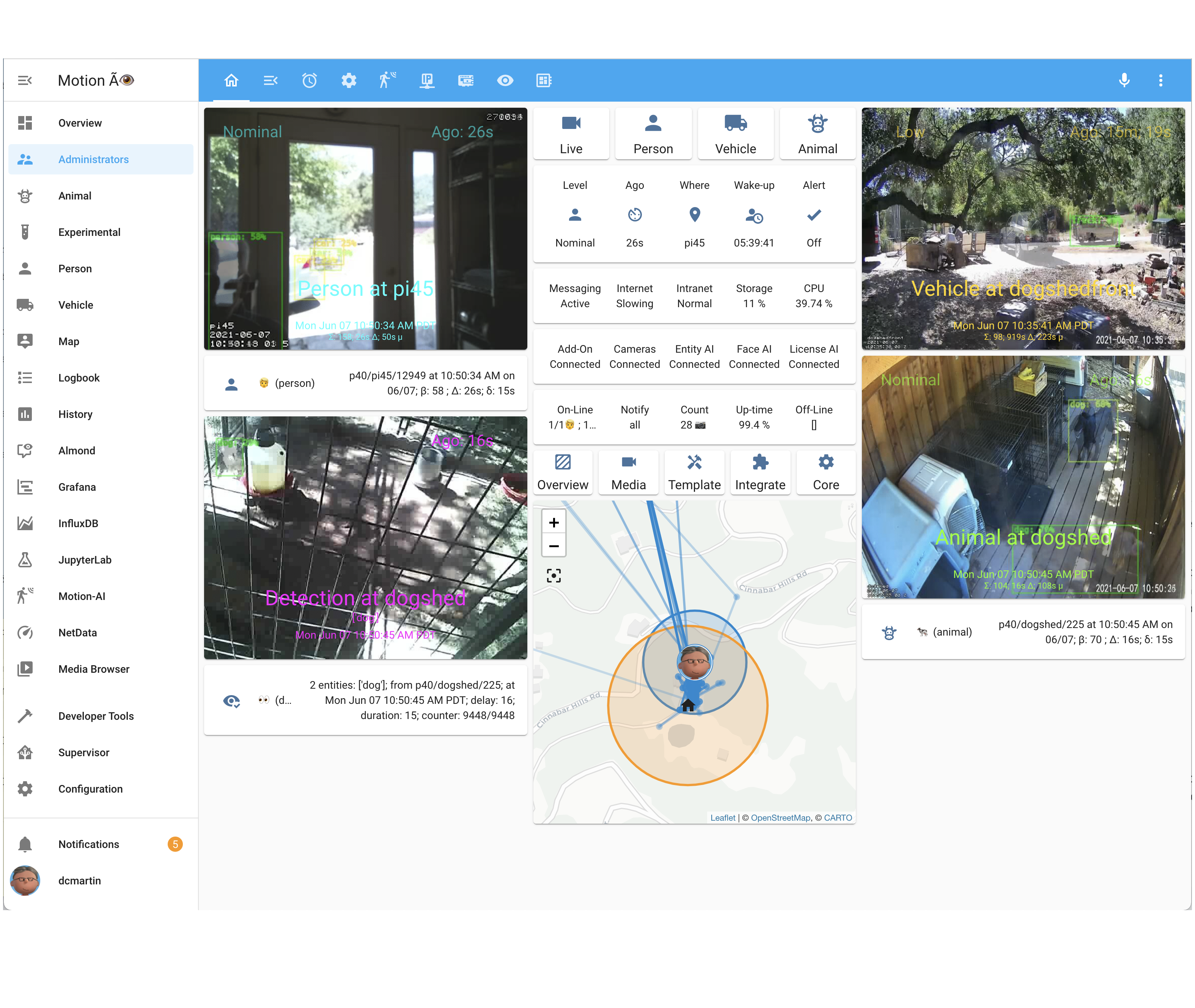

Administrators

A more detailed interface is available to administrators only; it provides both summary and detailed views of the system, as well as access to NetData and the motion add-on Web interface.

Add-ons

Install the MQTT and Motion Classic add-ons from the Add-On Store and configure and start; add the repository https://github.com/dcmartin/hassio-addons to the Add-On Store to install Motion Classic.

The Motion Classic configuration includes many options, most of which typically do not need to be changed. The group segments a network of devices (e.g. indoor vs. outdoor); the device determines the MQTT identifier for publishing; the client determines the MQTT identifier for subscribing; and the timezone should be local to the installation.
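To watch what a device publishes, an MQTT client can subscribe with a wildcard filter. The sketch below assumes, without confirmation from this README, that topics begin with group/device/camera; the helper mqtt_filter is hypothetical. In MQTT topic filters, + matches exactly one level and # matches all remaining levels.

```shell
#!/bin/sh
# Hypothetical helper: build an MQTT topic filter for a group, device, camera.
# ASSUMPTION: topics are laid out as <group>/<device>/<camera>/...;
# compare with the topics your broker actually receives.
# An omitted device or camera becomes '+' (exactly one level);
# the trailing '#' matches every remaining level.
mqtt_filter() {
  if [ -z "$1" ]; then
    echo "usage: mqtt_filter group [device] [camera]" >&2
    return 1
  fi
  printf '%s/%s/%s/#\n' "$1" "${2:-+}" "${3:-+}"
}
```

For example, mosquitto_sub -h localhost -t "$(mqtt_filter motion raspberrypi)" -v prints each message with its topic; add -u and -P if the broker requires a login.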

Note: No capital letters [A-Z], spaces, hyphens (-), or other special characters may be utilized for any of the following identifiers:

- group - the collection of devices

- device - the identifier for the hardware device

- name - the name of the camera
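A small check can catch an invalid identifier before it reaches the add-on configuration. This hypothetical sketch takes a conservative reading of the rule above and accepts only lowercase ASCII letters and digits; whether underscores are permitted is not stated here, so they are rejected as well.

```shell
#!/bin/sh
# Hypothetical check for group, device and camera name identifiers.
# Conservative reading: only lowercase ASCII letters and digits are accepted;
# capitals, spaces, hyphens, underscores and other punctuation are rejected.
# The characters are spelled out (rather than a-z) so the match does not
# depend on the locale's collation order.
valid_identifier() {
  case "$1" in
    '' | *[!abcdefghijklmnopqrstuvwxyz0123456789]*) return 1 ;;
    *) return 0 ;;
  esac
}
```

For example: for id in motion raspberrypi local network; do valid_identifier "$id" || echo "invalid: $id"; done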

The cameras section is a list (n.b. hence the -) and provides information for both motion detection and the front-end Web interface. The name, type, and w3w attributes are required. The top, left, and icon attributes are optional and are used to locate the camera on the overview image. The width, height, and other attributes are optional and are used for motion detection.

Example configuration (subset)

...
group: motion
device: raspberrypi
client: raspberrypi
timezone: America/Los_Angeles
cameras:
  - name: local
    type: local
    w3w: []
    top: 50
    left: 50
    icon: webcam
    width: 640
    height: 480
    framerate: 10
    minimum_motion_frames: 30
    event_gap: 60
    threshold: 1000
  - name: network
    type: netcam
    w3w:
      - what
      - three
      - words
    icon: door
    netcam_url: 'rtsp://192.168.1.224/live'
    netcam_userpass: 'username:password'
    width: 640
    height: 360
    framerate: 5
    event_gap: 30
    threshold_percent: 2
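The top and left attributes place the camera icon on the overview image as percentages (see Overview image), so a position picked in pixels, for example in an image editor, has to be converted. The hypothetical helper below assumes top is a percentage of the image height and left a percentage of the image width.

```shell
#!/bin/sh
# Hypothetical helper: convert a pixel position on the overview image into
# the top/left percentages used by a camera entry.
# ASSUMPTION: top is a percentage of the image height, left of the width.
# Integer arithmetic truncates toward zero.
# Usage: percent_position <x> <y> <image-width> <image-height>
percent_position() {
  x=$1 y=$2 width=$3 height=$4
  printf 'top: %s\nleft: %s\n' "$(( y * 100 / height ))" "$(( x * 100 / width ))"
}
```

For example, an icon at pixel (480, 120) on a 640x480 image yields top: 25 and left: 75, which can be pasted into the camera entry.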

AIs

Return to the command line, change to the installation directory, and run the following commands to start the AIs; for example:

cd ~/motion-ai

./sh/yolo4motion.sh

./sh/face4motion.sh

./sh/alpr4motion.sh

These commands only need to be run once; the AIs restart automatically whenever the system is rebooted.
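To confirm that the AIs came back after a reboot, one option is to compare the names of running containers with the expected services. This sketch assumes the services run as Docker containers whose names contain yolo4motion, face4motion, or alpr4motion; that naming is an assumption to verify with docker ps, and missing_services is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: print each expected service that is not running.
# Reads running container names (one per line) on stdin; a service counts
# as running if any name contains it as a fixed substring.
# ASSUMPTION: container names include yolo4motion, face4motion, alpr4motion.
# Returns 0 only when every expected service was found.
missing_services() {
  running=$(cat)
  status=0
  for svc in "$@"; do
    if ! printf '%s\n' "$running" | grep -qF -- "$svc"; then
      echo "$svc"
      status=1
    fi
  done
  return "$status"
}
```

For example: docker ps --format '{{.Names}}' | missing_services yolo4motion face4motion alpr4motion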

Overview image

The overview image is used to display the location of the camera icons specified in the add-on (n.b. top and left percentages). The mode may be local, indicating that a local image file should be used; the default is overview.jpg in the www/images/ directory. The other modes use the Google Maps API; they are:

- hybrid

- roadmap

- satellite

- terrain

The zoom value scales the images generated by the Google Maps API; it does not apply to local images.
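The Google Maps modes correspond to the maptype parameter of the Google Maps Static API, and zoom to its zoom parameter. The sketch below only shows how such a request URL is composed; the helper name, the fixed 640x480 size, and the center coordinates are illustrative assumptions, and it is not necessarily how motion-ai itself requests the image. A valid Google API key is required.

```shell
#!/bin/sh
# Hypothetical helper: compose a Google Maps Static API request URL for the
# overview image. Not necessarily how motion-ai itself fetches the image.
# Usage: overview_url <mode> <zoom> <lat,lng> <api-key>
# The 640x480 image size is an arbitrary illustrative choice.
overview_url() {
  mode=$1 zoom=$2 center=$3 key=$4
  case "$mode" in
    hybrid | roadmap | satellite | terrain) ;;
    *) echo "not a Google Maps mode: $mode" >&2; return 1 ;;
  esac
  printf 'https://maps.googleapis.com/maps/api/staticmap?center=%s&zoom=%s&size=640x480&maptype=%s&key=%s\n' \
    "$center" "$zoom" "$mode" "$key"
}
```

For example: curl -o overview.jpg "$(overview_url hybrid 20 37.422,-122.084 "$GOOGLE_API_KEY")". The local mode is rejected because it reads an image file instead of calling the API.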

Composition

The motion-ai solution is composed of two primary components:

- Home Assistant - open-source home automation system

- Open Horizon - edge AI platform

Home Assistant add-ons:

- motion - add-on for Home Assistant; captures images and video of motion (n.b. motion-project.github.io)

- MQTT - messaging broker

- FTP - optional; only required for ftpd type cameras

Open Horizon AI services:

- yolo4motion - object detection and classification

- face4motion - face detection

- alpr4motion - license plate detection and classification

- pose4motion - human pose estimation

Data may be saved locally and processed to produce historical graphs as well as exported for analysis using other tools (e.g. time-series database InfluxDB and analysis front-end Grafana). Data may also be processed using Jupyter notebooks.

Supported architectures include:

CPU only

- amd64 - Intel/AMD 64-bit virtual machines and devices

- aarch64 - ARMv8 64-bit devices

- armv7 - ARMv7 32-bit devices (e.g. RaspberryPi 3/4)

GPU accelerated

- aarch64 - with nVidia GPU

- amd64 - with nVidia GPU

- armv7 - with Google Coral Tensor Processing Unit

- armv7 - with Intel/Movidius Neural Compute Stick v2
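To see which of the architecture labels above applies to a host, the machine name reported by uname -m can be mapped onto them. This is a hypothetical sketch; the set of machine names it recognizes is an assumption, not a list taken from motion-ai.

```shell
#!/bin/sh
# Hypothetical helper: map a machine name from `uname -m` to the labels
# amd64, aarch64 or armv7. Defaults to the current machine.
# ASSUMPTION: these machine names cover the supported devices.
motion_arch() {
  machine=${1:-$(uname -m)}
  case "$machine" in
    x86_64 | amd64) echo amd64 ;;
    aarch64 | arm64) echo aarch64 ;;
    armv7*) echo armv7 ;;
    *) echo "unsupported: $machine" >&2; return 1 ;;
  esac
}
```

Run without arguments, motion_arch reports the current host. A 32-bit Raspberry Pi OS on a RaspberryPi 3 or 4 typically reports armv7l, while a 64-bit OS on the same board reports aarch64.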

Installation

Installation is performed in five (5) steps; see detailed instructions. The software has been tested on the following devices:

- RaspberryPi Model 3B+ and Model 4 (2 GB); Debian Buster

- nVidia Jetson Nano and TX2; Ubuntu 18.04

- VirtualBox VM; Ubuntu 18.04

- Generic AMD64 w/ nVidia GPU; Ubuntu 18.04

Accelerated hardware 1: nVidia Jetson Nano (aka tegra)

Recommended components:

- nVidia Jetson Nano developer kit; 4GB required

- 4+ amp power-supply or another option

- High-endurance micro-SD card; minimum: 64 Gbyte

- One (1) jumper or female-female wire for enabling power-supply

- Fan; 40x20mm; cool heat-sink

- SSD disk; optional; recommended: 250+ Gbyte

- USB3/SATA cable and/or enclosure

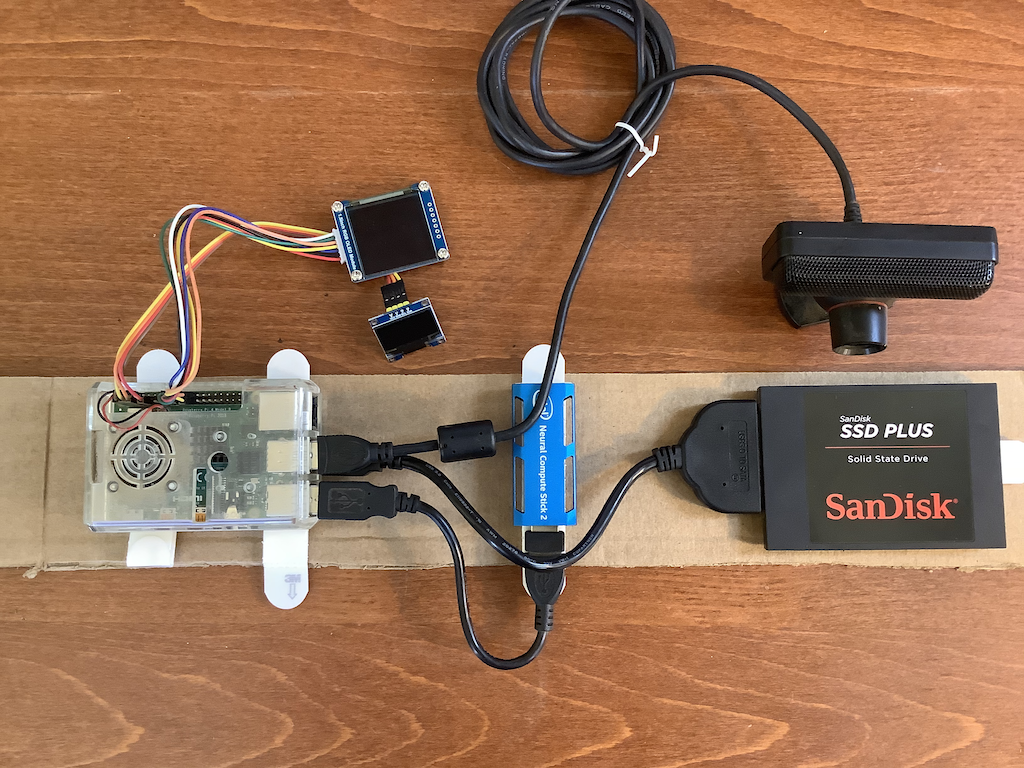

Accelerated hardware 2: RaspberryPi 4 with Intel NCS2 (aka ncs2)

This configuration includes dual OLED displays that show annotation text and images, as well as a USB-attached camera (n.b. Playstation3 PS/Eye camera). The Intel/NCS2 implementation is still in alpha and is not in the master branch.

# What is _edge AI_?

The edge of the network is where connectivity is lost and privacy is challenged.

Low-cost computing (e.g. RaspberryPi, nVidia Jetson Nano, Intel NUC) as well as hardware accelerators (e.g. Google Coral TPU, Intel Movidius Neural Compute Stick v2) provide the opportunity to utilize artificial intelligence in the privacy and safety of a home or business.

To support multiple operational scenarios and use cases (e.g. an elderly person's activities of daily living, or ADL), the platform is relatively agnostic toward AI models and hardware, and depends more on the availability of systems for development and testing.

An AI's prediction quality depends on the variety, volume, and veracity of its training data (n.b. see Understanding AI): the underlying deep convolutional neural networks, and other algorithms, must be trained using information that represents the scenario, use case, and environment; better predictions come from better information.

The Motion Ã👁 system provides a personal AI incorporating a wide variety of artificial intelligence, machine learning, and statistical models, as well as a closed-loop learning cycle (n.b. see Building a Better Bot) that increases the volume, variety, and veracity of the corpus of knowledge.

Example: Age@Home

This system may be used to build solutions for various operational scenarios (e.g. monitoring the elderly to determine patterns of daily activity and alerting caregivers and loved ones when aberrations occur); see the Age-At-Home project for more information.

Changelog & Releases

Releases are based on Semantic Versioning, and use the format of MAJOR.MINOR.PATCH. In a nutshell, the version will be incremented based on the following:

- MAJOR: Incompatible or major changes.

- MINOR: Backwards-compatible new features and enhancements.

- PATCH: Backwards-compatible bugfixes and package updates.
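The increment rules above can be expressed as a small helper. This is a hypothetical sketch, not a script from the repository; it expects a well-formed MAJOR.MINOR.PATCH string and, as Semantic Versioning specifies, resets the lower-order numbers to zero when a higher one is incremented.

```shell
#!/bin/sh
# Hypothetical helper: compute the next release number under the rules above.
# Usage: next_version <major|minor|patch> <MAJOR.MINOR.PATCH>
next_version() {
  case "$2" in
    *[!0123456789.]*) echo "invalid version: $2" >&2; return 1 ;;
    *.*.*) ;;
    *) echo "invalid version: $2" >&2; return 1 ;;
  esac
  major=${2%%.*}
  rest=${2#*.}
  minor=${rest%%.*}
  patch=${rest#*.}
  case "$1" in
    major) echo "$((major + 1)).0.0" ;;
    minor) echo "$major.$((minor + 1)).0" ;;
    patch) echo "$major.$minor.$((patch + 1))" ;;
    *) echo "unknown part: $1" >&2; return 1 ;;
  esac
}
```

For example, next_version minor 0.9.3 prints 0.10.0, and next_version major 0.9.3 prints 1.0.0.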

Author

David C Martin

Contribute:

- Let everyone know about this project

- Test a netcam or local camera and let me know

Add motion-ai as upstream to your repository:

git remote add upstream http://github.com/dcmartin/motion-ai.git

Please make sure you keep your fork up to date by regularly pulling from upstream.

git fetch upstream

git merge upstream/master


CLOC

| Language | files | blank | comment | code |
|---|---|---|---|---|
| YAML | 366 | 2437 | 1932 | 37667 |
| JSON | 12 | 0 | 0 | 5570 |
| Python | 94 | 996 | 494 | 4447 |
| Jupyter Notebook | 1 | 0 | 1020 | 927 |
| make | 2 | 126 | 95 | 750 |
| Bourne Shell | 4 | 25 | 24 | 116 |
| Markdown | 1 | 9 | 0 | 29 |
| JavaScript | 1 | 0 | 0 | 7 |
| -------- | -------- | -------- | -------- | -------- |
| SUM: | 481 | 3593 | 3565 | 49513 |