NervanaSystems / Ngraph


nGraph has moved to OpenVINO: https://github.com/openvinotoolkit/openvino

Architecture & features | Ecosystem | Release notes | Documentation | Contribution guide | License: Apache 2.0

Quick start

To begin using nGraph with popular frameworks, please refer to the links below.

| Framework (Version) | Installation guide | Notes |
|---|---|---|
| TensorFlow* | Pip install or Build from source | 20 Validated workloads |
| ONNX 1.5 | Pip install | 17 Validated workloads |

Python wheels for nGraph

The Python wheels for nGraph have been tested and are supported on the following 64-bit systems:

- Ubuntu 16.04 or later

- CentOS 7.6

- Debian 10

- macOS 10.14.3 (Mojave)

To install via pip, run:

```
pip install --upgrade pip==19.3.1
pip install ngraph-core
```
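After installing, you can confirm that pip actually registered the wheel. The following is a minimal sketch that uses only the Python standard library (`importlib.metadata`, Python 3.8+) and assumes the distribution name is `ngraph-core`, as in the pip command above. It reads package metadata only and does not import or exercise nGraph itself; the helper name `installed_version` is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a pip distribution, or None if absent.

    Only package metadata is inspected; the package is not imported.
    """
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version("ngraph-core")
    if found is None:
        print("ngraph-core is not installed; run: pip install ngraph-core")
    else:
        print(f"ngraph-core {found} is installed")
```

Checking metadata rather than importing keeps the check cheap and avoids depending on the module's import name, which this page does not state.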

Frameworks using the nGraph Compiler stack to execute workloads have shown up to a 45X performance boost compared to native framework implementations. We've also seen performance boosts on workloads that are not on the list of Validated workloads, thanks to nGraph's subgraph pattern matching.
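nGraph's own pattern matcher and fusion passes are not described on this page. As a rough illustration of the general idea only, here is a minimal, hypothetical Python sketch: a pattern with wildcard leaves is matched structurally against a toy computational graph, and every `Add(MatMul(x, w), b)` match is rewritten into a single fused node. The `Node` class, the op names, and `FusedMatMulAdd` are illustrative assumptions, not nGraph APIs.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """A node in a toy computational graph (hypothetical, not nGraph's API)."""
    op: str
    inputs: List["Node"] = field(default_factory=list)
    name: str = ""


def match(pattern: Node, node: Node) -> bool:
    """Structurally match `pattern` against the subgraph rooted at `node`.

    A pattern node whose op is "Any" is a wildcard that matches any subgraph.
    """
    if pattern.op == "Any":
        return True
    if pattern.op != node.op or len(pattern.inputs) != len(node.inputs):
        return False
    return all(match(p, n) for p, n in zip(pattern.inputs, node.inputs))


# Pattern for Add(MatMul(x, w), b), with wildcards for x, w, and b.
PATTERN = Node("Add", [Node("MatMul", [Node("Any"), Node("Any")]), Node("Any")])


def fuse_matmul_add(node: Node) -> Node:
    """Return a rewritten copy of the graph with each match replaced, bottom-up."""
    rebuilt = Node(node.op, [fuse_matmul_add(i) for i in node.inputs], node.name)
    if match(PATTERN, rebuilt):
        matmul, bias = rebuilt.inputs
        x, w = matmul.inputs
        return Node("FusedMatMulAdd", [x, w, bias], rebuilt.name)
    return rebuilt


def show(node: Node) -> str:
    """Render a graph as a nested expression string."""
    if not node.inputs:
        return node.name or node.op
    return f"{node.op}({', '.join(show(i) for i in node.inputs)})"


if __name__ == "__main__":
    x, w, b = (Node("Parameter", name=n) for n in ("x", "w", "b"))
    graph = Node("Relu", [Node("Add", [Node("MatMul", [x, w]), b])])
    print(show(fuse_matmul_add(graph)))  # Relu(FusedMatMulAdd(x, w, b))
```

Because the match is structural rather than tied to a specific model, the same rewrite fires on any graph containing the pattern, which is the intuition behind speedups on workloads outside a validated list.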

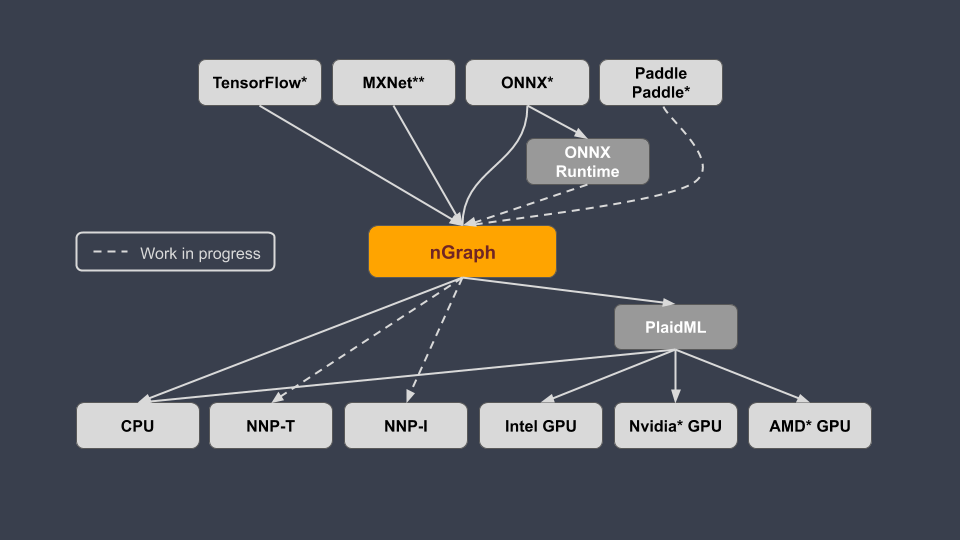

Additionally, we have integrated nGraph with PlaidML to provide deep learning performance acceleration on Intel, NVIDIA, and AMD GPUs. More details on the current architecture of the nGraph Compiler stack can be found in Architecture and features, and recent changes to the stack are explained in the Release Notes.

What is nGraph Compiler?

nGraph Compiler aims to accelerate the development of AI workloads with any deep learning framework and their deployment to a variety of hardware targets. We strongly believe in providing freedom, performance, and ease of use to AI developers.

The diagram below shows the deep learning frameworks and hardware targets supported by nGraph. NNP-T and NNP-I in the diagram refer to Intel's next-generation deep learning accelerators: the Intel® Nervana™ Neural Network Processor for Training and for Inference, respectively. Future plans for supporting additional deep learning frameworks and backends are outlined in the ecosystem section.

Our documentation has extensive information about how to use the nGraph Compiler stack to create an nGraph computational graph, integrate custom frameworks, and interact with supported backends. If you wish to contribute to the project, please don't hesitate to ask questions in GitHub issues after reviewing our contribution guide below.

How to contribute

We welcome community contributions to nGraph. If you have an idea for how to improve it:

- See the contrib guide for code formatting and style guidelines.

- Share your proposal via GitHub issues.

- Ensure you can build the product and run all the examples with your patch.

- In the case of a larger feature, create a test.

- Submit a pull request.

- Make sure your PR passes all CI tests. Note: you can test locally with `make check`.

We will review your contribution and, if any additional fixes or modifications are necessary, may provide feedback to guide you. When accepted, your pull request will be merged to the repository.