GitHub-HongweiZhang / Prediction Flow

Licence: mit

Deep-learning-based CTR models implemented in PyTorch

Stars: ✭ 138

Programming Languages

python

Projects that are alternatives of or similar to Prediction Flow

Image Caption Generator

A neural network to generate captions for an image using CNN and RNN with BEAM Search.

Stars: ✭ 126 (-8.7%)

Mutual labels: attention-mechanism, attention

Performer Pytorch

An implementation of Performer, a linear attention-based transformer, in Pytorch

Stars: ✭ 546 (+295.65%)

Mutual labels: attention-mechanism, attention

Neural sp

End-to-end ASR/LM implementation with PyTorch

Stars: ✭ 408 (+195.65%)

Mutual labels: attention-mechanism, attention

Seq2seq Summarizer

Pointer-generator reinforced seq2seq summarization in PyTorch

Stars: ✭ 306 (+121.74%)

Mutual labels: attention-mechanism, attention

Global Self Attention Network

A Pytorch implementation of Global Self-Attention Network, a fully-attention backbone for vision tasks

Stars: ✭ 64 (-53.62%)

Mutual labels: attention-mechanism, attention

Php Opencv Examples

Tutorial for computer vision and machine learning in PHP 7/8 by opencv (installation + examples + documentation)

Stars: ✭ 333 (+141.3%)

Mutual labels: torch, dnn

Structured Self Attention

A Structured Self-attentive Sentence Embedding

Stars: ✭ 459 (+232.61%)

Mutual labels: attention-mechanism, attention

ttslearn

ttslearn: Library for Pythonで学ぶ音声合成 (Text-to-speech with Python)

Stars: ✭ 158 (+14.49%)

Mutual labels: dnn, attention-mechanism

Isab Pytorch

An implementation of (Induced) Set Attention Block, from the Set Transformers paper

Stars: ✭ 21 (-84.78%)

Mutual labels: attention-mechanism, attention

Pytorch Gat

My implementation of the original GAT paper (Veličković et al.). I've additionally included the playground.py file for visualizing the Cora dataset, GAT embeddings, an attention mechanism, and entropy histograms. I've supported both Cora (transductive) and PPI (inductive) examples!

Stars: ✭ 908 (+557.97%)

Mutual labels: attention-mechanism, attention

Adaptiveattention

Implementation of "Knowing When to Look: Adaptive Attention via A Visual Sentinel for Image Captioning"

Stars: ✭ 303 (+119.57%)

Mutual labels: attention-mechanism, torch

Lambda Networks

Implementation of LambdaNetworks, a new approach to image recognition that reaches SOTA with less compute

Stars: ✭ 1,497 (+984.78%)

Mutual labels: attention-mechanism, attention

Attentionwalk

A PyTorch Implementation of "Watch Your Step: Learning Node Embeddings via Graph Attention" (NeurIPS 2018).

Stars: ✭ 266 (+92.75%)

Mutual labels: attention, torch

Gocv

Go package for computer vision using OpenCV 4 and beyond.

Stars: ✭ 4,511 (+3168.84%)

Mutual labels: torch, dnn

Pytorch Original Transformer

My implementation of the original transformer model (Vaswani et al.). I've additionally included the playground.py file for visualizing otherwise seemingly hard concepts. Currently included IWSLT pretrained models.

Stars: ✭ 411 (+197.83%)

Mutual labels: attention-mechanism, attention

AoA-pytorch

A Pytorch implementation of Attention on Attention module (both self and guided variants), for Visual Question Answering

Stars: ✭ 33 (-76.09%)

Mutual labels: attention, attention-mechanism

NTUA-slp-nlp

💻Speech and Natural Language Processing (SLP & NLP) Lab Assignments for ECE NTUA

Stars: ✭ 19 (-86.23%)

Mutual labels: attention, attention-mechanism

Vad

Voice activity detection (VAD) toolkit including DNN, bDNN, LSTM and ACAM based VAD. We also provide our directly recorded dataset.

Stars: ✭ 622 (+350.72%)

Mutual labels: attention, dnn

Attend infer repeat

A TensorFlow implementation of Attend, Infer, Repeat

Stars: ✭ 82 (-40.58%)

Mutual labels: attention-mechanism, attention

prediction-flow

prediction-flow is a Python package that provides modern deep-learning-based CTR models. The models are implemented in PyTorch.

how to use

- Install using pip.

pip install prediction-flow

feature

how to define feature

All feature types take two parameters, name and column_flow. The name parameter selects the column's raw data from the input data frame. The column_flow parameter is either a single transformer or a list of transformers, and may be None when no pre-processing is needed. The transformers pre-process the column data before the model is trained.

- dense number feature

Number('age', StandardScaler())

Number('ctr', None)

- sparse category feature

Category('movieId', CategoryEncoder(min_cnt=1))

- var length sequence feature

Sequence('genres', SequenceEncoder(sep='|', min_cnt=1))
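
The column_flow convention described above (None, one transformer, or a list applied in order) can be sketched without the library. This is only an illustration: the `apply_column_flow` helper and the toy `FillNa` and `Log1p` transformers are hypothetical names, not part of prediction-flow, and the library's own handling may differ.

```python
import math


class FillNa:
    """Toy transformer (hypothetical): replace None with a default value."""

    def __init__(self, value):
        self.value = value

    def transform(self, column):
        return [self.value if x is None else x for x in column]


class Log1p:
    """Toy transformer (hypothetical): apply log(1 + x) to each value."""

    def transform(self, column):
        return [math.log1p(x) for x in column]


def apply_column_flow(column, column_flow):
    """Apply None, a single transformer, or a list of transformers in order."""
    if column_flow is None:
        return list(column)
    if not isinstance(column_flow, (list, tuple)):
        column_flow = [column_flow]  # normalise the single-transformer case
    for transformer in column_flow:
        column = transformer.transform(column)
    return column


raw = [0.0, None, 3.0]
single = apply_column_flow(raw, FillNa(0.0))              # one transformer
chained = apply_column_flow(raw, [FillNa(0.0), Log1p()])  # list, applied in order
```

Filling nulls first and scaling second mirrors the requirement in the transformer table that null values be filled before the provided transformers are applied.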

transformer

The following transformers are currently provided.

| transformer | supported feature type | detail |
|---|---|---|
| StandardScaler | Number | Wrapper around scikit-learn's StandardScaler. Null values must be filled in advance. |
| LogTransformer | Number | Log scaling. Null values must be filled in advance. |
| CategoryEncoder | Category | Converts string values to integers. Null values must be filled in advance with '__UNKNOWN__'. |
| SequenceEncoder | Sequence | Converts sequence string values to integers. Null values must be filled in advance with '__UNKNOWN__'. |
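
To make the encoder rows concrete, here is a minimal sketch of string-to-integer encoding with a min_cnt threshold and an '__UNKNOWN__' placeholder. It is not the library's implementation: the function names are hypothetical, and reserving index 0 for '__UNKNOWN__' and mapping rare values to it are assumptions of this sketch.

```python
from collections import Counter

UNKNOWN = "__UNKNOWN__"


def fit_vocab(values, min_cnt=1):
    """Assign an integer index to every value seen at least min_cnt times.

    Index 0 is reserved for UNKNOWN (an assumption of this sketch).
    """
    counts = Counter(values)
    kept = sorted(v for v, c in counts.items() if c >= min_cnt and v != UNKNOWN)
    vocab = {UNKNOWN: 0}
    for value in kept:
        vocab[value] = len(vocab)
    return vocab


def encode_category(values, vocab):
    """Encode one string per row; unseen or rare values fall back to UNKNOWN."""
    return [vocab.get(v, vocab[UNKNOWN]) for v in values]


def encode_sequence(values, vocab, sep="|"):
    """Encode one separator-delimited string per row into a list of ints."""
    return [[vocab.get(t, vocab[UNKNOWN]) for t in v.split(sep)] for v in values]


# Nulls are assumed to have been replaced by UNKNOWN beforehand.
movie_ids = ["m1", "m2", "m1", UNKNOWN]
movie_vocab = fit_vocab(movie_ids, min_cnt=2)  # "m2" occurs once, so it is dropped
encoded_ids = encode_category(movie_ids, movie_vocab)

genres = ["Action|Comedy", "Comedy", UNKNOWN]
genre_vocab = fit_vocab([t for g in genres for t in g.split("|")], min_cnt=1)
encoded_genres = encode_sequence(genres, genre_vocab)
```

In this sketch a value below the min_cnt threshold shares the index of '__UNKNOWN__', so the number of embedding rows a model needs stays bounded by the vocabulary size.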

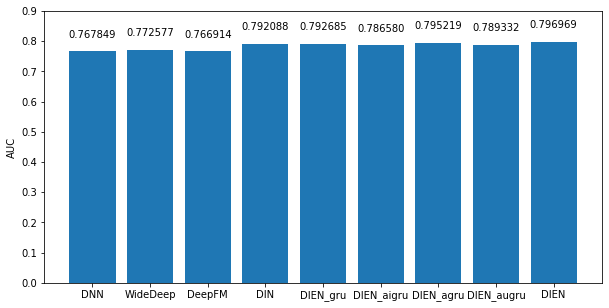

model

| model | reference |
|---|---|
| DNN | - |
| Wide & Deep | [DLRS 2016] Wide & Deep Learning for Recommender Systems |
| DeepFM | [IJCAI 2017] DeepFM: A Factorization-Machine based Neural Network for CTR Prediction |
| DIN | [KDD 2018] Deep Interest Network for Click-Through Rate Prediction |
| DNN + GRU + GRU + Attention | [AAAI 2019] Deep Interest Evolution Network for Click-Through Rate Prediction |
| DNN + GRU + AIGRU | [AAAI 2019] Deep Interest Evolution Network for Click-Through Rate Prediction |
| DNN + GRU + AGRU | [AAAI 2019] Deep Interest Evolution Network for Click-Through Rate Prediction |
| DNN + GRU + AUGRU | [AAAI 2019] Deep Interest Evolution Network for Click-Through Rate Prediction |
| DIEN | [AAAI 2019] Deep Interest Evolution Network for Click-Through Rate Prediction |
| OTHER | TODO |

example

movielens-1M

This dataset is used only to check that the code runs; the resulting accuracy is not meaningful.

- Prepare the dataset: preprocess.ipynb

- Run the model: movielens-1m.ipynb

amazon

- Prepare the dataset: prepare_neg.ipynb

- Run the model: amazon.ipynb

- An example using pytorch-lightning: amazon-lightning.ipynb

accuracy

acknowledgements and references

- Following the design of DeepCTR, features are divided into dense (class Number), sparse (class Category), and sequence (class Sequence) types.
