tomguluson92 / Prnet_pytorch

PRNet PyTorch 1.1.0

This is an unofficial PyTorch implementation of PRNet, written because no complete code was available for generating the training data from the 300WLP dataset and training on it.

- Author: Samuel Ko, mjanddy.

Update Log

@date: 2019.11.13

@notice: mj fixed an important bug in loading the UV map: the original uv_map.jpg is flipped, so *.npy files are used instead to correct this problem. Thanks to mjanddy!

@date: 2019.11.14

@notice: The inference stage has been uploaded, and a pretrained model is available at results/latest.pth. Thanks to mjanddy!

Notice

Since the image reader now uses cv2.imread instead of the default PIL.Image, you need to make a small change to your TensorBoard package in your_python_path/site-packages/torch/utils/tensorboard/summary.py.

Specifically, add tensor = tensor[:, :, ::-1] before image = Image.fromarray(tensor) in the function make_image(...):

```python
...

def make_image(tensor, rescale=1, rois=None):
    """Convert an numpy representation image to Image protobuf"""
    from PIL import Image
    height, width, channel = tensor.shape
    scaled_height = int(height * rescale)
    scaled_width = int(width * rescale)
    tensor = tensor[:, :, ::-1]  # added: reverse channel order (BGR from cv2 -> RGB for PIL)
    image = Image.fromarray(tensor)
    ...

...
```
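To see why the flip is needed: cv2.imread returns channels in BGR order, whereas PIL's Image.fromarray reads a 3-channel uint8 array as RGB, so without the flip red and blue appear swapped in TensorBoard. The numpy sketch below shows the same channel reversal on a single pixel; bgr_to_rgb is a hypothetical helper, not part of this repo or of torch.

```python
import numpy as np


def bgr_to_rgb(image: np.ndarray) -> np.ndarray:
    """Reverse the channel axis of an HxWx3 image (BGR <-> RGB).

    Hypothetical helper: same operation as the tensor[:, :, ::-1] line added
    to make_image; np.ascontiguousarray copies the reversed view into a
    contiguous buffer.
    """
    if image.ndim != 3 or image.shape[2] != 3:
        raise ValueError(f"expected an HxWx3 array, got shape {image.shape}")
    return np.ascontiguousarray(image[:, :, ::-1])


# A 1x1 pure-blue pixel as cv2.imread would return it (BGR order).
blue_bgr = np.array([[[255, 0, 0]]], dtype=np.uint8)
blue_rgb = bgr_to_rgb(blue_bgr)
print(blue_rgb[0, 0].tolist())  # [0, 0, 255]
```

Applying such a helper to HWC images before logging them is one possible alternative to editing site-packages (a suggestion, not what this repo does); use one approach or the other, since doing both flips the channels back.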

① Pre-Requirements

Before generating UV position maps and training, the first step is to generate BFM.mat from the Basel Face Model.

For simplicity, the corresponding BFM.mat has been provided here.

After downloading it, move BFM.mat to utils/.

The required Python packages are listed in requirements.txt.

② Generate uv_pos_map

YadiraF/face3d provides scripts for generating uv_pos_map; here I wrap them for batch processing.

You can use utils/generate_posmap_300WLP.py as follows:

```bash
python3 generate_posmap_300WLP.py --input_dir ./dataset/300WLP/IBUG/ --save_dir ./300WLP_IBUG/
```
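The batch wrapper's job can be pictured as follows: find every image that has a matching annotation, then give each one its own numbered output folder. This is a minimal sketch, assuming 300W-LP stores each <name>.jpg next to a <name>.mat fitting file and that output folders are numbered in sorted filename order; plan_batch is a hypothetical helper, and generate_posmap_300WLP.py may order or filter files differently.

```python
import os
from typing import List, Tuple


def plan_batch(input_dir: str, save_dir: str) -> List[Tuple[str, str, str]]:
    """Pair each image with its .mat annotation and assign an output folder.

    Assumption: every <name>.jpg in input_dir has a <name>.mat beside it;
    images without an annotation are skipped. Output folders are numbered
    0, 1, 2, ... in sorted filename order (hypothetical convention).
    """
    jobs = []
    stems = sorted(
        os.path.splitext(f)[0] for f in os.listdir(input_dir) if f.endswith(".jpg")
    )
    for stem in stems:
        mat_path = os.path.join(input_dir, stem + ".mat")
        if not os.path.isfile(mat_path):
            continue  # no ground-truth fitting for this image
        out_dir = os.path.join(save_dir, str(len(jobs)))
        jobs.append((os.path.join(input_dir, stem + ".jpg"), mat_path, out_dir))
    return jobs
```

Each (image, mat, out_dir) job would then be handed to the face3d-based posmap generation.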

The resulting 300WLP_IBUG directory has the structure expected for training PRNet:

- 300WLP_IBUG
  - 0/
    - IBUG_image_xxx.npy
    - original.jpg (original RGB)
    - uv_posmap.jpg (corresponding UV Position Map)
  - 1/
  - **...**
  - 100/

Besides downloading 300WLP yourself, you can use the processed original/uv_posmap pairs of IBUG that I provide here.
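Given the directory layout above, a training loader only needs to pair each sample folder's image with its UV position map. A minimal sketch, assuming each numbered folder holds original.jpg plus exactly one *.npy UV position map (the update log notes that *.npy replaces the flipped uv_map.jpg); collect_pairs is a hypothetical helper, not the loader used by train.py.

```python
import glob
import os
from typing import List, Tuple


def collect_pairs(root: str) -> List[Tuple[str, str]]:
    """Return (image_path, posmap_path) for every numbered sample folder under root.

    Hypothetical helper. Assumes each folder contains original.jpg and exactly
    one *.npy file holding the UV position map; folders missing either are skipped.
    """
    folders = [d for d in os.listdir(root) if os.path.isdir(os.path.join(root, d))]
    # Sort numerically so "10" comes after "9"; non-numeric names go last.
    folders.sort(key=lambda d: (not d.isdigit(), int(d) if d.isdigit() else 0, d))
    pairs = []
    for d in folders:
        folder = os.path.join(root, d)
        image = os.path.join(folder, "original.jpg")
        npys = glob.glob(os.path.join(folder, "*.npy"))
        if os.path.isfile(image) and len(npys) == 1:
            pairs.append((image, npys[0]))
    return pairs
```

Each pair could then be read with cv2.imread and np.load inside a torch.utils.data.Dataset.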

③ Training

After finishing the two steps above, you can train your own PRNet with:

```bash
python3 train.py --train_dir ./300WLP_IBUG
```

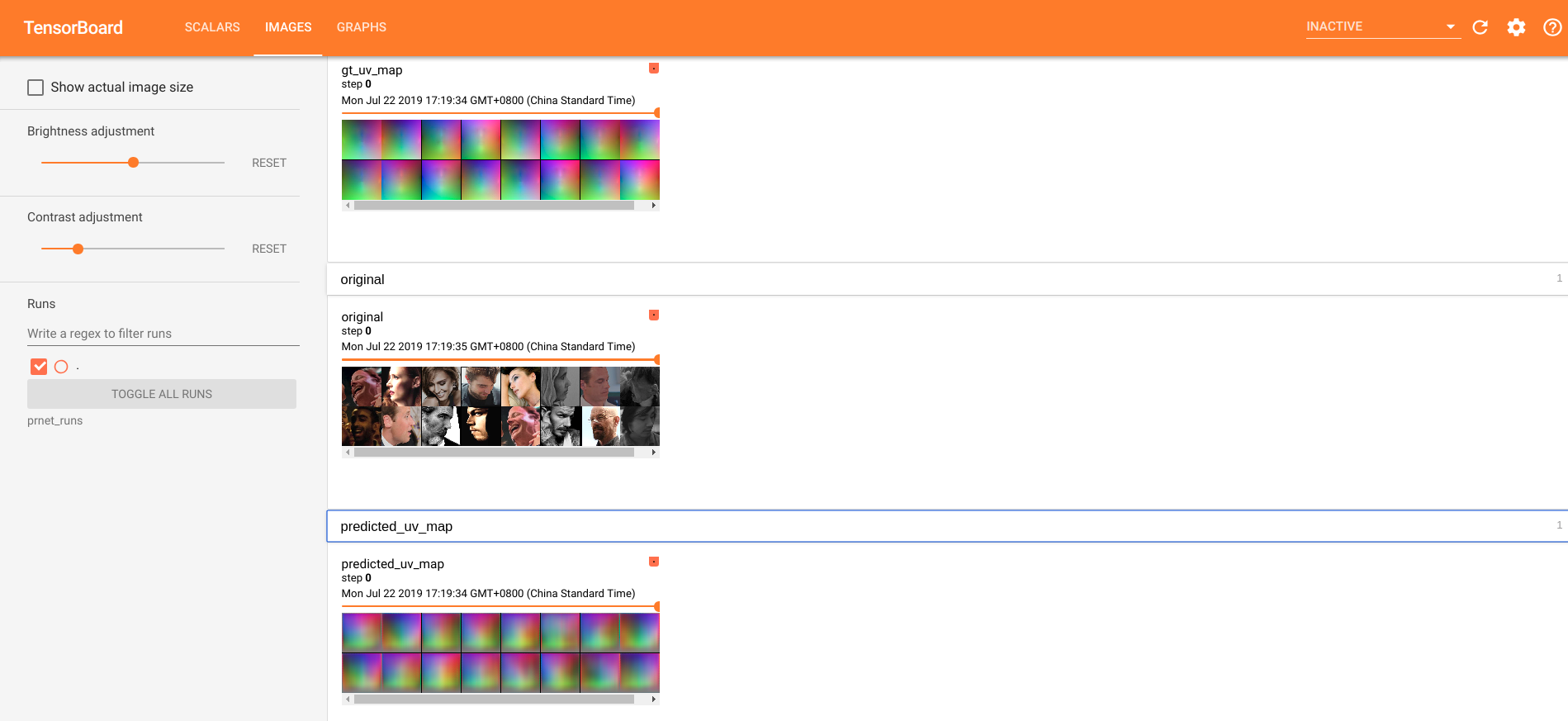
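For context on what training optimizes: the cited PRNet paper defines its loss as the sum over UV pixels of the distance between the predicted and ground-truth positions, weighted by a mask W(x, y) that emphasizes important facial regions. This README does not say whether train.py uses exactly this loss, so the numpy sketch below only illustrates the paper's formula; weighted_posmap_loss is a hypothetical name, and any weight mask you pass in is your own assumption.

```python
import numpy as np


def weighted_posmap_loss(pred: np.ndarray, gt: np.ndarray, weight: np.ndarray) -> float:
    """Weighted position-map loss in the form given by the PRNet paper:
    sum over pixels of ||P(x, y) - P~(x, y)|| * W(x, y).

    pred, gt: HxWx3 UV position maps; weight: HxW mask (hypothetical input).
    """
    per_pixel_dist = np.linalg.norm(pred - gt, axis=-1)  # Euclidean distance per pixel
    return float(np.sum(per_pixel_dist * weight))
```

A pixel with a zero weight (for example, outside the face region) contributes nothing, so the mask decides which parts of the UV map the network is pushed to get right.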

You can use TensorBoard to visualize the intermediate outputs at localhost:6006:

```bash
tensorboard --logdir=absolute_path_of_prnet_runs/
```

The following image can be used to judge how well PRNet performs on unseen data. From left to right: Original, UV_MAP_gt, UV_MAP_predicted.

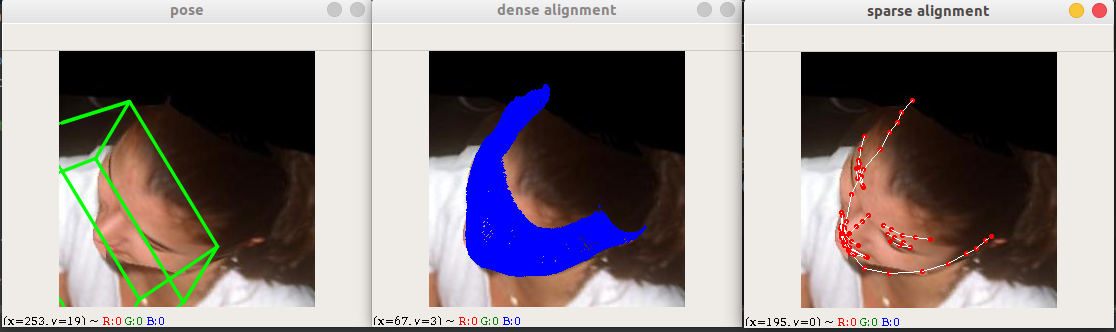
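The three-panel image above (original, ground-truth UV map, predicted UV map) is simply three same-sized images placed side by side. A numpy sketch, assuming all three are HxWx3 arrays with the same shape and value range; make_comparison_panel is a hypothetical helper, and the repo's own visualization code may differ.

```python
import numpy as np


def make_comparison_panel(original, uv_gt, uv_pred):
    """Concatenate three HxWx3 images side by side: original | ground truth | prediction."""
    images = [np.asarray(img) for img in (original, uv_gt, uv_pred)]
    if len({img.shape for img in images}) != 1:
        raise ValueError("all three images must have the same HxWx3 shape")
    return np.concatenate(images, axis=1)  # join along the width axis
```

The result could be logged with SummaryWriter.add_image(tag, panel, global_step, dataformats="HWC") from torch.utils.tensorboard, after scaling the position maps to uint8 (0-255) or float (0-1).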

④ Inference

Use the following command to run PRNet inference; details about the parameters can be found in inference.py.

```bash
python3 inference.py -i input_dir -o output_dir --model model_path --gpu 0
```

- -i: input directory (default: TestImages)
- -o: output directory (default: TestImages/results)
- --model: model path (default: results/latest.pth)
- --gpu: GPU id to use (-1 denotes CPU)
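A UV position map, as defined in the cited paper, records the 3D coordinates of the face surface in UV space, so a predicted map can be turned into a point cloud by reading off one (x, y, z) triple per pixel. A minimal numpy sketch, assuming an HxWx3 output map; posmap_to_points and its optional face mask are hypothetical. If the network output is normalized, it would need rescaling first; check inference.py for the exact convention.

```python
import numpy as np


def posmap_to_points(posmap, mask=None):
    """Flatten an HxWx3 UV position map into an (N, 3) array of 3D points.

    Each pixel stores the (x, y, z) coordinate of one face-surface point;
    an optional HxW boolean mask (hypothetical) keeps only selected pixels.
    """
    posmap = np.asarray(posmap)
    if posmap.ndim != 3 or posmap.shape[2] != 3:
        raise ValueError(f"expected HxWx3, got {posmap.shape}")
    if mask is None:
        return posmap.reshape(-1, 3)
    return posmap[np.asarray(mask, dtype=bool)]
```

Without a mask every pixel becomes a point, including UV pixels outside the face; a mask restricts the cloud to the region you care about.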

Citation

If you use this code, please consider citing:

```bibtex
@inProceedings{feng2018prn,
  title     = {Joint 3D Face Reconstruction and Dense Alignment with Position Map Regression Network},
  author    = {Yao Feng and Fan Wu and Xiaohu Shao and Yanfeng Wang and Xi Zhou},
  booktitle = {ECCV},
  year      = {2018}
}
```