

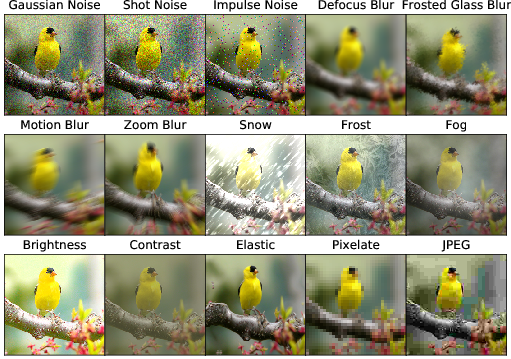

Benchmarking Neural Network Robustness to Common Corruptions and Perturbations

This repository contains the datasets and some code for the paper Benchmarking Neural Network Robustness to Common Corruptions and Perturbations (ICLR 2019) by Dan Hendrycks and Thomas Dietterich.

Requires Python 3+ and PyTorch 0.3+. For evaluation, please download the data from the links below.

ImageNet-C

Download ImageNet-C here. (Mirror.)

Download Tiny ImageNet-C here. (Mirror.)

Tiny ImageNet-C has 200 classes with images of size 64x64, while ImageNet-C has all 1000 classes, with each image at the standard size. For even quicker experimentation, there are CIFAR-10-C and CIFAR-100-C. Evaluating on the JPEGs above is strongly preferred over computing the corruptions in memory, because it keeps evaluation deterministic and consistent.
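Evaluating from the JPEGs usually means looping over every corruption and severity. The sketch below is a minimal, hypothetical helper (`iter_corruption_dirs` is not part of this repository). It assumes the extracted archives follow a `<root>/<corruption>/<severity>/<class>/<image>.JPEG` layout and that the 15 benchmark corruptions use the directory names listed in `CORRUPTIONS`. Check both assumptions against your download.

```python
from pathlib import Path
from typing import Iterator, Tuple

# Assumed directory names of the 15 benchmark corruptions in the extracted archives.
CORRUPTIONS = (
    "gaussian_noise", "shot_noise", "impulse_noise",
    "defocus_blur", "glass_blur", "motion_blur", "zoom_blur",
    "snow", "frost", "fog", "brightness",
    "contrast", "elastic_transform", "pixelate", "jpeg_compression",
)
SEVERITIES = range(1, 6)  # severities 1 (mildest) through 5 (strongest)


def iter_corruption_dirs(root: str) -> Iterator[Tuple[str, int, Path]]:
    """Yield (corruption, severity, directory) for every split present on disk.

    Assumes the layout <root>/<corruption>/<severity>/<class>/<image>.JPEG.
    Missing directories are skipped, so a partial download still works.
    """
    base = Path(root)
    for corruption in CORRUPTIONS:
        for severity in SEVERITIES:
            path = base / corruption / str(severity)
            if path.is_dir():
                yield corruption, severity, path
```

Each yielded directory can then be handed to an image-folder loader such as `torchvision.datasets.ImageFolder`, using whatever preprocessing your model expects, and the per-split top-1 errors collected for the mCE computation.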

ImageNet-C Leaderboard

ImageNet-C Robustness with a ResNet-50 Backbone trained on ImageNet-1K and evaluated on 224x224x3 images.

| Method | Reference | Standalone? | mCE | Clean Error |
|---|---|---|---|---|
| DeepAugment+AugMix | Hendrycks et al. | No | 53.6% | 24.2% |
| Assemble-ResNet50 | Lee et al. | No | 56.5% | 17.90% |
| ANT (3x3) | Rusak and Schott et al. | Yes | 63% | 23.9% |
| BlurAfterConv | Vasconcelos et al. | Yes | 64.9% | 21.2% |
| AugMix | Hendrycks and Mu et al. (ICLR 2020) | Yes | 65.3% | 22.47% |
| Stylized ImageNet | Geirhos et al. (ICLR 2019) | Yes | 69.3% | 25.41% |
| Patch Uniform | Lopes et al. | Yes | 74.3% | 24.5% |
| ResNet-50 Baseline | N/A | | 76.7% | 23.85% |

"Standalone" indicates whether the method is a combination of techniques or a standalone/single method. Combining methods and proposing standalone methods are both valuable but not necessarily commensurable.

Be sure to check each paper for results on all 15 corruptions: some of these techniques improve robustness on every corruption, some help on certain corruptions and hurt on others, and some are exceptionally strong against noise corruptions. Other backbones can obtain better results; for example, a vanilla ResNeXt-101 has an mCE of 62.2%. Note that Lopes et al. report a ResNet-50 baseline with an mCE of 80.6%, so their improvement is larger than the table immediately suggests.
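As a worked check using only the figures quoted above, Patch Uniform's reduction in mCE depends on which ResNet-50 baseline it is measured against:

```latex
\begin{aligned}
\text{vs. the leaderboard baseline:}\quad & 76.7\% - 74.3\% = 2.4 \text{ points} \\
\text{vs. Lopes et al.'s own baseline:}\quad & 80.6\% - 74.3\% = 6.3 \text{ points}
\end{aligned}
```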

Submit a pull request if you beat the state-of-the-art on ImageNet-C with a ResNet-50 backbone.

UPDATE: New Robustness Benchmarks

For other distribution-shift benchmarks in the spirit of ImageNet-C, consider ImageNet-A or ImageNet-R.

ImageNet-A contains real-world, unmodified natural images that cause model accuracy to degrade substantially. ImageNet-R(endition) has 30,000 renditions of ImageNet classes covering art, cartoons, deviantart, graffiti, embroidery, graphics, origami, paintings, patterns, plastic objects, plush objects, sculptures, sketches, tattoos, toys, and video games.

Calculating the mCE

This spreadsheet shows how to calculate the mean Corruption Error.
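Following the definition in the paper, let E_{s,c} be a classifier's top-1 error on corruption c at severity s. The corruption error normalizes by AlexNet, CE_c = (sum over s = 1..5 of E_{s,c}) / (the same sum for AlexNet), and mCE is the unweighted mean of CE_c over the 15 corruptions. The relative variant first subtracts each model's clean error from every E_{s,c}. The Python sketch below implements these formulas; the function names are hypothetical, and the AlexNet reference errors are deliberately left as inputs rather than hard-coded, so take them from the paper or the spreadsheet.

```python
from typing import Mapping, Sequence

# errors[corruption] holds top-1 error rates (0-1) for severities 1..5, in order.
Errors = Mapping[str, Sequence[float]]


def corruption_error(model_errs: Sequence[float], alexnet_errs: Sequence[float]) -> float:
    """CE_c = sum_s E_{s,c}(model) / sum_s E_{s,c}(AlexNet)."""
    if len(model_errs) != len(alexnet_errs):
        raise ValueError("need one error per severity for both models")
    return sum(model_errs) / sum(alexnet_errs)


def mean_corruption_error(model: Errors, alexnet: Errors) -> float:
    """mCE: unweighted mean of CE_c over the corruptions present in `model`."""
    ces = [corruption_error(model[c], alexnet[c]) for c in model]
    return sum(ces) / len(ces)


def relative_mce(model: Errors, model_clean: float,
                 alexnet: Errors, alexnet_clean: float) -> float:
    """Relative mCE: each CE is computed on errors minus the clean error."""
    ces = []
    for c in model:
        num = sum(e - model_clean for e in model[c])
        den = sum(e - alexnet_clean for e in alexnet[c])
        ces.append(num / den)
    return sum(ces) / len(ces)
```

Multiply the result by 100 to report it as a percentage, as in the leaderboard above.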

ImageNet-P

ImageNet-P sequences are MP4s, not GIFs. The spatter perturbation sequence is a validation sequence.

Download Tiny ImageNet-P here. (Mirror.)

Download ImageNet-P here. (Mirror.)

ImageNet-P Leaderboard

ImageNet-P Perturbation Robustness with a ResNet-50 Backbone

| Method | Reference | mFR | mT5D |
|---|---|---|---|
| AugMix | Hendrycks and Mu et al. (ICLR 2020) | 37.4% | |
| Low Pass Filter Pooling (bin-5) | Zhang (ICML 2019) | 51.2% | 71.9% |
| ResNet-50 Baseline | N/A | 58.0% | 78.4% |
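As described in the paper, the flip probability FP of a classifier on a perturbation is the fraction of consecutive frame pairs, across all sequences, where its top-1 prediction changes; for the noise perturbations, each frame is compared with the first frame of its sequence instead. The flip rate FR divides FP by AlexNet's FP on the same perturbation, and mFR averages FR over perturbations. The sketch below works on precomputed top-1 predictions and assumes you have already decoded the MP4 frames and run the model. The function names are hypothetical, and mT5D is not covered.

```python
from typing import Sequence

# predictions[i][j] = top-1 class the model assigns to frame j of sequence i.
Predictions = Sequence[Sequence[int]]


def flip_probability(predictions: Predictions, noise: bool = False) -> float:
    """Fraction of frame comparisons where the top-1 prediction flips.

    By default each frame is compared with the previous frame. With noise=True,
    each frame is compared with the first frame of its sequence instead.
    """
    flips = 0
    comparisons = 0
    for seq in predictions:
        for j in range(1, len(seq)):
            reference = seq[0] if noise else seq[j - 1]
            flips += int(seq[j] != reference)
            comparisons += 1
    return flips / comparisons


def flip_rate(model_fp: float, alexnet_fp: float) -> float:
    """FR = FP(model) / FP(AlexNet) on one perturbation; mFR averages FR."""
    return model_fp / alexnet_fp
```

For sequences of equal length n, `comparisons` equals m(n - 1) for m sequences, which matches the normalization in the paper.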

Submit a pull request if you beat the state-of-the-art on ImageNet-P.

Citation

If you find this useful in your research, please consider citing:

@article{hendrycks2019robustness,
  title={Benchmarking Neural Network Robustness to Common Corruptions and Perturbations},
  author={Dan Hendrycks and Thomas Dietterich},
  journal={Proceedings of the International Conference on Learning Representations},
  year={2019}
}

Part of the code was contributed by Tom Brown.