mkearney / Rstudioconf_tweets



rstudio::conf tweets

A repository for tracking tweets about rstudio::conf 2018. Read more about the RStudio conference at rstudio.com/conference/.

Data

Two data collection methods are described in detail below. However, if you want to skip straight to the data, run the following code:

```r
## download status IDs file
## (mode = "wb" keeps the binary .rds file intact on Windows)
download.file(
  "https://github.com/mkearney/rstudioconf_tweets/blob/master/data/search-ids.rds?raw=true",
  "rstudioconf_search-ids.rds",
  mode = "wb"
)

## read status IDs from downloaded file
ids <- readRDS("rstudioconf_search-ids.rds")

## look up data associated with status IDs
rt <- rtweet::lookup_tweets(ids$status_id)
```

rtweet

Whether you look up the status IDs or search/stream new tweets, make sure you've installed the rtweet package. The code below will install rtweet [if it's not already installed] and load it.

```r
## install rtweet if not already installed
if (!requireNamespace("rtweet", quietly = TRUE)) {
  install.packages("rtweet")
}

## load rtweet
library(rtweet)
```

Twitter APIs

There are two easy [and free] ways to get lots of Twitter data, filtering by one or more keywords. Each method is described and demonstrated below.

Stream

The first way is to stream the data (using Twitter's stream API). For example, in the code below, a stream is set up to run continuously from the moment it's executed until midnight (Pacific time) at the end of Saturday, February 3, to roughly coincide with the end of the conference.

```r
## set stream time: seconds from now until midnight Pacific time
## (the deadline must be parsed in that time zone, not the local one)
timeout <- as.numeric(
  difftime(as.POSIXct("2018-02-04 00:00:00", tz = "America/Los_Angeles"),
           Sys.time(), units = "secs")
)

## search terms
rstudioconf <- c("rstudioconf", "rstudio::conf",
                 "rstudioconference", "rstudioconference18",
                 "rstudioconference2018", "rstudio18",
                 "rstudioconf18", "rstudioconf2018",
                 "rstudio::conf18", "rstudio::conf2018")

## name of file to save output (create the data directory if needed)
dir.create("data", showWarnings = FALSE)
json_file <- file.path("data", "stream.json")

## stream the tweets and write to "data/stream.json"
stream_tweets(
  q = paste(rstudioconf, collapse = ","),
  timeout = timeout,
  file_name = json_file,
  parse = FALSE
)

## parse json data and convert to tibble
rt <- parse_stream(json_file)
```
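To make the `timeout` calculation easier to check, here is a small sketch that wraps it in a function. `secs_until()` is a hypothetical helper (not part of rtweet); it assumes the deadline should be read as Pacific time and clamps a deadline that has already passed to zero rather than returning a negative number of seconds.

```r
## hypothetical helper: seconds from `now` until a wall-clock deadline
## interpreted in time zone `tz`
secs_until <- function(deadline, tz = "America/Los_Angeles", now = Sys.time()) {
  ## parse the deadline in the conference's time zone
  end <- as.POSIXct(deadline, tz = tz)
  secs <- as.numeric(difftime(end, now, units = "secs"))
  ## a past deadline would give a negative value; clamp it to zero
  max(secs, 0)
}

## e.g. timeout <- secs_until("2018-02-04 00:00:00")
```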

Search

The second easy way to gather Twitter data using one or more keywords is to search for the data (using Twitter's REST API). Unlike streaming, searching makes it possible to go back in time. Unfortunately, Twitter sets a rather restrictive cap (roughly nine days) on how far back you can go. Regardless, searching for tweets is often the preferred method. For example, the code below is set up so that it can be executed once [or even several times] a day throughout the conference.

```r
## search terms
rstudioconf <- c("rstudioconf", "rstudio::conf",
                 "rstudioconference", "rstudioconference18",
                 "rstudioconference2018", "rstudio18",
                 "rstudioconf18", "rstudioconf2018",
                 "rstudio::conf18", "rstudio::conf2018")

## make sure the data directory exists
dir.create("data", showWarnings = FALSE)

## use since_id from previous search (if it exists); search results come
## back newest first, so the first row should hold the most recent ID
if (file.exists(file.path("data", "search.rds"))) {
  since_id <- readRDS(file.path("data", "search.rds"))
  since_id <- since_id$status_id[1]
} else {
  since_id <- NULL
}

## search for up to 100,000 tweets mentioning rstudio::conf
rt <- search_tweets(
  paste(rstudioconf, collapse = " OR "),
  n = 1e5, verbose = FALSE,
  since_id = since_id,
  retryonratelimit = TRUE
)

## if there's already a search data file saved, then read it in,
## drop the duplicates, and then update the `rt` data object
if (file.exists(file.path("data", "search.rds"))) {
  ## bind rows (for tweets AND users data)
  rt <- do_call_rbind(
    list(rt, readRDS(file.path("data", "search.rds"))))
  ## determine whether each observation has a unique status ID
  kp <- !duplicated(rt$status_id)
  ## only keep rows (observations) with unique status IDs
  users <- users_data(rt)[kp, ]
  ## the rows of users should correspond with the tweets
  rt <- rt[kp, ]
  ## restore as users attribute
  attr(rt, "users") <- users
}

## save the data
saveRDS(rt, file.path("data", "search.rds"))

## save shareable data (only status_ids)
saveRDS(rt[, "status_id"], file.path("data", "search-ids.rds"))
```
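The update logic in the search code (put the new results on top of the saved ones, then keep only the first copy of each status ID) can be checked on toy data without calling the API. The data frames below are made up, and `merge_searches()` is a hypothetical helper that uses base R `rbind()` in place of rtweet's `do_call_rbind()`, so it ignores the users attribute. Status IDs are kept as character strings, since Twitter's 64-bit IDs are too large to store exactly as doubles.

```r
## made-up stand-ins for a saved search and a newer one,
## both ordered newest first like search results
old <- data.frame(status_id = c("105", "104", "103"),
                  text = c("c", "b", "a"), stringsAsFactors = FALSE)
new <- data.frame(status_id = c("107", "106", "105"),
                  text = c("e", "d", "c"), stringsAsFactors = FALSE)

## hypothetical helper: new results first, then drop repeated status IDs
merge_searches <- function(new, old) {
  combined <- rbind(new, old)
  combined[!duplicated(combined$status_id), , drop = FALSE]
}

merged <- merge_searches(new, old)
## the first row holds the most recent ID, i.e. the next since_id
next_since_id <- merged$status_id[1]
```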

Explore

To explore the Twitter data, go ahead and load the tidyverse packages.

```r
suppressPackageStartupMessages(library(tidyverse))
```

Tweet frequency over time

In the code below, the data is summarized into a time series-like data frame and then plotted in order to depict the frequency of tweets about rstudio::conf over time, aggregated using two-hour intervals.

```r
rt %>%
  filter(created_at > "2018-01-29") %>%
  ts_plot("2 hours", color = "transparent") +
  geom_smooth(method = "loess", se = FALSE, span = .1,
              size = 2, colour = "#0066aa") +
  geom_point(size = 5,
             shape = 21, fill = "#ADFF2F99", colour = "#000000dd") +
  theme_minimal(base_size = 15, base_family = "Roboto Condensed") +
  theme(axis.text = element_text(colour = "#222222"),
        plot.title = element_text(size = rel(1.7), face = "bold"),
        plot.subtitle = element_text(size = rel(1.3)),
        plot.caption = element_text(colour = "#444444")) +
  labs(title = "Frequency of tweets about rstudio::conf over time",
       subtitle = "Twitter status counts aggregated using two-hour intervals",
       caption = "\n\nSource: Data gathered via Twitter's standard `search/tweets` API using rtweet",
       x = NULL, y = NULL)
```
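For intuition about what "aggregated using two-hour intervals" means, the sketch below counts timestamps per two-hour bin in base R. It illustrates the idea only and is not rtweet's `ts_plot()` implementation, which may align or fill intervals differently. The timestamps are made up, and `count_by_interval()` is a hypothetical helper that aligns bins to multiples of the interval width since the Unix epoch (UTC).

```r
## made-up tweet timestamps (UTC)
created_at <- as.POSIXct(c("2018-02-01 09:10:00", "2018-02-01 09:55:00",
                           "2018-02-01 10:30:00", "2018-02-01 12:05:00"),
                         tz = "UTC")

## hypothetical helper: count timestamps per `width_secs`-second interval;
## intervals start at multiples of width_secs since 1970-01-01 00:00 UTC,
## and intervals with no tweets are simply absent from the result
count_by_interval <- function(times, width_secs) {
  start <- as.numeric(times) %/% width_secs * width_secs
  counts <- table(start)
  data.frame(
    time = as.POSIXct(as.numeric(names(counts)), origin = "1970-01-01", tz = "UTC"),
    n = as.integer(counts)
  )
}

counts <- count_by_interval(created_at, 2 * 60 * 60)
```

Here the two tweets before 10:00 fall into the 08:00 to 10:00 bin, and the other two each land in their own bin.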

Positive/negative sentiment

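Next, some sentiment analysis of the tweets so far: each tweet's text is cleaned, scored for positive/negative sentiment with syuzhet, and then averaged within one-hour intervals.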

```r
## clean up the text a bit (rm "RT" prefixes, hash symbols, mentions, and links)
rt$text2 <- gsub(
  "^RT:?\\s{0,}|#|@\\S+|https?[[:graph:]]+", "", rt$text)

## convert to lower case
rt$text2 <- tolower(rt$text2)

## trim extra white space
rt$text2 <- gsub("^\\s{1,}|\\s{1,}$", "", rt$text2)
rt$text2 <- gsub("\\s{2,}", " ", rt$text2)

## estimate pos/neg sentiment for each tweet
rt$sentiment <- syuzhet::get_sentiment(rt$text2, "syuzhet")

## function to round times down to the start of each `sec`-second interval
round_time <- function(x, sec) {
  as.POSIXct(hms::hms(as.numeric(x) %/% sec * sec))
}

## plot by specified time interval (one-hour intervals)
rt %>%
  mutate(time = round_time(created_at, 60 * 60)) %>%
  group_by(time) %>%
  summarise(sentiment = mean(sentiment, na.rm = TRUE)) %>%
  mutate(valence = ifelse(sentiment > 0L, "Positive", "Negative")) %>%
  ggplot(aes(x = time, y = sentiment)) +
  geom_smooth(method = "loess", span = .1,
              colour = "#aa11aadd", fill = "#bbbbbb11") +
  geom_point(aes(fill = valence, colour = valence),
             shape = 21, alpha = .6, size = 3.5) +
  theme_minimal(base_size = 15, base_family = "Roboto Condensed") +
  theme(legend.position = "none",
        axis.text = element_text(colour = "#222222"),
        plot.title = element_text(size = rel(1.7), face = "bold"),
        plot.subtitle = element_text(size = rel(1.3)),
        plot.caption = element_text(colour = "#444444")) +
  scale_fill_manual(
    values = c(Positive = "#2244ee", Negative = "#dd2222")) +
  scale_colour_manual(
    values = c(Positive = "#001155", Negative = "#550000")) +
  labs(x = NULL, y = NULL,
       title = "Sentiment (valence) of rstudio::conf tweets over time",
       subtitle = "Mean sentiment of tweets aggregated in one-hour intervals",
       caption = "\nSource: Data gathered using rtweet. Sentiment analysis done using syuzhet")
```

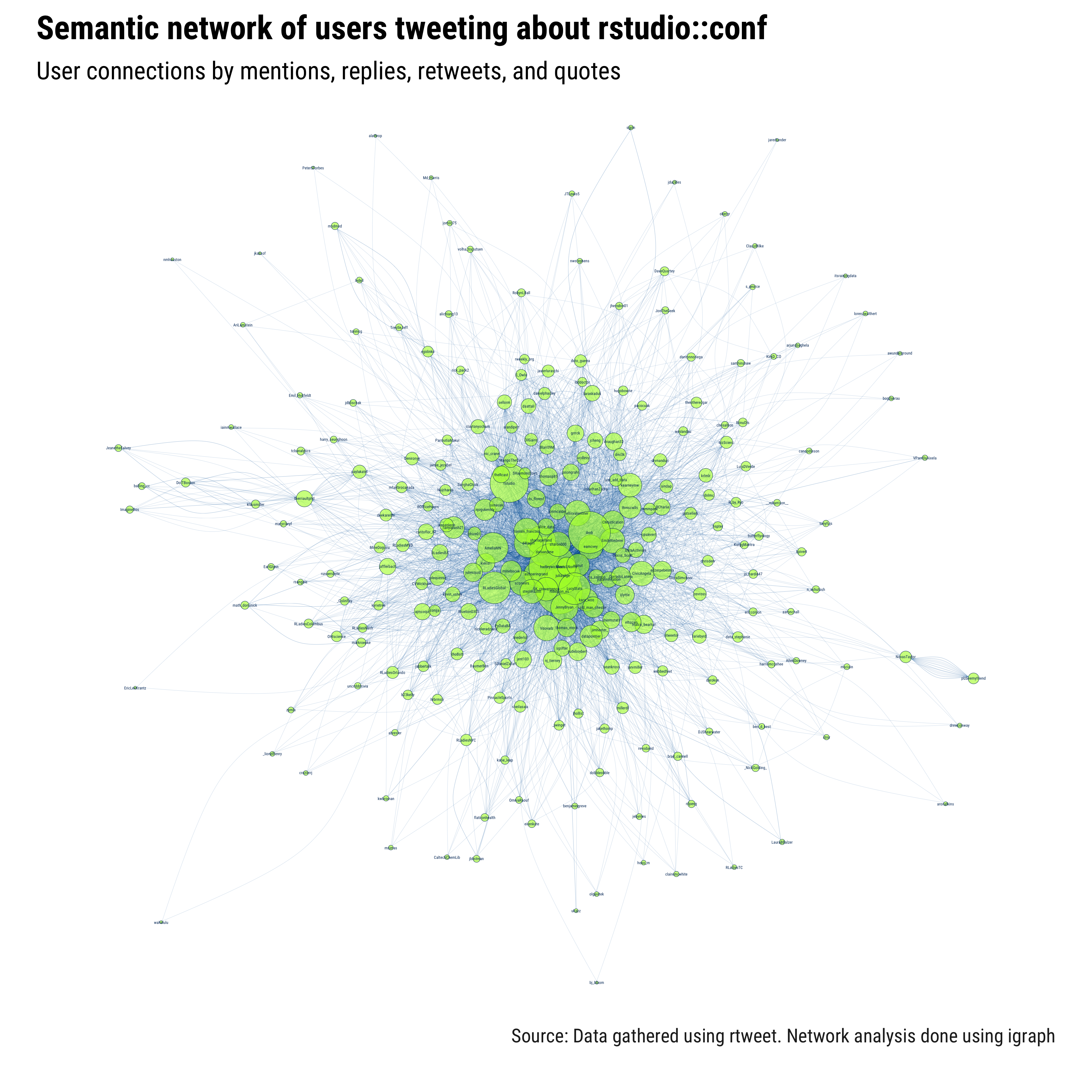
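The text-cleaning steps in the sentiment code are easier to sanity-check as a single function. `clean_tweet_text()` is a hypothetical wrapper that applies the same regular expressions in the same order; the sample tweet is invented.

```r
## hypothetical wrapper around the cleaning steps used above
clean_tweet_text <- function(text) {
  ## drop a leading "RT", hash symbols, @mentions, and links
  x <- gsub("^RT:?\\s{0,}|#|@\\S+|https?[[:graph:]]+", "", text)
  ## convert to lower case
  x <- tolower(x)
  ## trim leading/trailing white space, then collapse internal runs
  x <- gsub("^\\s{1,}|\\s{1,}$", "", x)
  gsub("\\s{2,}", " ", x)
}

cleaned <- clean_tweet_text("RT @someone: #rstats is  great https://t.co/abc123")
```

Note that the plotting code labels an hourly mean of exactly zero as "Negative", because it tests `sentiment > 0L`.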

Semantic networks

The code below provides a quick and dirty visualization of the semantic network (connections via retweet, quote, mention, or reply) found in the data.

```r
## unlist observations into long-form data frame
unlist_df <- function(...) {
  dots <- lapply(list(...), unlist)
  tibble::as_tibble(dots)
}

## iterate by row
row_dfs <- lapply(
  seq_len(nrow(rt)), function(i)
    unlist_df(from_screen_name = rt$screen_name[i],
              reply = rt$reply_to_screen_name[i],
              mention = rt$mentions_screen_name[i],
              quote = rt$quoted_screen_name[i],
              retweet = rt$retweet_screen_name[i])
)

## bind rows, gather (to long), convert to matrix, and filter out NAs
rdf <- dplyr::bind_rows(row_dfs)
rdf <- tidyr::gather(rdf, interaction_type, to_screen_name, -from_screen_name)
mat <- as.matrix(rdf[, -2])
mat <- mat[apply(mat, 1, function(i) !any(is.na(i))), , drop = FALSE]

## get rid of self references
mat <- mat[mat[, 1] != mat[, 2], , drop = FALSE]

## keep only users who appear at least twice as a source (column 1)
## AND at least twice as a target (column 2)
apps1 <- table(mat[, 1])
apps1 <- apps1[apps1 > 1L]
apps2 <- table(mat[, 2])
apps2 <- apps2[apps2 > 1L]
apps <- names(apps1)[names(apps1) %in% names(apps2)]
mat <- mat[mat[, 1] %in% apps & mat[, 2] %in% apps, , drop = FALSE]

## create graph object
g <- igraph::graph_from_edgelist(mat)

## calculate size attribute (and transform to fit)
matcols <- factor(c(mat[, 1], mat[, 2]), levels = names(igraph::V(g)))
size <- table(screen_name = matcols)
size <- (log(size) + sqrt(size)) / 3

## reorder size values to match the vertex order of g
size <- size[match(names(igraph::V(g)), names(size))]

## plot network
par(mar = c(12, 6, 15, 6))
plot(g,
     edge.curved = FALSE,
     margin = -.05,
     edge.arrow.size = 0,
     edge.arrow.width = 0,
     vertex.color = "#ADFF2F99",
     vertex.size = size,
     vertex.frame.color = "#003366",
     vertex.label.color = "#003366",
     vertex.label.cex = .8,
     vertex.label.family = "Roboto Condensed",
     edge.color = "#0066aa",
     edge.width = .2,
     main = "")
par(mar = c(9, 6, 9, 6))
title("Semantic network of users tweeting about rstudio::conf",
      adj = 0, family = "Roboto Condensed", cex.main = 6.5)
mtext("Source: Data gathered using rtweet. Network analysis done using igraph",
      side = 1, line = 0, adj = 1.0, cex = 3.8,
      family = "Roboto Condensed", col = "#222222")
mtext("User connections by mentions, replies, retweets, and quotes",
      side = 3, line = -4.25, adj = 0,
      family = "Roboto Condensed", cex = 4.9)
```
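The edge filtering in the network code keeps only users who appear at least twice as the source and at least twice as the target of an interaction, after self-references are removed. `filter_edges()` is a hypothetical restatement of that step with the threshold exposed as `min_n`, run here on a made-up edge list.

```r
## hypothetical helper: column 1 = source user, column 2 = target user
filter_edges <- function(mat, min_n = 2L) {
  ## remove self-references
  mat <- mat[mat[, 1] != mat[, 2], , drop = FALSE]
  ## count appearances as source and as target
  from_n <- table(mat[, 1])
  to_n <- table(mat[, 2])
  ## keep users reaching min_n on BOTH sides, then edges among them
  keep <- intersect(names(from_n)[from_n >= min_n],
                    names(to_n)[to_n >= min_n])
  mat[mat[, 1] %in% keep & mat[, 2] %in% keep, , drop = FALSE]
}

## made-up edge list; "b" -> "b" is a self-reference
edges <- matrix(c("a", "b",
                  "a", "b",
                  "b", "a",
                  "b", "a",
                  "a", "c",
                  "c", "a",
                  "b", "b"),
                ncol = 2, byrow = TRUE)
kept <- filter_edges(edges)
```

User "c" appears only once on each side, so both of its edges are dropped, leaving the four edges between "a" and "b".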

Ideally, the network visualization would be an interactive, searchable graphic. Since it's not, I've printed out the node size values below.

```r
nodes <- as_tibble(sort(size, decreasing = TRUE))
nodes$rank <- seq_len(nrow(nodes))
nodes$screen_name <- paste0(
  '<a href="https://twitter.com/', nodes$screen_name,
  '">@', nodes$screen_name, '</a>')
dplyr::select(nodes, rank, screen_name, log_n = n)
```

| rank | screen_name | log_n |
|---|---|---|
| 1 | @hadleywickham | 11.0164053 |
| 2 | @robinson_es | 10.7205729 |
| 3 | @drob | 9.8275619 |
| 4 | @rstudio | 9.0122754 |
| 5 | @juliasilge | 8.3156539 |
| 6 | @RLadiesGlobal | 7.4782542 |
| 7 | @LucyStats | 7.4112162 |
| 8 | @AmeliaMN | 7.1228108 |
| 9 | @dataandme | 7.0632396 |
| 10 | @sharlagelfand | 6.7674473 |
| 11 | @d4tagirl | 6.7547438 |
| 12 | @JennyBryan | 6.4124132 |
| 13 | @Voovarb | 6.0284818 |
| 14 | @romain_francois | 6.0284818 |
| 15 | @ellisvalentiner | 5.9837260 |
| 16 | @EmilyRiederer | 5.7999183 |
| 17 | @CivicAngela | 5.7842362 |
| 18 | @sharon000 | 5.7842362 |
| 19 | @sconvers | 5.7049294 |
| 20 | @astroeringrand | 5.6888842 |
| 21 | @eamcvey | 5.6888842 |
| 22 | @stephhazlitt | 5.6403685 |
| 23 | @kearneymw | 5.5581979 |
| 24 | @SK_convergence | 5.5248529 |
| 25 | @visnut | 5.3186034 |
| 26 | @CMastication | 5.2831274 |
| 27 | @kara_woo | 5.2652635 |
| 28 | @datapointier | 5.1929426 |
| 29 | @thmscwlls | 5.1562458 |
| 30 | @tanyacash21 | 5.1191779 |
| 31 | @njogukennly | 5.0056364 |
| 32 | @old_man_chester | 4.8683901 |
| 33 | @minebocek | 4.8281541 |
| 34 | @elhazen | 4.5540652 |
| 35 | @CorradoLanera | 4.5097581 |
| 36 | @ijlyttle | 4.4192141 |
| 37 | @AlexisLNorris | 4.3961566 |
| 38 | @kierisi | 4.3961566 |
| 39 | @juliesquid | 4.3961566 |
| 40 | @malco_bearhat | 4.3259141 |
| 41 | @thomas_mock | 4.3259141 |
| 42 | @nj_tierney | 4.3021301 |
| 43 | @jimhester_ | 4.3021301 |
| 44 | @edzerpebesma | 4.3021301 |
| 45 | @thomasp85 | 4.2296099 |
| 46 | @ds_floresf | 4.2296099 |
| 47 | @rudeboybert | 4.2050318 |
| 48 | @dvaughan32 | 4.1045644 |
| 49 | @shermstats | 4.0267962 |
| 50 | @jent103 | 4.0267962 |
| 51 | @grrrck | 3.9195611 |
| 52 | @theRcast | 3.9195611 |
| 53 | @jasongrahn | 3.8920720 |
| 54 | @_RCharlie | 3.8920720 |
| 55 | @alice_data | 3.8642952 |
| 56 | @jafflerbach | 3.8362222 |
| 57 | @ajmcoqui | 3.8362222 |
| 58 | @therriaultphd | 3.8078439 |
| 59 | @Bluelion0305 | 3.7791511 |
| 60 | @sgrifter | 3.7501339 |
| 61 | @RLadiesBA | 3.7501339 |
| 62 | @yutannihilation | 3.7207821 |
| 63 | @taraskaduk | 3.6910847 |
| 64 | @cantoflor_87 | 3.6910847 |
| 65 | @jonmcalder | 3.6910847 |
| 66 | @jcheng | 3.6306068 |
| 67 | @nic_crane | 3.5998014 |
| 68 | @seankross | 3.5998014 |
| 69 | @ma_salmon | 3.5686007 |
| 70 | @DataActivism | 3.5369905 |
| 71 | @cpsievert | 3.5049555 |
| 72 | @gdequeiroz | 3.5049555 |
| 73 | @kevin_ushey | 3.5049555 |
| 74 | @daattali | 3.4724797 |
| 75 | @Dorris_Scott | 3.4724797 |
| 76 | @Blair09M | 3.4395462 |
| 77 | @PyDataBA | 3.3722321 |
| 78 | @sellorm | 3.3722321 |
| 79 | @claytonyochum | 3.3378116 |
| 80 | @simecek | 3.3378116 |
| 81 | @MangoTheCat | 3.3028532 |
| 82 | @lariebyrd | 3.2673334 |
| 83 | @krlmlr | 3.2312268 |
| 84 | @jessenleon | 3.1945063 |
| 85 | @paylakatel | 3.1191041 |
| 86 | @drvnanduri | 3.0803567 |
| 87 | @bhive01 | 3.0803567 |
| 88 | @zevross | 3.0408634 |
| 89 | @RLadiesMVD | 3.0408634 |
| 90 | @aindap | 3.0005839 |
| 91 | @ucdlevy | 3.0005839 |
| 92 | @ntweetor | 3.0005839 |
| 93 | @tnederlof | 2.9594743 |
| 94 | @JonathanZadra | 2.9594743 |
| 95 | @Denironyx | 2.9594743 |
| 96 | @just_add_data | 2.9594743 |
| 97 | @OilGains | 2.9594743 |
| 98 | @duto_guerra | 2.9174869 |
| 99 | @dmi3k | 2.9174869 |
| 100 | @jarvmiller | 2.9174869 |
| 101 | @javierluraschi | 2.8745690 |
| 102 | @danielphadley | 2.8745690 |
| 103 | @bizScienc | 2.8745690 |
| 104 | @SanghaChick | 2.8745690 |
| 105 | @CVWickham | 2.8745690 |
| 106 | @nicoleradziwill | 2.8745690 |
| 107 | @patsellers | 2.8306631 |
| 108 | @RhoBott | 2.8306631 |
| 109 | @RLadiesOrlando | 2.8306631 |
| 110 | @deekareithi | 2.8306631 |
| 111 | @GioraSimchoni | 2.7857054 |
| 112 | @BaumerBen | 2.7857054 |
| 113 | @mmmpork | 2.7857054 |
| 114 | @alandipert | 2.7396253 |
| 115 | @NovasTaylor | 2.7396253 |
| 116 | @millerdl | 2.7396253 |
| 117 | @sheilasaia | 2.7396253 |
| 118 | @RLadiesNYC | 2.6923444 |
| 119 | @conjja | 2.6923444 |
| 120 | @SHaymondSays | 2.6437752 |
| 121 | @PinnacleSports | 2.6437752 |
| 122 | @MineDogucu | 2.5938194 |
| 123 | @Nujcharee | 2.5938194 |
| 124 | @S_Owla | 2.5938194 |
| 125 | @theotheredgar | 2.5938194 |
| 126 | @chrisderv | 2.5938194 |
| 127 | @mfairbrocanada | 2.5938194 |
| 128 | @plzbeemyfriend | 2.5423660 |
| 129 | @dnlmc | 2.5423660 |
| 130 | @egolinko | 2.5423660 |
| 131 | @data_stephanie | 2.4892894 |
| 132 | @jabbertalk | 2.4892894 |
| 133 | @jamie_jezebel | 2.4892894 |
| 134 | @OHIscience | 2.4892894 |
| 135 | @webbedfeet | 2.4344460 |
| 136 | @ParmutiaMakui | 2.3776708 |
| 137 | @R_by_Ryo | 2.3776708 |
| 138 | @SDanielZafar1 | 2.3776708 |
| 139 | @hrbrmstr | 2.3776708 |
| 140 | @LuisDVerde | 2.3187730 |
| 141 | @_jwinget | 2.3187730 |
| 142 | @ROfficeHours | 2.2575296 |
| 143 | @rweekly_org | 2.2575296 |
| 144 | @b23kelly | 2.2575296 |
| 145 | @kyrietree | 2.2575296 |
| 146 | @hugobowne | 2.2575296 |
| 147 | @ibddoctor | 2.1936778 |
| 148 | @hspter | 2.1936778 |
| 149 | @jhollist | 2.1936778 |
| 150 | @jakethomp | 2.1936778 |
| 151 | @markroepke | 2.1936778 |
| 152 | @pacocuak | 2.1936778 |
| 153 | @DaveQuartey | 2.1269049 |
| 154 | @DoITBoston | 2.1269049 |
| 155 | @wmlandau | 2.1269049 |
| 156 | @RLadiesColumbus | 2.1269049 |
| 157 | @RLadiesNash | 2.1269049 |
| 158 | @rick_pack2 | 2.0568335 |
| 159 | @jo_hardin47 | 2.0568335 |
| 160 | @butterflyology | 2.0568335 |
| 161 | @RiinuOts | 2.0568335 |
| 162 | @darokun | 2.0568335 |
| 163 | @klausmiller | 1.9830028 |
| 164 | @dantonnoriega | 1.9830028 |
| 165 | @katie_leap | 1.9830028 |
| 166 | @kpivert | 1.9830028 |
| 167 | @brad_cannell | 1.9830028 |
| 168 | @dobbleobble | 1.9830028 |
| 169 | @_ColinFay | 1.9830028 |
| 170 | @math_dominick | 1.9048400 |
| 171 | @RobynLBall | 1.9048400 |
| 172 | @ericcolson | 1.9048400 |
| 173 | @KurggMantra | 1.9048400 |
| 174 | @tcbanalytics | 1.9048400 |
| 175 | @flatironhealth | 1.8216209 |
| 176 | @eirenkate | 1.8216209 |
| 177 | @revodavid | 1.8216209 |
| 178 | @harrismcgehee | 1.7324082 |
| 179 | @jherndon01 | 1.7324082 |
| 180 | @_NickGolding_ | 1.7324082 |
| 181 | @samhinshaw | 1.7324082 |
| 182 | @DJShearwater | 1.7324082 |
| 183 | @runnersbyte | 1.7324082 |
| 184 | @ImagineBos | 1.7324082 |
| 185 | @modmed | 1.7324082 |
| 186 | @rdpeng | 1.7324082 |
| 187 | @JonTheGeek | 1.7324082 |
| 188 | @chrisalbon | 1.7324082 |
| 189 | @n_ashutosh | 1.7324082 |
| 190 | @jblistman | 1.6359562 |
| 191 | @balling_cc | 1.6359562 |
| 192 | @__mharrison__ | 1.6359562 |
| 193 | @TrestleJeff | 1.6359562 |
| 194 | @EarlGlynn | 1.6359562 |
| 195 | @OmniaRaouf | 1.6359562 |
| 196 | @jdblischak | 1.6359562 |
| 197 | @JeanetheFalvey | 1.6359562 |
| 198 | @harry_seunghoon | 1.6359562 |
| 199 | @alichiang13 | 1.6359562 |
| 200 | @volha_tryputsen | 1.5305538 |
| 201 | @abresler | 1.5305538 |
| 202 | @aaronchall | 1.5305538 |
| 203 | @AllenDowney | 1.5305538 |
| 204 | @tonyfujs | 1.5305538 |
| 205 | @maryclaryf | 1.5305538 |
| 206 | @uncmbbtrivia | 1.5305538 |
| 207 | @KirkD_CO | 1.4137497 |
| 208 | @rsangole | 1.4137497 |
| 209 | @msciain | 1.4137497 |
| 210 | @jebyrnes | 1.4137497 |
| 211 | @ledell | 1.4137497 |
| 212 | @ben_d_best | 1.4137497 |
| 213 | @canoodleson | 1.4137497 |
| 214 | @benjamingreve | 1.4137497 |
| 215 | @tonmcg | 1.4137497 |
| 216 | @zymla | 1.4137497 |
| 217 | @strnr | 1.2818353 |
| 218 | @LauraBBalzer | 1.2818353 |
| 219 | @JTLewis5 | 1.2818353 |
| 220 | @clairemcwhite | 1.2818353 |
| 221 | @s_pearce | 1.2818353 |
| 222 | @jomilo75 | 1.2818353 |
| 223 | @nwstephens | 1.2818353 |
| 224 | @Emil_Hvitfeldt | 1.2818353 |
| 225 | @kwbroman | 1.2818353 |
| 226 | @VParrillaAixela | 1.2818353 |
| 227 | @ClausWilke | 1.1287648 |
| 228 | @sgpln | 1.1287648 |
| 229 | @lorenzwalthert | 1.1287648 |
| 230 | @hoxo_m | 1.1287648 |
| 231 | @obergr | 1.1287648 |
| 232 | @drewconway | 1.1287648 |
| 233 | @iainmwallace | 1.1287648 |
| 234 | @arjunsbaghela | 1.1287648 |
| 235 | @_lionelhenry | 1.1287648 |
| 236 | @olgavitek | 1.1287648 |
| 237 | @crozierrj | 1.1287648 |
| 238 | @msimas | 1.1287648 |
| 239 | @CaltechChemLib | 1.1287648 |
| 240 | @AriLamstein | 1.1287648 |
| 241 | @bogdanrau | 1.1287648 |
| 242 | @RLadiesTC | 1.1287648 |
| 243 | @jduckles | 0.9435544 |
| 244 | @Md_Harris | 0.9435544 |
| 245 | @aronatkins | 0.9435544 |
| 246 | @awunderground | 0.9435544 |
| 247 | @ukacz | 0.9435544 |
| 248 | @itsrainingdata | 0.9435544 |
| 249 | @EricLeeKrantz | 0.7024536 |
| 250 | @PeterSForbes | 0.7024536 |
| 251 | @wahalulu | 0.7024536 |
| 252 | @jkassof | 0.7024536 |
| 253 | @nmhouston | 0.7024536 |
| 254 | @alathrop | 0.7024536 |
| 255 | @bj_bloom | 0.7024536 |
| 256 | @jaredlander | 0.7024536 |
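The `log_n` column holds the node size computed in the network code, `(log(n) + sqrt(n)) / 3`, where `n` is the number of times a user appears in either column of the filtered edge list, rather than a plain `log(n)`. Because that transform is strictly increasing for `n >= 1`, the raw count can be recovered numerically. `count_from_size()` below is a hypothetical helper built on base R's `uniroot()`; for example, the two smallest values in the table (0.7024536 and 0.9435544) correspond to n = 2 and n = 3.

```r
## node size used in the network plot: (log(n) + sqrt(n)) / 3
node_size <- function(n) (log(n) + sqrt(n)) / 3

## hypothetical inverse: find the n >= 1 with node_size(n) == s by root search;
## s must lie between node_size(1) = 1/3 and node_size(upper)
count_from_size <- function(s, upper = 1e5) {
  vapply(s, function(v) {
    uniroot(function(n) node_size(n) - v, lower = 1, upper = upper)$root
  }, numeric(1))
}

recovered <- round(count_from_size(c(0.7024536, 0.9435544)))
```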

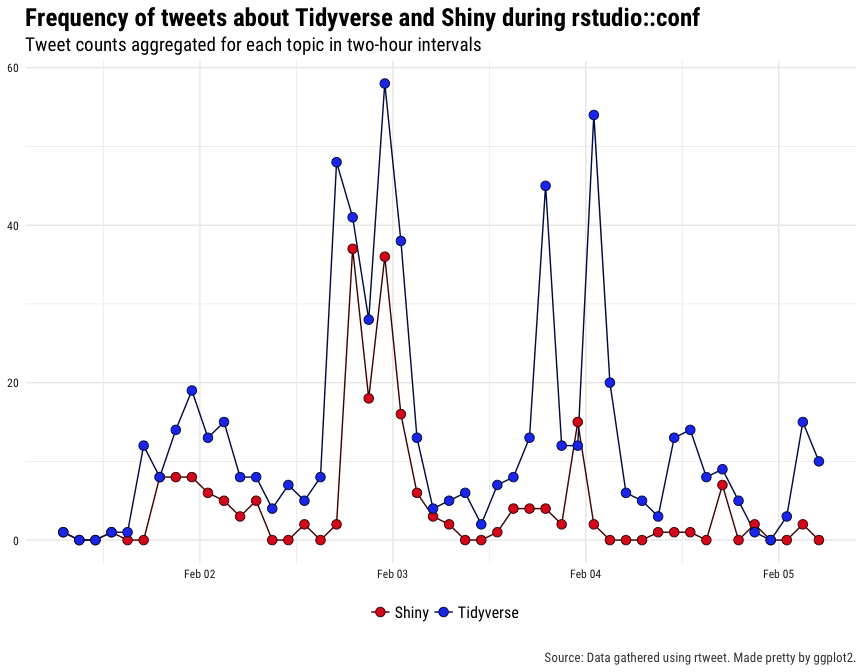

Tidyverse vs. Shiny

This code identifies tweets by topic, detecting mentions of the tidyverse [packages] and Shiny. It then plots the frequency of those tweets over time.

```r
rt %>%
  filter(created_at > "2018-02-01" & created_at < "2018-02-05") %>%
  mutate(
    text = tolower(text),
    tidyverse = str_detect(
      text, "dplyr|tidyeval|tidyverse|rlang|map|purrr|readr|tibble"),
    shiny = str_detect(text, "shiny|dashboard|interactiv")
  ) %>%
  select(created_at, tidyverse:shiny) %>%
  gather(pkg, mention, -created_at) %>%
  ## factor levels sort alphabetically ("shiny", "tidyverse"),
  ## so the labels follow that order
  mutate(pkg = factor(pkg, labels = c("Shiny", "Tidyverse"))) %>%
  filter(mention) %>%
  group_by(pkg) %>%
  ts_plot("2 hours") +
  geom_point(shape = 21, size = 3, aes(fill = pkg)) +
  theme_minimal(base_family = "Roboto Condensed") +
  scale_x_datetime(timezone = "America/Los_Angeles") +
  theme(legend.position = "bottom",
        legend.title = element_blank(),
        legend.text = element_text(size = rel(1.1)),
        axis.text = element_text(colour = "#222222"),
        plot.title = element_text(size = rel(1.7), face = "bold"),
        plot.subtitle = element_text(size = rel(1.3)),
        plot.caption = element_text(colour = "#444444")) +
  scale_fill_manual(
    values = c(Tidyverse = "#2244ee", Shiny = "#dd2222")) +
  scale_colour_manual(
    values = c(Tidyverse = "#001155", Shiny = "#550000")) +
  labs(x = NULL, y = NULL,
       title = "Frequency of tweets about Tidyverse and Shiny during rstudio::conf",
       subtitle = "Tweet counts aggregated for each topic in two-hour intervals",
       caption = "\nSource: Data gathered using rtweet. Made pretty by ggplot2.")
```
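One caveat with these keyword patterns: `str_detect()` matches substrings, so "map" also fires on words like "roadmap". The sketch below wraps the tidyverse terms in `\\b` word boundaries (using base R `grepl()` with `perl = TRUE`) and leaves the Shiny pattern as written. `detect_topics()` is a hypothetical helper and the sample tweets are invented.

```r
## same tidyverse terms as above, but each must match as a whole word
tidyverse_re <- "\\b(dplyr|tidyeval|tidyverse|rlang|map|purrr|readr|tibble)\\b"
## Shiny pattern left unchanged
shiny_re <- "shiny|dashboard|interactiv"

## hypothetical helper: one logical column per topic
detect_topics <- function(text) {
  text <- tolower(text)
  data.frame(
    tidyverse = grepl(tidyverse_re, text, perl = TRUE),
    shiny = grepl(shiny_re, text, perl = TRUE)
  )
}

tweets <- c("New purrr::map tricks!", "Our product roadmap",
            "Interactive Shiny dashboards")
topics <- detect_topics(tweets)
```

With the original substring pattern, "Our product roadmap" would count as a tidyverse tweet; with word boundaries it does not, while "purrr::map" still does.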

Word clouds

I didn't want to add a bunch more code, so here I'm sourcing the prep work/code I used to get word lists.

```r
source(file.path("R", "words.R"))
```
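Since `R/words.R` isn't shown here, the following is only a hypothetical sketch of one way to build a word-frequency table with `var` and `n` columns, which is the shape the `wordcloud::wordcloud()` calls below read from `shiny` and `tidyverse`. It is not the author's code; the tokenizer (splitting on anything other than letters, digits, and apostrophes) and the tiny stop word list are assumptions.

```r
## hypothetical helper, not the contents of R/words.R
word_counts <- function(text, stopwords) {
  ## lower-case, then split on anything that isn't a letter, digit, or apostrophe
  words <- unlist(strsplit(tolower(text), "[^a-z0-9']+"))
  ## drop empty strings and stop words
  words <- words[nchar(words) > 0 & !words %in% stopwords]
  counts <- sort(table(words), decreasing = TRUE)
  data.frame(var = names(counts), n = as.integer(counts),
             stringsAsFactors = FALSE)
}

## tiny illustrative stop word list (an assumption; real lists are longer)
my_stopwords <- c("the", "a", "is", "and", "of", "to")
wc <- word_counts(c("Shiny is great", "the shiny dashboard",
                    "Shiny and dashboards"), my_stopwords)
```

A table built this way could then be passed as `wordcloud::wordcloud(wc$var, wc$n, min.freq = 1)`. Note that this naive tokenizer treats "dashboard" and "dashboards" as different words.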

Shiny word cloud

This first word cloud depicts the most popular non-stopwords used in tweets about Shiny.

```r
par(mar = c(0, 0, 0, 0))
wordcloud::wordcloud(
  shiny$var, shiny$n, min.freq = 3,
  random.order = FALSE,
  random.color = FALSE,
  colors = gg_cols(5)
)
```

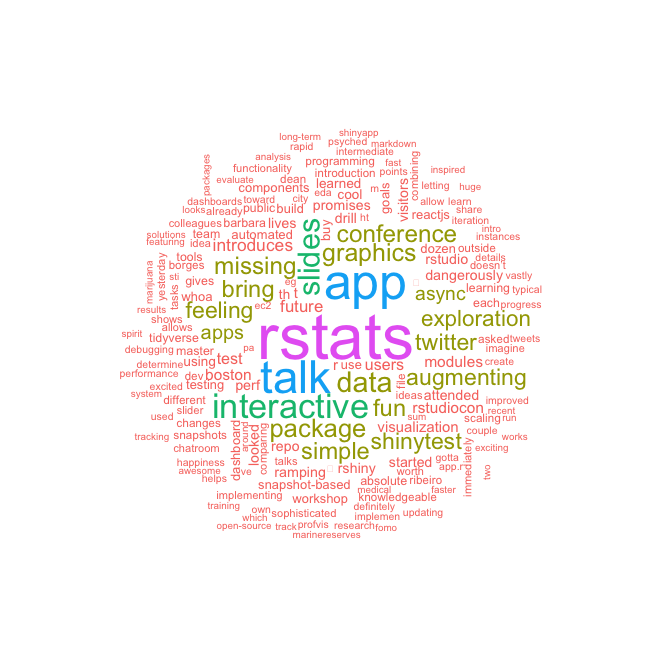

Tidyverse word cloud

The second word cloud depicts the most popular non-stopwords used in tweets about the tidyverse.

```r
par(mar = c(0, 0, 0, 0))
wordcloud::wordcloud(
  tidyverse$var, tidyverse$n, min.freq = 5,
  random.order = FALSE,
  random.color = FALSE,
  colors = gg_cols(5)
)
```