flrngel / Self Attentive Tensorflow

TensorFlow implementation of "A Structured Self-Attentive Sentence Embedding"

Stars: ✭ 189

Programming Languages

python

Projects that are alternatives to, or similar to, Self Attentive Tensorflow

Fastpunct

Punctuation restoration and spell correction experiments.

Stars: ✭ 121 (-35.98%)

Mutual labels: attention

Multihead Siamese Nets

Implementation of Siamese Neural Networks built upon multihead attention mechanism for text semantic similarity task.

Stars: ✭ 144 (-23.81%)

Mutual labels: attention

Rnn For Joint Nlu

Pytorch implementation of "Attention-Based Recurrent Neural Network Models for Joint Intent Detection and Slot Filling" (https://arxiv.org/abs/1609.01454)

Stars: ✭ 176 (-6.88%)

Mutual labels: attention

Absa keras

Keras Implementation of Aspect based Sentiment Analysis

Stars: ✭ 126 (-33.33%)

Mutual labels: attention

Vqa regat

Research Code for ICCV 2019 paper "Relation-aware Graph Attention Network for Visual Question Answering"

Stars: ✭ 129 (-31.75%)

Mutual labels: attention

Hey Jetson

Deep Learning based Automatic Speech Recognition with attention for the Nvidia Jetson.

Stars: ✭ 161 (-14.81%)

Mutual labels: attention

Nlp Models Tensorflow

Gathers machine learning and Tensorflow deep learning models for NLP problems, 1.13 < Tensorflow < 2.0

Stars: ✭ 1,603 (+748.15%)

Mutual labels: attention

Datastories Semeval2017 Task4

Deep-learning model presented in "DataStories at SemEval-2017 Task 4: Deep LSTM with Attention for Message-level and Topic-based Sentiment Analysis".

Stars: ✭ 184 (-2.65%)

Mutual labels: attention

Prediction Flow

Deep-learning-based CTR models implemented in PyTorch

Stars: ✭ 138 (-26.98%)

Mutual labels: attention

Attentionn

All about attention in neural networks. Soft attention, attention maps, local and global attention and multi-head attention.

Stars: ✭ 175 (-7.41%)

Mutual labels: attention

Image Caption Generator

A neural network that generates captions for an image using a CNN and an RNN with beam search.

Stars: ✭ 126 (-33.33%)

Mutual labels: attention

Multimodal Sentiment Analysis

Attention-based multimodal fusion for sentiment analysis

Stars: ✭ 172 (-8.99%)

Mutual labels: attention

Ccnet Pure Pytorch

Criss-Cross Attention for Semantic Segmentation in pure Pytorch with a faster and more precise implementation.

Stars: ✭ 124 (-34.39%)

Mutual labels: attention

Pyramid Attention Networks Pytorch

Implementation of Pyramid Attention Networks for Semantic Segmentation.

Stars: ✭ 182 (-3.7%)

Mutual labels: attention

Sightseq

Computer vision tools for fairseq, containing PyTorch implementation of text recognition and object detection

Stars: ✭ 116 (-38.62%)

Mutual labels: attention

Medical Transformer

Pytorch Code for "Medical Transformer: Gated Axial-Attention for Medical Image Segmentation"

Stars: ✭ 153 (-19.05%)

Mutual labels: attention

Graph attention pool

Attention over nodes in Graph Neural Networks using PyTorch (NeurIPS 2019)

Stars: ✭ 186 (-1.59%)

Mutual labels: attention

Deep Time Series Prediction

Seq2Seq, Bert, Transformer, WaveNet for time series prediction.

Stars: ✭ 183 (-3.17%)

Mutual labels: attention

Transformers.jl

Julia Implementation of Transformer models

Stars: ✭ 173 (-8.47%)

Mutual labels: attention

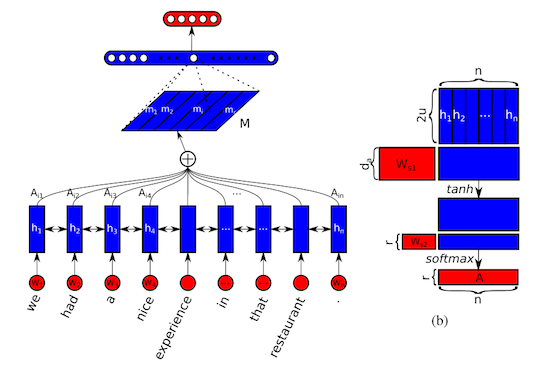

Self-Attentive-Tensorflow

TensorFlow implementation of "A Structured Self-Attentive Sentence Embedding"

You can read more about the concept in the paper named above.

Key Concept

Frobenius norm with attention
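
As a rough illustration of this key concept, the sketch below follows the definitions in the paper: an attention matrix A = softmax(W_s2 tanh(W_s1 H^T)), a sentence embedding M = AH, and a penalization term ‖AAᵀ − I‖²_F that discourages the attention rows from focusing on the same tokens. It is not the code from this repository. It uses NumPy rather than TensorFlow to stay self-contained, and the function names, shapes, and random inputs are assumptions made for the example.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(H, W_s1, W_s2):
    """Structured self-attention as defined in the paper (hypothetical helper).

    H:    (n, 2u)   hidden states for n tokens
    W_s1: (d_a, 2u) first projection
    W_s2: (r, d_a)  second projection, one row per attention hop
    Returns A with shape (r, n) and M = A @ H with shape (r, 2u).
    """
    A = softmax(W_s2 @ np.tanh(W_s1 @ H.T), axis=1)  # each row sums to 1 over tokens
    M = A @ H
    return A, M


def frobenius_penalty(A):
    """Squared Frobenius norm of (A A^T - I), the penalization term."""
    r = A.shape[0]
    diff = A @ A.T - np.eye(r)
    return float(np.sum(diff ** 2))


# Toy sizes chosen only for illustration.
rng = np.random.default_rng(0)
n, two_u, d_a, r = 6, 8, 5, 3
H = rng.normal(size=(n, two_u))
W_s1 = rng.normal(size=(d_a, two_u))
W_s2 = rng.normal(size=(r, d_a))

A, M = self_attention(H, W_s1, W_s2)
penalty = frobenius_penalty(A)
```

In training, the penalty would typically be scaled by a coefficient and added to the classification loss. It is zero when the hops attend to disjoint single tokens and grows as the rows of A overlap.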

Usage

Download the AG News dataset and place it under ./data as shown below

    $ tree ./data
    ./data
    └── ag_news_csv
        ├── classes.txt
        ├── readme.txt
        ├── test.csv
        ├── train.csv
        └── train_mini.csv

and then run training

    $ python train.py

Result

Accuracy 0.895

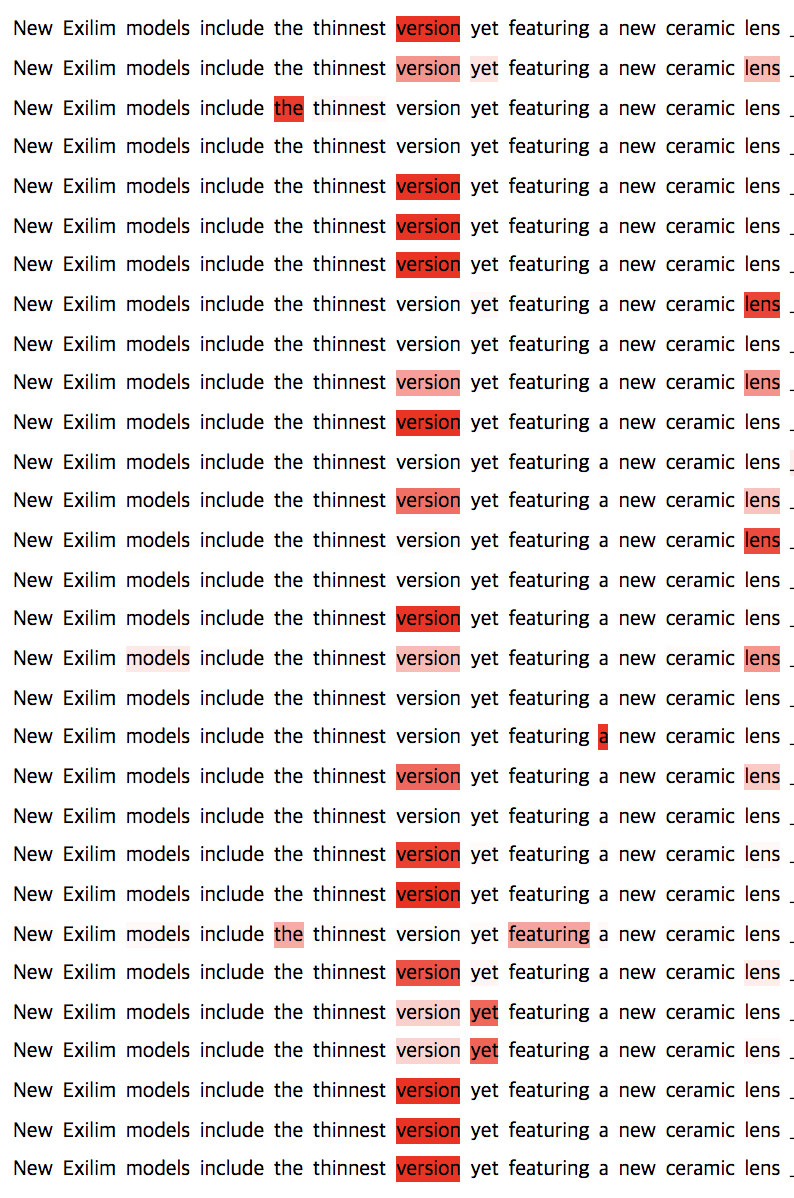

Visualization without penalization

Visualization with penalization

To-do list

- Support multiple datasets

Notes

This implementation does not use pretrained GloVe or word2vec embeddings.
