show-adapt-and-tell

This is the official code for the paper

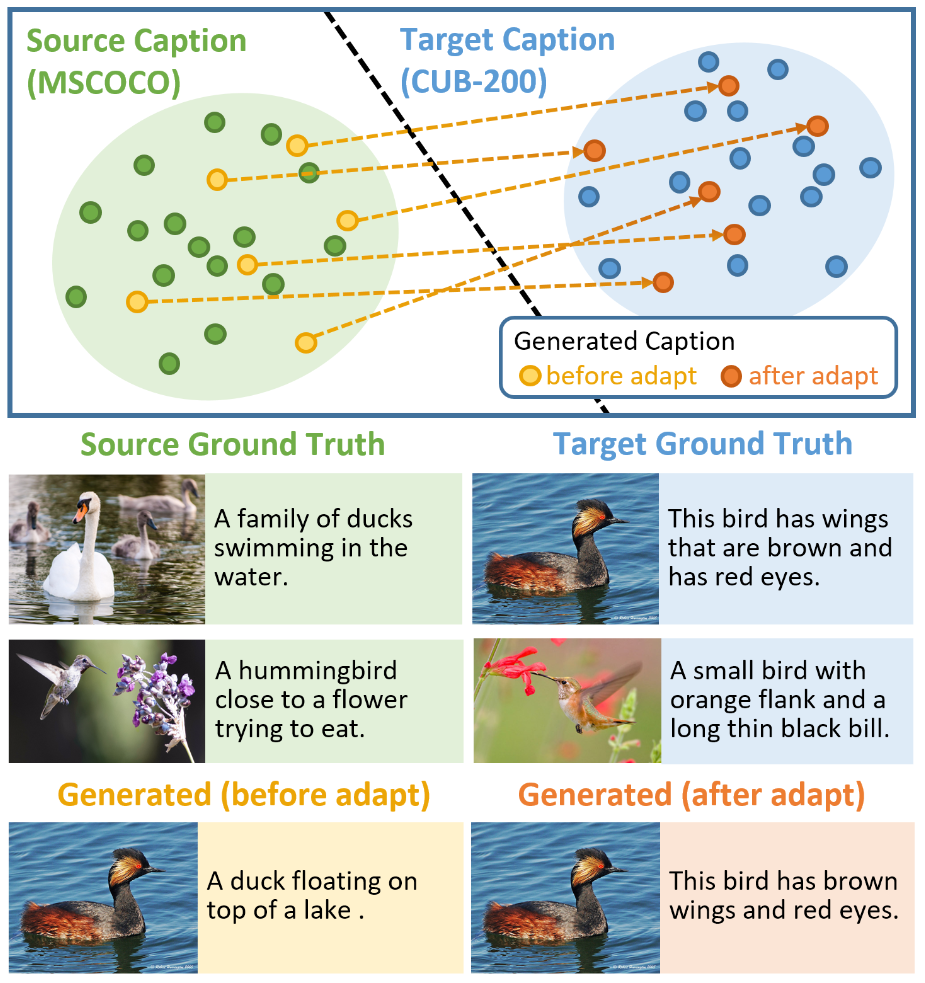

Show, Adapt and Tell: Adversarial Training of Cross-domain Image Captioner

Tseng-Hung Chen, Yuan-Hong Liao, Ching-Yao Chuang, Wan-Ting Hsu, Jianlong Fu, Min Sun

To appear in ICCV 2017

In this repository we provide:

- The cross-domain captioning models used in the paper

- Script for preprocessing MSCOCO data

- Script for preprocessing CUB-200-2011 captions

- Code for training the cross-domain captioning models

If you find this code useful for your research, please cite

@article{chen2017show,

title={Show, Adapt and Tell: Adversarial Training of Cross-domain Image Captioner},

author={Chen, Tseng-Hung and Liao, Yuan-Hong and Chuang, Ching-Yao and Hsu, Wan-Ting and Fu, Jianlong and Sun, Min},

journal={arXiv preprint arXiv:1705.00930},

year={2017}

}

Requirements

- Python 2.7

- TensorFlow 0.12.1

- Caffe

- OpenCV 2.4.9
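Since the versions above are pinned fairly tightly, it can help to confirm the environment before running anything. The following is a minimal sketch, not part of the repository: the helper names (parse_version, matches, check_environment) are made up for illustration, and it only assumes that the tensorflow and cv2 modules expose a __version__ string.

```python
import sys

# Versions pinned in the Requirements list.
REQUIRED = {"python": (2, 7), "tensorflow": (0, 12, 1), "cv2": (2, 4, 9)}


def parse_version(text):
    """Turn '0.12.1' or '0.12.0-rc1' into a tuple of leading integers."""
    parts = []
    for piece in text.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def matches(found, required):
    """True when the found version starts with the required components."""
    return parse_version(found)[:len(required)] == tuple(required)


def check_environment():
    """Return {name: (found_version_or_None, ok)} for each pinned dependency."""
    found = "%d.%d" % sys.version_info[:2]
    report = {"python": (found, matches(found, REQUIRED["python"]))}
    for name in ("tensorflow", "cv2"):
        try:
            module = __import__(name)
            found = getattr(module, "__version__", "")
        except ImportError:
            found = None
        ok = found is not None and matches(found, REQUIRED[name])
        report[name] = (found, ok)
    return report
```

Calling check_environment() returns a small report that shows which dependency, if any, deviates from the list.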

P.S. Please clone the repository with the --recursive flag:

# Make sure to clone with --recursive

git clone --recursive https://github.com/tsenghungchen/show-adapt-and-tell.git

Data Preprocessing

MSCOCO Captioning dataset

Feature Extraction

- Download the pretrained ResNet-101 model and place it under data-prepro/MSCOCO_preprocess/resnet_model/.

- Please modify the caffe path in data-prepro/MSCOCO_preprocess/extract_resnet_coco.py.

- Go to data-prepro/MSCOCO_preprocess and run the following script: ./download_mscoco.sh for downloading images and extracting features.
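Modifying the caffe path usually means pointing Python at the python/ directory of a local Caffe checkout. The sketch below illustrates the idea; the helper name add_caffe_to_path and the example location are hypothetical, and the actual variable used in extract_resnet_coco.py may be named differently.

```python
import os
import sys


def add_caffe_to_path(caffe_root):
    """Prepend <caffe_root>/python to sys.path so `import caffe` can resolve."""
    caffe_python = os.path.join(os.path.expanduser(caffe_root), "python")
    if caffe_python not in sys.path:
        sys.path.insert(0, caffe_python)
    return caffe_python


# Hypothetical location; replace it with your own Caffe checkout.
# add_caffe_to_path("~/caffe")
# import caffe
```

The import is left commented out so the snippet stays runnable on machines without Caffe installed.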

Captions Tokenization

- Clone the NeuralTalk2 repository and head over to the coco/ folder and run the IPython notebook to generate a json file for Karpathy split: coco_raw.json.

- Run the following script: ./prepro_mscoco_caption.sh for downloading and tokenizing captions.

- Run python prepro_coco_annotation.py to generate annotation json file for testing.
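For readers unfamiliar with caption tokenization, the sketch below shows a common recipe: lowercase, strip punctuation, split on whitespace, and map rare words to a placeholder. It is an illustration only and not the logic of prepro_mscoco_caption.sh; the UNK token, the min_count threshold, and all function names are assumptions.

```python
import re
from collections import Counter

UNK = "UNK"  # hypothetical placeholder for out-of-vocabulary words


def tokenize(caption):
    """Lowercase a caption, replace punctuation with spaces, and split."""
    cleaned = re.sub(r"[^a-z0-9 ]", " ", caption.lower())
    return cleaned.split()


def build_vocab(tokenized_captions, min_count=5):
    """Keep words that occur at least min_count times across all captions."""
    counts = Counter(w for tokens in tokenized_captions for w in tokens)
    return sorted(w for w, c in counts.items() if c >= min_count)


def encode(tokens, vocab):
    """Replace words outside the vocabulary with the UNK placeholder."""
    vocab_set = set(vocab)
    return [t if t in vocab_set else UNK for t in tokens]
```

For example, tokenize("A small bird, with a RED head.") yields the seven lowercase words of the sentence with the comma and period removed.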

CUB-200-2011 with Descriptions

Feature Extraction

- Run the script ./download_cub.sh to download the images in CUB-200-2011.

- Please modify the input/output path in data-prepro/MSCOCO_preprocess/extract_resnet_coco.py to extract and pack features in CUB-200-2011.

Captions Tokenization

- Download the description data.

- Run python get_split.py to generate dataset split following the ECCV16 paper "Generating Visual Explanations".

- Run python prepro_cub_annotation.py to generate annotation json file for testing.

- Run python CUB_preprocess_token.py for tokenization.
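The annotation json file for testing is presumably consumed by a caption evaluation toolkit. As a rough sketch, and assuming it follows the MSCOCO caption annotation layout (the real output of prepro_cub_annotation.py may differ), a conversion from a plain image-to-captions mapping could look like this. The function name and input structure are hypothetical.

```python
import json


def to_coco_annotations(captions_by_image):
    """Build a COCO-caption-style dict from {image_id: [caption, ...]}."""
    images = []
    annotations = []
    next_id = 0
    for image_id in sorted(captions_by_image):
        images.append({"id": image_id})
        for caption in captions_by_image[image_id]:
            annotations.append(
                {"image_id": image_id, "id": next_id, "caption": caption}
            )
            next_id += 1
    return {
        "images": images,
        "annotations": annotations,
        "type": "captions",
        "info": {},
        "licenses": [],
    }


def save_annotations(captions_by_image, path):
    """Write the annotation dict to a json file at the given path."""
    with open(path, "w") as handle:
        json.dump(to_coco_annotations(captions_by_image), handle)
```

Each caption gets its own annotation entry, so an image with several reference descriptions appears once under images and several times under annotations.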

Models from the paper

Pretrained Models

Download all pretrained and adaptation models:

- MSCOCO pretrained model

- CUB-200-2011 adaptation model

- TGIF adaptation model

- Flickr30k adaptation model

Example Results

Here are some example results where the captions are generated from these models:

Training

The training code is under the show-adapt-tell/ folder.

Run python main.py once for each of the two training steps:

Training the source model with paired image-caption data

Please set the Boolean value of "G_is_pretrain" to True in main.py to start pretraining the generator.

Training the cross-domain captioner with unpaired data

After pretraining, set "G_is_pretrain" to False to start training the cross-domain model.
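The two steps amount to one script whose behavior is switched by a single Boolean. The sketch below only illustrates that control flow; the function bodies are placeholders, every name except G_is_pretrain is hypothetical, and it does not reproduce what main.py actually does.

```python
def pretrain_generator(paired_data):
    """Placeholder for step 1: train on paired source image-caption data."""
    return "pretrained on %d source pairs" % len(paired_data)


def train_cross_domain(unpaired_images, unpaired_captions):
    """Placeholder for step 2: adapt using unpaired target-domain data."""
    return "adapted with %d target images and %d target captions" % (
        len(unpaired_images),
        len(unpaired_captions),
    )


def run(G_is_pretrain, paired_data, unpaired_images, unpaired_captions):
    """Dispatch to one of the two training steps based on the flag."""
    if G_is_pretrain:
        return pretrain_generator(paired_data)
    return train_cross_domain(unpaired_images, unpaired_captions)
```

In this picture, a full training run is two invocations: first with G_is_pretrain set to True, then again with it set to False.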

License

Free for personal or research use; for commercial use please contact me.