soobinseo / Transformer Tts

Licence: mit

A Pytorch Implementation of "Neural Speech Synthesis with Transformer Network"

Stars: ✭ 418

Programming Languages

python


Projects that are alternatives of or similar to Transformer Tts

ttslearn

ttslearn: Library for Pythonで学ぶ音声合成 (Text-to-speech with Python)

Stars: ✭ 158 (-62.2%)

Mutual labels: text-to-speech, tts, attention-mechanism

AdaSpeech

AdaSpeech: Adaptive Text to Speech for Custom Voice

Stars: ✭ 108 (-74.16%)

Mutual labels: text-to-speech, tts, transformer

Zero-Shot-TTS

Unofficial Implementation of Zero-Shot Text-to-Speech for Text-Based Insertion in Audio Narration

Stars: ✭ 33 (-92.11%)

Mutual labels: text-to-speech, tts, transformer

Cognitive Speech Tts

Microsoft Text-to-Speech API sample code in several languages, part of Cognitive Services.

Stars: ✭ 312 (-25.36%)

Mutual labels: text-to-speech, tts, transformer

google-translate-tts

Node library for Google Translate TTS (Text-to-Speech) API

Stars: ✭ 23 (-94.5%)

Mutual labels: text-to-speech, tts

Voice Builder

An opensource text-to-speech (TTS) voice building tool

Stars: ✭ 362 (-13.4%)

Mutual labels: text-to-speech, tts

Neural sp

End-to-end ASR/LM implementation with PyTorch

Stars: ✭ 408 (-2.39%)

Mutual labels: attention-mechanism, transformer

Parakeet

PAddle PARAllel text-to-speech toolKIT (supporting WaveFlow, WaveNet, Transformer TTS and Tacotron2)

Stars: ✭ 279 (-33.25%)

Mutual labels: text-to-speech, tts

editts

Official implementation of EdiTTS: Score-based Editing for Controllable Text-to-Speech

Stars: ✭ 74 (-82.3%)

Mutual labels: text-to-speech, tts

esp32-flite

Speech synthesis running on ESP32 based on Flite engine.

Stars: ✭ 28 (-93.3%)

Mutual labels: text-to-speech, tts

Glow Tts

A Generative Flow for Text-to-Speech via Monotonic Alignment Search

Stars: ✭ 284 (-32.06%)

Mutual labels: text-to-speech, tts

Tts

🐸💬 - a deep learning toolkit for Text-to-Speech, battle-tested in research and production

Stars: ✭ 305 (-27.03%)

Mutual labels: text-to-speech, tts

talkbot

Text-to-speech and translation bot for Discord

Stars: ✭ 27 (-93.54%)

Mutual labels: text-to-speech, tts

galerkin-transformer

[NeurIPS 2021] Galerkin Transformer: a linear attention without softmax

Stars: ✭ 111 (-73.44%)

Mutual labels: transformer, attention-mechanism

linformer

Implementation of Linformer for Pytorch

Stars: ✭ 119 (-71.53%)

Mutual labels: transformer, attention-mechanism

Comprehensive-Tacotron2

PyTorch Implementation of Google's Natural TTS Synthesis by Conditioning WaveNet on Mel Spectrogram Predictions. This implementation supports both single-, multi-speaker TTS and several techniques to enforce the robustness and efficiency of the model.

Stars: ✭ 22 (-94.74%)

Mutual labels: text-to-speech, tts

Multilingual text to speech

An implementation of Tacotron 2 that supports multilingual experiments with parameter-sharing, code-switching, and voice cloning.

Stars: ✭ 324 (-22.49%)

Mutual labels: text-to-speech, tts

Hifi Gan

HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis

Stars: ✭ 325 (-22.25%)

Mutual labels: text-to-speech, tts

Tts

🤖 💬 Deep learning for Text to Speech (Discussion forum: https://discourse.mozilla.org/c/tts)

Stars: ✭ 5,427 (+1198.33%)

Mutual labels: text-to-speech, tts

pynmt

a simple and complete pytorch implementation of neural machine translation system

Stars: ✭ 13 (-96.89%)

Mutual labels: transformer, attention-mechanism

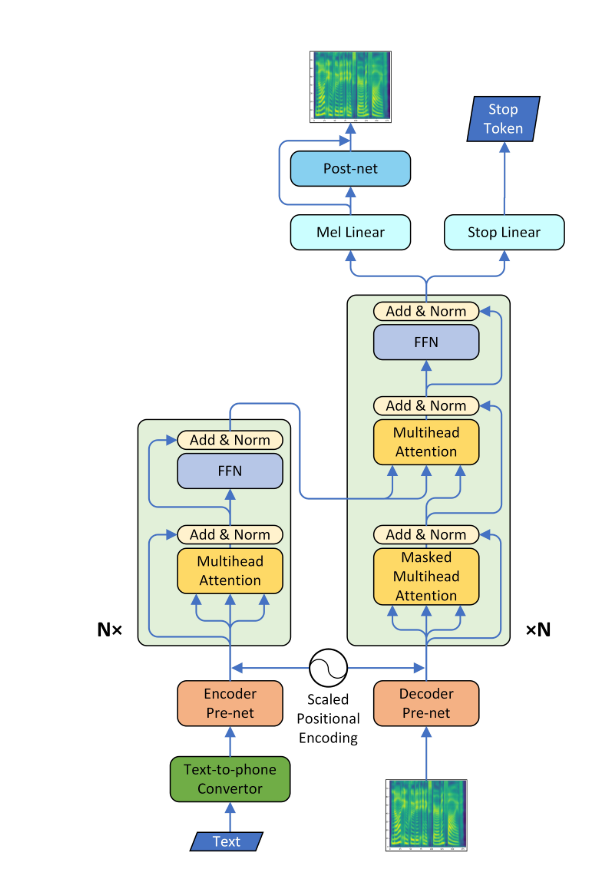

Transformer-TTS

- A Pytorch Implementation of Neural Speech Synthesis with Transformer Network

- This model can be trained about 3 to 4 times faster than well-known seq2seq models such as Tacotron, while the quality of the synthesized speech is almost the same. In my experiments, training took about 0.5 seconds per step.

- I did not use a WaveNet vocoder. Instead, I trained a post network based on the CBHG module from Tacotron and converted the resulting spectrogram into a raw waveform with the Griffin-Lim algorithm.
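Griffin-Lim recovers a waveform from a magnitude-only spectrogram by alternating between imposing the predicted magnitude and re-estimating a consistent phase. The following is a minimal numpy-only sketch of that idea, not this repository's code: `n_fft=1024`, `hop=256` and the iteration count are placeholder values rather than the project's hyperparameters, and any magnitude scaling the project applies before inversion is omitted. For real use, `librosa.griffinlim` provides a tested implementation.

```python
import numpy as np

def stft(x, n_fft=1024, hop=256):
    """Hann-windowed short-time Fourier transform, shape (frames, n_fft // 2 + 1)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1)

def istft(spec, n_fft=1024, hop=256):
    """Least-squares inverse STFT: windowed overlap-add divided by the summed squared window."""
    window = np.hanning(n_fft)
    frames = np.fft.irfft(spec, n=n_fft, axis=1)
    length = n_fft + hop * (len(frames) - 1)
    out = np.zeros(length)
    norm = np.zeros(length)
    for i, frame in enumerate(frames):
        out[i * hop:i * hop + n_fft] += frame * window
        norm[i * hop:i * hop + n_fft] += window ** 2
    return out / np.maximum(norm, 1e-8)

def griffin_lim(magnitude, n_iter=60, n_fft=1024, hop=256, seed=0):
    """Estimate a waveform whose STFT magnitude approximates `magnitude` (frames x bins)."""
    rng = np.random.default_rng(seed)
    phase = np.exp(2j * np.pi * rng.random(magnitude.shape))  # random initial phase
    signal = istft(magnitude * phase, n_fft, hop)
    for _ in range(n_iter):
        rebuilt = stft(signal, n_fft, hop)
        phase = np.exp(1j * np.angle(rebuilt))  # keep the phase, discard the magnitude
        signal = istft(magnitude * phase, n_fft, hop)
    return signal
```

Here `magnitude` would be the post network's linear-spectrogram output, converted back to linear amplitude and laid out as (frames, bins); more iterations trade speed for fewer phase artifacts.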

Requirements

- Install Python 3

- Install PyTorch == 0.4.0

- Install requirements:

pip install -r requirements.txt

Data

- I used the LJSpeech dataset, which consists of pairs of text scripts and wav files. The complete dataset (13,100 pairs) can be downloaded here. I referred to https://github.com/keithito/tacotron and https://github.com/Kyubyong/dc_tts for the preprocessing code.
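As a hedged illustration of how the text/wav pairs can be read, the sketch below assumes the standard LJSpeech-1.1 layout: a pipe-separated `metadata.csv` (ID | transcription | normalized transcription) next to a `wavs/` folder. `parse_metadata` and `load_ljspeech` are hypothetical helpers, not functions from this repository or the referenced preprocessing code.

```python
import csv
import os

def parse_metadata(lines, wav_dir):
    """Turn LJSpeech metadata lines into (wav_path, normalized_text) pairs.

    Each line is assumed to look like: ID|transcription|normalized transcription.
    QUOTE_NONE keeps quotation marks inside the text from being treated as CSV quoting.
    """
    reader = csv.reader(lines, delimiter="|", quoting=csv.QUOTE_NONE)
    return [(os.path.join(wav_dir, row[0] + ".wav"), row[2]) for row in reader]

def load_ljspeech(root):
    """Read <root>/metadata.csv and point each entry at <root>/wavs/<ID>.wav."""
    with open(os.path.join(root, "metadata.csv"), encoding="utf-8") as f:
        return parse_metadata(f, os.path.join(root, "wavs"))
```

The normalized column (numbers and abbreviations spelled out) is the one usually fed to the text front end.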

Pretrained Model

- You can download the pretrained model here (160K steps for the AR model / 100K steps for the Postnet).

- Place the pretrained model in the checkpoint/ directory.

Attention plots

- A diagonal alignment appeared after about 15k steps. The attention plots below are at 160k steps and show the multi-head attention of all layers. In this experiment, h=4 heads are used in each of the three attention layers, so 12 attention plots were drawn for each of the encoder self-attention, decoder self-attention and encoder-decoder attention. Except for the decoder, only a few heads showed diagonal alignment.
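The 12 plots per attention type come from 3 layers × 4 heads, each head producing its own (query length × key length) weight map. A minimal numpy sketch of where those maps come from, assuming standard scaled dot-product attention with no masking, residual connections or layer normalization; the weight shapes and random initialization are illustrative only. The concatenate-then-project output step reflects the experimental note further down about concatenating input and context vectors, but the exact layer layout here is an assumption.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multihead_attention(query, memory, w_q, w_k, w_v, w_o, n_heads=4):
    """Scaled dot-product attention split into n_heads heads.

    query: (T_q, d), memory: (T_k, d), w_q/w_k/w_v: (d, d), w_o: (2d, d).
    Returns the output (T_q, d) and per-head weights (n_heads, T_q, T_k).
    """
    t_q, d = query.shape
    t_k = memory.shape[0]
    d_head = d // n_heads
    # Project, then split the feature axis into heads: (n_heads, T, d_head).
    q = (query @ w_q).reshape(t_q, n_heads, d_head).transpose(1, 0, 2)
    k = (memory @ w_k).reshape(t_k, n_heads, d_head).transpose(1, 0, 2)
    v = (memory @ w_v).reshape(t_k, n_heads, d_head).transpose(1, 0, 2)
    weights = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d_head))  # one (T_q, T_k) map per head
    context = (weights @ v).transpose(1, 0, 2).reshape(t_q, d)  # merge heads back
    # Concatenate the input with the context vector before the output projection.
    out = np.concatenate([query, context], axis=-1) @ w_o
    return out, weights

# Three self-attention layers with 4 heads each give 3 * 4 = 12 attention maps.
rng = np.random.default_rng(0)
d, n_layers, n_heads = 16, 3, 4
x = rng.standard_normal((10, d))
maps = []
for _ in range(n_layers):
    w_q, w_k, w_v = (rng.standard_normal((d, d)) * 0.1 for _ in range(3))
    w_o = rng.standard_normal((2 * d, d)) * 0.1
    x, weights = multihead_attention(x, x, w_q, w_k, w_v, w_o, n_heads)
    maps.extend(weights)  # one 2-D array per head
print(len(maps))  # 12
```

For encoder-decoder attention, `query` would come from the decoder and `memory` from the encoder output, so each map is rectangular; a "diagonal alignment" means each map's mass follows the diagonal.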

Self Attention encoder

Self Attention decoder

Attention encoder-decoder

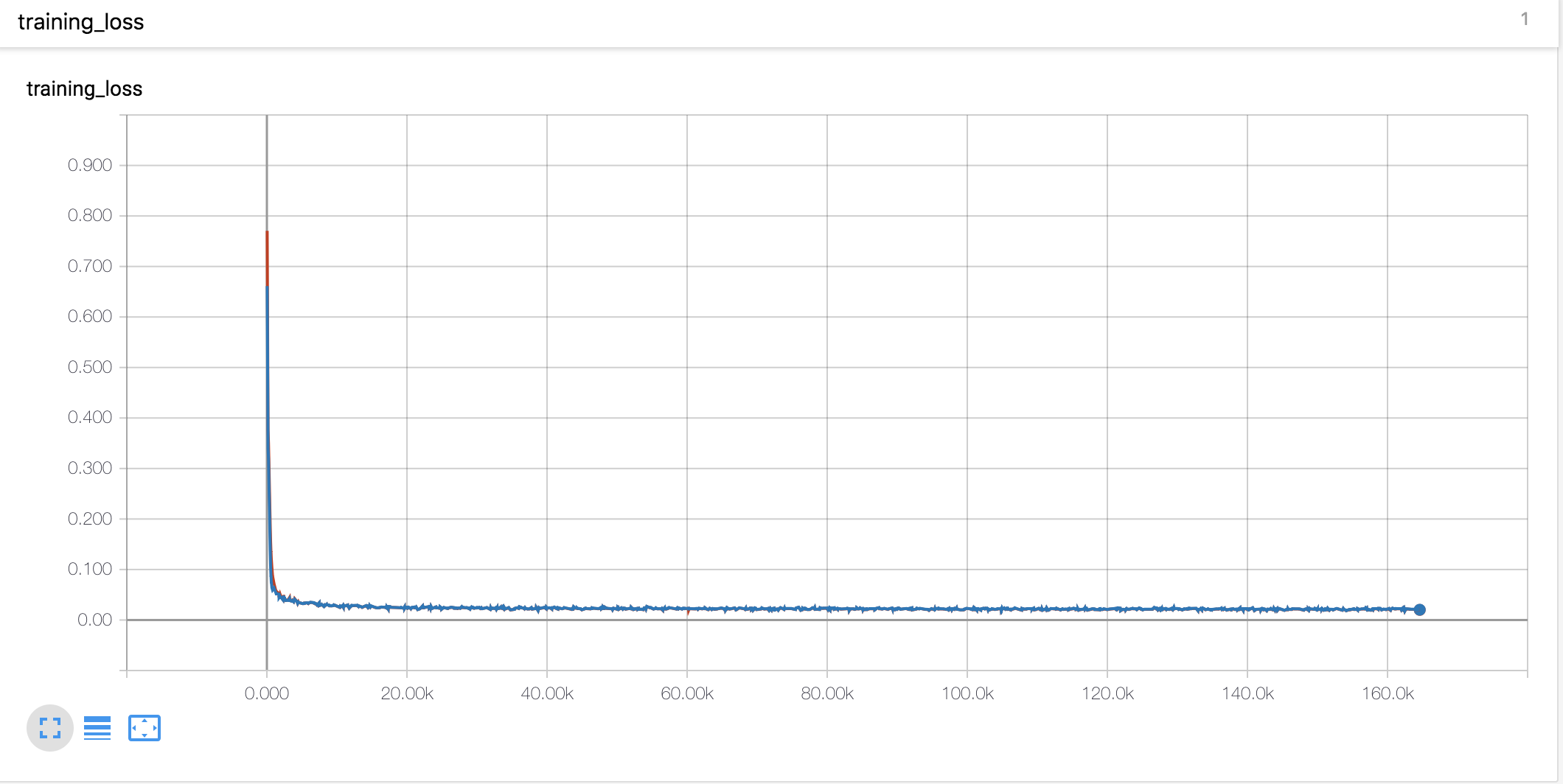

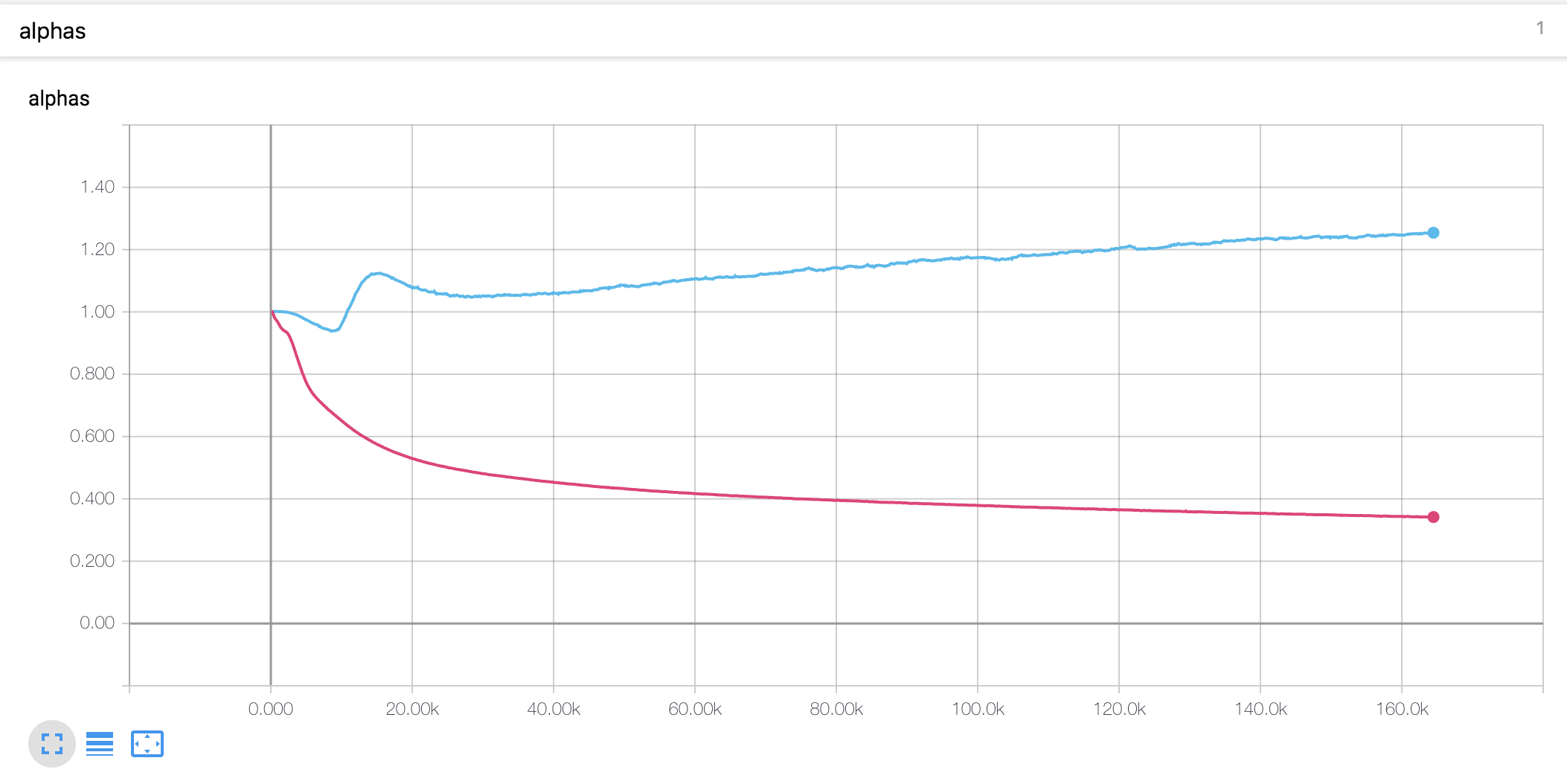

Learning curves & Alphas

- I used Noam-style warmup and decay, the same as in Tacotron.

- The alpha values for the scaled positional encoding behave differently from the paper. In the paper, the encoder alpha increases to 4, whereas in this experiment it increased slightly at the beginning and then decreased continuously. The decoder alpha decreased steadily from the beginning.
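Both quantities in this section can be sketched in a few lines. The schedule below is the common Noam form (linear warmup to a peak, then decay proportional to 1/sqrt(step)); `base_lr=1e-3` and `warmup_steps=4000` are placeholder values, not confirmed settings of this repository. The scaled positional encoding adds `alpha * PE` to the input, where in the actual model `alpha` is a trainable scalar (the value tracked in the alpha curves); here it is passed in as a plain number.

```python
import numpy as np

def noam_lr(step, base_lr=1e-3, warmup_steps=4000):
    """Noam-style schedule: linear warmup up to base_lr at warmup_steps, then ~1/sqrt(step) decay."""
    step = max(step, 1)  # avoid 0 ** -0.5 at step 0
    return base_lr * warmup_steps ** 0.5 * min(step * warmup_steps ** -1.5, step ** -0.5)

def sinusoid_table(max_len, d_model):
    """Sinusoidal positional encoding table, shape (max_len, d_model)."""
    pos = np.arange(max_len)[:, None]
    i = np.arange(d_model)[None, :]
    angle = pos / np.power(10000.0, (2 * (i // 2)) / d_model)
    table = np.zeros((max_len, d_model))
    table[:, 0::2] = np.sin(angle[:, 0::2])  # even dimensions use sine
    table[:, 1::2] = np.cos(angle[:, 1::2])  # odd dimensions use cosine
    return table

def add_scaled_position(x, alpha, table):
    """Return x + alpha * PE for a (T, d_model) input; alpha is learned in the real model."""
    return x + alpha * table[: x.shape[0]]
```

With PyTorch, the schedule would be applied each step by writing `noam_lr(step)` into every entry of `optimizer.param_groups` under the `"lr"` key, and `alpha` would be an `nn.Parameter` so the optimizer can move it up or down as observed in the curves.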

Experimental notes

- The learning rate is an important parameter for training. An initial learning rate of 0.001 with exponential decay did not work.

- Gradient clipping is also important for training. I clipped the gradients to a maximum norm of 1.

- With the stop token loss, the model did not train.

- It was very important to concatenate the input and context vectors in the attention mechanism.
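Clipping "with norm value 1" means rescaling all gradients together whenever their combined L2 norm exceeds 1, so the update direction is kept but its size is capped. In PyTorch this is normally the single call `torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0)` between `loss.backward()` and `optimizer.step()`; the numpy sketch below only spells out that computation, and `clip_by_global_norm` is a hypothetical helper.

```python
import numpy as np

def clip_by_global_norm(grads, max_norm=1.0):
    """Rescale a list of gradient arrays so their combined L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the norm measured before clipping.
    """
    total_norm = float(np.sqrt(sum(np.sum(g ** 2) for g in grads)))
    scale = max_norm / (total_norm + 1e-6)  # small epsilon guards against division by zero
    if scale < 1.0:
        grads = [g * scale for g in grads]
    return grads, total_norm
```

Logging the returned pre-clipping norm is a cheap way to see how often clipping is actually active during training.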

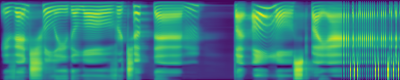

Generated Samples

- You can check some generated samples below. All samples were generated at step 160k, so I think the model has not converged yet. The model seems to perform worse on long sentences.

- The first plot is the predicted mel spectrogram, and the second is the ground truth.

File description

- hyperparams.py includes all the hyperparameters that are needed.

- prepare_data.py preprocesses wav files into mel and linear spectrograms and saves them for faster training. The preprocessing code for text is in the text/ directory.

- preprocess.py includes all the preprocessing code used when loading data.

- module.py contains all methods, including attention, prenet, postnet and so on.

- network.py contains the networks, including the encoder, decoder and post-processing network.

- train_transformer.py is for training the autoregressive attention network. (text --> mel)

- train_postnet.py is for training the post network. (mel --> linear)

- synthesis.py is for generating TTS samples.

Training the network

- STEP 1. Download and extract the LJSpeech data to any directory you want.

- STEP 2. Adjust the hyperparameters in hyperparams.py, especially 'data_path', which is the directory where you extracted the files, and the others if necessary.

- STEP 3. Run prepare_data.py.

- STEP 4. Run train_transformer.py.

- STEP 5. Run train_postnet.py.
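Steps 3 to 5 can be chained from one Python script. This is a hypothetical convenience wrapper, assuming the scripts are run from the repository root and take all their settings from hyperparams.py (no command-line arguments). By default it only returns the commands it would run; pass `dry_run=False` to execute them in order, stopping at the first failure.

```python
import subprocess
import sys

# Scripts in the order given by the training steps (names from the file description).
PIPELINE = ["prepare_data.py", "train_transformer.py", "train_postnet.py"]

def run_pipeline(scripts=PIPELINE, dry_run=True):
    """Build (and optionally run) one command per script using the current interpreter."""
    commands = [[sys.executable, script] for script in scripts]
    if not dry_run:
        for cmd in commands:
            subprocess.run(cmd, check=True)  # raises CalledProcessError if a step fails
    return commands
```

Running the two training stages as separate processes mirrors the README's split into an autoregressive text-to-mel model and an independent mel-to-linear post network.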

Generate TTS wav file

- STEP 1. Run synthesis.py. Make sure the restore step is set correctly.

Reference

- Keith Ito: https://github.com/keithito/tacotron

- Kyubyong Park: https://github.com/Kyubyong/dc_tts

- jadore801120: https://github.com/jadore801120/attention-is-all-you-need-pytorch/

Comments

- Any comments on the code are always welcome.
