Alibaba-MIIL / Tresnet

Programming Languages

Labels

Projects that are alternatives of or similar to Tresnet

TResNet: High Performance GPU-Dedicated Architecture

Official PyTorch Implementation

Tal Ridnik, Hussam Lawen, Asaf Noy, Itamar Friedman, Emanuel Ben Baruch, Gilad Sharir

DAMO Academy, Alibaba Group

Abstract

Many deep learning models, developed in recent years, reach higher ImageNet accuracy than ResNet50, with fewer or comparable FLOPS count. While FLOPs are often seen as a proxy for network efficiency, when measuring actual GPU training and inference throughput, vanilla ResNet50 is usually significantly faster than its recent competitors, offering better throughput-accuracy trade-off. In this work, we introduce a series of architecture modifications that aim to boost neural networks' accuracy, while retaining their GPU training and inference efficiency. We first demonstrate and discuss the bottlenecks induced by FLOPs-optimizations. We then suggest alternative designs that better utilize GPU structure and assets. Finally, we introduce a new family of GPU-dedicated models, called TResNet, which achieve better accuracy and efficiency than previous ConvNets. Using a TResNet model, with similar GPU throughput to ResNet50, we reach 80.7% top-1 accuracy on ImageNet. Our TResNet models also transfer well and achieve state-of-the-art accuracy on competitive datasets such as Stanford cars (96.0%), CIFAR-10 (99.0%), CIFAR-100 (91.5%) and Oxford-Flowers (99.1%). They also perform well on multi-label classification and object detection tasks.

07/01/2021: New SOTA Results

With better pretraining, Our medium-size newtork, TResNet-L-V2, achieved SOTA result on stanford-cars dataset, and 3rd place on CIFAR100, while being significantly faster and smaller than the competitors.

30/9/2020: New Paper Released

We released a new paper, Asymmetric Loss For Multi-Label

Classification.

The paper

presents a new novel loss function, that is applicable for tasks with

inherent data imbalancing - first and foremost multi-label

classification, and also object detection and fine-grain single-label

classification. The new paper also describe and expand in details

preliminary results provided in TResNet paper on MS-COCO multi-label

dataset.

Github link

28/8/2020: V2 of TResNet Article Released

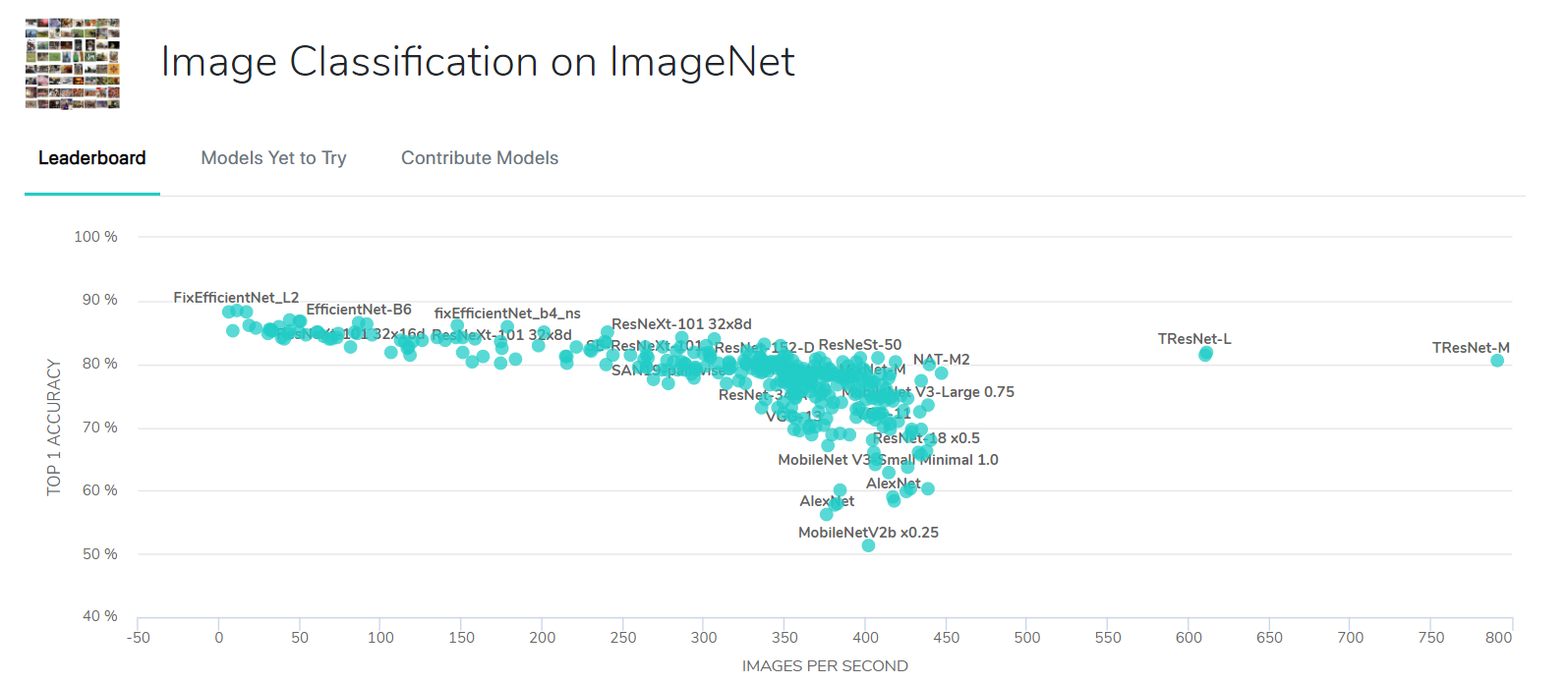

Sotabench Comparisons

Comparative results from sotabench benchamrk, demonstartaing that TReNset models give excellent speed-accuracy tradoff:

|

11/6/2020: V1 of TResNet Article Released

The main change - In addition to single label SOTA results, we also added top results for multi-label classification and object detection tasks, using TResNet. For example, we set a new SOTA record for MS-COCO multi-label dataset, surpassing the previous top results by more than 2.5% mAP !

| Bacbkone | mAP |

|---|---|

| KSSNet (previous SOTA) | 83.7 |

| TResNet-L | 86.4 |

2/6/2020: CVPR-Kaggle competitions

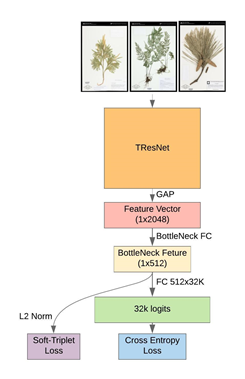

We participated and won top places in two major CVPR-Kaggle competitions:

- 2nd place in Herbarium 2020 competition, out of 153 teams.

-

7th place

in Plant-Pathology 2020 competition, out of 1317 teams.

TResNet was a vital part of our solution for both competitions, allowing us to work on high resolutions and reach top scores while doing fast and efficient experiments.

Main Article Results

TResNet Models

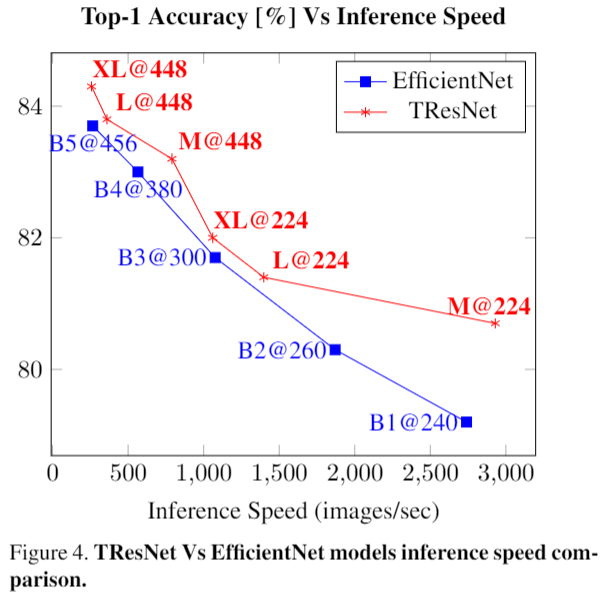

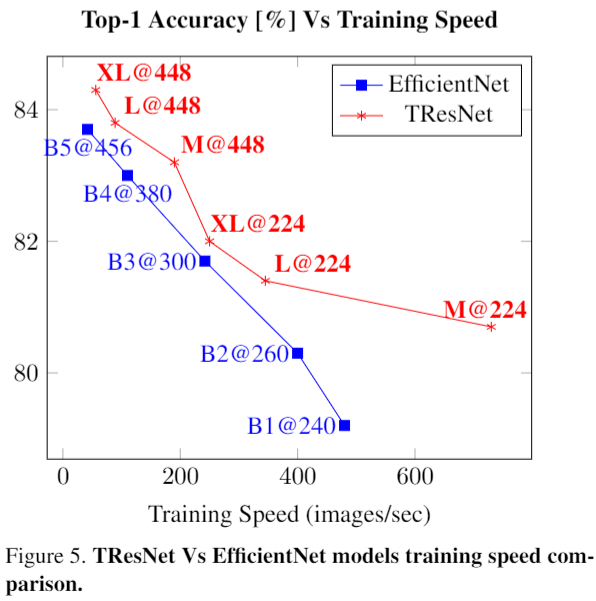

TResNet models accuracy and GPU throughput on ImageNet, compared to ResNet50. All measurements were done on Nvidia V100 GPU, with mixed precision. All models are trained on input resolution of 224.

| Models | Top Training Speed (img/sec) |

Top Inference Speed (img/sec) |

Max Train Batch Size | Top-1 Acc. |

|---|---|---|---|---|

| ResNet50 | 805 | 2830 | 288 | 79.0 |

| EfficientNetB1 | 440 | 2740 | 196 | 79.2 |

| TResNet-M | 730 | 2930 | 512 | 80.8 |

| TResNet-L | 345 | 1390 | 316 | 81.5 |

| TResNet-XL | 250 | 1060 | 240 | 82.0 |

Comparison To Other Networks

Comparison of ResNet50 to top modern networks, with similar top-1 ImageNet accuracy. All measurements were done on Nvidia V100 GPU with mixed precision. For gaining optimal speeds, training and inference were measured on 90% of maximal possible batch size. Except TResNet-M, all the models' ImageNet scores were taken from the public repository, which specialized in providing top implementations for modern networks. Except EfficientNet-B1, which has input resolution of 240, all other models have input resolution of 224.

| Model | Top Training Speed (img/sec) |

Top Inference Speed (img/sec) |

Top-1 Acc. | Flops[G] |

|---|---|---|---|---|

| ResNet50 | 805 | 2830 | 79.0 | 4.1 |

| ResNet50-D | 600 | 2670 | 79.3 | 4.4 |

| ResNeXt50 | 490 | 1940 | 79.4 | 4.3 |

| EfficientNetB1 | 440 | 2740 | 79.2 | 0.6 |

| SEResNeXt50 | 400 | 1770 | 79.9 | 4.3 |

| MixNet-L | 400 | 1400 | 79.0 | 0.5 |

| TResNet-M | 730 | 2930 | 80.8 | 5.5 |

|

|

Transfer Learning SotA Results

Comparison of TResNet to state-of-the-art models on transfer learning datasets (only ImageNet-based transfer learning results). Models inference speed is measured on a mixed precision V100 GPU. Since no official implementation of Gpipe was provided, its inference speed is unknown

| Dataset | Model |

Top-1

Acc. |

Speed

img/sec |

Input |

| CIFAR-10 | Gpipe | 99.0 | - | 480 |

| TResNet-XL | 99.0 | 1060 | 224 | |

| CIFAR-100 | EfficientNet-B7 | 91.7 | 70 | 600 |

| TResNet-XL | 91.5 | 1060 | 224 | |

| Stanford Cars | EfficientNet-B7 | 94.7 | 70 | 600 |

| TResNet-L | 96.0 | 500 | 368 | |

| Oxford-Flowers | EfficientNet-B7 | 98.8 | 70 | 600 |

| TResNet-L | 99.1 | 500 | 368 |

Reproduce Article Scores

We provide code for reproducing the validation top-1 score of TResNet models on ImageNet. First, download pretrained models from here.

Then, run the infer.py script. For example, for tresnet_m (input size 224) run:

python -m infer.py \

--val_dir=/path/to/imagenet_val_folder \

--model_path=/model/path/to/tresnet_m.pth \

--model_name=tresnet_m

--input_size=224

TResNet Training

Due to IP limitations, we do not provide the exact training code that was used to obtain the article results.

However, TResNet is now an integral part of the popular rwightman / pytorch-image-models repo. Using that repo, you can reach very similar results to the one stated in the article.

For example, training tresnet_m on rwightman / pytorch-image-models with the command line:

python -u -m torch.distributed.launch --nproc_per_node=8 \

--nnodes=1 --node_rank=0 ./train.py /data/imagenet/ \

-b=190 --lr=0.6 --model-ema --aa=rand-m9-mstd0.5-inc1 \

--num-gpu=8 -j=16 --amp \

--model=tresnet_m --epochs=300 --mixup=0.2 \

--sched='cosine' --reprob=0.4 --remode=pixel

gave accuracy of 80.5%.

Also, during the merge request, we had interesting discussions and insights regarding TResNet design. I am attaching a pdf version the mentioned discussions. They can shed more light on TResNet design considerations and directions for the future.

TResNet discussion and insights

(taken with permission from here)

Tips For Working With Inplace-ABN

See INPLACE_ABN_TIPS.

Citation

@misc{ridnik2020tresnet,

title={TResNet: High Performance GPU-Dedicated Architecture},

author={Tal Ridnik and Hussam Lawen and Asaf Noy and Itamar Friedman},

year={2020},

eprint={2003.13630},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

Contact

Feel free to contact me if there are any questions or issues (Tal Ridnik, [email protected]).