ragulpr / Wtte Rnn


WTTE-RNN

Weibull Time To Event Recurrent Neural Network

A less hacky machine-learning framework for churn and time-to-event prediction. Forecasting problems as diverse as server monitoring, earthquake prediction and churn prediction can all be posed as the problem of predicting the time to an event. WTTE-RNN is an algorithm and a philosophy about how this should be done.

- blog post

- master's thesis

- Quick visual intro to the model

- Jupyter notebooks: basics, more

- Gianmario Spacagna's implementation for Time-To-Failure.

- Korean README

Installation

Python

Check out the README for the Python package.

If this seems like overkill, the basic implementation can be found inlined as a Jupyter notebook.

Ideas and Basics

You have data consisting of many time series of events and want to use historic data to predict the time to the next event (TTE). If the next event hasn't been observed yet, you've only observed a lower bound of the TTE to train on. This results in what's called censored data (in red):

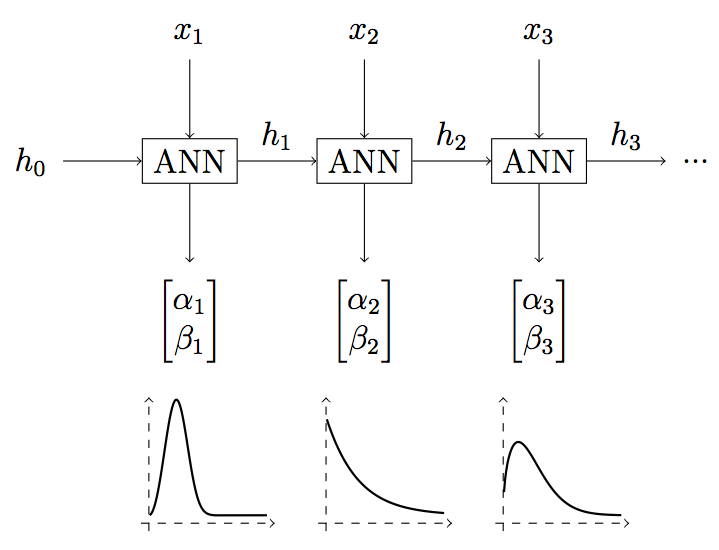
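Assuming discrete time steps, the TTE and the censoring indicator for a single sequence could be computed roughly like this. `tte_and_censoring` is a hypothetical helper, not the package's API. It assumes the TTE counts steps to the next event strictly after the current step; for censored steps it returns the number of event-free steps actually observed, i.e. the lower bound (the true TTE is strictly greater).

```python
import numpy as np


def tte_and_censoring(events):
    """Return (tte, u) for one binary event sequence.

    events[t] == 1 means an event happened at step t.
    u[t] == 1: tte[t] is the exact number of steps to the next event after t.
    u[t] == 0: no event was seen after t, so tte[t] is only a lower bound.
    """
    events = np.asarray(events, dtype=bool)
    n = len(events)
    tte = np.zeros(n, dtype=float)
    u = np.zeros(n, dtype=float)
    next_event = None  # index of the nearest event strictly after t

    # Walk backwards so the nearest future event is always known.
    for t in range(n - 1, -1, -1):
        if next_event is None:
            tte[t] = n - 1 - t       # censored: event-free steps observed after t
            u[t] = 0.0
        else:
            tte[t] = next_event - t  # uncensored: exact steps to the next event
            u[t] = 1.0
        if events[t]:
            next_event = t
    return tte, u
```

For example, `tte_and_censoring([0, 1, 0, 0, 1, 0, 0])` gives `tte = [1, 3, 2, 1, 2, 1, 0]` and `u = [1, 1, 1, 1, 0, 0, 0]`: after the last event at step 4 every step is censored.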

Instead of predicting the TTE itself, the trick is to let your machine-learning model output the parameters of a distribution. This could be any distribution, but we like the Weibull distribution because it's awesome. The machine-learning algorithm could be anything gradient-based, but we like RNNs because they are awesome too.
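Both Weibull parameters must be positive, so the model's raw outputs need a positive activation. A minimal sketch, assuming two unconstrained outputs per time step; the choice of `exp` for alpha and softplus for beta is a hypothetical one for illustration, and `weibull_params` is not the package's output layer.

```python
import numpy as np


def weibull_params(raw):
    """Map unconstrained outputs of shape (..., 2) to positive (alpha, beta).

    Hypothetical activations: exp keeps the scale alpha > 0 and
    softplus keeps the shape beta > 0. Any positive mapping would work.
    """
    raw = np.asarray(raw, dtype=float)
    alpha = np.exp(raw[..., 0])
    # softplus(x) = log(1 + e^x), computed stably as logaddexp(0, x)
    beta = np.logaddexp(0.0, raw[..., 1])
    return alpha, beta
```

In an RNN the same mapping would sit on top of the last recurrent layer, producing one (alpha, beta) pair per time step.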

The next step is to train the algorithm of choice with a special log-loss that can work with censored data. The intuition behind it is that we want to assign high probability to the time of the next event, or low probability to the times where there weren't any events (for censored data):

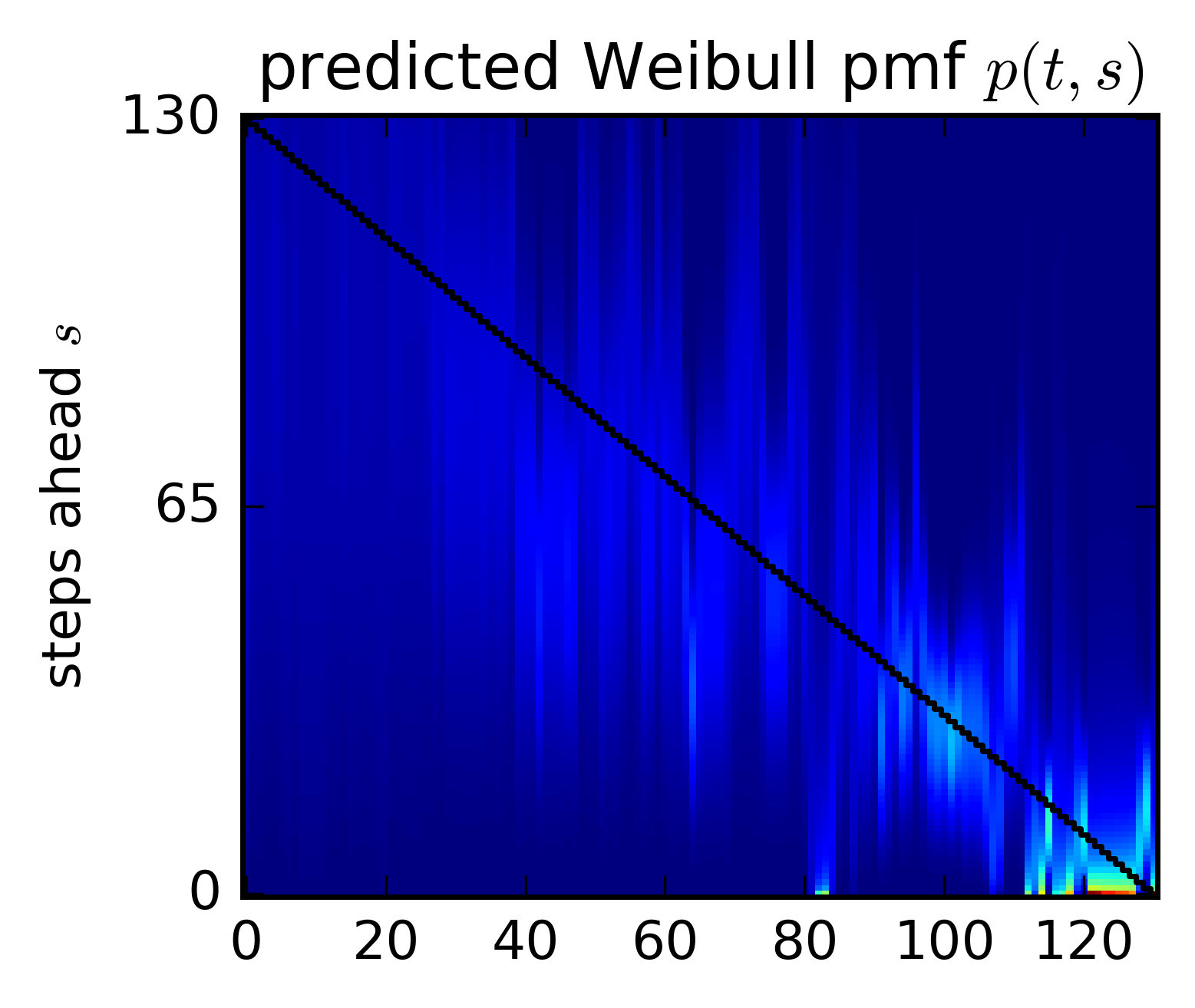
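A minimal numpy sketch of such a censored log-likelihood, assuming continuous time (the discrete-time case is not covered here). An observed event contributes the log-density log h(t) + log S(t); a censored step contributes only the log-survival log S(t), i.e. the log-probability that the event hadn't happened yet. The function names and the `eps` offset are hypothetical.

```python
import numpy as np


def weibull_censored_loglik(tte, u, alpha, beta, eps=1e-9):
    """Continuous-time Weibull log-likelihood with right-censoring.

    u = 1: event observed at tte -> log f(tte) = log h(tte) + log S(tte)
    u = 0: tte is only a lower bound -> log S(tte)
    """
    tte = np.asarray(tte, dtype=float) + eps  # eps avoids log(0) at tte = 0
    u = np.asarray(u, dtype=float)
    ya = tte / alpha
    log_hazard = np.log(beta / alpha) + (beta - 1.0) * np.log(ya)
    log_survival = -(ya ** beta)  # S(t) = exp(-(t / alpha) ** beta)
    return u * log_hazard + log_survival


def weibull_loss(tte, u, alpha, beta):
    """Negative mean log-likelihood, the quantity to minimize."""
    return -np.mean(weibull_censored_loglik(tte, u, alpha, beta))
```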

What we get is a pretty neat prediction about the distribution of the TTE in each step (here for a single event):
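Given a predicted (alpha, beta) at a step, the usual summaries of that TTE distribution follow from textbook Weibull formulas. A plain-Python sketch; these helper names are hypothetical and not the package's Weibull functions.

```python
import math


def weibull_cdf(t, alpha, beta):
    """P(TTE <= t)."""
    return 1.0 - math.exp(-((t / alpha) ** beta))


def weibull_quantile(p, alpha, beta):
    """The t with P(TTE <= t) = p; p = 0.5 gives the median."""
    return alpha * (-math.log(1.0 - p)) ** (1.0 / beta)


def weibull_mean(alpha, beta):
    """Expected TTE: alpha * Gamma(1 + 1/beta)."""
    return alpha * math.gamma(1.0 + 1.0 / beta)
```

With beta = 1 the Weibull reduces to an exponential distribution, so `weibull_mean(10.0, 1.0)` is 10 and the median is 10·ln 2 ≈ 6.93.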

A neat side result is that the predicted parameters form a 2-D embedding that can be used to visualize and group predictions about how soon (alpha) and how sure (beta). Here, timelines of predicted alpha (left) and beta (right) are stacked:

Warnings

There's a lot of mathematical theory justifying the use of this nice loss function in certain situations:
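For reference, one standard way to write such a loss in the continuous-time case (a sketch in common survival-analysis notation, not reproduced from the original figure): $y$ is the observed TTE, $u=1$ if the event was observed and $u=0$ if censored, and the Weibull hazard and survival functions are $h(y)=\frac{\beta}{\alpha}\left(\frac{y}{\alpha}\right)^{\beta-1}$ and $S(y)=\exp\left(-\left(\frac{y}{\alpha}\right)^{\beta}\right)$. The log-likelihood to maximize is

$$
\log\mathcal{L}(\alpha,\beta) = u\,\log h(y) + \log S(y)
= u\left[\log\frac{\beta}{\alpha} + (\beta-1)\log\frac{y}{\alpha}\right] - \left(\frac{y}{\alpha}\right)^{\beta}
$$

For a censored step ($u=0$) only the last term remains, and its derivative with respect to $\alpha$ is always positive:

$$
\frac{\partial}{\partial\alpha}\left[-\left(\frac{y}{\alpha}\right)^{\beta}\right] = \frac{\beta}{\alpha}\left(\frac{y}{\alpha}\right)^{\beta} > 0
$$

so on censored data the likelihood can always be increased by moving the predicted distribution later.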

So for censored data, the loss only rewards pushing the distribution up, beyond the point of censoring. For this to work, the censoring mechanism needs to be independent of your feature data. If your features contain information about the point of censoring, your algorithm will learn to cheat by predicting far-away events based on the probability of censoring instead of the TTE. This is a type of overfitting/artifact learning. Global features can have this effect if not properly treated: for example, if all sequences are censored at a common end date, a calendar-time feature tells the model how close each step is to that date.

Status and Roadmap

The project is under development. The goal is to create a forkable and easily deployable model framework. WTTE is the algorithm, but the whole project aims to be more: a visual philosophy and an opinionated idea about how churn monitoring and reporting can be made beautiful and easy.

Pull requests, recommendations, comments and contributions are very welcome.

What's in the repository

- Transformations
  - Data pipeline transformations (`pandas.DataFrame` of expected format to numpy)
  - Time to event and censoring indicator calculations
- Weibull functions (cdf, pdf, quantile, mean, etc.)
- Objective functions:
  - Tensorflow
  - Keras (Tensorflow + Theano)
- Keras helpers
  - Weibull output layers
  - Loss functions
  - Callbacks
- ~~Lots of example implementations~~
- The basic notebook will be kept here, but to save space and encourage viz, check out the examples repo or fork your notebooks there.
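The DataFrame-to-numpy step can be sketched as padding each sequence's rows into one 3-D array that an RNN can consume. This assumes a long format with one row per sequence and time step; the column names and `df_to_padded` are hypothetical and not necessarily the package's expected format or API.

```python
import numpy as np
import pandas as pd


def df_to_padded(df, id_col, time_col, feature_cols, pad_value=np.nan):
    """Long-format DataFrame -> array of shape (n_seqs, max_len, n_features).

    Sequences are ordered by id and left-aligned in time; shorter
    sequences are padded at the end with pad_value.
    """
    df = df.sort_values([id_col, time_col])
    seqs = [
        g[feature_cols].to_numpy(dtype=float)
        for _, g in df.groupby(id_col, sort=True)
    ]
    max_len = max(len(s) for s in seqs)
    out = np.full((len(seqs), max_len, len(feature_cols)), pad_value, dtype=float)
    for i, s in enumerate(seqs):
        out[i, : len(s)] = s
    return out
```

Padding with NaN makes padded steps easy to spot; before training they would typically be replaced by a mask value that the loss ignores.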

Licensing

- MIT license

Citation

@MastersThesis{martinsson:Thesis:2016,
  author = {Egil Martinsson},
  title  = {{WTTE-RNN : Weibull Time To Event Recurrent Neural Network}},
  school = {Chalmers University Of Technology},
  year   = {2016},
}

Contributing

Contributions, PRs, comments, etc. are very welcome! Post an issue if you have any questions, and feel free to reach out to egil.martinsson[at]gmail.com.

Contributors (by order of commit)

- Egil Martinsson

- Dayne Batten (made the first Keras implementation)

- Clay Kim

- Jannik Hoffjann

- Daniel Klevebring

- Jeongkyu Shin

- Joongi Kim

- Jonghyun Park