amirgholami / Adahessian


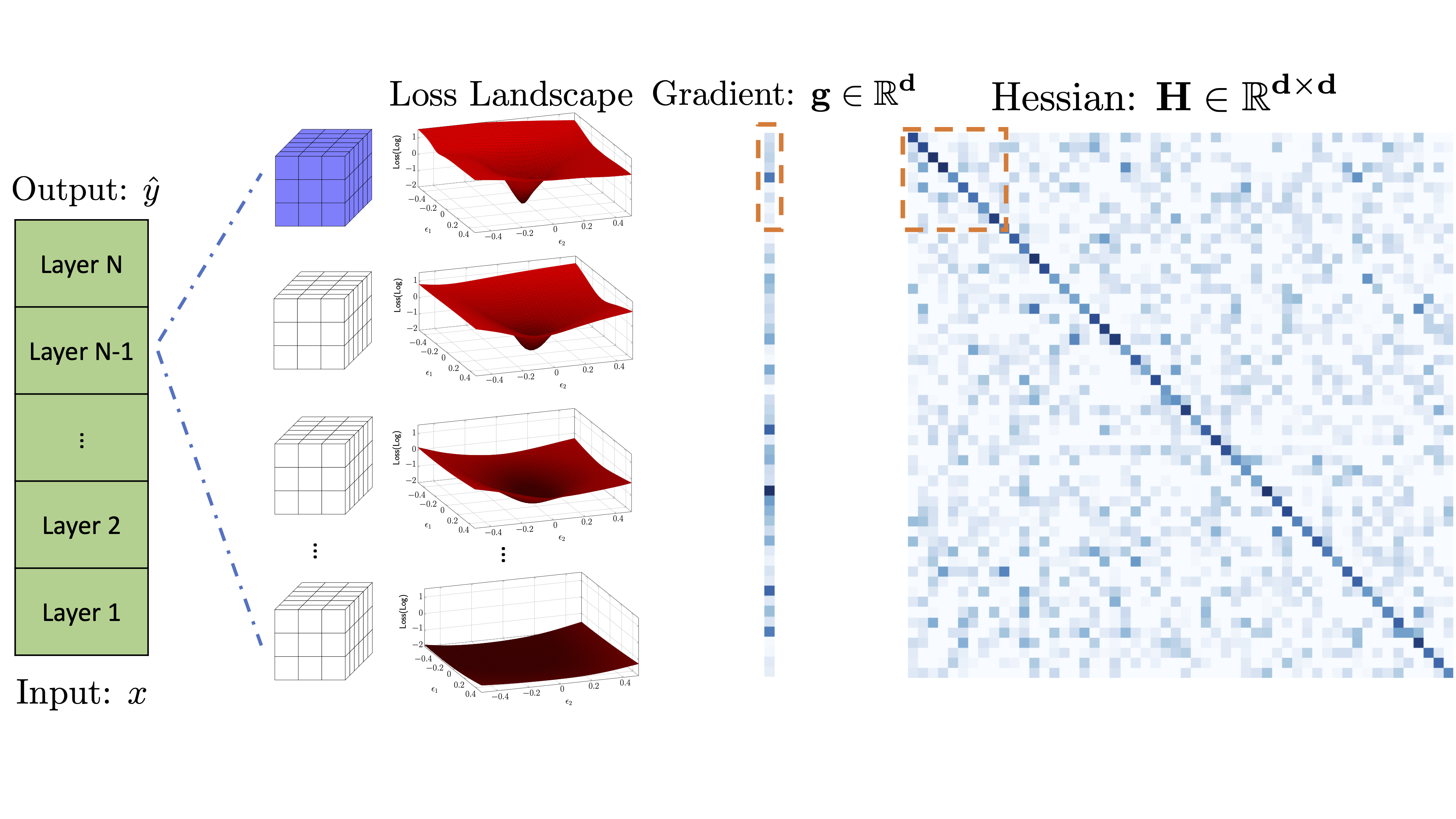

Introduction

AdaHessian is a second-order optimizer for neural network training, implemented in PyTorch. The library supports training convolutional neural networks (image_classification) and transformer-based models (transformer). Our TensorFlow implementation is adahessian_tf.

Please see this paper for more details on the AdaHessian algorithm.

For more details please see:

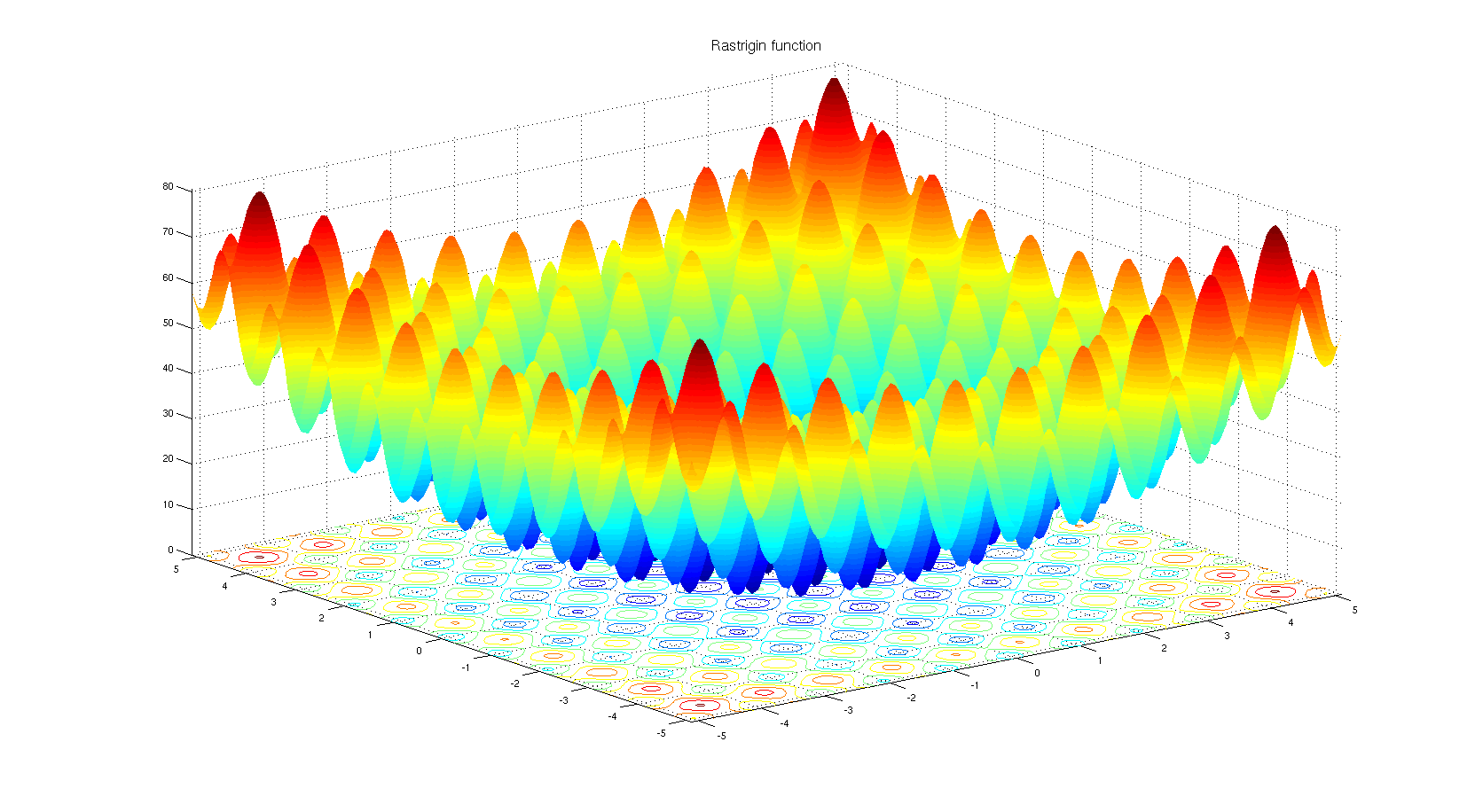

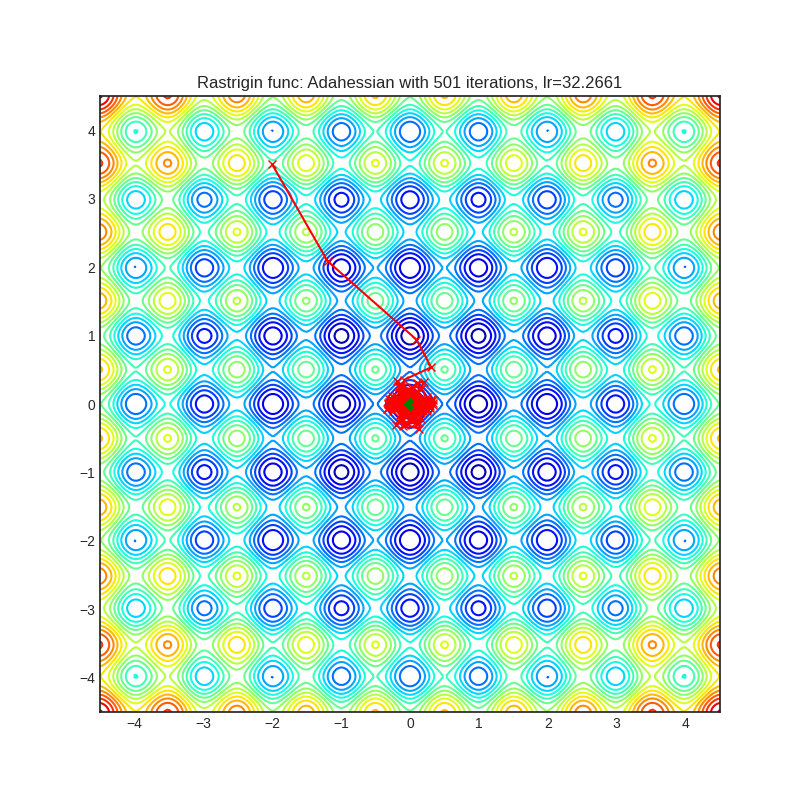

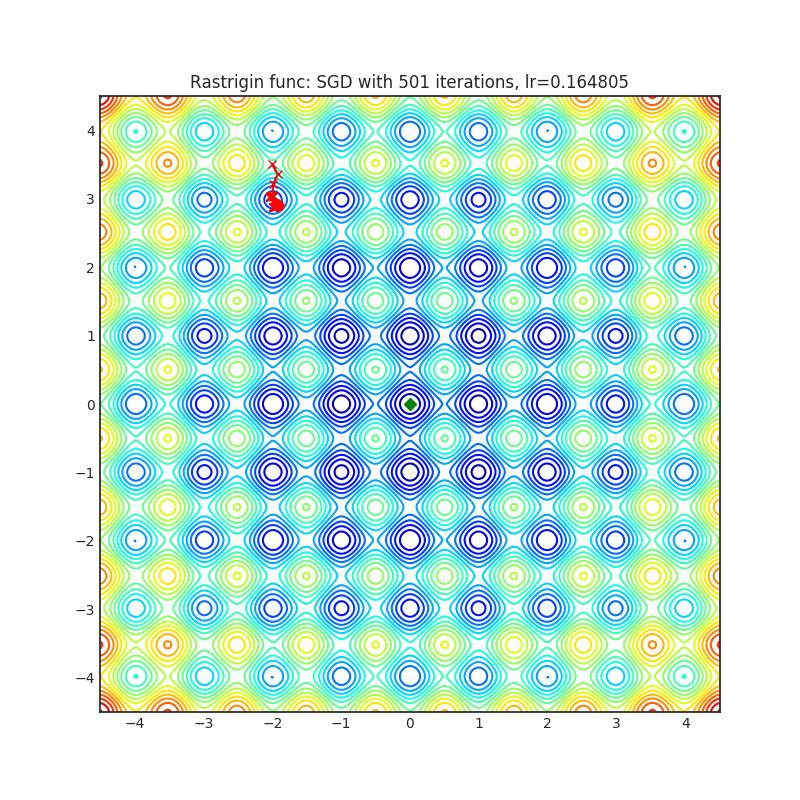

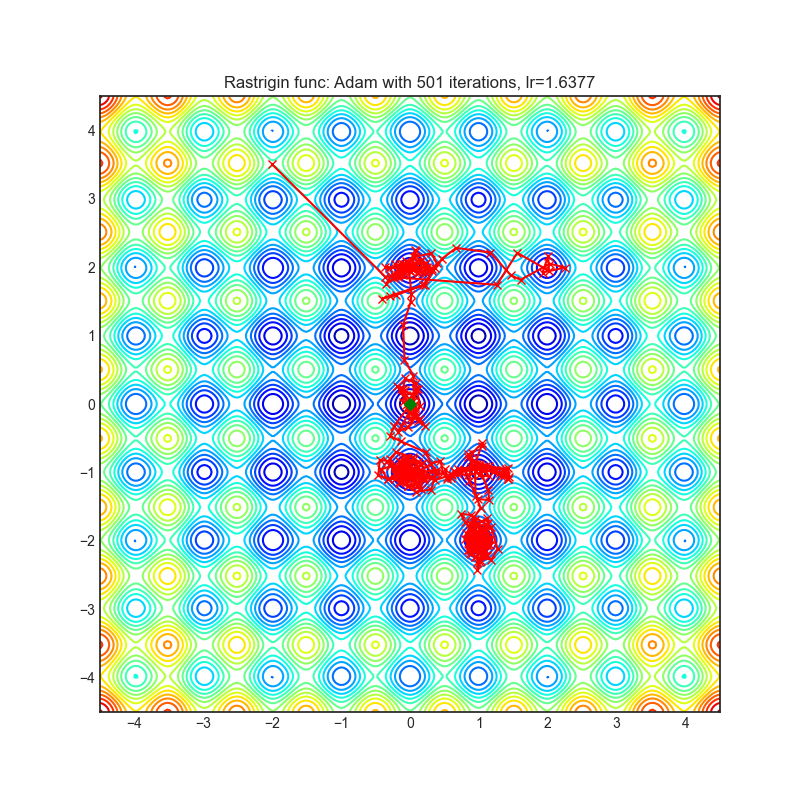

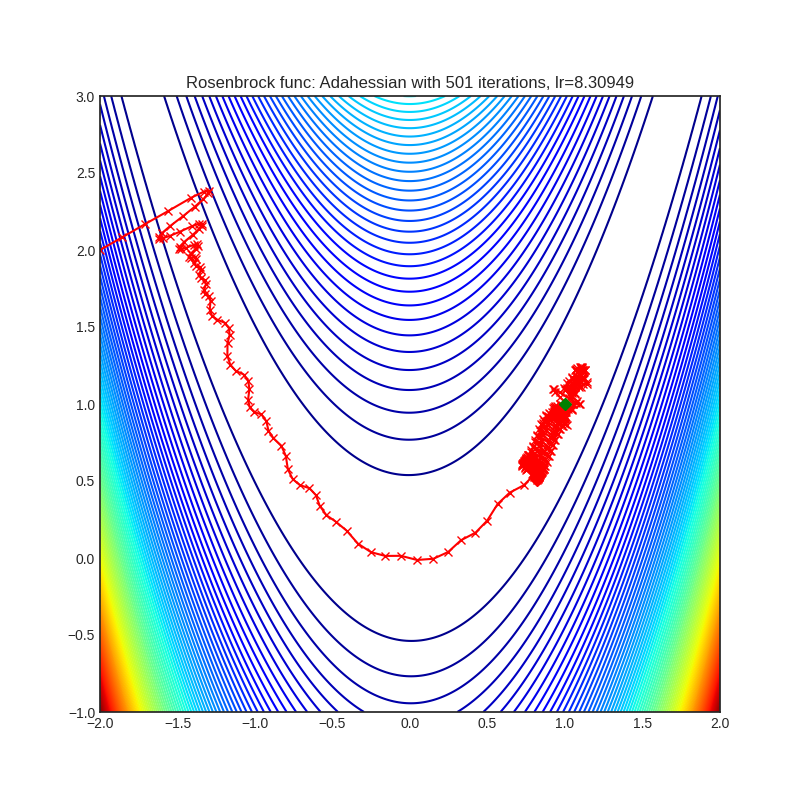

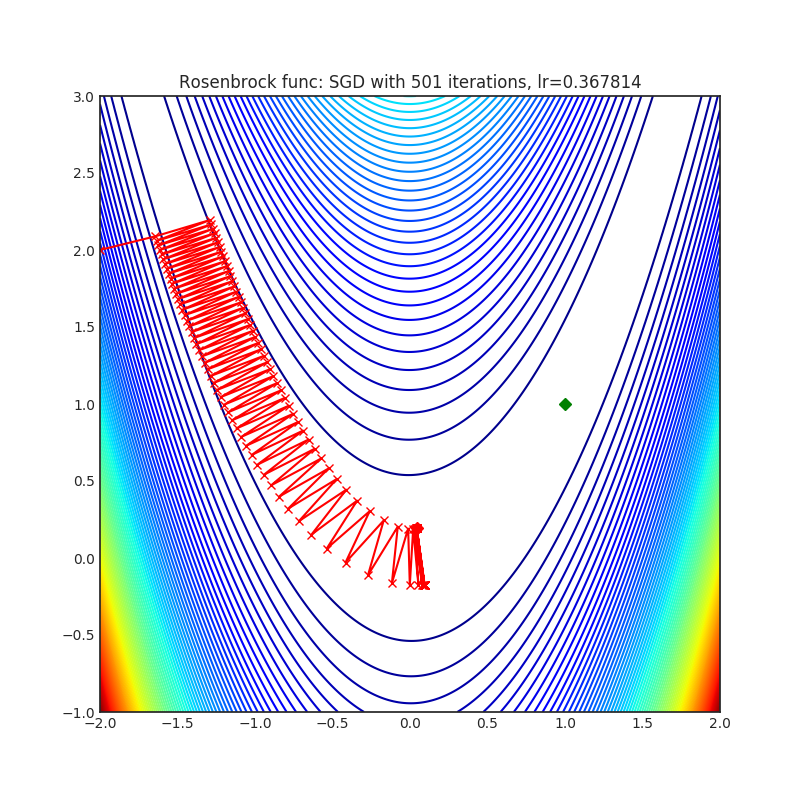

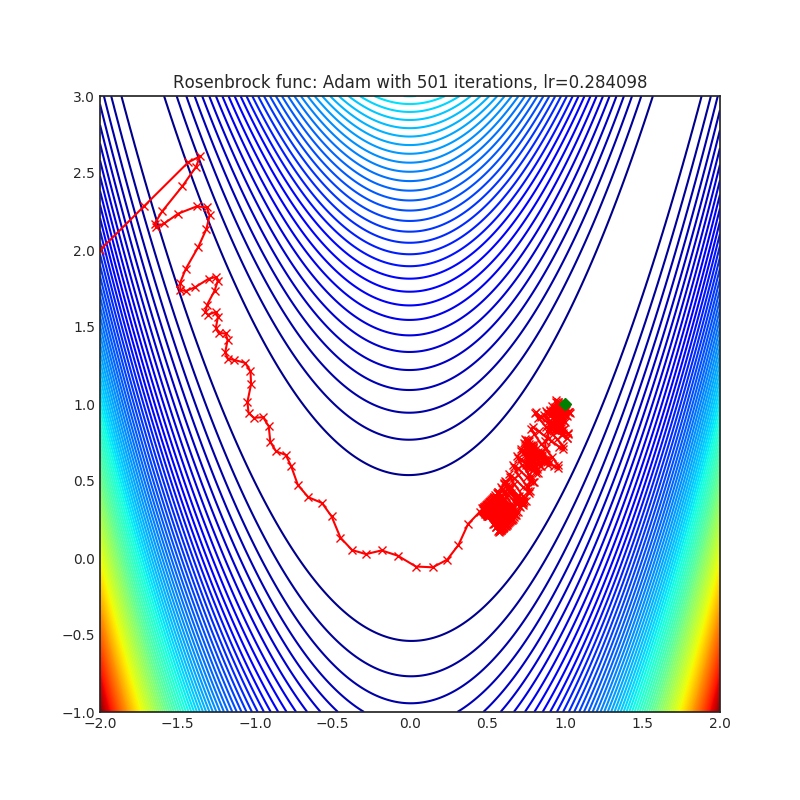

Performance on Rastrigin and Rosenbrock Functions:

The table below shows the convergence of AdaHessian on the Rastrigin and Rosenbrock functions, compared with SGD and ADAM. Please see the pytorch-optimizer repo for comparisons with other optimizers.

| Loss Function | AdaHessian | SGD | ADAM |
|---|---|---|---|

[Convergence plots for the Rastrigin and Rosenbrock functions]
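For readers unfamiliar with the two benchmark functions, here is a minimal sketch of their common two-dimensional forms. The constants (`a=1`, `b=100` for Rosenbrock, `A=10` for Rastrigin) are the usual textbook defaults and are an assumption here; the exact settings used for the plots are not stated in this README.

```python
import math


def rosenbrock(x, y, a=1.0, b=100.0):
    """Rosenbrock function: a narrow curved valley, global minimum 0 at (a, a**2)."""
    return (a - x) ** 2 + b * (y - x ** 2) ** 2


def rastrigin(x, y, A=10.0):
    """2D Rastrigin function: many local minima, global minimum 0 at (0, 0)."""
    return (
        2 * A
        + (x ** 2 - A * math.cos(2 * math.pi * x))
        + (y ** 2 - A * math.cos(2 * math.pi * y))
    )


# Evaluate each function at its global minimum.
print(rosenbrock(1.0, 1.0))
print(rastrigin(0.0, 0.0))
```

Rosenbrock tests how an optimizer handles ill-conditioned curvature, while Rastrigin tests how it copes with many local minima.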

Usage

Please first clone the AdaHessian library to your local system:

```shell
git clone https://github.com/amirgholami/adahessian.git
```

You can import the optimizer as follows:

```python
from optim_adahessian import Adahessian
...
model = YourModel()
optimizer = Adahessian(model.parameters())
...
for inputs, targets in data:
    optimizer.zero_grad()
    loss = loss_function(model(inputs), targets)
    loss.backward(create_graph=True)  # You need this line for Hessian backprop
    optimizer.step()
...
```
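The `create_graph=True` flag keeps the computation graph of the gradient so that second-order quantities can be obtained by a further backward pass. The AdaHessian paper describes estimating the Hessian diagonal with Hutchinson's method, which only needs Hessian-vector products. The sketch below illustrates that estimator in plain Python; the function names and the small explicit matrix `H` are hypothetical and stand in for the Hessian-vector product that the optimizer would obtain through autograd. It is not the library's implementation.

```python
import random


def hutchinson_diagonal(hvp, dim, num_samples=1000, seed=0):
    """Estimate diag(H) as the average of z * (H z) over random +/-1 vectors z."""
    rng = random.Random(seed)
    estimate = [0.0] * dim
    for _ in range(num_samples):
        # Rademacher vector: each entry is +1 or -1 with equal probability.
        z = [rng.choice((-1.0, 1.0)) for _ in range(dim)]
        hz = hvp(z)
        for i in range(dim):
            estimate[i] += z[i] * hz[i] / num_samples
    return estimate


# Hypothetical 2x2 Hessian, used only to define a Hessian-vector product.
H = [[2.0, 1.0], [1.0, 3.0]]


def hvp(v):
    """Return H @ v without the caller ever reading H's diagonal directly."""
    return [sum(H[i][j] * v[j] for j in range(len(v))) for i in range(len(H))]


diag_estimate = hutchinson_diagonal(hvp, dim=2)
print(diag_estimate)  # approaches the true diagonal [2.0, 3.0] as samples grow
```

The off-diagonal contributions cancel in expectation because the entries of `z` are independent with zero mean, so the estimate needs no explicit Hessian.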

Please note that optim_adahessian is in the image_classification folder. We have also adapted the AdaHessian implementation to be compatible with the fairseq repo, which can be used for NLP tasks. That version can be found in the transformer folder.

For different kernel sizes (e.g., matrix, Conv1D, Conv2D, etc.)

We found that it would be helpful to add instructions on how to adapt AdaHessian to your own models and problems. Hence, we have added a prototype version of AdaHessian, along with some useful comments, in the instruction folder.
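One reason the kernel shape matters is that the AdaHessian paper smooths the estimated Hessian diagonal by averaging it over blocks of parameters, for example over the entries of each convolution kernel. The sketch below illustrates block averaging on a flat list. The function name `block_average` and the choice of block size are assumptions for illustration; the prototype in the instruction folder may handle each layer type differently.

```python
def block_average(diag, block_size):
    """Replace every consecutive block of entries with the block's mean."""
    if block_size <= 0 or len(diag) % block_size != 0:
        raise ValueError("len(diag) must be a positive multiple of block_size")
    smoothed = []
    for start in range(0, len(diag), block_size):
        block = diag[start:start + block_size]
        mean = sum(block) / block_size
        # Every parameter in the block shares the same averaged curvature value.
        smoothed.extend([mean] * block_size)
    return smoothed


# Two hypothetical 2x2 kernels, flattened to 4 entries each.
diag = [1.0, 2.0, 3.0, 4.0, 0.0, 0.0, 4.0, 4.0]
print(block_average(diag, block_size=4))
```

For a Conv2D weight, the block would typically be the flattened kernel (height times width), while for a plain matrix a block size of 1 leaves the diagonal unchanged.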

External implementations and discussions

We are thankful to all the researchers who have extended AdaHessian for different purposes or analyzed it. We include the following links in case you are interested in learning more about AdaHessian.

| Description | Link | New Features |
|---|---|---|
| Reddit Discussion | Link | -- |
| Fast.ai Discussion | Link | -- |
| Best-Deep-Learning-Optimizers Code | Link | -- |
| ada-hessian Code | Link | Support Delayed Hessian Update |
| JAX Code | Link | -- |
| AdaHessian Analysis | Link | Analyze AdaHessian on a 2D example |

Citation

AdaHessian was developed as part of the following paper. If you find the library useful for your work, we would appreciate it if you cited the paper:

```
@article{yao2020adahessian,
  title={ADAHESSIAN: An Adaptive Second Order Optimizer for Machine Learning},
  author={Yao, Zhewei and Gholami, Amir and Shen, Sheng and Keutzer, Kurt and Mahoney, Michael W},
  journal={AAAI (Accepted)},
  year={2021}
}
```