theogf / AugmentedGaussianProcesses.jl


AugmentedGaussianProcesses.jl is a Julia package, still in development, for data-augmented sparse Gaussian processes. It contains a collection of models for various Gaussian and non-Gaussian likelihoods, which are transformed via data augmentation into conditionally conjugate likelihoods, allowing extremely fast inference via block coordinate updates. The package also supports more traditional variational inference via quadrature or Monte Carlo integration.

The theory for the augmentation is given in the following paper: Automated Augmented Conjugate Inference for Non-conjugate Gaussian Process Models
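As one concrete illustration of what "conditionally conjugate via data augmentation" means (the Pólya-Gamma augmentation of the logistic likelihood, from the AAAI 19' reference listed below; the general scheme in the paper above covers more likelihoods), the logistic likelihood for a label y ∈ {-1, 1} can be written as a Gaussian mixture over an auxiliary variable ω, using y² = 1:

$$
\sigma(yf) = \frac{e^{yf}}{1 + e^{yf}} = \frac{1}{2}\, e^{yf/2} \int_0^\infty e^{-\omega f^2/2}\, \mathrm{PG}(\omega \mid 1, 0)\, d\omega
$$

Conditioned on ω, the log-likelihood is yf/2 - ωf²/2 plus a constant, which is quadratic in f and therefore conjugate to the Gaussian process prior. This is what makes closed-form block coordinate updates between the posterior over f and the posterior over ω possible.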

You can also use the package in Python via PyJulia!

Models in the package:

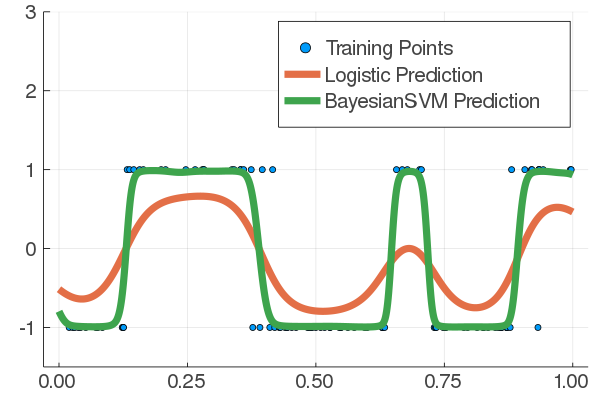

Two GP classification likelihoods

- BayesianSVM: A classifier with a likelihood equivalent to the classic SVM (IJulia example / reference)

- Logistic: A classifier with a Bernoulli likelihood and the logistic link (IJulia example / reference)
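To make the two classification likelihoods above concrete, here is a minimal Base-Julia sketch (not the package's implementation) that evaluates them for labels y ∈ {-1, 1}. It assumes the Bayesian SVM uses the pseudo-likelihood exp(-2 max(1 - y f, 0)), whose negative log is twice the hinge loss of the classic SVM; the function names are hypothetical.

```julia
# Logistic function σ(z) = 1 / (1 + exp(-z))
logistic(z) = 1 / (1 + exp(-z))

# Logistic likelihood p(y | f) = σ(y f) for labels y ∈ {-1, 1}
logistic_likelihood(y, f) = logistic(y * f)

# Assumed Bayesian SVM pseudo-likelihood L(y | f) = exp(-2 max(1 - y f, 0));
# -log L is twice the hinge loss used by the classic SVM
svm_pseudolikelihood(y, f) = exp(-2 * max(1 - y * f, 0))

f = 0.5  # a latent GP value at some input
for y in (-1, 1)
    println("y = $y: logistic = ", logistic_likelihood(y, f),
            ", SVM = ", svm_pseudolikelihood(y, f))
end
```

Note that the SVM pseudo-likelihood equals 1 as soon as the margin y f reaches 1, while the logistic likelihood keeps increasing towards 1.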

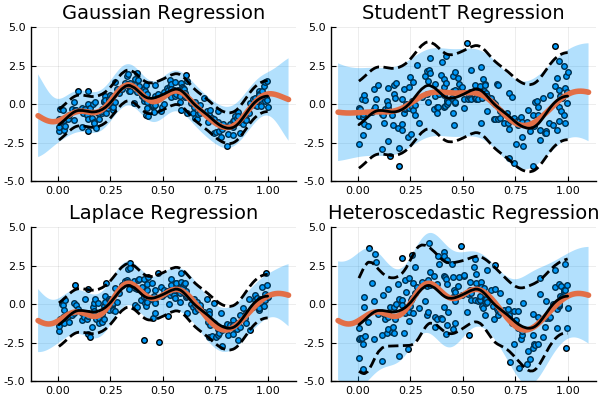

Four GP regression likelihoods

- Gaussian: The standard Gaussian process regression model with a Gaussian likelihood (no data augmentation is needed here) (IJulia example / reference)

- StudentT: Gaussian process regression with a Student-t likelihood (the degrees of freedom ν cannot be optimized for the moment) (IJulia example / reference)

- Laplace: Gaussian process regression with a Laplace likelihood (IJulia example; no reference at the moment)

- Heteroscedastic: Regression with non-stationary noise, modeled by an additional GP (no reference at the moment)
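The practical difference between the Gaussian and the heavier-tailed likelihoods listed above is how strongly they penalize large residuals. The minimal Base-Julia sketch below (hypothetical helper names, unit noise scales chosen for illustration) compares Gaussian and Laplace log-likelihoods; the Student-t case is left out only because its normalizing constant needs the gamma function, which is not in Base Julia.

```julia
# Gaussian log-likelihood log N(y | f, σ²)
gaussian_loglik(y, f; σ² = 1.0) = -0.5 * log(2π * σ²) - (y - f)^2 / (2σ²)

# Laplace log-likelihood log p(y | f) = -log(2β) - |y - f| / β
laplace_loglik(y, f; β = 1.0) = -log(2β) - abs(y - f) / β

# The Gaussian penalty grows quadratically with the residual,
# the Laplace penalty only linearly, so outliers hurt less
for r in (0.0, 1.0, 5.0)
    println("residual = $r: Gaussian = ", gaussian_loglik(r, 0.0),
            ", Laplace = ", laplace_loglik(r, 0.0))
end
```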

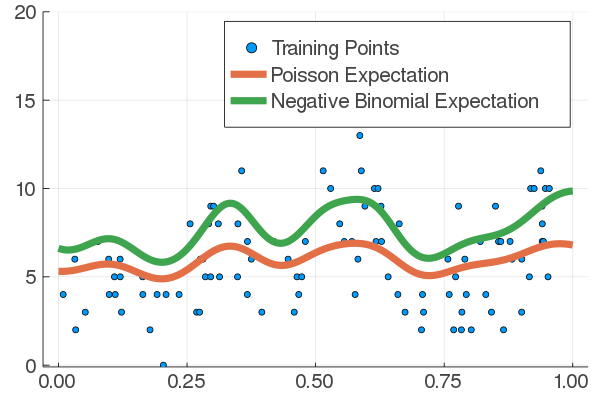

Two GP event counting likelihoods

- Discrete Poisson Process: Estimating the Poisson parameter λ at every point (as λ₀σ(f)) (no reference at the moment)

- Negative Binomial: Estimating the success probability at every point for a negative binomial distribution (no reference at the moment)
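A minimal Base-Julia sketch of the two counting likelihoods above, linking a latent GP value f to the count distributions through the logistic function σ. The Poisson rate follows the λ₀σ(f) form given in the list; for the negative binomial, the convention p(y) = C(y + r - 1, y) p^y (1 - p)^r with success probability p = σ(f) and an integer r is an assumption, and all names are hypothetical.

```julia
logistic(z) = 1 / (1 + exp(-z))

# log(y!) for a non-negative integer y, using only Base
logfactorial(y::Integer) = y <= 1 ? 0.0 : sum(log, 2:y)

# Poisson log-likelihood with rate λ = λ₀ σ(f)
function poisson_loglik(y::Integer, f; λ₀ = 1.0)
    λ = λ₀ * logistic(f)
    return y * log(λ) - λ - logfactorial(y)
end

# Negative binomial log-likelihood with success probability p = σ(f),
# assuming p(y) = C(y + r - 1, y) p^y (1 - p)^r for an integer r
function negbinomial_loglik(y::Integer, f; r::Integer = 3)
    p = logistic(f)
    return log(binomial(y + r - 1, y)) + y * log(p) + r * log(1 - p)
end

# Sanity check: each probability mass function sums to (almost) 1
println(sum(exp(poisson_loglik(y, 0.0; λ₀ = 4.0)) for y in 0:100))
println(sum(exp(negbinomial_loglik(y, 0.0; r = 3)) for y in 0:200))
```

Because σ(f) lies in (0, 1), the Poisson rate is bounded by λ₀, which is why λ₀ appears as a separate parameter.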

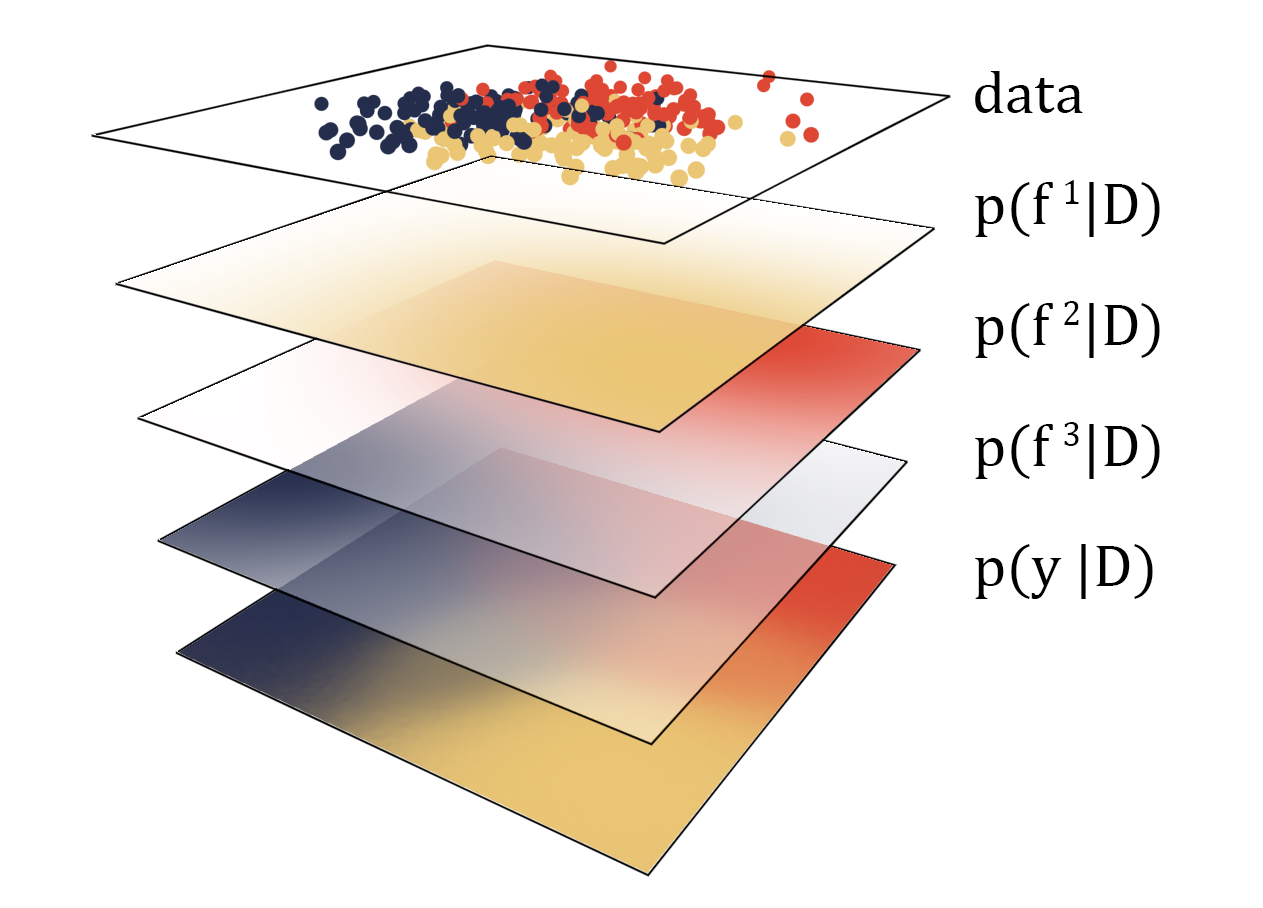

One multi-class classification likelihood

- Logistic-SoftMax: A modified version of the softmax where the exponential is replaced by the logistic function (IJulia example / reference)

Multi-output models

- It is also possible to create a multi-output model where the outputs are a linear combination of inducing variables (IJulia example in preparation; see the NeurIPS 18' reference below)
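The logistic-softmax replaces exp(f_k) in the softmax with σ(f_k), giving p(y = k | f) = σ(f_k) / Σ_j σ(f_j), where f holds one latent GP value per class at a given input. A minimal Base-Julia sketch comparing it with the standard softmax (hypothetical function names, not the package API):

```julia
logistic(z) = 1 / (1 + exp(-z))

# Logistic-softmax: p(y = k | f) = σ(f_k) / Σ_j σ(f_j)
logistic_softmax(f::AbstractVector) = logistic.(f) ./ sum(logistic, f)

# Standard softmax for comparison: p(y = k | f) = exp(f_k) / Σ_j exp(f_j)
softmax(f::AbstractVector) = exp.(f) ./ sum(exp, f)

f = [2.0, 0.0, -2.0]  # latent values for three classes
println("logistic-softmax: ", logistic_softmax(f))
println("softmax:          ", softmax(f))
```

Both outputs are valid probability vectors with the same ordering of classes; for this input the logistic-softmax assigns less mass to the top class than the softmax does.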
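A minimal sketch of the linear-mixing idea behind multi-output GPs, using only Julia's standard library: Q independent latent GP samples are combined through a T x Q weight matrix into T correlated outputs. This mixes whole latent function samples, whereas the package combines inducing variables, so treat it as an illustration of the general idea only; the kernel helper, grid, and random mixing weights are hypothetical.

```julia
using LinearAlgebra, Random

Random.seed!(42)

# Squared-exponential kernel k(x, x') = exp(-(x - x')² / (2ℓ²))
sekernel(x, y; ℓ = 1.0) = exp(-(x - y)^2 / (2ℓ^2))

x = range(0, 5; length = 50)
K = [sekernel(a, b) for a in x, b in x] + 1e-6 * I  # jitter for numerical stability
L = cholesky(Symmetric(K)).L

Q, T = 2, 3                   # Q latent GPs mixed into T outputs
U = L * randn(length(x), Q)   # each column is one latent GP sample
A = randn(T, Q)               # hypothetical mixing weights
F = U * A'                    # column t is output t: Σ_q A[t, q] * U[:, q]

println(size(F))
```

Because every output is built from the same latent functions, the outputs are correlated, which is what lets a multi-output model share information between them.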

More models in development

- Probit: A classifier with a Bernoulli likelihood and the probit link

- Online: Allowing all algorithms to work online as well

Install the package

The package requires at least Julia 1.3

Run Julia, press ] to enter the package manager, and type add AugmentedGaussianProcesses; this installs the package and all its dependencies.

Use the package

Complete documentation is available in the docs. For a quick start, you can use this very basic example, where X_train is an N x D matrix (N training points, D dimensions) and Y_train is a vector of outputs (or a matrix of independent outputs).

```julia
using AugmentedGaussianProcesses
using KernelFunctions

# X_train: N x D input matrix, Y_train: vector of N binary labels
# Sparse variational GP with a squared-exponential kernel, a logistic likelihood,
# analytic stochastic variational inference on mini-batches of 100 points,
# and 64 inducing points
model = SVGP(X_train, Y_train, SqExponentialKernel(), LogisticLikelihood(), AnalyticSVI(100), 64)

train!(model, 100) # run 100 training iterations

Y_predic = predict_y(model, X_test) # predicted labels directly

Y_predic_prob, Y_predic_prob_var = proba_y(model, X_test) # probability of class 1 and its variance
```

Both documentation and examples/tutorials are available.

References:

Check out my website for more news

"Gaussian Processes for Machine Learning" by Carl Edward Rasmussen and Christopher K.I. Williams

AISTATS 20' "Automated Augmented Conjugate Inference for Non-conjugate Gaussian Process Models" by Théo Galy-Fajou, Florian Wenzel and Manfred Opper https://arxiv.org/abs/2002.11451

UAI 19' "Multi-Class Gaussian Process Classification Made Conjugate: Efficient Inference via Data Augmentation" by Théo Galy-Fajou, Florian Wenzel, Christian Donner and Manfred Opper https://arxiv.org/abs/1905.09670

ECML 17' "Bayesian Nonlinear Support Vector Machines for Big Data" by Florian Wenzel, Théo Galy-Fajou, Matthäus Deutsch and Marius Kloft. https://arxiv.org/abs/1707.05532

AAAI 19' "Efficient Gaussian Process Classification using Polya-Gamma Variables" by Florian Wenzel, Théo Galy-Fajou, Christian Donner, Marius Kloft and Manfred Opper. https://arxiv.org/abs/1802.06383

NeurIPS 18' "Heterogeneous multi-output Gaussian process prediction" by Pablo Moreno-Muñoz, Antonio Artés and Mauricio Álvarez https://papers.nips.cc/paper/7905-heterogeneous-multi-output-gaussian-process-prediction

UAI 13' "Gaussian Processes for Big Data" by James Hensman, Nicolo Fusi and Neil D. Lawrence https://arxiv.org/abs/1309.6835

JMLR 11' "Robust Gaussian process regression with a Student-t likelihood" by Pasi Jylänki, Jarno Vanhatalo and Aki Vehtari http://www.jmlr.org/papers/v12/jylanki11a.html