Benchmark repository for optimization

Benchopt is a benchmarking suite for optimization algorithms, built for simplicity, transparency, and reproducibility.

Benchopt is implemented in Python and can run algorithms written in many programming languages (see the example). So far, Benchopt has been tested with Python, R, Julia, and C/C++ (compiled binaries with a command-line interface). Programs available via conda should also be compatible.

Benchopt is run through a command-line interface, as described in the API documentation. Replicating an optimization benchmark should be as simple as running:

conda create -n benchopt python
conda activate benchopt
pip install benchopt
git clone https://github.com/benchopt/benchmark_logreg_l2
cd benchmark_logreg_l2
benchopt install -e . -s lightning -s sklearn
benchopt run -e . --config ./config_example.yml

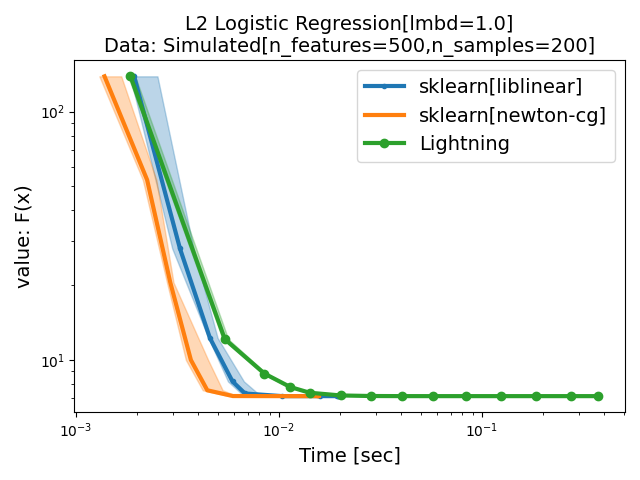

Running this command will give you a benchmark plot on l2-regularized logistic regression:

See the Available optimization problems section below.
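The plot above compares solvers by how quickly they decrease the objective. To make that concrete, here is a toy, self-contained sketch in plain Python (it does not use benchopt) that reruns a hypothetical gradient-descent solver with increasing iteration budgets on a tiny l2-regularized logistic regression problem and records (iterations, time, objective) for each run, roughly the kind of data such a plot displays. It assumes the objective sum_i log(1 + exp(-y_i x_i^T w)) + (lmbd / 2) ||w||^2 with labels in {-1, +1}; the actual benchmark's scaling may differ, and every function name below is hypothetical.

```python
import math
import time


def log1p_exp_neg(m):
    """Numerically stable log(1 + exp(-m))."""
    if m >= 0:
        return math.log1p(math.exp(-m))
    return -m + math.log1p(math.exp(m))


def sigmoid_neg(m):
    """Numerically stable 1 / (1 + exp(m))."""
    if m >= 0:
        e = math.exp(-m)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(m))


def margins(w, X, y):
    # m_i = y_i * <x_i, w> for every sample i
    return [yi * sum(wj * xij for wj, xij in zip(w, xi)) for xi, yi in zip(X, y)]


def logreg_l2_objective(w, X, y, lmbd):
    # Assumed objective: sum of logistic losses plus (lmbd / 2) * ||w||^2
    loss = sum(log1p_exp_neg(m) for m in margins(w, X, y))
    return loss + 0.5 * lmbd * sum(wj * wj for wj in w)


def logreg_l2_gradient(w, X, y, lmbd):
    grad = [lmbd * wj for wj in w]
    for xi, yi, m in zip(X, y, margins(w, X, y)):
        # Gradient of log(1 + exp(-m_i)) w.r.t. w is -y_i * x_i / (1 + exp(m_i))
        coef = -yi * sigmoid_neg(m)
        for j, xij in enumerate(xi):
            grad[j] += coef * xij
    return grad


def gradient_descent(X, y, lmbd, step, n_iter):
    w = [0.0] * len(X[0])  # always start from w = 0
    for _ in range(n_iter):
        g = logreg_l2_gradient(w, X, y, lmbd)
        w = [wj - step * gj for wj, gj in zip(w, g)]
    return w


def run_toy_benchmark(solvers, X, y, lmbd, budgets):
    """Return {solver_name: [(n_iter, seconds, objective), ...]}."""
    curves = {}
    for name, solver in solvers.items():
        curve = []
        for n_iter in budgets:
            start = time.perf_counter()
            w = solver(X, y, lmbd, n_iter)  # rerun from scratch with a larger budget
            elapsed = time.perf_counter() - start
            curve.append((n_iter, elapsed, logreg_l2_objective(w, X, y, lmbd)))
        curves[name] = curve
    return curves


# Tiny illustrative dataset, labels in {-1, +1}.
X = [[1.0, 2.0], [-1.0, 0.5], [0.3, -1.2], [2.0, 1.0]]
y = [1, -1, -1, 1]
solvers = {
    "gd_step_0.1": lambda X, y, lmbd, n: gradient_descent(X, y, lmbd, 0.1, n),
    "gd_step_0.01": lambda X, y, lmbd, n: gradient_descent(X, y, lmbd, 0.01, n),
}
curves = run_toy_benchmark(solvers, X, y, lmbd=0.1, budgets=[0, 1, 10, 100])
for name, curve in curves.items():
    for n_iter, seconds, obj in curve:
        print(f"{name:13s} n_iter={n_iter:4d} time={seconds:.2e}s objective={obj:.4f}")
```

A real benchmark compares genuinely different solvers (such as the lightning and sklearn solvers installed above) on real datasets, and benchopt takes care of this bookkeeping and the plotting.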

Learn how to create a new benchmark using the benchmark template.

Install

The command-line tool used to run the benchmarks can be installed with pip. To let benchopt automatically install solver dependencies, the installation must be done in a conda environment:

conda create -n benchopt python
conda activate benchopt
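Because automatic installation of solver dependencies relies on conda, it can help to confirm that the active Python interpreter really lives in a conda environment before installing benchopt. The sketch below uses a common heuristic (conda environments contain a `conda-meta` directory under their prefix); it is not part of benchopt, and `in_conda_env` is a hypothetical helper name.

```python
import os
import sys


def in_conda_env(prefix=sys.prefix):
    """Heuristic check: every conda environment has a 'conda-meta' directory at its prefix."""
    return os.path.isdir(os.path.join(prefix, "conda-meta"))


if __name__ == "__main__":
    if in_conda_env():
        print(f"Running inside a conda environment: {sys.prefix}")
    else:
        print("Not in a conda environment: benchopt may not be able to "
              "install solver dependencies automatically.")
```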

To get the latest release, use:

pip install benchopt

To get the latest development version, use:

pip install -U -i https://test.pypi.org/simple/ benchopt

Then, existing benchmarks can be retrieved from git or created locally. For instance, the benchmark for Lasso can be retrieved with:

git clone https://github.com/benchopt/benchmark_lasso

Command line interface

The preferred way to run the benchmarks is through the command line interface. To run the Lasso benchmark on all datasets and with all solvers, run:

benchopt run --env ./benchmark_lasso

To get more details about the different options, run:

benchopt run -h

or read the CLI documentation.

Benchopt also provides a Python API described in the API documentation.
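The Python API is the documented route for scripting. If you only want to launch the CLI commands shown above from a Python script, a thin `subprocess` wrapper is enough. This sketch deliberately does not use benchopt's Python API: `build_run_command` and `run_via_cli` are hypothetical helpers, and they only emit the `run`, `--env`, and `--config` options that appear in this README.

```python
import shutil
import subprocess


def build_run_command(benchmark_dir, config=None, use_env=True):
    """Assemble a `benchopt run` command line from the options shown in this README."""
    cmd = ["benchopt", "run"]
    if use_env:
        cmd.append("--env")  # run inside the benchmark's conda environment
    cmd.append(benchmark_dir)
    if config is not None:
        cmd += ["--config", config]
    return cmd


def run_via_cli(benchmark_dir, config=None, use_env=True):
    """Run the command; raises CalledProcessError if benchopt exits with an error."""
    if shutil.which("benchopt") is None:
        raise FileNotFoundError("the `benchopt` executable is not on PATH")
    cmd = build_run_command(benchmark_dir, config=config, use_env=use_env)
    return subprocess.run(cmd, check=True)
```

For example, `run_via_cli("./benchmark_lasso")` runs the same command as `benchopt run --env ./benchmark_lasso`.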

Available optimization problems

Citing Benchopt

If you use Benchopt in a scientific publication, please cite the following paper:

@article{benchopt,
  author = {Moreau, Thomas and Massias, Mathurin and Gramfort, Alexandre and Ablin, Pierre
            and Bannier, Pierre-Antoine and Charlier, Benjamin and Dagréou, Mathieu and Dupré la Tour, Tom
            and Durif, Ghislain and F. Dantas, Cassio and Klopfenstein, Quentin
            and Larsson, Johan and Lai, En and Lefort, Tanguy and Malézieux, Benoit
            and Moufad, Badr and T. Nguyen, Binh and Rakotomamonjy, Alain and Ramzi, Zaccharie
            and Salmon, Joseph and Vaiter, Samuel},
  title = {Benchopt: Reproducible, efficient and collaborative optimization benchmarks},
  year = {2022},
  url = {https://arxiv.org/abs/2206.13424}
}