lucidrains / Bottleneck Transformer Pytorch

Licence: mit

Implementation of Bottleneck Transformer in Pytorch

Stars: ✭ 408

Programming Languages

python

Projects that are alternatives of or similar to Bottleneck Transformer Pytorch

Global Self Attention Network

A Pytorch implementation of Global Self-Attention Network, a fully-attention backbone for vision tasks

Stars: ✭ 64 (-84.31%)

Mutual labels: artificial-intelligence, image-classification, attention-mechanism

Vit Pytorch

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch

Stars: ✭ 7,199 (+1664.46%)

Mutual labels: artificial-intelligence, image-classification, attention-mechanism

Caer

High-performance Vision library in Python. Scale your research, not boilerplate.

Stars: ✭ 452 (+10.78%)

Mutual labels: artificial-intelligence, image-classification, vision

FNet-pytorch

Unofficial implementation of Google's FNet: Mixing Tokens with Fourier Transforms

Stars: ✭ 204 (-50%)

Mutual labels: vision, image-classification

Arc Robot Vision

MIT-Princeton Vision Toolbox for Robotic Pick-and-Place at the Amazon Robotics Challenge 2017 - Robotic Grasping and One-shot Recognition of Novel Objects with Deep Learning.

Stars: ✭ 224 (-45.1%)

Mutual labels: artificial-intelligence, vision

Self Attention Cv

Implementation of various self-attention mechanisms focused on computer vision. Ongoing repository.

Stars: ✭ 209 (-48.77%)

Mutual labels: artificial-intelligence, attention-mechanism

Linear Attention Transformer

Transformer based on a variant of attention that has linear complexity with respect to sequence length

Stars: ✭ 205 (-49.75%)

Mutual labels: artificial-intelligence, attention-mechanism

Home Platform

HoME: a Household Multimodal Environment is a platform for artificial agents to learn from vision, audio, semantics, physics, and interaction with objects and other agents, all within a realistic context.

Stars: ✭ 370 (-9.31%)

Mutual labels: artificial-intelligence, vision

halonet-pytorch

Implementation of the 😇 Attention layer from the paper, Scaling Local Self-Attention For Parameter Efficient Visual Backbones

Stars: ✭ 181 (-55.64%)

Mutual labels: vision, attention-mechanism

Transformer-in-Transformer

An Implementation of Transformer in Transformer in TensorFlow for image classification, attention inside local patches

Stars: ✭ 40 (-90.2%)

Mutual labels: image-classification, attention-mechanism

Timesformer Pytorch

Implementation of TimeSformer from Facebook AI, a pure attention-based solution for video classification

Stars: ✭ 225 (-44.85%)

Mutual labels: artificial-intelligence, attention-mechanism

Alphafold2

To eventually become an unofficial Pytorch implementation / replication of Alphafold2, as details of the architecture get released

Stars: ✭ 298 (-26.96%)

Mutual labels: artificial-intelligence, attention-mechanism

X Transformers

A simple but complete full-attention transformer with a set of promising experimental features from various papers

Stars: ✭ 211 (-48.28%)

Mutual labels: artificial-intelligence, attention-mechanism

Dalle Pytorch

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

Stars: ✭ 3,661 (+797.3%)

Mutual labels: artificial-intelligence, attention-mechanism

Linformer Pytorch

My take on a practical implementation of Linformer for Pytorch.

Stars: ✭ 239 (-41.42%)

Mutual labels: artificial-intelligence, attention-mechanism

Transfer Learning Suite

Transfer Learning Suite in Keras. Perform transfer learning using any built-in Keras image classification model easily!

Stars: ✭ 212 (-48.04%)

Mutual labels: artificial-intelligence, image-classification

visualization

a collection of visualization functions

Stars: ✭ 189 (-53.68%)

Mutual labels: vision, attention-mechanism

Artificio

Deep Learning Computer Vision Algorithms for Real-World Use

Stars: ✭ 326 (-20.1%)

Mutual labels: artificial-intelligence, image-classification

Deep Learning With Python

Deep learning codes and projects using Python

Stars: ✭ 195 (-52.21%)

Mutual labels: artificial-intelligence, image-classification

Point Transformer Pytorch

Implementation of the Point Transformer layer, in Pytorch

Stars: ✭ 199 (-51.23%)

Mutual labels: artificial-intelligence, attention-mechanism

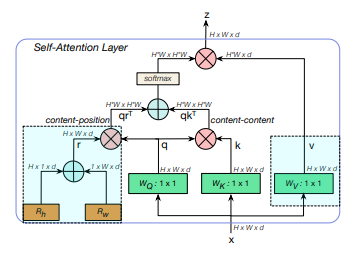

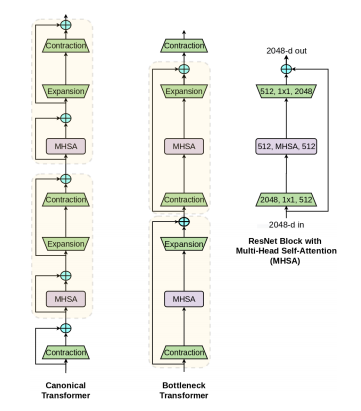

Bottleneck Transformer - Pytorch

Implementation of Bottleneck Transformer, a SotA visual recognition model that combines convolution and attention and outperforms EfficientNet and DeiT on the performance-compute trade-off, in Pytorch
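According to the paper cited below, a BoTNet is a ResNet whose spatial 3x3 convolutions in the final three bottleneck blocks are replaced by global multi-head self-attention. To illustrate what "global" means here, the following pure-Python sketch runs single-head scaled dot-product attention over every position of a tiny H x W feature map. It leaves out multiple heads, positional embeddings, normalization and the 1x1 convolutions, uses hypothetical random projection matrices, and is not the library's code.

```python
import math
import random


def softmax(xs):
    # subtract the max before exponentiating for numerical stability
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, x):
    # w is a d_out x d_in matrix (list of rows), x a vector of length d_in
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def global_self_attention(fmap, wq, wk, wv):
    """Single-head scaled dot-product self-attention over all positions of fmap.

    fmap: H x W grid of channel vectors (lists of length C).
    wq, wk, wv: hypothetical projection matrices, each d x C.
    Returns (outputs, weights): H*W output vectors of length d, and for each
    position its attention distribution over all H*W positions.
    """
    tokens = [vec for row in fmap for vec in row]  # flatten H x W -> H*W tokens
    q = [matvec(wq, t) for t in tokens]
    k = [matvec(wk, t) for t in tokens]
    v = [matvec(wv, t) for t in tokens]
    scale = 1.0 / math.sqrt(len(q[0]))
    outputs, weights = [], []
    for qi in q:
        # every query scores every key: a global receptive field in one layer
        scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]
        attn = softmax(scores)
        weights.append(attn)
        # output = attention-weighted average of the value vectors
        outputs.append([sum(a * vj[c] for a, vj in zip(attn, v))
                        for c in range(len(v[0]))])
    return outputs, weights


random.seed(0)
H, W, C, D = 4, 4, 8, 6
fmap = [[[random.gauss(0, 1) for _ in range(C)] for _ in range(W)] for _ in range(H)]
wq, wk, wv = ([[random.gauss(0, 0.1) for _ in range(C)] for _ in range(D)]
              for _ in range(3))
out, attn = global_self_attention(fmap, wq, wk, wv)
print(len(out), len(out[0]))  # 16 6
```

Each of the 16 positions produces one output vector mixing information from all 16 positions. A 3x3 convolution at the same layer would only see its immediate neighbours.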

Install

$ pip install bottleneck-transformer-pytorch

Usage

import torch
from torch import nn
from bottleneck_transformer_pytorch import BottleStack

layer = BottleStack(
    dim = 256,              # channels in
    fmap_size = 64,         # feature map size
    dim_out = 2048,         # channels out
    proj_factor = 4,        # projection factor
    downsample = True,      # downsample on first layer or not
    heads = 4,              # number of heads
    dim_head = 128,         # dimension per head, defaults to 128
    rel_pos_emb = False,    # use relative positional embedding - uses absolute if False
    activation = nn.ReLU()  # activation throughout the network
)

fmap = torch.randn(2, 256, 64, 64) # feature map from previous resnet block(s)
layer(fmap) # (2, 2048, 32, 32)
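The arithmetic behind the shapes in the usage example above can be sketched with a small helper. `bottlestack_shapes` is hypothetical and not part of `bottleneck_transformer_pytorch`. It assumes a ResNet-style bottleneck: a 1x1 conv reduces to `dim_out // proj_factor` channels, attention uses `heads * dim_head` inner channels, a 1x1 conv expands back to `dim_out`, and the first layer attends at full resolution before halving the spatial size when `downsample = True`.

```python
def bottlestack_shapes(batch, dim, fmap_size, dim_out=2048, proj_factor=4,
                       heads=4, dim_head=128, downsample=True):
    """Hypothetical helper (not part of bottleneck_transformer_pytorch).

    Assumes a ResNet-style bottleneck (1x1 reduce to dim_out // proj_factor,
    attention with heads * dim_head inner channels, 1x1 expand to dim_out) and
    a single stride-2 downsample in the first layer when downsample=True.
    """
    tokens = fmap_size * fmap_size  # positions the first layer attends over
    out_size = fmap_size // 2 if downsample else fmap_size
    return {
        "input_shape": (batch, dim, fmap_size, fmap_size),
        "bottleneck_width": dim_out // proj_factor,
        "attn_inner_width": heads * dim_head,
        "tokens": tokens,
        "attn_scores_per_head": tokens * tokens,  # one score per (query, key) pair
        "output_shape": (batch, dim_out, out_size, out_size),
    }


shapes = bottlestack_shapes(batch=2, dim=256, fmap_size=64)
print(shapes["output_shape"])          # (2, 2048, 32, 32)
print(shapes["attn_scores_per_head"])  # 16777216
```

The score count grows with the fourth power of `fmap_size`. This is consistent with the paper placing the attention blocks in the last, lowest-resolution stage of the ResNet.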

BotNet

With some simple model surgery on a ResNet, you can get the 'BotNet' (what a weird name) for training.
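The surgery keeps the first five children of torchvision's `resnet50` (`conv1`, `bn1`, `relu`, `maxpool`, `layer1`). It then replaces `layer2` through `fc` with a single BottleStack followed by a new pooling and classification head. The pure-Python sketch below hardcodes the standard ResNet-50 stem hyperparameters and traces a 224 x 224 input through those five children. This shows why `dim = 256` and `fmap_size = 56` are the right settings; the helper names are hypothetical.

```python
# Order of torchvision's resnet50().children(); the surgery keeps the first five.
RESNET50_CHILDREN = ["conv1", "bn1", "relu", "maxpool", "layer1",
                     "layer2", "layer3", "layer4", "avgpool", "fc"]


def conv_out(size, kernel, stride, padding):
    # output size of a convolution or pooling window along one spatial axis
    return (size + 2 * padding - kernel) // stride + 1


def stem_output(img_size=224):
    """Return (channels, spatial size) after RESNET50_CHILDREN[:5]."""
    channels, size = 3, img_size
    for name in RESNET50_CHILDREN[:5]:
        if name == "conv1":      # 7x7 conv, stride 2, padding 3, 64 output channels
            channels, size = 64, conv_out(size, 7, 2, 3)
        elif name == "maxpool":  # 3x3 max pool, stride 2, padding 1
            size = conv_out(size, 3, 2, 1)
        elif name == "layer1":   # 3 bottleneck blocks at stride 1, 64 * 4 = 256 channels
            channels = 256
        # bn1 and relu leave the shape unchanged
    return channels, size


print(stem_output(224))  # (256, 56)
```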

import torch
from torch import nn
from torchvision.models import resnet50
from bottleneck_transformer_pytorch import BottleStack

layer = BottleStack(
    dim = 256,
    fmap_size = 56,        # set specifically for imagenet's 224 x 224
    dim_out = 2048,
    proj_factor = 4,
    downsample = True,
    heads = 4,
    dim_head = 128,
    rel_pos_emb = True,
    activation = nn.ReLU()
)

resnet = resnet50()

# model surgery
backbone = list(resnet.children())

model = nn.Sequential(
    *backbone[:5],
    layer,
    nn.AdaptiveAvgPool2d((1, 1)),
    nn.Flatten(1),
    nn.Linear(2048, 1000)
)

# use the 'BotNet'
img = torch.randn(2, 3, 224, 224)
preds = model(img) # (2, 1000)
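The BotNet example sets `rel_pos_emb = True`. With this setting, the attention logits gain a term that depends only on the relative offset between query and key positions. The paper uses 2D relative position logits built from separate height and width embeddings. The sketch below is a minimal pure-Python version of that idea. It assumes one table per axis with `2 * fmap_size - 1` entries and computes `q_i . (rel_h[dy] + rel_w[dx])`. It is an illustration of the technique, not the library's implementation, and the function names are hypothetical.

```python
import random


def relative_indices(fmap_size):
    """For each (query, key) pair in a flattened fmap_size x fmap_size map, return
    (row, col) indices into per-axis tables of 2 * fmap_size - 1 embeddings.
    An offset of 0 maps to index fmap_size - 1."""
    positions = [(y, x) for y in range(fmap_size) for x in range(fmap_size)]
    shift = fmap_size - 1
    return [[(ky - qy + shift, kx - qx + shift) for ky, kx in positions]
            for qy, qx in positions]


def relative_logits(q, rel_h, rel_w, fmap_size):
    """Position-dependent part of the attention logits: q_i . (rel_h[dy] + rel_w[dx]).

    q: H*W query vectors of length d; rel_h, rel_w: (2 * fmap_size - 1) x d tables."""
    logits = []
    for qi, row in zip(q, relative_indices(fmap_size)):
        logits.append([sum(a * (h + w) for a, h, w in zip(qi, rel_h[dy], rel_w[dx]))
                       for dy, dx in row])
    return logits


random.seed(0)
n, d = 3, 4
rel_h = [[random.gauss(0, 1) for _ in range(d)] for _ in range(2 * n - 1)]
rel_w = [[random.gauss(0, 1) for _ in range(d)] for _ in range(2 * n - 1)]
q = [[1.0] * d for _ in range(n * n)]  # identical queries, to isolate the positional term
logits = relative_logits(q, rel_h, rel_w, n)
# (0,0)->(0,1) and (1,1)->(1,2) share the offset (0, +1), so their logits match
print(logits[0][1] == logits[4][5])  # True
```

Because the term depends only on `(dy, dx)`, the same small learned tables are shared by every position in the feature map.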

Citations

@misc{srinivas2021bottleneck,
    title         = {Bottleneck Transformers for Visual Recognition},
    author        = {Aravind Srinivas and Tsung-Yi Lin and Niki Parmar and Jonathon Shlens and Pieter Abbeel and Ashish Vaswani},
    year          = {2021},
    eprint        = {2101.11605},
    archivePrefix = {arXiv},
    primaryClass  = {cs.CV}
}
