BIGBALLON / Cifar 10 Cnn


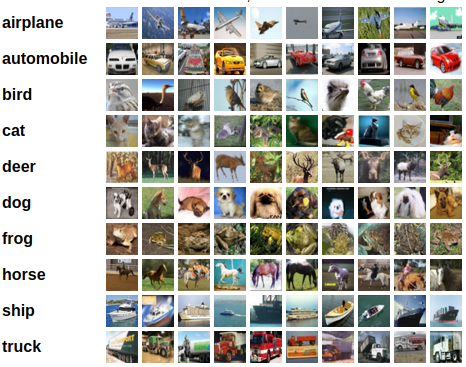

Convolutional Neural Networks for CIFAR-10

This repository contains implementations of several CNN architectures for CIFAR-10.

I use Keras and TensorFlow to implement all of these CNN models.

(Maybe a Torch/PyTorch version if I have time.)

A PyTorch version is available at CIFAR-ZOO.

Requirements

- Python (3.5)

- keras (>= 2.1.5)

- tensorflow-gpu (>= 1.4.1)

Architectures and papers

- The first CNN model: LeNet
- Network in Network
- Vgg19 Network
  - Very Deep Convolutional Networks for Large-Scale Image Recognition
  - 1st place in the ILSVRC 2014 localization task
  - 2nd place in the ILSVRC 2014 classification task
- Residual Network
  - Deep Residual Learning for Image Recognition
  - Identity Mappings in Deep Residual Networks
  - CVPR 2016 Best Paper Award
  - 1st place in all five main tracks:
    - ILSVRC 2015 Classification: "Ultra-deep" 152-layer nets
    - ILSVRC 2015 Detection: 16% better than 2nd
    - ILSVRC 2015 Localization: 27% better than 2nd
    - COCO Detection: 11% better than 2nd
    - COCO Segmentation: 12% better than 2nd
- Wide Residual Network
- ResNeXt
- DenseNet
  - Densely Connected Convolutional Networks
  - CVPR 2017 Best Paper Award
- SENet
  - Squeeze-and-Excitation Networks
  - 1st place in the ILSVRC 2017 classification task
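As a rough illustration of the last architecture in this list, the squeeze-and-excitation (SE) operation can be sketched in NumPy for a single feature map of shape (H, W, C): global average pooling ("squeeze"), a two-layer fully connected bottleneck with ReLU and sigmoid ("excitation"), then channel-wise rescaling of the input. This is a forward-pass sketch only; the function and weight names are hypothetical, and it is not the Keras code used in this repository.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def squeeze_excite(x, w1, b1, w2, b2):
    """Apply an SE block to a feature map x of shape (H, W, C).

    w1 has shape (C, C // r) and w2 has shape (C // r, C),
    where r is the reduction ratio of the bottleneck.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    s = x.mean(axis=(0, 1))          # shape (C,)
    # Excitation: FC -> ReLU -> FC -> sigmoid gives channel weights in (0, 1).
    z = relu(s @ w1 + b1)            # shape (C // r,)
    e = sigmoid(z @ w2 + b2)         # shape (C,)
    # Scale: reweight every channel of the input (broadcast over H and W).
    return x * e


# Example: a random 8x8 feature map with 16 channels and reduction ratio 4.
rng = np.random.default_rng(0)
C, r = 16, 4
fmap = rng.standard_normal((8, 8, C))
out = squeeze_excite(
    fmap,
    rng.standard_normal((C, C // r)), np.zeros(C // r),
    rng.standard_normal((C // r, C)), np.zeros(C),
)
```

The block never changes the spatial shape; it only learns how much each channel should contribute, which is why it can be dropped into ResNeXt blocks to form SENet(ResNeXt-4x64d).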

Documents & tutorials

Some documents and tutorials are also available in doc and issues/3; take a look if you need them.

If you read Chinese, you can also see the articles.

Accuracy of all my implementations

In particular:

- Change the batch size to fit your GPU's memory.
- Modifying the learning rate schedule may improve accuracy.

| network | GPU | params | batch size | epochs | training time | accuracy (%) |
|---|---|---|---|---|---|---|
| Lecun-Network | GTX1080TI | 62k | 128 | 200 | 30 min | 76.23 |
| Network-in-Network | GTX1080TI | 0.97M | 128 | 200 | 1 h 40 min | 91.63 |
| Vgg19-Network | GTX1080TI | 39M | 128 | 200 | 1 h 53 min | 93.53 |
| Residual-Network20 | GTX1080TI | 0.27M | 128 | 200 | 44 min | 91.82 |
| Residual-Network32 | GTX1080TI | 0.47M | 128 | 200 | 1 h 7 min | 92.68 |
| Residual-Network110 | GTX1080TI | 1.7M | 128 | 200 | 3 h 38 min | 93.93 |
| Wide-resnet 16x8 | GTX1080TI | 11.3M | 128 | 200 | 4 h 55 min | 95.13 |
| Wide-resnet 28x10 | GTX1080TI | 36.5M | 128 | 200 | 10 h 22 min | 95.78 |
| DenseNet-100x12 | GTX1080TI | 0.85M | 64 | 250 | 17 h 20 min | 94.91 |
| DenseNet-100x24 | GTX1080TI | 3.3M | 64 | 250 | 22 h 27 min | 95.30 |
| DenseNet-160x24 | 1080 x 2 | 7.5M | 64 | 250 | 50 h 20 min | 95.90 |
| ResNeXt-4x64d | GTX1080TI | 20M | 120 | 250 | 21 h 3 min | 95.19 |
| SENet(ResNeXt-4x64d) | GTX1080TI | 20M | 120 | 250 | 21 h 57 min | 95.60 |
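The numbers in several network names encode depth and width. The sketch below assumes the standard CIFAR conventions from the ResNet and Wide ResNet papers: a CIFAR ResNet of depth 6n + 2 has three stages of n basic blocks (so Residual-Network20/32/110 give n = 3/5/18), and WRN-d-k (written d x k in the table) has depth 6n + 4 with its stage widths multiplied by k. The helper names are hypothetical.

```python
def resnet_blocks_per_stage(depth):
    """CIFAR ResNet: depth = 6n + 2
    (first conv + 3 stages x n blocks x 2 convs + final dense layer)."""
    if depth < 8 or (depth - 2) % 6 != 0:
        raise ValueError("CIFAR ResNet depth must be 6n + 2, e.g. 20, 32, 110")
    return (depth - 2) // 6


def wrn_blocks_per_stage(depth):
    """Wide ResNet WRN-d-k: depth = 6n + 4, independent of the widening factor k."""
    if depth < 10 or (depth - 4) % 6 != 0:
        raise ValueError("WRN depth must be 6n + 4, e.g. 16, 28")
    return (depth - 4) // 6


def wrn_stage_widths(k):
    """Channel widths: the first conv keeps 16, the three stages use 16k, 32k, 64k."""
    return [16, 16 * k, 32 * k, 64 * k]


for depth in (20, 32, 110):
    print("ResNet-%d: %d blocks per stage" % (depth, resnet_blocks_per_stage(depth)))
for depth, k in ((16, 8), (28, 10)):
    print("WRN-%d-%d: %d blocks per stage, widths %s"
          % (depth, k, wrn_blocks_per_stage(depth), wrn_stage_widths(k)))
```

This also explains why the wide networks have far more parameters at lower depth: convolution weights grow with the square of the channel width, so k = 8 or 10 dominates the count.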

About LeNet and CNN training tips/tricks

LeNet, proposed by LeCun, is the first CNN model.
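The 62k parameter count listed for Lecun-Network in the accuracy table can be checked by hand. The sketch below assumes a classic LeNet-5 style layout adapted to 32x32x3 CIFAR-10 input (two 5x5 "valid" convolutions with 6 and 16 filters, each followed by 2x2 max pooling, then dense layers of 120, 84 and 10 units); that layout is my assumption, not read from LeNet_keras.py.

```python
def conv_params(kernel, c_in, c_out):
    # kernel * kernel * c_in weights per filter, plus one bias per filter
    return kernel * kernel * c_in * c_out + c_out


def dense_params(n_in, n_out):
    # full weight matrix plus one bias per output unit
    return n_in * n_out + n_out


# Assumed shapes: 32x32x3 -> conv5x5 -> 28x28x6 -> pool -> 14x14x6
#                 -> conv5x5 -> 10x10x16 -> pool -> 5x5x16 -> flatten 400
layers = [
    conv_params(5, 3, 6),            # conv1
    conv_params(5, 6, 16),           # conv2
    dense_params(5 * 5 * 16, 120),   # fc1
    dense_params(120, 84),           # fc2
    dense_params(84, 10),            # output layer
]
total = sum(layers)
print(layers, total)  # [456, 2416, 48120, 10164, 850] 62006
```

Pooling layers add no parameters, and most of the roughly 62k weights sit in the first dense layer.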

I used different CNN training tricks to show you how to train your model effectively:

- LeNet_keras.py is the LeNet baseline.
- LeNet_dp_keras.py uses Data Preprocessing [DP].
- LeNet_dp_da_keras.py uses both DP and Data Augmentation [DA].
- LeNet_dp_da_wd_keras.py uses DP, DA, and Weight Decay [WD].

| network | GPU | DP | DA | WD | training time | accuracy (%) |
|---|---|---|---|---|---|---|
| LeNet_keras | GTX1080TI | - | - | - | 5 min | 58.48 |
| LeNet_dp_keras | GTX1080TI | √ | - | - | 5 min | 60.41 |
| LeNet_dp_da_keras | GTX1080TI | √ | √ | - | 26 min | 75.06 |
| LeNet_dp_da_wd_keras | GTX1080TI | √ | √ | √ | 26 min | 76.23 |
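The three tricks in this table can be sketched in NumPy. The specific choices here are assumptions: DP as per-channel standardization with training-set mean and standard deviation, DA as 4-pixel zero padding followed by a random 32x32 crop and a random horizontal flip, and WD as an L2 penalty of the form wd * sum(w ** 2), which is the form `keras.regularizers.l2` adds to the loss. Function names and the wd value are hypothetical; the LeNet scripts may differ in details.

```python
import numpy as np


def normalize_per_channel(train, test):
    """DP: standardize each color channel using training-set statistics only."""
    mean = train.mean(axis=(0, 1, 2))
    std = train.std(axis=(0, 1, 2))
    return (train - mean) / std, (test - mean) / std


def random_crop_flip(img, rng, pad=4):
    """DA: zero-pad an (H, W, C) image, crop back to (H, W) at a random
    offset, then flip it horizontally with probability 0.5."""
    h, w, _ = img.shape
    padded = np.pad(img, ((pad, pad), (pad, pad), (0, 0)), mode="constant")
    top = rng.integers(0, 2 * pad + 1)
    left = rng.integers(0, 2 * pad + 1)
    out = padded[top:top + h, left:left + w]
    if rng.random() < 0.5:
        out = out[:, ::-1]
    return out


def l2_penalty(weights, wd=1e-4):
    """WD: extra loss term wd * sum of squared weights over all kernels."""
    return wd * sum(float(np.sum(w ** 2)) for w in weights)


# Example with random stand-in data shaped like CIFAR-10 images.
rng = np.random.default_rng(0)
x_train = rng.uniform(0, 255, size=(50, 32, 32, 3))
x_test = rng.uniform(0, 255, size=(10, 32, 32, 3))
x_train_n, x_test_n = normalize_per_channel(x_train, x_test)
augmented = random_crop_flip(x_train_n[0], rng)
```

The test set is normalized with the training statistics so that no information from it leaks into preprocessing, and augmentation is applied to training images only.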

For more CNN training tricks, see Must Know Tips/Tricks in Deep Neural Networks (by Xiu-Shen Wei)

About Learning Rate schedule

Different learning rate schedules may lead to different training/testing accuracy.

See ./htd and HTD for more details.
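Two common schedule shapes can be written as plain Python functions of the epoch index: a step schedule and a hyperbolic-tangent decay. I am assuming HTD here means hyperbolic-tangent decay; the formula lr = base_lr / 2 * (1 - tanh(L + (U - L) * t / T)) with L = -6 and U = 3 is my reading of it, not code taken from ./htd. The step boundaries (81, 122) and base_lr = 0.1 are illustrative assumptions as well.

```python
import math


def step_decay(epoch, *, base_lr=0.1, boundaries=(81, 122), factor=0.1):
    """Step schedule: multiply the learning rate by `factor` at each boundary epoch."""
    lr = base_lr
    for boundary in boundaries:
        if epoch >= boundary:
            lr *= factor
    return lr


def tanh_decay(epoch, *, total_epochs=200, base_lr=0.1, lower=-6.0, upper=3.0):
    """Hyperbolic-tangent decay: starts near base_lr and decays smoothly toward 0."""
    progress = epoch / total_epochs
    return base_lr / 2.0 * (1.0 - math.tanh(lower + (upper - lower) * progress))


for epoch in (0, 81, 122, 199):
    print(epoch, step_decay(epoch), round(tanh_decay(epoch), 5))
```

Either function can be handed to Keras as `keras.callbacks.LearningRateScheduler(step_decay)` in the `callbacks` list of `model.fit`. The hyperparameters are keyword-only so that a Keras version which calls the schedule as `schedule(epoch, lr)` cannot silently bind the current learning rate to `base_lr`.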

About Multiple GPUs Training

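Recent versions of Keras provide keras.utils.multi_gpu_model, so you can train your model with multiple GPUs using the following code:

```python
import numpy as np
import tensorflow as tf
from keras.applications.resnet50 import ResNet50
from keras.utils import multi_gpu_model

# Build the template model on the CPU so its weights live in host memory.
with tf.device('/cpu:0'):
    model = ResNet50()

# Replicates `model` on 8 GPUs.
parallel_model = multi_gpu_model(model, gpus=8)
parallel_model.compile(loss='categorical_crossentropy', optimizer='adam')

# Dummy data standing in for a real dataset: ResNet50 expects
# 224x224x3 images and 1000-class one-hot labels.
x = np.random.random((256, 224, 224, 3)).astype('float32')
y = np.eye(1000, dtype='float32')[np.random.randint(0, 1000, size=256)]

# This `fit` call will be distributed on 8 GPUs.
# Since the batch size is 256, each GPU will process 32 samples.
parallel_model.fit(x, y, epochs=20, batch_size=256)
```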
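The "32 samples per GPU" figure is the global batch size divided by the number of replicas. The helper below is a hypothetical sketch of that arithmetic; my understanding is that multi_gpu_model gives each GPU batch_size // gpus samples and hands any remainder to the last GPU, but treat that detail as an assumption rather than documented behavior.

```python
def split_batch(batch_size, gpus):
    """Per-GPU slice sizes: equal slices, with any remainder on the last GPU."""
    if gpus < 1 or batch_size < gpus:
        raise ValueError("need at least one sample per GPU")
    step = batch_size // gpus
    sizes = [step] * (gpus - 1)
    sizes.append(batch_size - step * (gpus - 1))
    return sizes


print(split_batch(256, 8))  # [32, 32, 32, 32, 32, 32, 32, 32]
print(split_batch(250, 8))  # [31, 31, 31, 31, 31, 31, 31, 33]
```

Choosing a global batch size divisible by the number of GPUs keeps every replica equally loaded.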

About ResNeXt & DenseNet

Since I don't have enough machines to train the larger networks, I only trained the smallest networks described in the papers. For results on the larger networks, see liuzhuang13/DenseNet and prlz77/ResNeXt.pytorch.

Please feel free to contact me if you have any questions!

Citation

```
@misc{bigballon2017cifar10cnn,
  author = {Wei Li},
  title = {cifar-10-cnn: Play deep learning with CIFAR datasets},
  howpublished = {\url{https://github.com/BIGBALLON/cifar-10-cnn}},
  year = {2017}
}
```